Background: I ran a small survey of attendees at EAG San Fran to crowdsource criticisms of EA and wrote a post where I tried to synthesise the complaints/suggestions into concrete policy suggestions.

Comment survey results

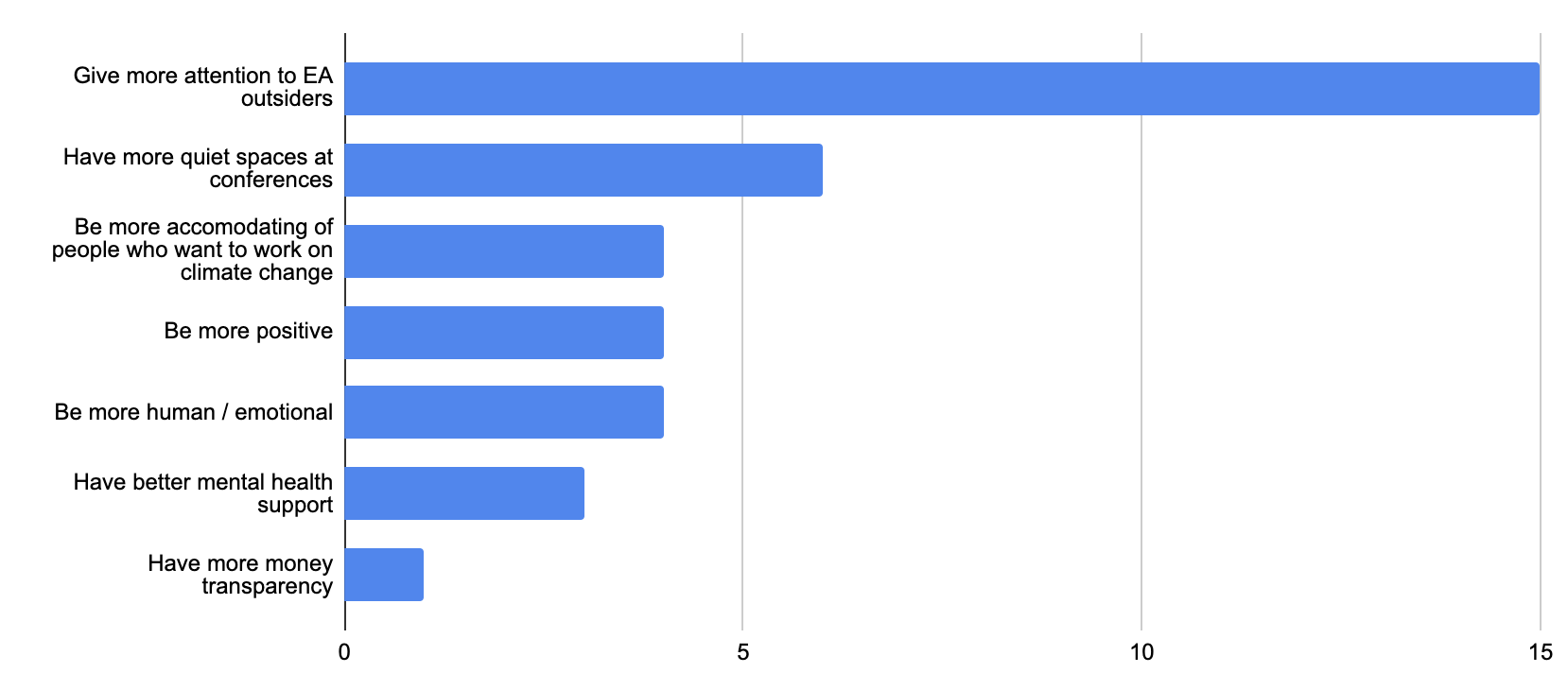

In the post I ran another survey as a crude measurement of how many people agreed with the suggestions. I left one comment for each suggestion and asked people to upvote comments they agreed with. 40 upvotes or downvotes were made, and here are the final karma counts

Karma doesn't map one-to-one onto "number of people who agree with this" because:

- People can and did downvote

- You can upvote or downvote with more than one karma

- Some people have more voting power than others

But despite these caveats, there's a clear winner of the survey: give more attention to EA outsiders.

The next section of this post is a copy of the "give more attention..." section from my original post. I don't know which (if any) parts of the section voters were agreeing with, but clearly there's something in this area worth exploring.

Survey winner: Give more attention to EA outsiders

[This section is copied from the original survey post]

Some extracts from the critiques I heard at EAG:

- Jargon

- Inaccessible to newbies

- Couldn't see a bridge from their current work to something more impactful

- Too centralised

- If you're not willing or able to pack up your life and move ... you're always going to be an outsider

- Clique-y

- EA elites

It's like there's an island where all the "EA elites" or "EA insiders" hang out and talk to each other. They accumulate jargon, idiosyncratic beliefs, idiosyncratic values, and inside jokes about potatoes. Over time, the EA island drifts further and further out to sea, so it becomes harder and harder for people to reach from the mainland.

The island is a metaphor for EA spaces like conferences, retreats, forums, and meetups. Spaces designed by, and arguably for, EA insiders. The mainland is a metaphor for mainstream culture, where the newbies and outsiders live.

This dynamic leads to stagnation, cult vibes, and monoculture fragility.

I think the general strategy for combatting this trend is to redistribute attention in EA spaces from insiders at the frontier of doing good to everyone on a journey of doing more good.

This way, when Nancy Newbie hangs out in EA spaces, she doesn't just see an inaccessible island of superstar do-gooders. She sees an entire peninsula which bridges her current position all the way to the frontier. She can see both a first step to improve do-gooding on her current margin, and a distant ideal to aspire to.

Another way of thinking about it: here are two possible messages EA could be sending out into the world:

- "We've figured out what the most important problems to solve are. You should come here and help us solve them."

- "We've figured out some tools for doing good better. What kind of good do you want to do and how can we help you do more of it?"

EA tends to send out the first message, but the second message is much more inviting and kind, and I would guess it actually does more good in the long run.

Why does EA send out the first message? Legacies from academia? From industry? This is just how human projects work by default?

Concrete ideas:

- Keynotes should be less like "random person interviews Will MacAskill" and more like "Will MacAskill interviews random person". Or better yet, "Will MacAskill chats with a diverse sample of conference attendees about how EA can help them achieve more good in the world". I would guess that all parties would find this a more enlightening exercise.

- The 80,000 Hours Podcast should have interviews with a random sample of people they've given career advice to, rather than just people who have had successful careers. This would give 80,000 Hours higher-fidelity feedback than they get by doing surveys, it would give listeners a more realistic sense of what to expect from being an ambitious altruist, and it would let everyone learn from failures rather than just successes.

- More people should do what I'm doing here: going out and talking to "the people". Give the plebs a platform to make requests and voice concerns. "The people" can be conference attendees, forum users, Facebook group members, university club members, or even people who have never interacted with EA before but are interested in doing good.

- Do more user testing. Get random people off the street to read EA articles, submit grant requests, or post something on the EA Forum. Take note of what they find confusing or frustrating.

- Add a couple lines to the EA Forum codebase so that whenever anyone uses jargon like "on the margin" or "counterfactual", a link to a good explanation of the term is automatically inserted.

- Run surveys to find out what people at different levels of engagement actually value, know, and believe. Make EA spaces more accommodating to these.
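
To make the jargon-linking idea from the list above a bit more concrete, here is a rough sketch of what it might look like. I haven't looked at how the EA Forum codebase actually stores or renders posts, so everything here is an assumption: the `linkJargon` function, the `GLOSSARY` table, and the placeholder URLs are all hypothetical, and the sketch works on plain text and emits Markdown links rather than hooking into any real editor.

```typescript
// Hypothetical glossary: the terms come from this post, the URLs are placeholders.
const GLOSSARY: Record<string, string> = {
  "on the margin": "https://example.org/glossary/on-the-margin",
  "counterfactual": "https://example.org/glossary/counterfactual",
};

// Escape characters that have a special meaning inside a regular expression.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Link the first occurrence of each glossary term in a plain-text string.
// Matching is case-insensitive and limited to whole words or phrases.
function linkJargon(
  text: string,
  glossary: Record<string, string> = GLOSSARY
): string {
  // Lowercase the keys so lookups agree with the case-insensitive match.
  const lookup = new Map<string, string>();
  for (const [term, url] of Object.entries(glossary)) {
    lookup.set(term.toLowerCase(), url);
  }
  if (lookup.size === 0) return text;

  // Try longer terms first so a phrase wins over a shorter term inside it.
  const terms = Array.from(lookup.keys()).sort((a, b) => b.length - a.length);
  const pattern = new RegExp(`\\b(${terms.map(escapeRegExp).join("|")})\\b`, "gi");

  const seen = new Set<string>();
  return text.replace(pattern, (match: string) => {
    const key = match.toLowerCase();
    const url = lookup.get(key);
    // Leave repeat mentions alone so the post isn't flooded with links.
    if (url === undefined || seen.has(key)) return match;
    seen.add(key);
    return `[${match}](${url})`;
  });
}

console.log(linkJargon("On the margin, the counterfactual matters."));
```

A real version would need to skip text that is already a link or inside a code block, and would probably want to handle plurals like "counterfactuals", which this sketch deliberately ignores.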

Second place: more quiet space at conferences

The runner-up in the comment survey was more quiet space at conferences. This is a nice, easy, actionable suggestion.

But it's also good calibration for whoever runs the feedback surveys at EAG. Did you pick up that this was something people wanted? On the off chance that you didn't, maybe you should try getting more feedback from open-ended interviews rather than Google Forms.

But I'd also like to reiterate the climate change point

"Be more accommodating of people who want to work on climate change" was tied third in the comment survey, but of all the criticisms I heard, that was the one that stuck out to me the most. It was exactly the kind of thing I had hoped to find by going out and talking to EAs "on the street". It's a piece of EA messaging that's well intentioned but has hard-to-detect negative downstream consequences. So I'm also going to re-publish the climate change section from the original post here:

Accommodate people who want to work on climate change

[This section is copied from the original survey post]

I only talked to 13 people, and yet I heard two reports of this sentiment: "I care about climate change and am put off by how dismissive EA is of it".

If people are dedicated to fighting climate change, then it's better to give them the EA tools so they can fight climate change more effectively than to meet them with a dismissive attitude towards a problem that's deeply important to them and turn them off of EA forever.

If someone enters an EA space looking for ideas on fighting climate change, then there should be resources available for them. You can try nudging them towards something you think is higher impact, but if they aren't interested then don't turn them away.

The EA tribe should at worst be neutral to the Climate Change tribe. But if EA comes across as dismissive to climate change, then there's the potential to make enemies due to mere miscommunication.

[Note: this section has been significantly reworked after Gavin's input.]

Anonymous Criticisms from a Google Form

I also made an anonymous Google Form and asked people to give me more criticisms that they didn't want to publish themselves. I got two responses. One was unexpectedly long and carefully written. I had been planning to simply summarise any criticisms I received, but I don't think that would do justice to this person, who has taken a reasonable amount of time to articulate some strong feelings. The form was anonymous, so I can't ask this respondent if they are ok with me directly publishing their response. I think what makes most sense is for me to simply publish what this person wrote and hope that they're ok with that. If you're not ok with it, then I am sorry. I should've clarified in the form whether I was going to publish these criticisms or not.

The first criticism discusses:

- need for greater diversity

- how condescending messages to people who are productively working on non-EA issues turn them off of EA

- EA is too cult-y (or may become too cult-y)

And the second criticism discusses:

- cliquishness

- a desire for more diverse activities and interests to be showcased in EA spaces

Anonymous criticism 1

[The content of this section was not written by me; it was written by the anonymous critic. I have added emphasis to what seemed to me like key points.]

I believe that EA has a series of issues that do and will continue to detract from its ability to maximize well-being using reason and evidence. The first is related to the fairly homogeneous nature of the space in terms of its demographics. EA seems strongly focused on recruiting well-off, highly educated members who are often focused on abstract and frequently neglected political issues on which many others in society do not necessarily have the privilege of focusing. Unless we want to fully endorse technocracy, which seems like a dangerous path that has in some ways created the very problems we are focused on mitigating, we need broader, more democratic engagement in figuring out which problems could be focused on, how we should prioritize, and how we should go about solving these problems. The sad reality is that a cadre of wealthy, white, Oxonian and San Franciscan men is not going to have full insight into the problems of the world nor the best ways to solve them, and if we lean too heavily on this kind of group, we will risk not identifying large but often unidentified problems that actually do affect many other people and minimizing the size of our coalition. I am by no means advocating for identity politics here; I believe that anyone of any social identity can understand and work to mitigate problems faced by any other group with enough engagement and good-faith work. But what I am saying is that not actually engaging with different groups will deprive EA of the ability to fully consider all relevant possible problems and will reduce the collective intelligence and political influence of the group.

To continue my point above, I also tend to believe that "interest" as part of the ITN framework is often itself neglected, and we need to focus much more on this component. This is important as certain groups might be most interested in eliminating some problem that is particularly relevant to them and could benefit from principles and tools from EA. For example, it might be the case that African Americans are particularly interested in eliminating police brutality in the U.S., even if this issue only affects a small number of people each year relative to issues like heart disease or malaria. If this is true, then it would be offensive to claim that African American activists should abandon their work on stopping police violence to focus on mitigating AI risk. While no one explicitly makes this claim, having "top cause areas" certainly implies it. Just because a certain group isn't working on what CEA or some other EA authority deems as the most important problem, it seems like there are ways to partner with them instead of shunning them as profligate. Other movements could certainly make use of EA frameworks (e.g., there are probably much more effective ways to eliminate police brutality that should be invested in first), and this could help build partnerships and coalitions as well as use existing tools and frameworks to more rapidly make societal progress. This could also have the added benefit of mitigating other high-profile societal issues, leaving people more time, energy, attention, and resources to focus on some of the more abstract and neglected problems. (To be clear, I am not refuting the inclusion of neglectedness in the ITN framework but am rather saying that there are ways of engaging with those who are currently working on various issues in productive ways and that interest as an additional criterion should be further emphasized. I believe this probably already happens to some extent but should be formally accepted and encouraged.)

The second main issue is the cult-like nature of much of EA. While the discussion of whether EA is some kind of odd, secular cult has been had multiple times, there are at least elements that certainly appear cultish and likely take away more from the movement than they contribute. Various features of religions and often cults such as (a) demands on substantial fractions of income; (b) pressures (implicit or explicit) to romantically or platonically engage with other EAs; (c) the nature of EA communities where many EAs live or even work together; (d) control of diets and other lifestyle choices; (e) ritualistic practices ranging from attending conferences to making forum posts; (f) the presence of EA celebrities who are virtually worshipped; (g) an obsession with doomsday scenarios of existential and catastrophic risk; (h) a large focus on spreading or "proselytizing" the movement; (i) reinforcing the notion that extremely important ends justify odd means that may be crazy to most; (j) implicit and possibly explicit use of shame to control the actions of members; and (k) the totalizing nature of the movement all contribute to making the movement off-putting to some, particularly the more educated, secularly minded individuals who are often the target of EA recruiting. While there are generally good arguments for most of these features of the movement and many might be features of any serious social movement, becoming a manipulative, quasi-religious movement is probably easier than it seems. There might even be a drift toward this kind of structure over time, which would not only undermine EA's recruiting goals but also destroy the movement's ability to be flexible and transform and criticize itself. It may also lead to loss of key members or even some becoming enemies of EA given its theoretically dogmatic nature. 
There are probably a series of controls that could be installed to avoid this future and to avoid drifting toward or giving the impression of being a religion or cult.

Anonymous criticism 2

[The content of this section was not written by me; it was written by an anonymous critic.]

This won't be a big critique or anything, but I feel like EA can often be super—sometimes to a fault—close knit and cliquey. Like, I love that many of my friends have been made through EA, but feeling close to a lot of other people who are also your colleagues can lead to a lot of messiness. Especially since a lot of EA consists of young people who may not have a lot of experience navigating complex personal relationships.

Maybe we could encourage people to have hobbies outside of EA and that it's OK to view EA as a professional space and not your core social community.

As a sidenote, I'd love if EA social events/conferences could include like Musicians of EA, Sports fans of EA, and so on. Sometimes I really don't want to talk about QALYs, AI, or factory farming.

More Criticisms Please

More data is still useful!

If you have anything to add to something discussed here, please leave a comment, message me, or enter something into this anonymous form.

If you have any additional criticisms which you don't expect to write up yourself, please leave a comment, message me, or enter something into this anonymous form.

Please continue voting on the original post and filling out the anonymous criticism form.

I'm also interested in hearing feedback on this entire project. If anyone important finds this information useful (or would find something adjacent useful) please get in touch and we can discuss future work like this.