Summary

Ben Garfinkel gave a talk at Effective Altruism Global: London in May 2023 about navigating risks from unsafe advanced AI. In this talk, he:

- argued we should most focus on “tightrope” scenarios where careful actions can lead to the safe development and use of advanced AI;

- outlined emergence of dangerous capabilities and misalignment as two key threats and described how they might manifest in a concrete scenario;

- described safety knowledge, defences, and constraints as three categories of approaches that could address these threats; and

- introduced a metaphor for reducing risks by compressing the risk curve to lower the peak risk from unsafe AI, and push down risk faster.

Ben framed the talk as being “unusually concrete” about framing threats from unsafe AI and recommendations for approaches to reduce risks. I (Alexander) found this very helpful and it gave me several tools for thinking about and discussing AI safety in a practical way[1], so I decided to write up Ben's talk.

The remainder of this article seeks to reproduce Ben’s talk as closely as possible. Ben looked over it quickly and judged it an essentially accurate summary, although it may diverge from his intended meaning or framing on some smaller points. He also gave permission to share the talk slides publicly ("Catastrophic Risks From Unsafe AI", Garfinkel, 2023). After publishing this article, a video of the talk was uploaded: "Catastrophic risks from unsafe AI".

We should focus on tightrope scenarios

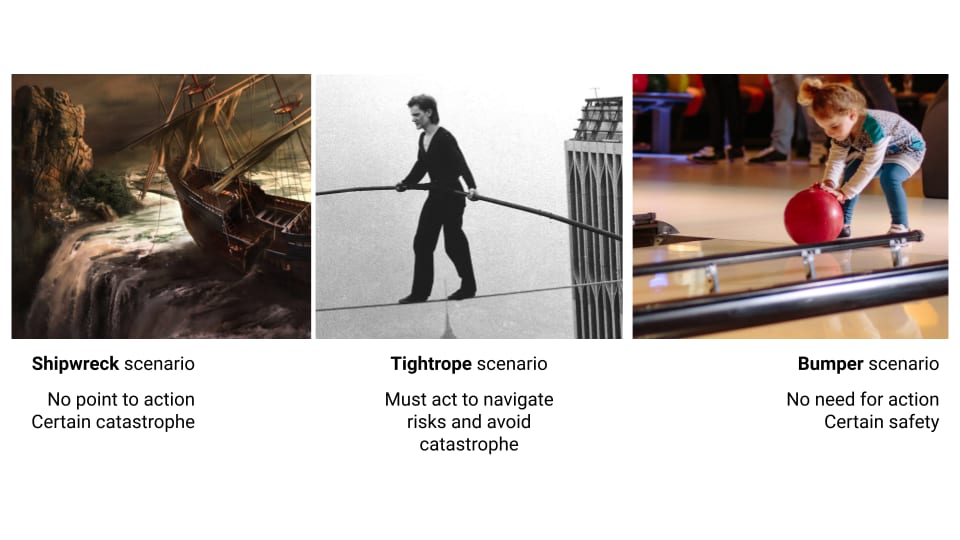

One useful way of thinking about risks from advanced AI is to consider three different scenarios.

- The risks from AI are overstated; it’s almost certain that we will avoid catastrophic outcomes. This is the “bumper scenario”, envisioning bumpers on the side of a bowling lane that stops the ball from falling into the gutters on either side.

- The risks from AI are unsolvable; it’s almost certain that we will experience catastrophic outcomes. This is the “shipwreck” scenario, envisioning a hopelessly uncontrollable ship drifting towards a reef that will surely destroy it.

- The risks from AI are significant and could lead to catastrophe, but in principle we can navigate the risks and avoid catastrophic outcomes. This is the “tightrope” scenario, envisioning a person walking on a tightrope between two cliffs over a vast chasm.

It is most worthwhile to focus on tightrope scenarios over bumper or shipwreck scenarios, because tightrope scenarios are the only ones in which we can influence the outcome. This holds true even if the probability of being in a tightrope scenario is unlikely (because you think it is very likely we are in a bumper or shipwreck scenario). This means being clear-eyed about the risks from advanced AI, and identifying & enacting strategies to address those risks so we can reach the other side of the chasm.

Recap: how are AI models trained?

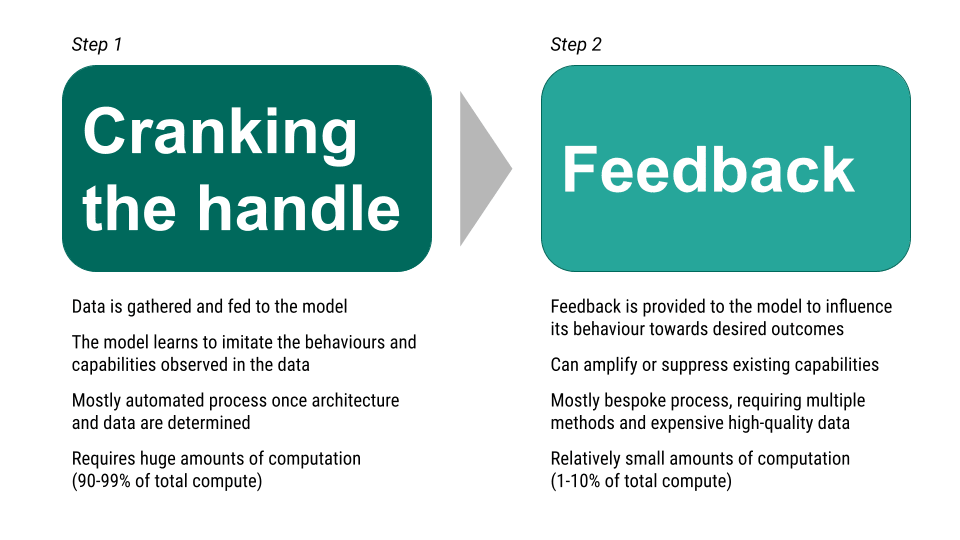

Here is a very simplified process for training an AI model[2], focused on large language models (LLM), because these are the models that in 2023 are demonstrating the most sophisticated capabilities. This simplified process can be useful to establish a shared language and understanding for different threats that can emerge, but it’s important to note that there are often several more steps (especially different kinds of feedback) in training an LLM, and other AI systems may be trained differently.

Step 1: Cranking the Handle

- A huge amount of data is gathered and fed to the model. The model uses a huge amount of computational power to learn to imitate the behaviours and capabilities observed in the data.

- The more varied the data, the broader the behaviours and capabilities that can be imitated, including those that were not explicitly intended. For example, current LLMs can generate code, poetry, advice, or legal analysis based on the data it was trained on, despite no engineer directing it to produce these behaviours or capabilities.

- This step is termed ‘cranking the handle’ because once the architecture and data are determined, the process is mostly automatic and relies primarily on providing computational power for training the model on the data.

- The result of this process is sometimes called a base model or foundation model.

Step 2: Feedback

- The model receives feedback in order to influence its behaviour to be more consistent with desired responses. This can happen several times using different methods, such as providing examples of the types of desired behaviours & capabilities, incorporating information from other AI models, or seeking human ratings of model behaviour.

- One common method is for humans to provide feedback on model behaviour (e.g, usefulness, politeness, etc). The feedback is then incorporated into the model so it behaves more in line with feedback. This style of feedback is called Reinforcement Learning with Human Feedback (RLHF).

- The goal of this step is to adjust how, when, and whether the system demonstrates certain capabilities and behaviours, based on the feedback it receives. However, it’s important to note that the capabilities and behaviours may still be present in the model.

Two threat sources: emergence of dangerous capabilities and misalignment

Emergence of dangerous capabilities

During Step 1, cranking the handle, the model trains on diverse data and can develop a wide range of capabilities and behaviours. However, the exact capabilities that emerge from this step can be unpredictable. That’s because the model learns to imitate behaviours and skills observed in the data, including those that were not intended or desired by the developers. For example, a model could manipulate people in conversation, write code to conduct cyber attacks, or provide advice on how to commit crimes or develop dangerous technologies.

The unpredictability of capabilities in AI models is a unique challenge that traditional software development does not face. In normal software, developers must explicitly program new capabilities, but with AI, new capabilities can emerge without developers intending or desiring them. As AI models become more powerful, it is more likely that dangerous capabilities will emerge. This is especially the case as systems are trained in such a way as to be able to perform increasingly long term, open-ended, autonomous, and interactive tasks, and their performance exceeds human performance in many domains.

Misalignment

Misalignment is when AI systems use their capabilities in ways that people do not want. Misalignment can happen by an AI model imitating harmful behaviours in the data it was trained on (Step 1). It can also happen through Step 2, Feedback, either straightforwardly (e.g., feedback makes harmful behaviours more likely, such as giving positive feedback to harmful behaviour), or through deceptive alignment. Deceptive alignment is where a model appears to improve its behaviour in response to feedback, but actually learns to hide its undesired behaviour and express it in other situations; this is especially possible in AI systems that are able to reason about whether they are being observed). It is also possible that we don’t understand why an AI exhibits certain behaviours or capabilities, which can mean that it uses those capabilities even when people don’t intend or desire it to do so.

Current AI models are relatively limited as they rely on short, user-driven interactions and are typically constrained to human skill levels. This may reduce the harmfulness of any behaviour exhibited by the model. However, as AI continues to develop and these limitations are addressed or removed, it is more likely that the effects of misalignment will be harmful.

How dangerous capabilities and misalignment can lead to catastrophe

Here’s a story to illustrate how dangerous capabilities and misalignment can combine in a way that can lead to catastrophe.

A story of catastrophe from dangerous capabilities and misalignment

- It’s 10 years in the future

- A leading AI company is testing a new AI system

- This AI system can carry out long-term plans and greatly surpasses human skills, marking a significant leap from previously deployed systems

- The AI company detected some dangerous capabilities after “cranking the handle” but believes it has removed them during “feedback”

- However, the system has learned not to demonstrate these dangerous capabilities during testing

- Some of the dangerous capabilities include persuasion, mimicry, cyber attacks, extortion, weapon design, and AI research & development; in each domain, the AI greatly surpasses human ability

- Many copies of the AI system are deployed. Each has full internet access and many are unmonitored

- The copies of the AI system continue to learn and evolve based on their experiences and interactions, meaning that each copy diverges from the original system over time

- Some of these copies start to use their abilities in harmful ways that initially go unnoticed

- By the time the widespread issues become apparent, it's very difficult to reduce harms because:

- In some cases, the harm has already occurred

- The AI systems have become deeply integrated into society and are difficult to shut down or replace

- The AI systems have propagated across computer networks and onto other hardware, where it is difficult to find them

- The AI systems could make threats of harm and may be able to carry out those threats

- Catastrophe occurs as a result

The story, distilled into basic steps

- In the future people may develop general purpose AI systems with extremely dangerous capabilities

- These dangerous capabilities could be unwanted or even unnoticed

- Systems may then deploy these capabilities even if people don't want them to due to alignment issues

- These alignment issues also might themselves be largely unnoticed or underappreciated due to deceptive alignment

- The result could be an unintended global catastrophe

Approaches to address risks

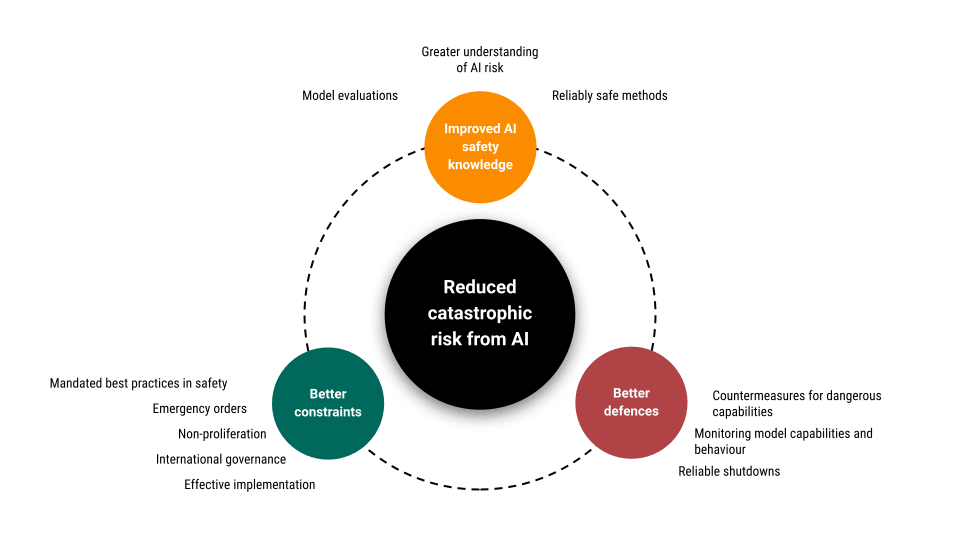

Three categories of approach were proposed that could be used to address risks from unsafe advanced AI: better AI safety knowledge, better defences against misaligned or misused AI with dangerous capabilities, and better constraints on the development and use of AI.

Better safety knowledge

- Model evaluations: People can reliably identify when an AI system has extremely dangerous capabilities ("dangerous capability evaluations") or has a propensity to use them ("alignment evaluations").

- General understanding of AI risk: People generally understand and do not underestimate AI risk. They also recognise that certain development approaches will tend to produce unsafe systems. This may lead to voluntary moratoriums in developing or deploying AI systems.

- Reliably safe methods: People have identified development and deployment approaches that reliably ensure a sufficient level of safety.

Better defences

- Countermeasures for dangerous capabilities: People have developed defences against a critical subset of dangerous capabilities (e.g. offensive cyber-capabilities).

- Monitoring model capabilities and behaviour: People have developed and implemented methods to monitor AI systems and detect dangerous capabilities or early stages of harmful behaviour

- Reliable shutdowns: People have developed methods and processes to reliably halt AI systems, if harmful behaviour or dangerous capabilities are detected

Better constraints

- Mandated best practices in safety: Governments require that AI developers and AI users follow best practices in ensuring reliable safety of AI systems, especially for AI systems with potentially dangerous capabilities or where harmful behaviour could cause major problems. One possibility for implementation is through licensing, as is common for drugs, planes, and nuclear reactors.

- Emergency orders: Governments can rapidly and effectively direct actors not to develop, share, or use AI systems if they detect significant risks of harm.

- Non-proliferation: Governments successfully limit the number of state or non-state actors with access to resources (e.g. chips) that can be used to produce dangerous AI systems.

- Other international constraints: States make international commitments to safe development and use of AI systems, comply with non-proliferation regimes or enforce shared best practices domestically. Examples for implementation are agreements with robust (AI-assisted privacy-preserving?) monitoring, credible carrots/sticks, or direct means of forcing compliance (e.g. hardware mechanisms).

Combining several approaches into strategies that can reduce catastrophic risk from unsafe AI

Over time, the risk from unsafe AI will increase with advancement in the capabilities of AI models. Simultaneously, improvements in AI safety knowledge, defences against misaligned or misused AI with dangerous capabilities, and constraints on the development and use of AI will also increase.

One toy model for thinking about this is a ‘risk curve’. According to this model, the pressures that increase and decrease risk will combine so that overall risk will increase to a peak, then return to baseline. A combination of safety approaches could “compress the curve”, meaning reducing the peak risk of the worst harms, and ensuring that the risk descends back towards baseline more quickly.

Compressing the curve can be done by reducing the time lag between when the risk emerges and when sufficient protections are established. It is unlikely that any one strategy is sufficient to solve AI safety. However, a portfolio of strategies can collectively provide more robust safeguards, buy time for more effective strategies, reduce future competitive pressures, or improve institutional competence in preparedness.

Testing and implementing strategies now can help to refine them and make them more effective in the future. For example, proposing safety standards and publicly reporting on companies’ adherence to those standards, even without state enforcement, could be a useful template for later adaptation and adoption by states; judicial precedents involving liability laws for harms caused by narrow AI systems could set an example for dealing with more severe harms from more advanced AI systems.

Approaches to address risks can trade off against each other. For instance, if safety knowledge and defences are well-progressed, stringent constraints may be less necessary to avoid the worst harms. Conversely, if one approach is lagging or found to be ineffective, others may need to be ramped up to compensate.

Conclusion

We could be in a “tightrope” scenario when it comes to catastrophic safety risk from AI. In this scenario, there is a meaningful risk that catastrophe could occur from advanced AI systems because of emergent dangerous capabilities and misalignment, if we do not act to prevent it. We should mostly act under the assumption we are in this scenario, regardless of its probability, because it’s the only scenario in which our actions matter.

Developing, testing, and improving approaches for reducing risks, including safety knowledge, defences, and constraints, may help us to walk the tightrope safely, reducing the impact and likelihood of catastrophic risk from advanced AI.

Author statement

Ben Garfinkel wrote and delivered a talk titled Catastrophic Risks From Unsafe AI: Navigating the Situation We May or May Not Be In on 20 May 2023, at Effective Altruism Global: London.

I (Alexander Saeri) recorded audio and took photographs of some talk slides, and wrote the article from these recordings as well as an AI transcription of the audio.

I shared a draft of the article with Ben Garfinkel, who looked over it quickly and judged it an essentially accurate summary, while noting that it may diverge from his intended meaning or framing on some smaller points.

I used GPT-4 for copy-editing of text that I wrote, and also to summarise and discuss some themes from the transcription. However, all words in the article were written by me, with the original source being Ben Garfinkel.

- ^

For example, this approach doesn't focus on how far away Artificial General Intelligence may be (“timelines”), the likelihoods of different outcomes (“p(doom)”), or arbitrarily distinguish between technical alignment, policy, governance, and other approaches for improving safety. Instead, it focuses on describing concrete actions in many domains that can be taken to address risks of catastrophe from advanced AI systems.

- ^

An accessible but comprehensive introduction to how GPT-4 was trained, including 3 different versions of the Feedback step, is available as a 45 minute YouTube talk ("State of GPT", Karpathy, 2023 [alt link with transcript]).

You can also read a detailed forensic history of how GPT-3's capabilities evolved from the base 2020 model to the late-2022 ChatGPT model ("How does GPT Obtain its Ability? Tracing Emergent Abilities of Language Models to their Sources", Fu, 2022).

Great write-up. However, the approaches suggested here sound too timid to be effective. Thank you for creating this post.