Context

A few weeks ago, we published Local charity evaluation: Lessons from the "Maximum Impact" Program in Israel, which summarizes our experience in planning and executing the first-ever local nonprofit evaluation in Israel.

Over the last two months, we conducted a thorough analysis of this pilot, and we're happy to share it with the community as well. You can also read this post on Google Docs.

Executive Summary

Background

- The Maximum Impact program aimed to increase evidence-based philanthropy in Israel by partnering with local nonprofits to conduct cost-effectiveness analyses on interventions.

- This research presents our evaluation of the pilot program outcomes, with the goals of refining the program, sharing key learnings, and serving as a testing ground for similar initiatives globally.

Methodology

- We used mixed methods evaluation, combining surveys, interviews, and self-assessments to analyze progress across four metrics: user satisfaction, best practices, impact on participating NPOs, and ecosystem effects.

- To enhance the credibility of our methods, we collaborated with the Rethink Priorities survey team[1] to build the participants' survey, which serves as our primary database in the current evaluation.

Main Results

- Participants gave high satisfaction ratings, averaging 8.2/10 overall, with a strong Net Promoter Score of 48.

- Personalized support during the research process and deadlines seemed most critical in encouraging completion of the program, while monetary incentives were surprisingly less impactful.

- Positive impacts were found on skills, activities, and short-term choices, but we found variation in the participants' readiness to make significant changes on the basis of cost-effectiveness. Further work is required to achieve a shift in funding allocations based on our research.

Key lessons

- We need to refine our messaging on the impact of the research on fundraising, standardize the research process, build long-term partnerships with the philanthropic ecosystem and continue to improve evaluation skills, while investing more resources in donor engagement.

Key lessons for potential organizers of local cost-effectiveness research

- Make hands-on research assistance and a structured process the top priority to demonstrate tangible value and build partnerships with nonprofits. Note that this requires at least some expertise in M&E or quantitative social science; general EA knowledge is insufficient.

- Cultivate relationships with donors, or work in partnership with a large, well-established organization, before launch to better channel funding toward the effective programs that are discovered.

- On the margin, invest in research grants rather than other monetary incentives (e.g., prizes or donation matching).

Background

In the current research, we will evaluate the effectiveness of the Maximum Impact program, which aims to increase the impact of Israeli philanthropy significantly. Over the past year, Maximum Impact partnered with promising local nonprofits to conduct cost-effectiveness analyses. In total, 21 such analyses were published, and a panel of expert judges awarded three organizations a prize for outstanding work. Two of these, Smoke-Free Israel, which does successful tobacco taxation lobbying, and NALA, which cost-effectively leverages existing infrastructure to provide running water to schools in Ethiopia, have the potential to be as cost-effective as GiveWell’s top charities, albeit with less room for funding.

This research aims to find best practices for future program implementation and assess the current impact on participating nonprofits and the philanthropic ecosystem in Israel. While the program has a broader approach, this research focuses on a subset of the key objectives. Specifically, we focus on the impact on the participating organizations: have we succeeded in transforming the measurement and strategic culture among Israeli nonprofits to be more effectiveness-oriented? Increased money transfers towards effective organizations is an important goal and a key part of our ToC, but not a focus of this evaluation, because it is too soon to see significant funding changes based on the research program. A later evaluation should examine the level of success on this crucial aspect.

Theory of Change

Miro presentation of our ToC: link

Inputs

- Total costs (including researchers' paid time): $224,000
- Researchers' time (included in this cost): approx. 150 hours per CEA
- Volunteer hours: approx. 500 hours total
- Nonprofit time: 157 hours per CEA on average
- Experts' time: 60 hours for judges + 20 hours for advisors

Activities

- Donation Portal: a website where we promote our top charities and GiveWell's top charities
- Donation matching: 100k ILS designated for matching donations to our top charities during a two-month period
- Guidance for nonprofits: personal and group meetings
- Connecting researchers, analysts, and EA activists with nonprofits
- Guidance for researchers and volunteers
- Prize: 80k ILS for each of the outstanding nonprofits
- Ready-to-use templates and research guidelines (both original and external public files)
- Expert review of the reports and outputs
- Hard submission deadlines

Outputs

- Cost-effectiveness reports
- List of cost-effective Israeli charities (partial)
- Improved research capabilities among charities
- Wide promotion of MI (podcast, website)
- Connections with donors and funds
- Money transferred through the Donation Portal
- Improved researcher and volunteer skills in cost-effectiveness research
- Written materials for nonprofits and researchers
- "Going global" toolkit for running this program in other countries

Outcomes

- Increased donations to effective Israeli charities
- Increased salience of cost-effectiveness considerations among relevant Israeli NGO stakeholders (incl. management, donors, charities, and investors)
- Improved cost-effectiveness of participating charities
- [Long shot] More cost-effective Israeli charities (at least 2 years from now)
- Growth of effective altruism ideas and community in Israel

Methodology

The ideal evaluation of whether our Maximum Impact program genuinely increases Israeli nonprofits' expected impact and promotes more effective altruism would require extensive follow-up research. For now, we start by assessing the short-term effect of participation using surveys developed together with the Rethink Priorities survey team[2], supported by the interviews we conducted with most of the participants.

This research utilized a mixed methods approach, gathering both quantitative and qualitative data to evaluate the effectiveness of our program and the tools provided.

Specifically, we leveraged three primary data sources:

1. Interviews with Participating Nonprofits

Semi-structured interviews were conducted with most nonprofits that participated in the Maximum Impact program during the pilot year (N=15 out of 21). The interviews averaged 60 minutes and gathered in-depth perspectives on program experiences, effectiveness, areas for improvement and impact.

2. Participant Questionnaires

Detailed online questionnaires were sent to all participating nonprofits, including those that dropped out before completing the program (N=19, ~86% response rate). These questionnaires utilized Likert scale and open-ended questions to quantify and qualify perceptions of the program's value, influence on priorities, community-building impact, and more.

3. Nonprofit Community Population Survey [Work in Progress][3]

Shorter online questionnaires were distributed to the Israeli nonprofit community to assess general perceptions of Maximum Impact, effective altruism concepts, and beliefs around cost-effectiveness analyses. This supported comparative insights between the participants and the general population. The results of this database will not be used in the current study (see footnote 3).

Results

Descriptive statistics

Summary statistics were calculated on key characteristics of the nonprofits participating in the Maximum Impact pilot program. Metrics included the annual budget, years in operation, number of employees, and number of volunteers. Averages for these metrics were also gathered for the broader population of Israeli nonprofits to enable comparison between the sample and the whole population. In addition, we gathered data about the participants' research experience for the counterfactual evaluation. The mean values (with standard deviations in parentheses) are presented in the following table:

| Variable | Program sample | All nonprofits[4] |
| --- | --- | --- |
| Obs. | 19 | 17,248[5] |
| Annual budget | 5,433,333 NIS (5,664,705.9) | 4,961,030 NIS (40,131,665) |
| Tenure | 14.77 years (11.88) | 19.12 years (11.77) |
| Employees | 19 (41) | 32.24 (330.81) |
| Volunteers[6] | 447.38 (median = 50) (945.85) | 48.63 (897.39) |
| Cost-effectiveness research experience | 22.2% | NA |
| Avg. weekly research hours[6] | 30.73 (median = 8) (65.04) | NA |

The descriptive statistics reveal that nonprofits self-selecting into the Maximum Impact program differ in some ways from the average Israeli nonprofit organization. The participating nonprofits were younger organizations, with fewer employees and more volunteers than the general population. These results provide valuable context on the types of organizations that were accepted into and completed the research process. Overall, the sample organizations can be characterized as younger, smaller, and more data-driven than the average Israeli nonprofit.

This is reasonable given that around 50% of the participating organizations were identified as relatively new, growth-oriented groups that opted into the pilot in order to refine their operations and outcome measurement. This raises a strategic question for the next round of the program: What factors predict success in a program like this? Which types of organizations should be targeted for recruitment?

Unfortunately, given the current small sample size, there are no clear demographic patterns predicting success. The participating organizations varied in size, wealth, seniority, and maturity, but there were no significant differences in success rates across these characteristics. One issue worth considering is that very young programs, in existence for less than two years, had some difficulty gathering sufficient information to conduct research of this scale. We recommend that future programs set a minimum maturity threshold for participants so that a rigorous evaluation process can be conducted successfully.

However, the main predictor was, unsurprisingly, the organization's existing data culture and its readiness to use this data to measure the effectiveness of its activities. Organizations already collecting information to quantify their activities, even if not for research purposes, tended to be better candidates for success in this program. Nonprofits not accustomed to data collection struggled more, achieving only moderate research levels.

Main Results

To evaluate the success of the program, we collected data on four key metrics that we expected would serve as useful indicators of progress and effectiveness:

- Participant Satisfaction - Assessed participants' overall satisfaction with the program.

- Best Practices Identification - Evaluated the usefulness and effectiveness of different program aspects through surveys.

- Effect on Participants - Measured impact on participating nonprofits' effectiveness, priorities, and operations.

- Ecosystem Ripple Effects - Estimated the program's role in enhancing cost-effectiveness salience in the broader Israeli nonprofit ecosystem.

To assess these four metrics, we combined the results from our two main data sets: the interviews and the questionnaires with our participants. This mixed-methods approach allowed us to gather multiple perspectives on the program's influence at the participant, organizational, and ecosystem levels.

Participant Satisfaction

To directly estimate our participant satisfaction, our survey included two key questions - a Net Promoter Score (NPS)[7] and an overall satisfaction rating.

The NPS asks participants how likely they are to recommend the program to others on a 0-10 scale. Our NPS was 48, considered a great score. We also asked participants to directly rate their overall satisfaction with the Maximum Impact program on a scale from 1-10. The average satisfaction level reported was 8.2 out of 10, indicating a high level of satisfaction among participants.
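For readers unfamiliar with the metric, the standard NPS calculation can be sketched as follows. This is a minimal illustration, assuming the conventional definition (promoters rate 9-10, detractors rate 0-6, and the score is the percentage of promoters minus the percentage of detractors). The function name and the example ratings are hypothetical and are not our survey data.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to 100) for a list of 0-10 recommendation ratings."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be on a 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Passives (7-8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical ratings for illustration only; NOT the program's survey responses.
example_ratings = [10, 9, 9, 8, 7, 10, 6, 9, 8, 10]
example_nps = net_promoter_score(example_ratings)
print(round(example_nps))
```

Because passives dilute the score without adding to it, a group with many 7-8 ratings can report high average satisfaction while still producing a moderate NPS.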

The strong Net Promoter Score (NPS) and high average satisfaction ratings indicate most participants had a positive experience with the program overall. However, as a free service offering extensive complementary resources, including financial and professional assistance, we expected an even higher NPS. This suggests there is room for improvement in fully satisfying participant needs and accurately addressing their priorities.

As will be discussed in the following sections, one potential area for enhancement is providing more support to help participants leverage their research efforts for fundraising purposes. We could better assist nonprofits in explaining and applying their findings when engaging with prospective donors focused on effectiveness and evidence-based giving, or improve our messaging to make clear that only top performers will get funding assistance. Facilitating connections with relevant funders aligned with these priorities could also increase the program's value.

Further examination of the interviews revealed mixed views on donor engagement. While participants were highly satisfied with the research, analysis, and guidance, some felt the program fell short on securing funding. Five of 15 interviewees expressed concerns about insufficient donor support. As one CEO put it, "An expectation was created that [the research] would connect them to new donors (and specifically from the EA community), but now it doesn't seem to be materializing."

Although we will take this feedback into consideration, securing funding was not our core objective; the focus was on enabling analytical rigor for nonprofits and advocating for effective giving based on the results. While we aim to demonstrate links between effectiveness and funding, this requires clearer communication to avoid misconceptions. We think the misaligned expectations arose partly because we over-emphasized increased funding when initially marketing the program, in part due to the "funding overhang" that preceded the FTX collapse.

Another issue worth considering is the significant time demands. Survey results show participating nonprofits invested over 150 work hours on average, on top of approximately 150 research hours completed by our team per organization. Interviews validated these demanding time requirements, a finding we aim to address moving forward.

Best Practices Identification

Which components were the most important?

The main purpose of this chapter is to identify the level of relevance and usefulness of the components of our program to our beneficiaries. During the pilot we provided participants with three types of support:

- Personal support - research grants of $5,200 for 15 selected organizations; affordable paid researchers (paid by the organizations), all of whom were PhD and MA students from quantitative social science departments at top Israeli universities (mostly economists); and EA community volunteers, who served as personal project managers on our behalf.

- Expert support - meetings with the program director, an experienced economist who specialized in intervention evaluation at the Bank of Israel; technical help from data analysts, on demand, for modeling and code-related tasks.

- Group guidance - group meetings with our director in which participants learned the basics of effectiveness research, cause prioritization, econometrics, and general research skills; ready-to-use research templates and written materials.

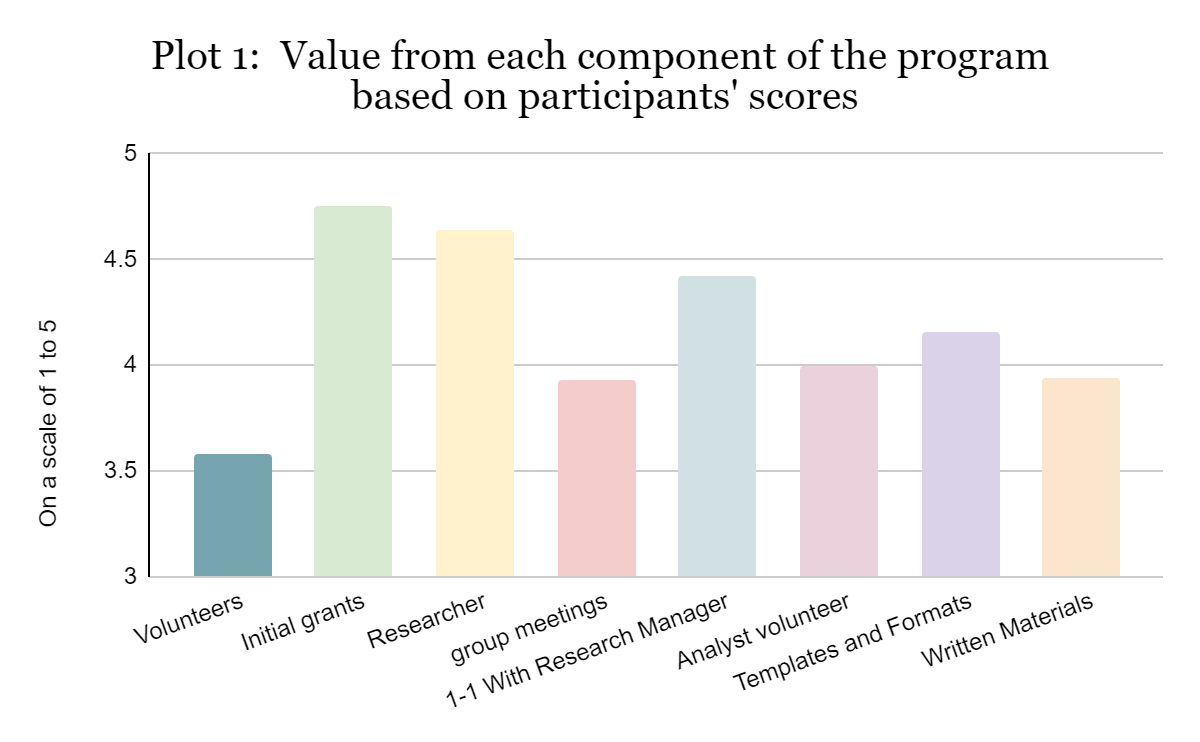

Firstly, participants were asked to specify the value they received from each component (on a 1-5 scale). The following graph plots the survey results:

Research grants received the top rating, with a score of 4.75 out of 5. Unsurprisingly, the paid researcher ranked very similarly, as the grant primarily funded the researcher's role in most cases. Two lower-cost elements also ranked highly: meetings with our research director and ready-to-use templates and materials. These results are encouraging to us, as these elements do not require any variable costs on our side.

Although EA volunteer researchers were the lowest-rated component, interviews suggested a more nuanced view. From our understanding, the volunteer's value depended on two factors: the volunteer's level of commitment and expertise, and whether the nonprofit had a paid researcher (on their behalf or ours). In cases lacking a paid researcher, committed and skilled volunteers were critical to the research's success. The volunteer role has some value for resource-constrained organizations, but may be redundant when affordable paid researchers are available. Regardless, it seems crucial to screen the volunteers to ensure they add value to the organization in the evaluation process.

Although the written materials ranked relatively low in usefulness, they require minimal ongoing effort to maintain as publicly available resources. Since these materials are already published, we will continue providing them when requested and occasionally create new content when required. The materials serve as useful references for participants and enable a level of standardization that allows comparison, which is important from the program's perspective. Even if they are not seen as central to the program's value, it seems cost-effective for us to continue using them as an integral part of this kind of program.

Incentives analysis

The next area we want to explore in our best practices analysis is identifying the most effective incentives for participants to fully engage with and successfully complete programs. While the previous section offered insights into making research more accessible and rigorous for organizations, this section aims to provide guidance on what most motivates organizations to see programs through to completion despite potential challenges. The goal is to understand how to encourage full participation (rather than how to best support them, as presented before).

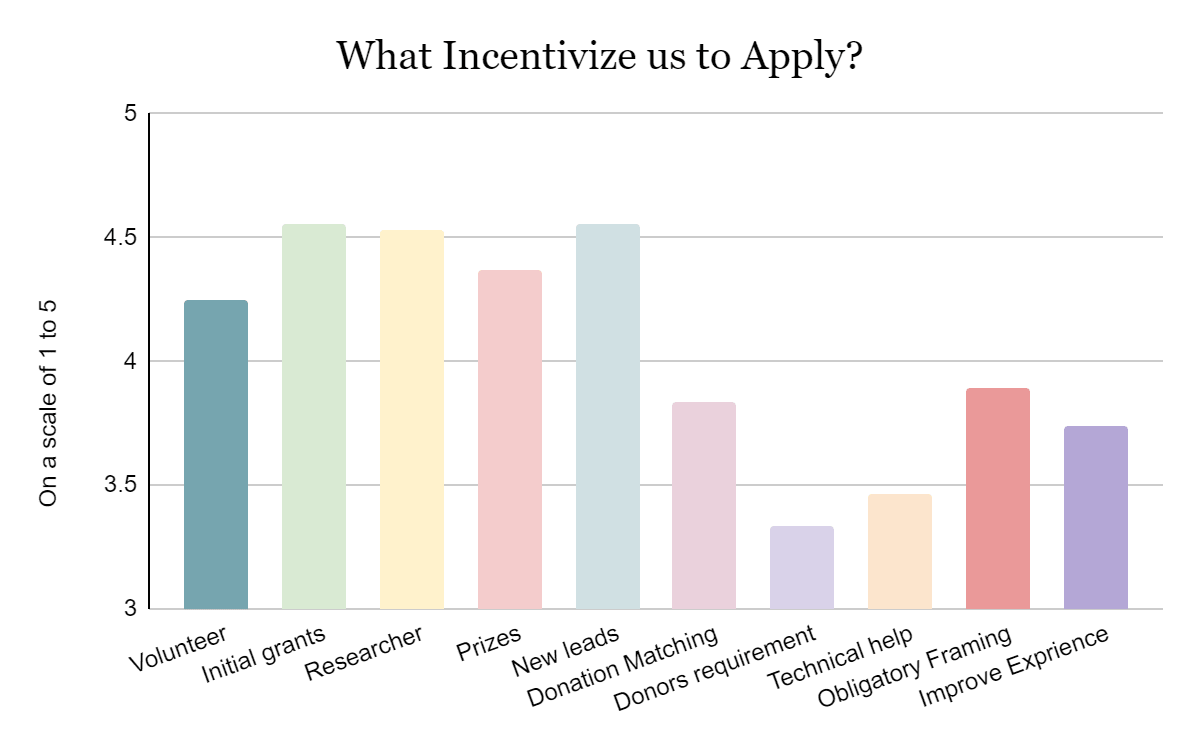

As can be seen in plot 1-a (appendix), three key incentives - personalized professional assistance from researchers, research grants, and the prospect of new donors - were perceived as equally significant motivators for participants to apply to our program. Interestingly, donation matching was given a relatively lower rank by the participants.

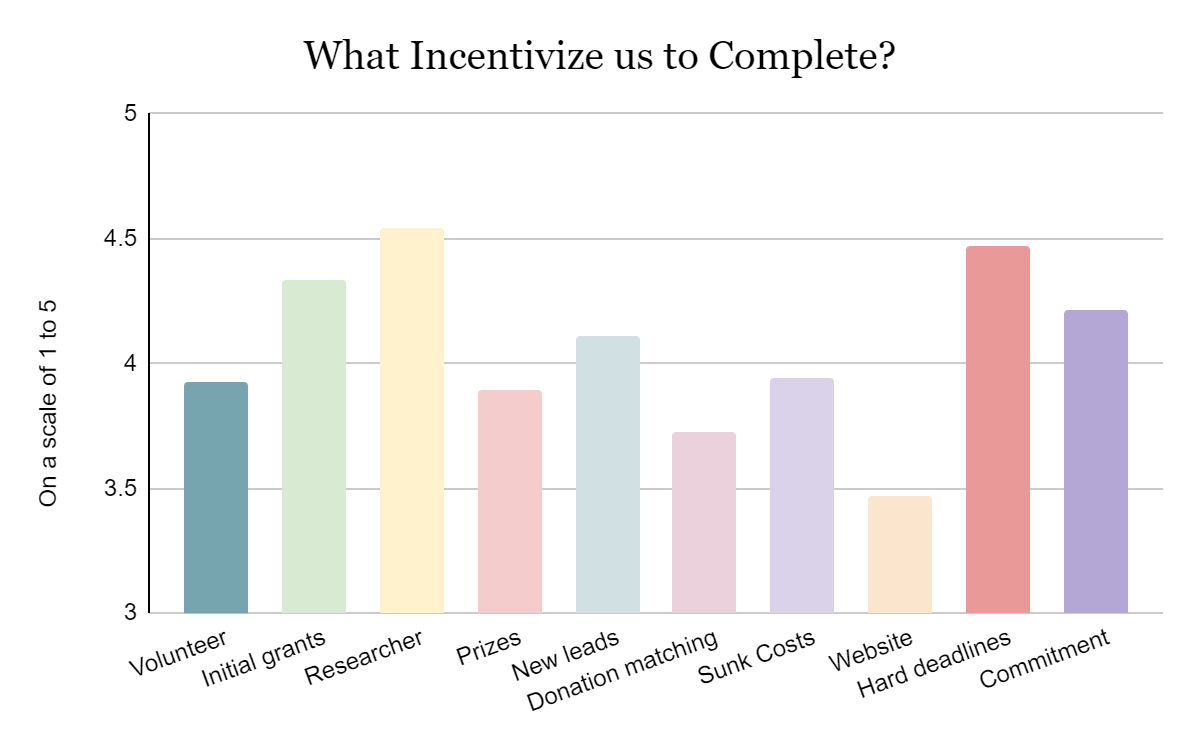

When examining the incentives that facilitated participants in successfully navigating through the program, the role of personal researchers and firm deadlines for different stages of the program stood out. The participants' dedication to the process and commitment to our team also scored highly, along with the initial grants. Conversely, prizes and donation matching were accorded lower scores despite consuming a substantial portion of the program's budget.

The quantitative results were supported by the interviews, where most participants stated that the hard deadlines contributed greatly to their completing the process, if they were not the most important feature. The interviews suggested that two key things initially incentivized them to apply: the initial research grant (they could not conduct research without it due to budget constraints) and the guarantee of a complete and rigorous final research product. This qualitative data provides valuable insights into which specific features were most important in motivating our participants to engage in this extensive process.

These findings suggest that a successful replication of this model could be achieved with a lower investment in monetary incentives, apart from the initial research grants. Such a strategic move could result in overall savings of approximately $90,000 (~40% of our total costs) for a similar execution of the program. This means the program has the chance to significantly improve its cost-effectiveness ratio in the future.
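The savings share quoted above follows directly from the figures in this report. The sketch below reproduces that arithmetic and, as a rough illustration only, derives a cost per published analysis from the 21 published CEAs. The per-analysis figure is our own back-of-the-envelope derivation, and it assumes, hypothetically, that the same number of analyses would be produced at the lower budget.

```python
TOTAL_COST_USD = 224_000        # total pilot cost, as listed under Inputs
ESTIMATED_SAVINGS_USD = 90_000  # approximate savings from reduced monetary incentives
PUBLISHED_ANALYSES = 21         # cost-effectiveness analyses published in the pilot


def savings_share(savings, total):
    """Return savings as a fraction of the total cost."""
    if total <= 0:
        raise ValueError("total must be positive")
    return savings / total


share = savings_share(ESTIMATED_SAVINGS_USD, TOTAL_COST_USD)
remaining_cost = TOTAL_COST_USD - ESTIMATED_SAVINGS_USD

# Rough per-analysis costs; assumes the same output (21 analyses) at both budgets.
cost_per_analysis_now = TOTAL_COST_USD / PUBLISHED_ANALYSES
cost_per_analysis_lean = remaining_cost / PUBLISHED_ANALYSES

print(f"Savings share: {share:.1%}")
print(f"Remaining budget: ${remaining_cost:,}")
print(f"Cost per analysis: ${cost_per_analysis_now:,.0f} -> ${cost_per_analysis_lean:,.0f}")
```

The per-analysis comparison only holds if completion rates do not fall when prizes and matching are removed, which the survey evidence suggests but does not prove.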

Effect on Participants

One of the main strengths of our current program was the personal, tailored process we had with each of the organizations being evaluated. This kind of relationship was highly beneficial in terms of our impact on the organization's strategy and operations, specifically in the field of monitoring and evaluation (M&E). This result was highlighted in all the metrics we measured in our questionnaires.

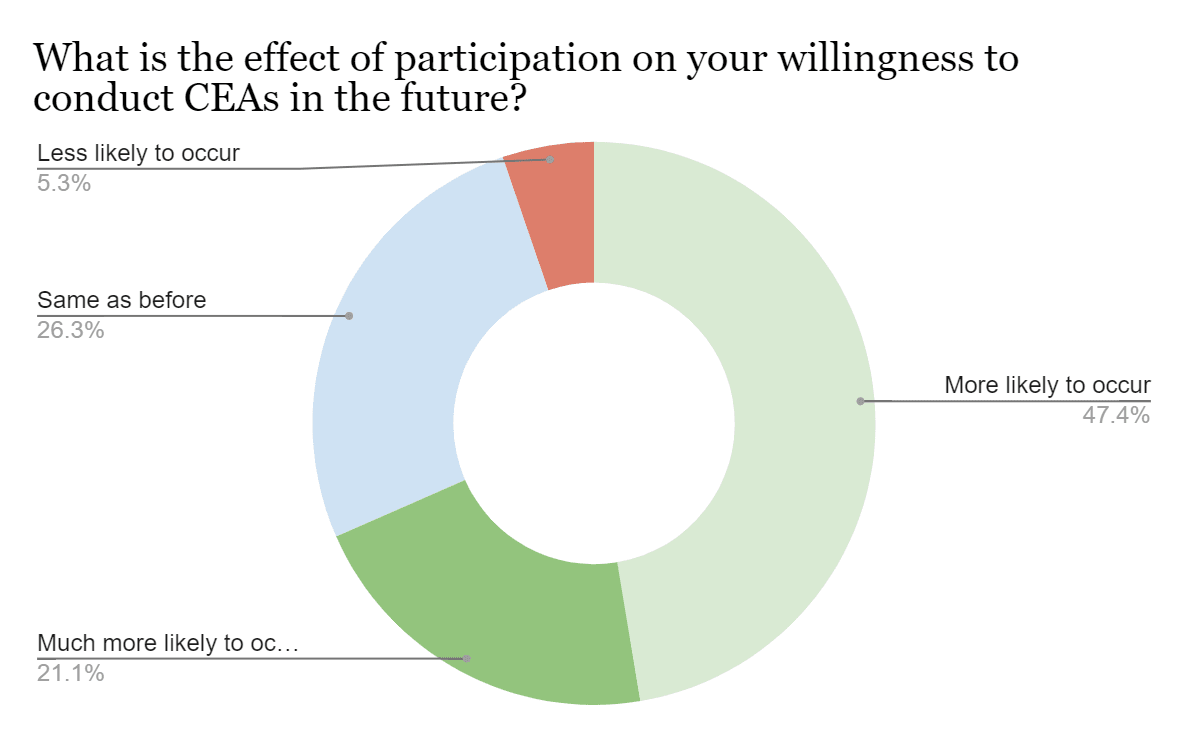

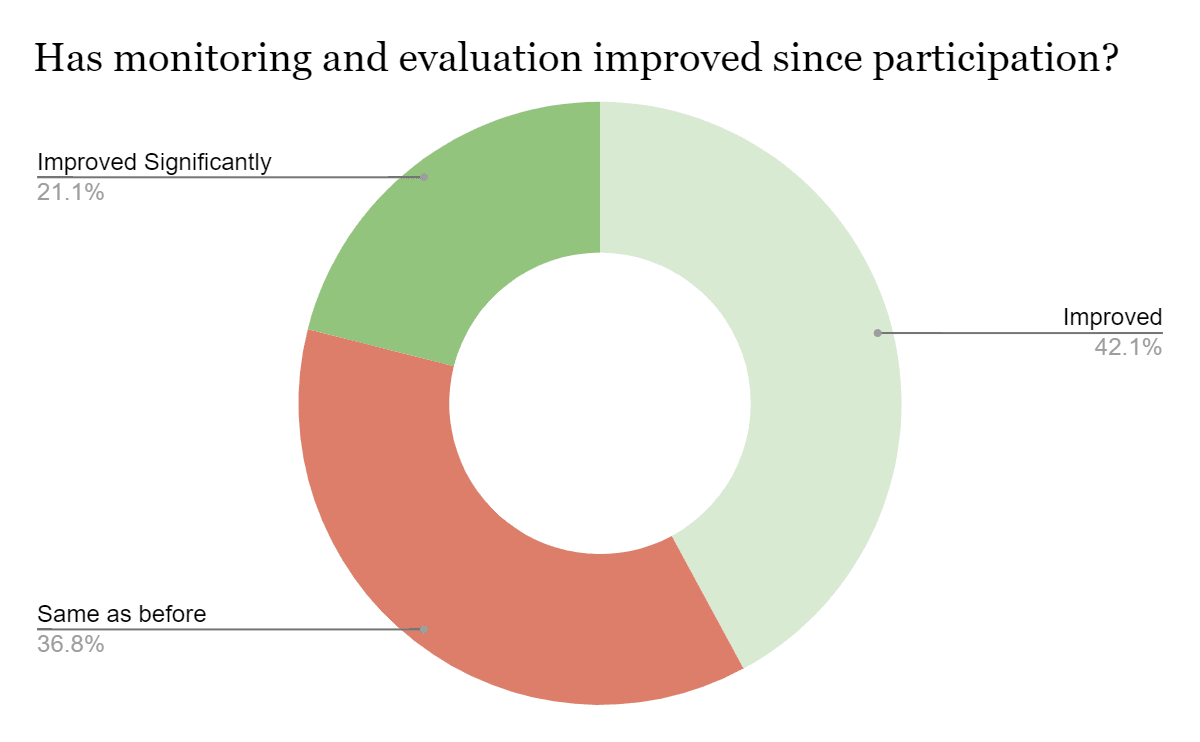

As can be seen in the accompanying plots, participants reported a positive effect of the program on their organizations' measurement skills. Specifically, 67.5% of participants reported an increased probability of conducting similar analyses in the future. Additionally, 63.2% of participants reported an improvement in their M&E skills due to the program. The interviews suggest that most of the participants who reported no effect on this metric were already well-aligned and experienced with evaluation methods; some of them had already conducted CEAs in the past.

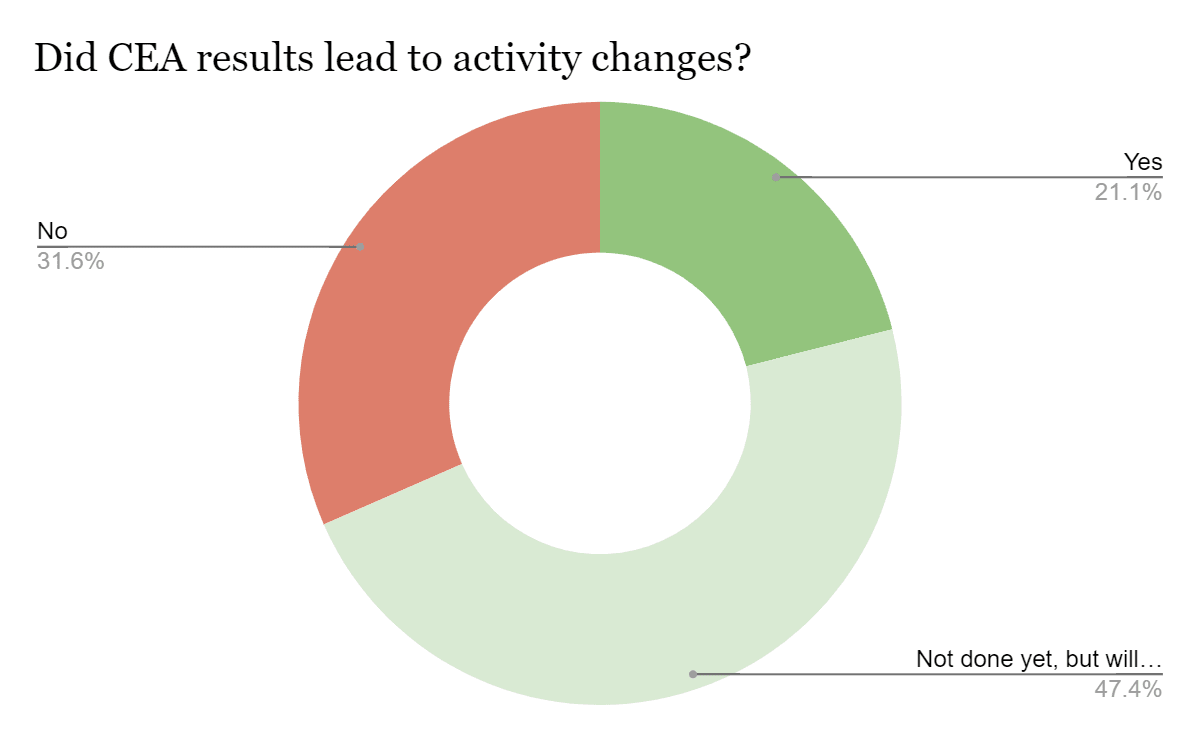

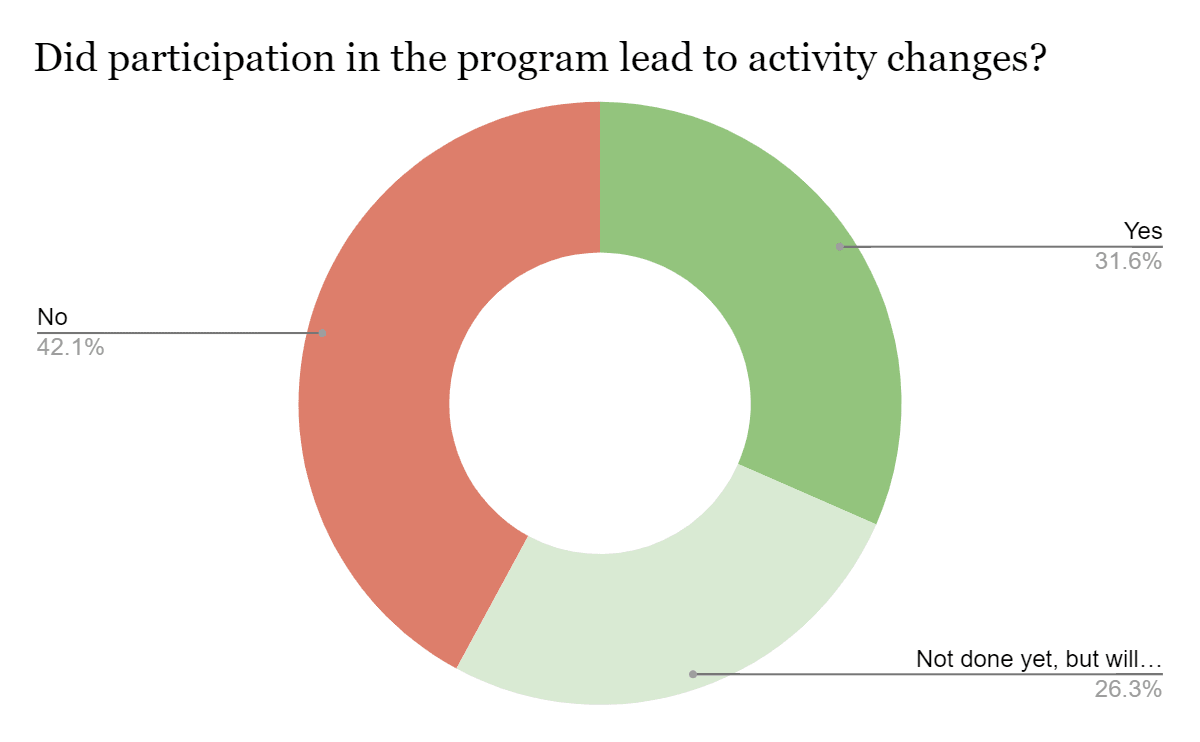

Plot 2 - Reported effects on the organizational level

The results also suggest a short-term effect on the activities and investment choices of the participating organizations. About 68.5% of the organizations reported that major changes were made to the evaluated program due to the CEA findings and the feedback they received. Even setting aside the results themselves, participants indicated that simply being part of a program motivated by effectiveness optimization was helpful. Specifically, 57.9% of participants reported that program participation led to immediate changes in their activities to improve effectiveness, as illustrated in the following paragraph.

One of our participants, NALA Foundation, provided an insightful example. As they developed the theory of change for their WASH program in Ethiopia with our researcher, one realization stood out. NALA aims to "restore safe water access in schools and reduce hygiene-related illness." During discussions, the researcher asked how NALA would ensure that children do not drink this water, as it is not safe to drink. To NALA it seemed obvious that the water was not for drinking, but they recognized that beneficiaries and outsiders may not find that so clear. As they told us, "That was a significant and much needed realization for us, even before starting the evaluation." Working with the researcher helped NALA gain a new perspective on communicating its objectives effectively, a change that may prevent undesired circumstances in the future.

The interviews show similar results. Some participants suggest a positive and major impact on their activity choices, and some report no major effect. As one CEO stated, “There was some ceiling effect to the impact you could have on us on this aspect, since we were already aligned with effectiveness principles”, while others suggest major implications: “It's changed how we operate fundamentally. We're constantly assessing ‘How effective is this?’ in a way we never did before. It's just embedded in how we think now”.

Ecosystem Effects

The last desired outcome of our program is to harness the research products and new partners in order to magnify the salience of effectiveness in the broader ecosystem of the nonprofit sector in Israel. In our quantitative analysis, we examined two factors:

- The frequency of mentions of effectiveness in discussions with funders and within the organization.

- The role of effectiveness and KPI measurement in the decision-making of both organizations and donors.

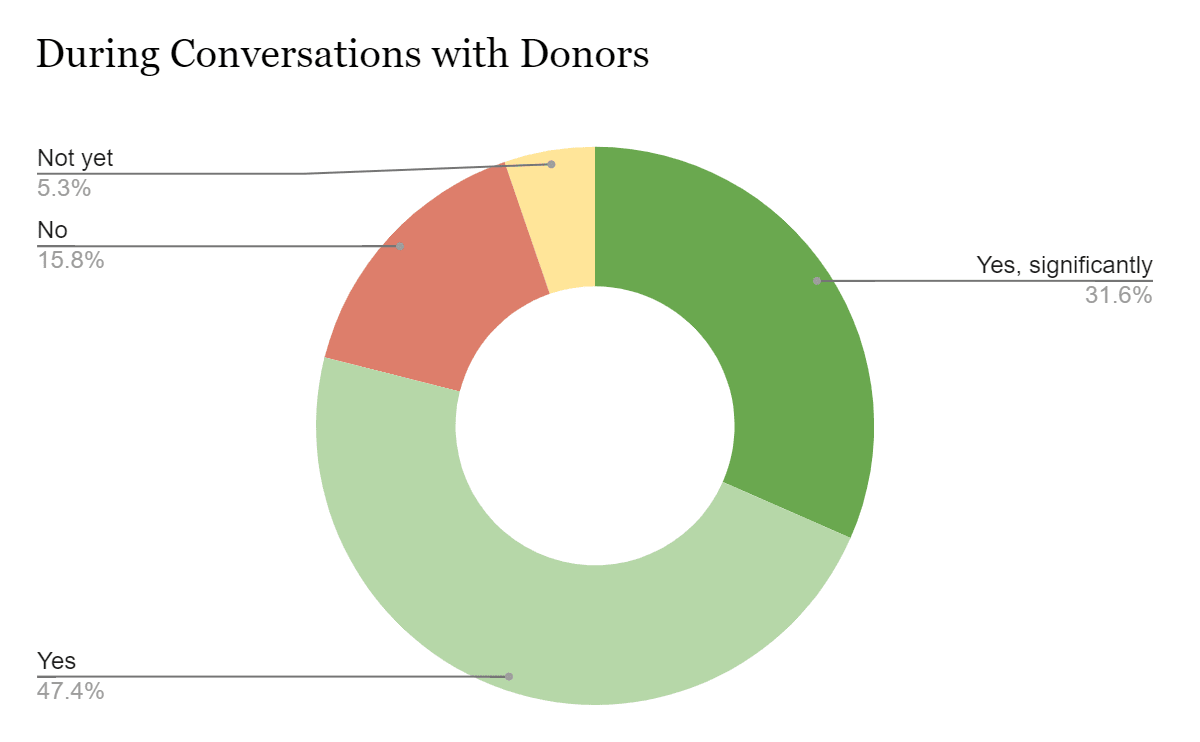

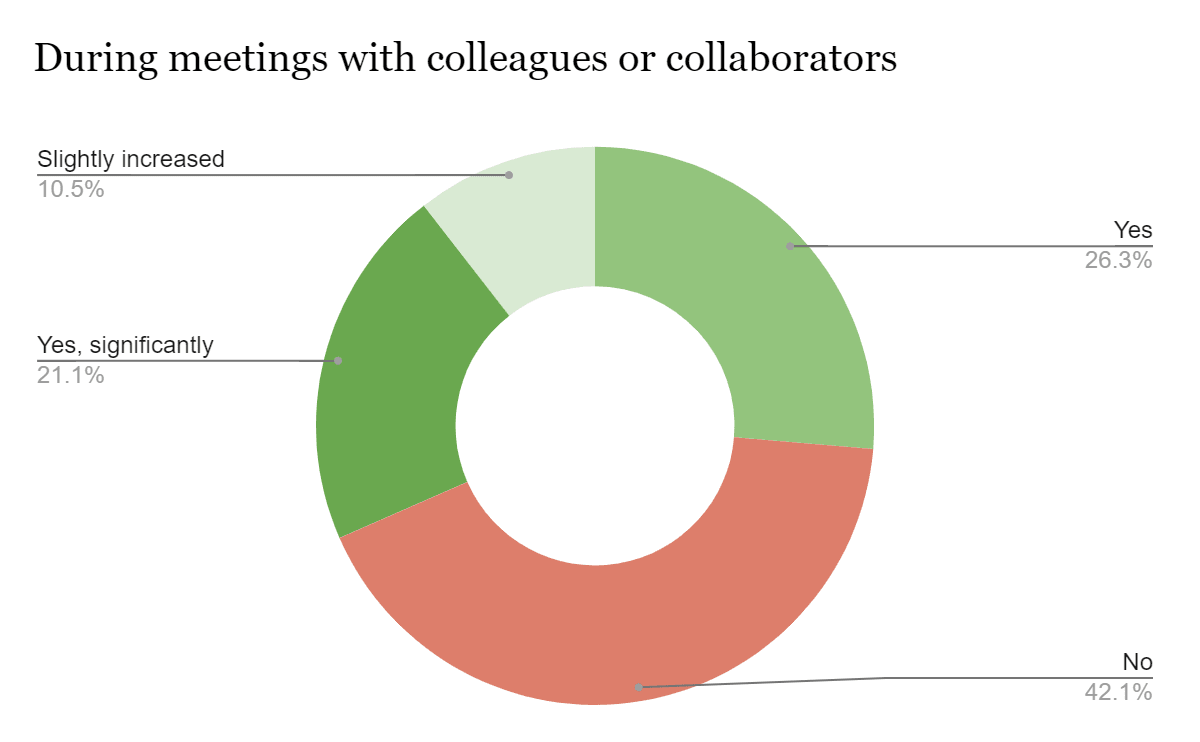

To measure the first factor, participants were directly surveyed on how often they mentioned effectiveness in conversations after the program compared to before. The results are presented in Plot 3.

Plot 3: Frequency of effectiveness mentions compared to one year ago

Remarkably, over 84% of participants reported increased frequency of effectiveness-related discussions with donors. This result is supported by the fact that 15 out of 21 participating organizations have already used the effectiveness evaluations produced during the program in fundraising efforts. On average, each organization submitted more than six grant requests using our results in the past 3 months alone.

While increased effectiveness discussions and research utilization for fundraising are positive signs, there is a risk these could be used to cast ineffective organizations in a more favorable light. Organizations participating in the program and conducting research could point to these actions as signals of being data-driven and rigorous, even if the research does not demonstrate true impact. This may enable some less effective nonprofits to secure more funding by creating an appearance of effectiveness.

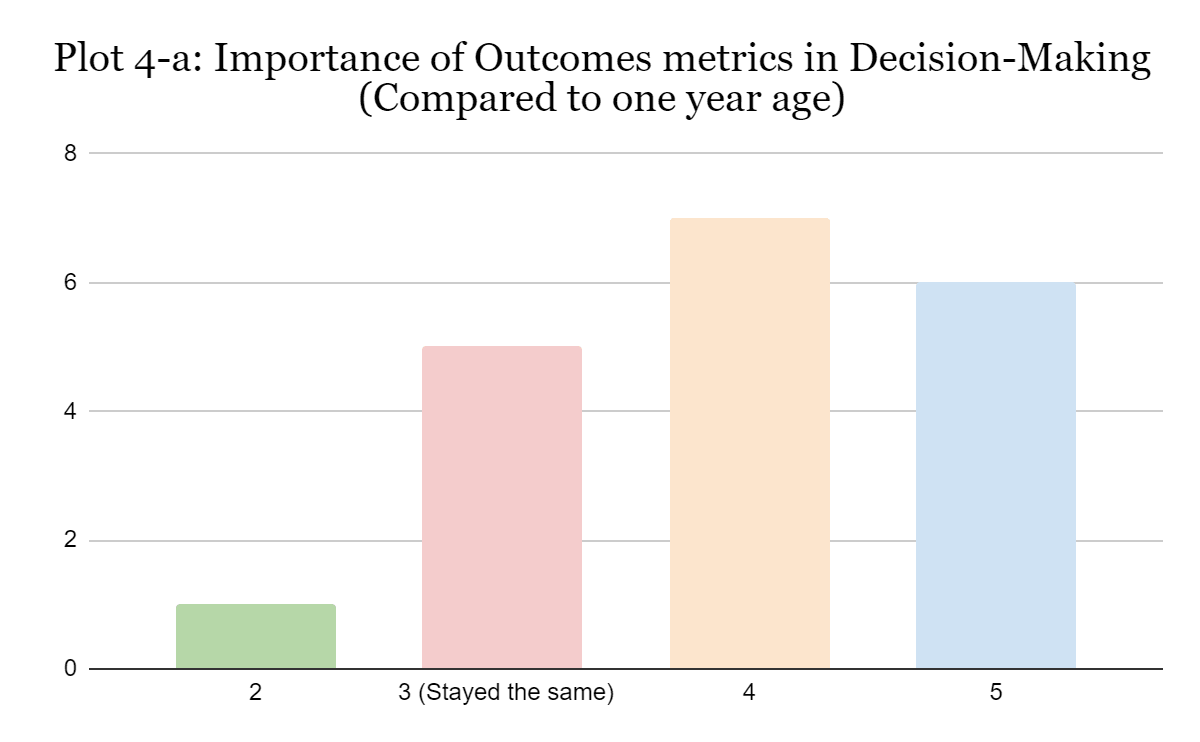

Regarding the second factor, prioritizing effectiveness in decision-making shows significant shifts compared to one year ago. The results presented in Plot 4-a and 4-b in the appendix indicate that overall, 68.4% of participants stated that effectiveness considerations have become more integral to their decision-making compared to the previous year.

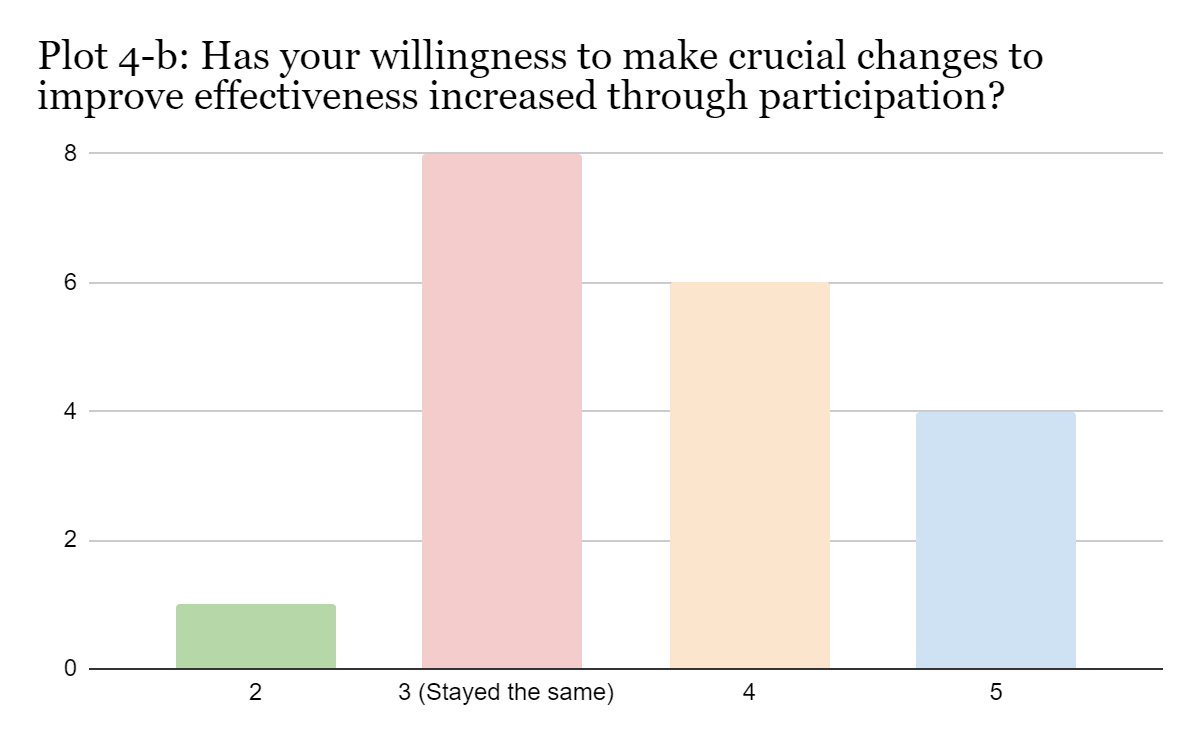

However, when asked directly about their willingness to make major changes to improve effectiveness, such as changing staff or canceling ineffective programs, the results were more mixed. 52.6% of participants indicated they were more willing to take significant steps to optimize impact compared to before the program.

This hesitation was reflected in an interview with one nonprofit CEO, who noted: "The research did not catalyze big changes in our activities because the researcher remained external. Enacting major change requires much deeper involvement from us as the partner organization - we can't just be the subject, but must be actively embedded in the analysis".

However, this point of view seems closely tied to the engagement level of the individual organization. Another CEO reported a different experience: "The program motivated us to invest heavily in M&E from now. We've decided to transition our entire organization to a CRM system for the first time after over 40 years. We learned the importance of ensuring measurement and data are accessible at any time."
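As a reading aid, several of the survey percentages in the Results section line up with simple head counts out of the 19 questionnaire respondents. The sketch below shows that conversion. It assumes every respondent answered each question, which this report does not state explicitly, and the function name is ours.

```python
N_RESPONDENTS = 19  # questionnaire respondents, per the Methodology section


def share_of_respondents(count, n=N_RESPONDENTS):
    """Return count/n as a percentage rounded to one decimal place."""
    if not 0 <= count <= n:
        raise ValueError("count must be between 0 and n")
    return round(100 * count / n, 1)


# Head counts that correspond to percentages quoted in this report,
# under the assumption that all 19 respondents answered each question.
for count in (10, 11, 12, 13, 16):
    print(f"{count}/{N_RESPONDENTS} = {share_of_respondents(count)}%")
```

With a sample this small, a single organization changing its answer moves any of these figures by about five percentage points, which is worth keeping in mind when comparing them.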

Discussion

Main Takeaways

Overall, the results indicate the program succeeded in increasing participant focus on cost-effectiveness and evidence-based decision-making. Both qualitative and quantitative data showed most organizations increased prioritization of impact considerations. This is a very encouraging sign of a shift towards an effectiveness-focused culture emerging among participants.

However, the findings also highlight areas needing improvement. While participant satisfaction was high overall, we must address concerns on insufficient donor connections and heavy time burdens. The interviews revealed mixed views on donor engagement and highlighted the extensive time demands on nonprofits. Although securing funding was not a core goal, we aim to demonstrate clear links between effectiveness and funding. Additionally, we can mitigate demands by refining research protocols and providing more resources.

Best Practices – The results suggest that personalized research support and firm deadlines were most critical for completion, while prizes and matches were less impactful. This indicates the program could likely replicate its success with fewer monetary incentives and more focus on providing high-quality personalized research guidance.

This finding is highly relevant for colleagues considering running similar programs.

Effects on participants – The program positively impacted evaluation skills, activities, and short-term choices. However, the readiness to make major changes based on findings varied. As the interviews highlighted, deeper ongoing engagement is required to drive large-scale change. We believe long-term partnerships and capacity building (specifically in M&E) are likely needed to transform organizations holistically toward improved effectiveness.

Ecosystem effects - Increased effectiveness discussions and some decision-making shifts were promising signs. However, the mixed readiness for substantive changes indicates a need for more work on the donors' side rather than the organizations' side. Achieving the program's full vision requires moving beyond a one-time effort to continuous, prolonged work. We believe substantial change will be achievable only after 2-3 years of work.

Counterfactual

To estimate the counterfactual scenario of organizations conducting similar quality cost-effectiveness analyses without our program, we utilized a simple methodology based on nonprofit self-assessments. Specifically, we asked participants to subjectively judge the likelihood they could have achieved similar analytical outputs independently, given their backgrounds. We quantified these estimations into percentages reflecting each nonprofit's probability of successfully completing comparable research absent our involvement. Explanations for the scoring are provided in Table 5-a (see appendix). In total, 18 organizations provided sufficient data for analysis.

Based on their explanations in the interviews, along with their relevant background variables (past effectiveness research experience and weekly research hours in the organization), we calculated for each organization the expected likelihood (in %) that they could achieve the same product without our program. Overall, we determined that our program played a “crucial role”[8] in achieving a rigorous effectiveness report for 15 of 18 recipients (83.3%). For 12 organizations (66.7%), we concluded our involvement was vital, meaning there was negligible probability[9] of generating this level of effectiveness research in the next three years without our program or similar external support.

With the current data, our program likely enabled most participants to achieve more robust and higher-quality cost-effectiveness analyses than they could have developed independently in the same timeframe. However, quantifying the precise counterfactual probability is difficult without more rigorous methods and data sources. Supplementing the self-assessments with expert risk assessments of participants' counterfactual research proposals, as well as weighting by analytical resource levels, could provide a more refined estimate. Nonetheless, the participant perspectives offer valuable indicative evidence that our program expanded their capacity to generate actionable findings on resource allocation optimization.
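The aggregation step in this section, turning per-organization probabilities into the "crucial" and "vital" shares, can be sketched as below. This is only an illustration: the two cut-off values are hypothetical placeholders standing in for the definitions given in footnotes [8] and [9], and the example probabilities are invented rather than taken from Table 5-a.

```python
def counterfactual_summary(probabilities, crucial_below, vital_below):
    """Summarize how many organizations fall under each counterfactual cut-off.

    probabilities: estimated chance (0-1) that each organization could have
    produced a similar analysis without the program.
    """
    if not probabilities:
        raise ValueError("probabilities must not be empty")
    n = len(probabilities)
    crucial = sum(1 for p in probabilities if p < crucial_below)
    vital = sum(1 for p in probabilities if p < vital_below)
    return {
        "n": n,
        "crucial": crucial,
        "vital": vital,
        "crucial_share": round(100 * crucial / n, 1),
        "vital_share": round(100 * vital / n, 1),
    }


# Hypothetical inputs and cut-offs for illustration only.
example_probabilities = [0.05, 0.05, 0.10, 0.30, 0.60, 0.80]
summary = counterfactual_summary(
    example_probabilities,
    crucial_below=0.5,  # placeholder cut-off, not the report's definition
    vital_below=0.2,    # placeholder cut-off, not the report's definition
)
print(summary)
```

Keeping the cut-offs as explicit parameters makes it easy to check how sensitive the headline shares are to where the lines are drawn.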

Recommendations and Conclusions

This chapter aims to synthesize key recommendations based on learnings from the pilot year, both to strengthen Maximum Impact and provide guidance to peer organizers seeking to initiate similar programs in other communities. While initial results indicate the program model shows promise, refinements can enhance impact and help translate insights to fellow practitioners.

Operationally, scaling impact requires balancing analysis depth with feasible timelines. We suggest developing standardized research protocols and tools to streamline the process for partners while preserving analytical rigor. Building dedicated research staff capacity could also reduce organizational time burdens. Rather than one-time projects, cultivating multi-year partnerships with a smaller cohort of nonprofits may offer greater potential for lasting culture change. Concentrating on M&E training and resources can enable deeper organizational transformation and evaluation skill building.

Additionally, participant feedback indicates that managing expectations on fundraising assistance is crucial. We recommend clearly positioning fundraising support as a secondary goal and providing guidance on how to use the research results in funding applications. Facilitating introductions to aligned funders could also be beneficial. However, the core focus going forward should remain conducting rigorous research that empowers nonprofits to optimize their impact.

Finally, expanding on the pilot to shape the broader philanthropic ecosystem will be critical going forward. Educating donors on utilizing effectiveness data and advocating its integration into funding criteria can help drive large-scale impact. Maintaining public databases of cost-effectiveness research is also valuable for spurring informed giving.

Although we did not discuss it in this report, we think it is important to mention that we succeeded in identifying some effective local nonprofits. The most promising organization among those we evaluated (and one of the most effective nonprofits in Israel) is Smoke-Free Israel. Our evaluations suggest they may be more effective than GiveWell's top charities, with an estimated 7.18 QALYs per dollar, although they currently have limited room for funding. We rewarded two other organizations for their relatively high levels of effectiveness: NALA, a WASH intervention in Ethiopia, and Kav LaOved, which helps migrant workers from developing countries recover unpaid wages, an important yet neglected cause. We were also impressed by the Better Plate accelerator operated by MAF, a local accelerator focused on alternative protein startups. Kudos to them for their hard work and commitment to our process.

Appendix

Plot 1-a: Incentive scores for applying to and completing the program

Table 5-a: Counterfactual analysis summary table

This is an anonymized version of the counterfactual analysis data summary. The main outcome variable is “Probability of achieving similar results”, i.e., the chance that the organization could have achieved the same research level without our program. It was estimated mainly from the interviews, in which we asked participants directly about this probability and asked them to explain exactly why they thought so.

In addition, we integrated two background variables from the survey:

1. How many FTEs were dedicated to research in the organization before the program started?

2. Did they conduct any effectiveness/cost-effectiveness research on any program in their organization before the program?

| Organization | Research hours (total per week) | Prior effectiveness research (1 = yes, 0 = no) | Probability of achieving similar results (%) | Explanation for counterfactual |
|---|---|---|---|---|
| 1 | 10 | 0 | 10 | Did not have enough funding or research skills; collected data |
| 2 | 3 | 0 | 50 | Did not have anything except documentation of the results; the high score is because the final result was not sufficient either |
| 3 | 10 | 0 | 10 | Needed skills and knowledge that we provided them with |
| 4 | 145 | 1 | 70 | Did similar research. We contributed much-needed motivation, with one of our researchers, to continue an existing process that had faded away |
| 5 | 4 | 0 | 40 | Needed the research grant and time constraints for motivation, but they had OK research skills |
| 6 | 2 | 0 | 20 | Needed skills and knowledge that we provided them with |
| 7 | 1 | 1 | 70 | Did similar research with an external researcher; they needed us for research skills and for the grant |
| 8 | 34 | 1 | 80 | They had everything. Reported that the researcher and our materials changed their perspective |
| 9 | 2 | 0 | 40 | Did not have anything except documentation of the results; the relatively high score is because the final result was not sufficient either |
| 10 | 2 | 0 | 20 | They were data-based and research-oriented but needed more tools and resources. Received a needed grant from Charity Entrepreneurship for this purpose, as well as mentors on our behalf |
| 11 | 15 | 1 | 30 | Insufficient knowledge of how to conduct this kind of research, but definitely research-oriented. Until now they had conducted some cost-benefit analysis with an external evaluator |
| 12 | 4 | 0 | 40 | Already had part of the research, although no knowledge of cost-effectiveness at all. We were much needed as mentors and for structure, although we did not give them any grants or researchers. Therefore the counterfactual probability is medium |
| 13 | 1 | 0 | 30 | Very far from their domain, but they had good data orientation. The research grant and professional help were much needed |
| 14 | 3 | 0 | 30 | Good data orientation; needed knowledge and framing from us |
| 15 | 10 | 0 | 60 | Already had part of the research, although no knowledge of cost-effectiveness at all. We were much needed as mentors and for structure, although we did not give them any grants or researchers. Therefore the counterfactual probability is medium |
| 16 | 8 | 0 | 10 | Needed skills and knowledge that we provided them with; did not have sufficient resources to conduct it without us |
| 17 | 40 | 0 | 60 | They have a well-functioning research team. Nevertheless, it was the first time they conducted this kind of research on their WASH project. They were supplied with a motivated researcher and did good work, which we had not seen from them before |
| 18 | 70 | 0 | 40 | Lacked proper research skills, although they are aligned with effectiveness-based work. We gave them the money needed for the report; nevertheless, it was of medium quality only |
| Mean | 20.22 | 0.22 | 39.44 | |
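The summary figures can be recomputed from the table with a few lines of Python. This is a minimal sketch, not the procedure used in the evaluation: the row tuples are transcribed from Table 5-a, the names `ROWS`, `CRUCIAL_BELOW`, `VITAL_AT_MOST`, and `summarize` are hypothetical, and the cutoffs are assumptions. The “crucial role” cutoff (probability below 70%) follows the threshold footnote. For the “vital” group, counting probabilities of 40% or less reproduces the 12 organizations reported, while counting strictly below 40% gives 8, so the sketch reports both.

```python
from statistics import mean

# (research hours per week, prior effectiveness research 0/1, probability in %)
# Transcribed from Table 5-a, organizations 1 to 18 in order.
ROWS = [
    (10, 0, 10), (3, 0, 50), (10, 0, 10), (145, 1, 70), (4, 0, 40), (2, 0, 20),
    (1, 1, 70), (34, 1, 80), (2, 0, 40), (2, 0, 20), (15, 1, 30), (4, 0, 40),
    (1, 0, 30), (3, 0, 30), (10, 0, 60), (8, 0, 10), (40, 0, 60), (70, 0, 40),
]

CRUCIAL_BELOW = 70  # assumption: "crucial role" means probability < 70%
VITAL_AT_MOST = 40  # assumption: "vital" read as probability <= 40%


def summarize(rows):
    """Recompute the column means and the threshold counts for the table."""
    probabilities = [p for _, _, p in rows]
    return {
        "n": len(rows),
        "mean_hours": round(mean(h for h, _, _ in rows), 2),
        "mean_prior_research": round(mean(d for _, d, _ in rows), 2),
        "mean_probability": round(mean(probabilities), 2),
        "crucial": sum(p < CRUCIAL_BELOW for p in probabilities),
        "vital_at_most_40": sum(p <= VITAL_AT_MOST for p in probabilities),
        "vital_below_40": sum(p < VITAL_AT_MOST for p in probabilities),
    }


summary = summarize(ROWS)
for key, value in summary.items():
    print(f"{key}: {value}")
```

The three means match the “Mean” row of the table, and the “crucial” count matches the 15 of 18 organizations reported in the counterfactual analysis.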

- ^

We would like to express our gratitude to David Moss, Willem Sleegers, and Jamie Elsey from Rethink Priorities for their invaluable assistance with the survey design.

- ^

We would like to express our gratitude to David Moss, Willem Sleegers, and Jamie Elsey from Rethink Priorities for their invaluable assistance with the survey design.

- ^

These results are not part of this version of the report, as they will continue to be collected over the next two months. An update will be published here if required.

- ^

Data from the Guidestar Monthly Nonprofits Report database, August 2023.

- ^

Population defined as all nonprofits that hold a “proper management” certification and that had submitted all required documents as of August 2023.

- ^

The sample median is presented as well, because two much larger volunteer-based organizations skew the average.

- ^

For those unfamiliar with the term: NPS = the percentage of people who would recommend your product minus the percentage of those who would not. The recommendation level is based on a scale of 1-10 (any score ≥ 9 counts as “recommend”; any score ≤ 6 counts as “would not recommend”).

- ^

For this purpose, we chose more than 30% involvement as our threshold, i.e., we do not consider ourselves a “crucial part” of the success of organizations that had a 70% or higher likelihood of achieving similar results without us.

- ^

Less than a 40% probability that they would succeed without us.