In 2023, I provided research consulting services to help AI Safety Support evaluate their organisation’s impact through a survey[1]. This post outlines a) why you might evaluate impact through a survey and b) the process I followed to do this. Reach out to me or Ready Research if you’d like more insight on this process, or are interested in collaborating on something similar.

Epistemic status

This process is based on researching impact evaluation approaches and theory of change, reviewing what other organisations do, and extensive applied academic research and research consulting experience, including with online surveys (e.g., the SCRUB study). I would not call myself an impact evaluation expert, but thought outlining my approach could still be useful for others.

Who should read this?

Individuals / organisations whose work aims to impact other people, and who want to evaluate that impact, potentially through a survey.

Examples of those who may find it useful include:

- A career coach who wants to understand their impact on coachees;

- A university group that runs fellowship programs, and wants to know whether their curriculum and delivery are resulting in desired outcomes;

- An author who has produced a blog post or article, and wants to assess how it affected key audiences.

Summary

Evaluating the impact of your work can help determine whether you’re actually doing any good, inform strategic decisions, and attract funding. Surveys are sometimes (but not always) a good way to do this.

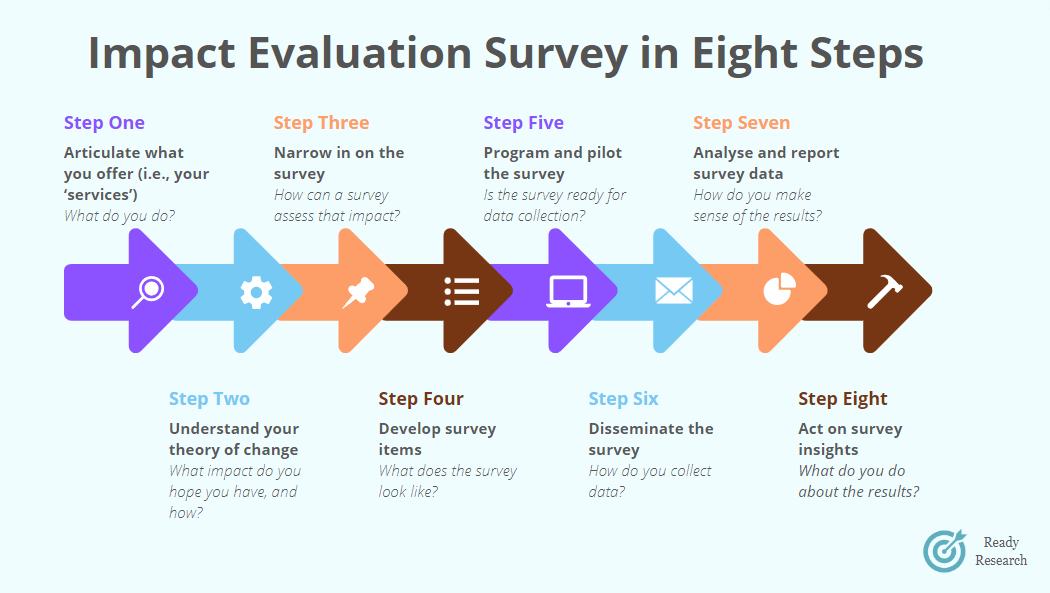

The broad steps I suggest to create an impact evaluation survey are:

- Articulate what you offer (i.e., your ‘services’): What do you do?

- Understand your theory of change: What impact do you hope it has, and how?

- Narrow in on the survey: How can a survey assess that impact?

- Develop survey items: What does the survey look like?

- Program and pilot the survey: Is the survey ready for data collection?

- Disseminate the survey: How do you collect data?

- Analyse and report survey data: How do you make sense of the results?

- Act on survey insights: What do you do about the results?

Why conduct an impact evaluation survey?

There are two components to this: 1) why evaluate impact and 2) why use a survey to do it.

Why evaluate impact?

This is pretty obvious: to determine whether you’re doing good (or, at least, not doing bad), and how much good you’re doing. Impact evaluation can be used to:

- Inform strategic decisions. Collecting data can help you decide whether doing something (e.g., delivering a talk, running a course) is worth your time, or what you should do more or less of.

- Attract funding. Being able to demonstrate (ideally good) impact to funders can strengthen applications and increase sustainability.

Impact evaluation is not just about assessing whether you’re achieving your desired outcomes. It can also involve understanding why you’re achieving those outcomes, and evaluating different aspects of your process and delivery. For example, can people access your service? Do they feel comfortable throughout the process? Do your services work the way you expect them to?

Why use a survey to evaluate impact?

There are several advantages of using surveys to evaluate impact:

- They are relatively low effort (e.g., compared to interviews);

- They can be easily replicated: you can design and program a survey that can be used many times over (either by you again, or by others);

- They can have a broad reach, and are low effort for participants to complete (which can mean more responses than higher-effort methods);

- They are structured and standardised, so it can be easier to analyse and compare data;

- They are very scalable, allowing you to collect data from hundreds or thousands of respondents at once.

Surveys are not the holy grail, and are not always appropriate. Here are just a couple of caveats:

- Surveys are not suitable for all demographics or populations (e.g., it may be hard to reach people with limited internet access);

- You may want to capture richer, qualitative data. If you are in the early stages of designing and testing your service, if the area you work in is highly unstable, or if the context is very complex, then surveys might give an artificially simplified or narrow perspective. In those cases, you may benefit from interviews, focus groups, or other kinds of evaluation methods;

- Surveys only show part of the story. They often, for example, fail to shed light on the broader landscape (e.g., who else is offering similar services);

- You can get biased responses. For example, people who had a poor experience or were less impacted may not respond. This can result in misleading conclusions about what you do.

Note that this post focuses on evaluating current services, not launching new ones. While surveys could aid decision-making for new initiatives (e.g., by polling your target audience on how useful they would find X new service), the emphasis here is on refining or enhancing existing offerings.

How to create an impact evaluation survey

In my mind, there are eight key steps to creating an impact evaluation survey[2].

Before you start, establish your intention

This process can take time, resources, and energy. To make the most of that, establish why you intend to collect data and evaluate your impact. Your intention shouldn’t just be, ‘to know if I’m having an impact’: make it more concrete. How do you see this process influencing decisions you’re trying to make? Who would do what with which information? Are there particular stakeholders or external parties that will benefit from this information (e.g., grantmakers)? Establishing this intention will help shape the whole process.

In addition to establishing your intention, consider scope and logistics. What's the time frame for this project? Which stakeholders do you need to involve so you can make sure you're capturing the right information? Who is ‘owning’ or leading this evaluation process (e.g., someone internal to the organisation, or someone external and independent)?

Step 1: Articulate what you offer (i.e., your ‘services’)

You need to understand what you offer in order to evaluate the impact of it. This step involves articulating all the types of things you do to (hopefully) create impact. I call this the ‘services’ you offer. For some people / organisations, this may be simple (e.g., “We just offer career coaching”). For others, like AI Safety Support, it may be more extensive (e.g., “We offer career coaching, but also seminars, fiscal support, and informal conversations”).

Be rigorous when listing these services. If you run events, for example, capture the different kinds of events (e.g., social events, unconferences, lectures).

Step 2: Understand the theory of change behind each service

To assess whether your services are having the desired impact, you need to know a) what impact is actually desired and b) what information you need to assess that. To do this, it’s useful to consider your assumed theory of change.

Theory of change encompasses your desired goal / outcome, and how you think you get there[3]. You can use theory of change to determine what you should do. For example, “I want to achieve X, so, working backwards, that means I should do Y and Z”. In this context though, the goal is to articulate your implied / assumed theory of change, in order to then test whether it holds up.

The basic gist is that you’re already doing a bunch of things (which you’ve articulated in Step 1), and you then think about a) why you’re doing those things and b) what information you need in order to keep doing them. To make this process more concrete, I’ll outline what I did with AI Safety Support.

With AI Safety Support, I ran a workshop where we recapped what their broader mission was, then went through each of their services and discussed three prompts:

- What’s the theory of change? What outcome do you hope the service leads to, and how do you believe it leads to that outcome? One template you could use is: “We think [service] is useful because it helps people do [outcome] by [mechanism]. Ultimately, this results in [ultimate outcome]”. For example, “We think providing career coaching is useful because it helps people apply for jobs they wouldn’t have otherwise applied for, because we encourage them to take action. Ultimately, this results in more people working on AI safety”. You may use this multiple times for a single service, if there are multiple outcomes or mechanisms.

- What evidence do you need? Looking back at the template suggested above, how do you know that you’re achieving the outcome, and that it’s by that mechanism? What would convince you that it’s worthwhile to do? For example, “We’d continue running events if we knew that people felt more connected to the community, were making new connections, and those connections were resulting in concrete outcomes (e.g., collaborations, new projects, a job)”. This may feel repetitive to Step 1, but this framing makes you focus on the concrete data you could collect.

- Are there other assumptions / uncertainties you have about this service? Is there something else you’re not sure about, like whether other organisations offer this service, or whether you’re the best fit to provide it? Are there other barriers or enablers you suspect may influence the impact? For example, “We’re not actually sure whether increasing people’s confidence to apply for jobs is important, or whether it’s more about suggesting what they could apply for”. Or, “My expertise isn’t really in this area, so I’m not sure I’m best placed to offer this”.

These prompts spark ideas on how to assess your impact. During this stage, don’t confine your responses to what could be assessed in a survey: Step 3 is where you consider survey implementation.

Step 3: Narrow in on the survey

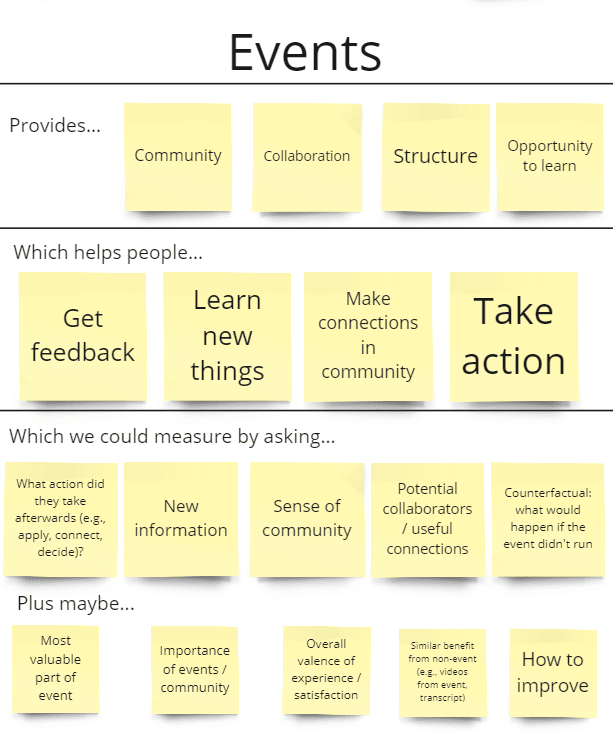

Once you’ve articulated what you do, why you do it, and what evidence you need, it’s time to consider this in the context of a survey[4]. You want to translate the information you’ve generated in Step 2 into survey ideas.

My process for doing this with AI Safety Support was as follows:

- Synthesise workshop notes (i.e., notes generated from Step 2) in Miro. This involved removing repetitive information and placing notes into a format that had more of a survey lens (i.e., ‘[Service] provides [key components of service] which helps people [do outcome] which we could measure by asking [survey ideas], plus maybe [other survey ideas]’). See Figure 2 for an example.

- Generate initial ideas for what we want to measure, based on workshop notes.

- Collate and extract other impact evaluation surveys (especially those from similar organisations), to see what others have measured and make sure we hadn’t overlooked anything.

- Combine the initial ideas and the items extracted from other surveys (the previous two points) to generate a comprehensive list of what could be measured.

- Discuss this list with AI Safety Support to understand what’s irrelevant, what’s essential, and what’s missing.

In this case, where AI Safety Support offered multiple services, the result was a list of what we wanted to ask for all services (e.g., the impact, how helpful / harmful it was, the counterfactual), plus what we wanted to ask for specific services.

Step 4: Develop survey items

Now you know the kinds of things you want to ask in your survey, you need to figure out how to ask them.

Many resources exist on developing survey items, so I’ll just highlight three tips here:

- Use existing items, where possible. This saves time, and can result in higher quality items (depending on who created them). For AI Safety Support, for example, I collated impact evaluation surveys that effective altruism-aligned organisations had run. In Miro, I extracted all relevant items from these surveys. I then grouped these items by theme, rather than organisation, so I could see how these items had been asked (e.g., many organisations asked about the biggest impact of their service, so I grouped these items together to compare the approaches).

- Translate the survey items to example findings, to check they measure what you want to measure. Concretely, I did this by writing an example finding beneath every item listed in the survey draft. One item was, ‘How helpful do / did you find [service]?’, with multiple-choice options ranging from ‘Very harmful’ to ‘Very helpful’. Beneath that, I wrote, ‘Purpose / output: E.g., “74% of people indicate that [service] is very helpful, and no participants report any services as harmful.”’ AI Safety Support then reviewed both the item wording and example findings and could say things like, “That’s a good item, because knowing something like that would help inform X strategic decision” or, “This finding sounds confusing, maybe we need to change the item wording”.

- Get feedback from a range of people. Ideally, this includes your target audience (i.e., someone who would potentially be filling in this survey in future) and people with survey expertise. Check that your items make sense, that they’re relevant to your audience, that they’re not double-barrelled, etc.
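To make the "example findings" tip more concrete, here is a minimal sketch of how an item's answers could be turned into the kind of sentence described above. It is not the code I used with AI Safety Support. The five-point scale, the function name `example_finding`, and the answers are all made up for illustration (the original item only names the two endpoints of the scale).

```python
from collections import Counter

# Hypothetical answer scale; only the two endpoints come from the original item.
SCALE = ["Very harmful", "Somewhat harmful", "Neutral", "Somewhat helpful", "Very helpful"]


def example_finding(responses, service="[service]"):
    """Turn a list of scale answers into a one-sentence example finding."""
    if not responses:
        return f"No responses yet for {service}."
    counts = Counter(responses)
    n = len(responses)
    pct_very_helpful = round(100 * counts["Very helpful"] / n)
    n_harmful = counts["Very harmful"] + counts["Somewhat harmful"]
    if n_harmful == 0:
        harm_text = "no participants report it as harmful"
    else:
        harm_text = f"{n_harmful} of {n} participants report it as harmful"
    return (
        f"{pct_very_helpful}% of people indicate that {service} is very helpful, "
        f"and {harm_text}."
    )


# Made-up answers, purely for illustration.
made_up = ["Very helpful"] * 3 + ["Somewhat helpful"]
print(example_finding(made_up, "career coaching"))
```

Writing the sentence template before any data exists is the point: if the sentence would not inform a decision, the item probably needs rewording.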

You can view a copy of the survey I developed with AI Safety Support here.

Step 5: Program and pilot the survey

Next you’ll need to determine how participants are going to complete the survey (e.g., on what platform), prepare the survey so it can be completed (e.g., program it), then perform final checks (e.g., pilot it).

Programming the survey

There are many platforms where you could host your survey, which I won’t summarise here[5]. Some considerations when choosing a platform include:

- Budget. Can you afford to pay for a platform? Free options exist but, as usual, paid options can accommodate more complexity.

- Complexity of survey. Are there two questions in your survey, or 50? What types of questions do you require (e.g., multi-select, matrices, sliders)? Do you need to use looping or embedded logic? Some platforms accommodate more complexity, especially if they’re paid.

- Anticipated response number. How many responses do you think you’ll get? You might find a free platform that accommodates your complexity, but has limits on response numbers (e.g., 100 responses / month).

Piloting the survey

Piloting your survey involves testing it before you distribute it. It’s most useful to do this once it’s programmed on the platform you’re using. The people you get to pilot it may include yourself, colleagues, and your target audience. Always indicate what people should be looking for when piloting. Examples include:

- How long is the survey? Keep in mind that to estimate this, people will need to move through the survey like a participant. This means they ideally shouldn’t be also paying attention to spelling errors, logic, etc. because this will impact the time estimate.

- Do questions make sense? Do people understand what you’re asking, and are the provided options comprehensive?

- Errors with logic. If you’re using survey logic (e.g., “only people who select this option will be shown that question”), you need to check that it’s functioning properly.

- Grammar and spelling.
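For the logic check in particular, it can help to write down the paths you expect before clicking through the survey, then compare them with what the platform actually shows. Below is a rough sketch of that idea. The question names, the rules, and the function `questions_shown` are hypothetical, and this does not reproduce any survey platform's own logic engine.

```python
# Hypothetical display rules: question id -> (controlling question id, answer that triggers display).
# Questions without a rule are always shown.
DISPLAY_RULES = {
    "coaching_helpfulness": ("services_used", "Career coaching"),
    "event_helpfulness": ("services_used", "Events"),
}

# Hypothetical order in which questions appear in the survey.
QUESTION_ORDER = [
    "services_used",
    "coaching_helpfulness",
    "event_helpfulness",
    "other_comments",
]


def questions_shown(answers):
    """Return the questions a participant should see, given their multi-select answers."""
    shown = []
    for question in QUESTION_ORDER:
        rule = DISPLAY_RULES.get(question)
        if rule is None:
            shown.append(question)  # no rule: always displayed
            continue
        controller, trigger = rule
        if trigger in answers.get(controller, []):
            shown.append(question)
    return shown


# Expected path for a made-up participant who only attended events.
print(questions_shown({"services_used": ["Events"]}))
```

A pilot tester can then be given one made-up participant per path and asked to confirm that the questions they see match the expected list.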

When asking others to pilot the survey, I will either a) send a Google document for them to note feedback (see my template here) or b) offer to go through the survey with them on Zoom, so they can provide verbal feedback in real time (this is especially useful for time-poor people).

Step 6: Disseminate the survey to participants

There’s no point to this survey if no one ever fills it out. How are you going to collect responses? Will it be immediately after your event or call, or in monthly batches? Do you want to follow up with participants longer term?

For AI Safety Support, we decided on two recruitment methods:

- Weekly recruitment of people who had recently engaged with AI Safety Support (e.g., received career coaching or been to an event);

- Annual recruitment of anyone who may have engaged with AI Safety Support in the past year (which also collects longer-term data on those targeted via weekly recruitment).

For each approach, we discussed the purpose and how we were going to reach those people. For example, I developed a list of places the survey would be advertised in the annual recruitment, in addition to template emails, forum posts, and social media posts.

Note that how you disseminate the survey may impact the survey itself, so this needs to be considered throughout the development process. For example, if you want the survey to collect feedback both immediately after the service, and one year later, you’ll need a survey item that collects data on when participants are completing it.
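As a small illustration of why that timing item matters, here is a sketch of how responses could be split into "immediate" and "follow-up" groups at analysis time. The function name `timing_group` and the 180-day cut-off are assumptions for the example, not something we settled on with AI Safety Support.

```python
from datetime import date


def timing_group(service_date, completed_date, followup_after_days=180):
    """Label a response by how long after the service it was completed.

    The 180-day default is an arbitrary, hypothetical cut-off.
    """
    days = (completed_date - service_date).days
    if days < 0:
        raise ValueError("completion date is before the service date")
    return "follow-up" if days >= followup_after_days else "immediate"


# Made-up dates, purely for illustration.
print(timing_group(date(2023, 1, 10), date(2023, 1, 12)))
print(timing_group(date(2023, 1, 10), date(2024, 1, 10)))
```

Without an item (or platform timestamp) that captures when the service happened and when the survey was completed, this split cannot be made after the fact.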

You can view the dissemination strategy I developed with AI Safety Support here.

Step 7: Analyse and report survey data

You have the data; now it’s time to understand it, derive actionable insights, and communicate these to various stakeholders. Key considerations include:

- Frequency of analysis. Is this a once-off survey and analysis, or are you going to repeat it regularly (e.g., annually)?

- Making replication easier. If you will be repeating the survey and analysis, consider how you can save time in future. If you’re able, you could develop code that can be run every time you have new data or are at a decision point. For example, with AI Safety Support I developed code in R Markdown which generates an updated report when you read in the most recent data.

- Who is the user? Before you start your analysis or report, refresh yourself on who is using the outputs. How you analyse the data or what you produce will be different, depending on whether it's just for your internal decision-making or for broader external communication (e.g., a post on the forum). For example, perhaps you highlight different findings, depending on the audience.

- Transparent communication. Always consider how you can communicate findings in a way that doesn't oversell them, and that conveys the limitations. For example, highlighting if you have a small sample size, or when a change may not be significant or meaningful.
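To give a feel for what "code that can be run every time you have new data" might look like, here is a minimal sketch. It is written in Python rather than R Markdown, and it is not the report I built for AI Safety Support. The column name `helpfulness`, the function `summarise`, and the sample export are all made up.

```python
import csv
import io
from collections import Counter


def summarise(csv_file, column="helpfulness"):
    """Build a plain-text summary of one column from an open CSV export."""
    rows = list(csv.DictReader(csv_file))
    # Skip blank answers so unanswered items do not count as a response option.
    answers = [row[column] for row in rows if row.get(column)]
    n = len(answers)
    lines = [f"Responses to '{column}': {n}"]
    for answer, count in Counter(answers).most_common():
        lines.append(f"- {answer}: {count} ({100 * count / n:.0f}%)")
    return "\n".join(lines)


# Made-up export standing in for the latest download from a survey platform.
sample_export = io.StringIO(
    "respondent,helpfulness\n"
    "1,Very helpful\n"
    "2,Very helpful\n"
    "3,Somewhat helpful\n"
    "4,\n"
)
report = summarise(sample_export)
print(report)
```

In practice you would pass a file opened with `open("latest_export.csv", newline="")` (a hypothetical file name) instead of the in-memory sample, and rerun the same script whenever a new export arrives.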

Step 8: Act on survey insights

Lastly, what actions will you take based on your results? This should link back with your reflections at the start on why you were doing a survey, and who would do what with the outputs.

Your results may suggest that you prioritise or de-prioritise specific services, or change the way you provide them. They may indicate that you should focus on particular audiences, or that you need to improve how you communicate about certain topics. They may provide information you can use to support future grant applications.

There are always caveats to your outputs, and it’s unlikely that your survey data is painting the full picture. Your results are less reliable if fewer people fill in the survey. They are influenced by the kind of person filling in the survey (e.g., people may be more likely to respond if they had a really good experience). You may benefit from additional information to aid your decision-making (e.g., interviews with people, feedback from key stakeholders). Plus, it’s always useful to consider the counterfactual – what would happen if you stopped doing what you’re doing (e.g., is there someone else / another organisation that people will go to?).
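One way to see how much a small sample limits what you can say is to put an interval around a percentage. The sketch below uses the Wilson score interval, which is my choice for illustration rather than something from the original project, and the counts are made up. It assumes respondents are a random sample of the people you served, so it does not correct for the response bias described above.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (z=1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= successes <= n:
        raise ValueError("successes must be between 0 and n")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Made-up counts: the same 75% "very helpful" rate with 20 vs 200 respondents.
small_low, small_high = wilson_interval(15, 20)
large_low, large_high = wilson_interval(150, 200)
print(f"n=20:  {small_low:.2f} to {small_high:.2f}")
print(f"n=200: {large_low:.2f} to {large_high:.2f}")
```

The same headline percentage comes with a much wider range of plausible values when only 20 people respond, which is worth stating alongside the finding.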

There you have it: a beginner’s guide to impact evaluation surveys. If you want more detail about this process, please feel free to reach out to me or Ready Research.

Resources

Here are some resources from my work with AI Safety Support, which could be used as templates:

- The impact evaluation survey in a Google document;

- The survey dissemination strategy;

- The survey pilot feedback template.

Acknowledgements

Thank you to AI Safety Support for being a fantastic client to work with throughout this process. Thanks also to Alexander Saeri and Megan Weier for their valuable feedback on this post.

Funding disclosure

I was funded by the EA Infrastructure Fund to conduct the original project for AI Safety Support, but this writeup was not funded.

[1] AI Safety Support shut down operations in 2023, for reasons unrelated to this project.

[2] The scope of my work with AI Safety Support involved completing steps 1-5, then assisting with steps 6-7 (e.g., creating a detailed plan for dissemination, and developing R code to analyse data when it eventually came in).

[3] Michael Aird’s workshop provides a good overview of theory of change.

[4] Implied within this stage is the critical consideration of whether a survey is an appropriate choice for your evaluation. Consider the audience you’re trying to reach, the depth of feedback you’re seeking, possible alternatives, and the resources you have to design, implement, and analyse a survey.

[5] My personal preference, if you have access to a paid account (e.g., through a university) or can fund one, is Qualtrics.
