Summary: This article documents my current thoughts on how to make the most out of my experiment with earning to give. It draws together a number of texts by other authors that have influenced my thinking and adds some more ideas of my own for a bundle of heuristics that I currently use to make donation decisions. I hope the article will make my thinking easier to critique, will help people make prioritization decisions, will inspire further research into the phenomena that puzzle me, and will allow people to link the right books or papers to me.

For the latest and complete version with better formatting, please see my blog.


Introduction

Content note: This article is written for an audience of people familiar with cause prioritization and other important effective altruism concepts and who have considered arguments for the importance of long-term impact. Others may find it confusing unless they first get their bearings by reading books such as Doing Good Better and Superintelligence.

I’m aware of many arguments for the great importance of long-term strategic planning, but when “long-term” refers to millennia and more, it becomes unintuitive that we should be able to influence it today with anything but minuscule probability. I’m hoping to avoid having to base my work on Pascal’s Mugging–type scenarios, where enormous upsides compensate for minuscule probabilities to yield a still-high expected value. So I need to assess when, in what cases, or for how long we can influence the long term significantly and with macroscopic probabilities.

Then I need heuristics to be able to compare, very tentatively, interventions that can’t rely on feedback cycles at all or can rely only on feedback cycles around unreliable proxy measures.

Finally, I would like to be able to weigh assured short-term impact (in the sense of “a few centuries at best”) against this long-term impact and develop better heuristics for understanding today which strategies are more likely or unlikely to have long-term impact.

Reservations

- The heuristics I’m using are not yet part of some larger model that would make it apparent when there are gaps in the reasoning. So there probably are huge gaps in the reasoning.

- In particular, I’ve come up with or heard about the heuristics during a time when I got increasingly excited about Wild Animal Suffering Research, so I may have inadvertently selected them for relevance to the intervention or even for being favorable to it.1

- I’m completely ignoring considerations related to infinity, the simulation hypothesis, and faster-than-light communication or travel for now. The simulation hypothesis in particular may be an argument for why even slight risk aversion or a slight quantization of probability can lead to a greater focus on the short term.

Comparing Causes

The importance, neglectedness, tractability (INT) framework (or scale, crowdedness, solvability) provides good guidance for comparing problems (also referred to as causes). It can be augmented with heuristics that make it easier to assess the three factors.

Upward Trajectories

One addition is a heuristic or consideration for assessing scale (and plausibly tractability) that occurred to me and that seems to be very important provided that we are able to make our influence felt over sufficiently long timescales.

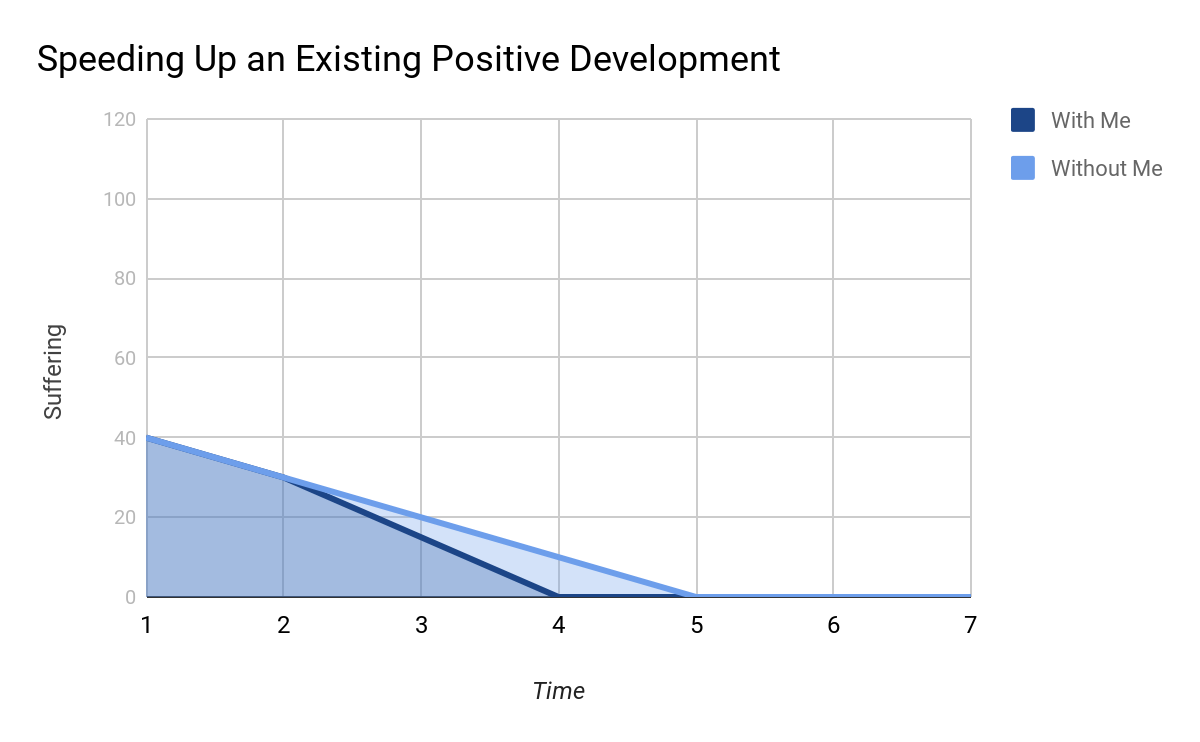

I care primarily about reducing suffering (in the broad sense of suffering-focused ethics, not specifically negative utilitarianism),2 so I want my trajectories to point downward. Suffering of humans from poverty-related diseases has long been going down. If I were to become active in that area and succeed quite incredibly, the result may look like the chart below.

Here the sum of the two darker areas is the counterfactual suffering over time and the lighter area is the difference that I’ve made compared to the counterfactual without me.

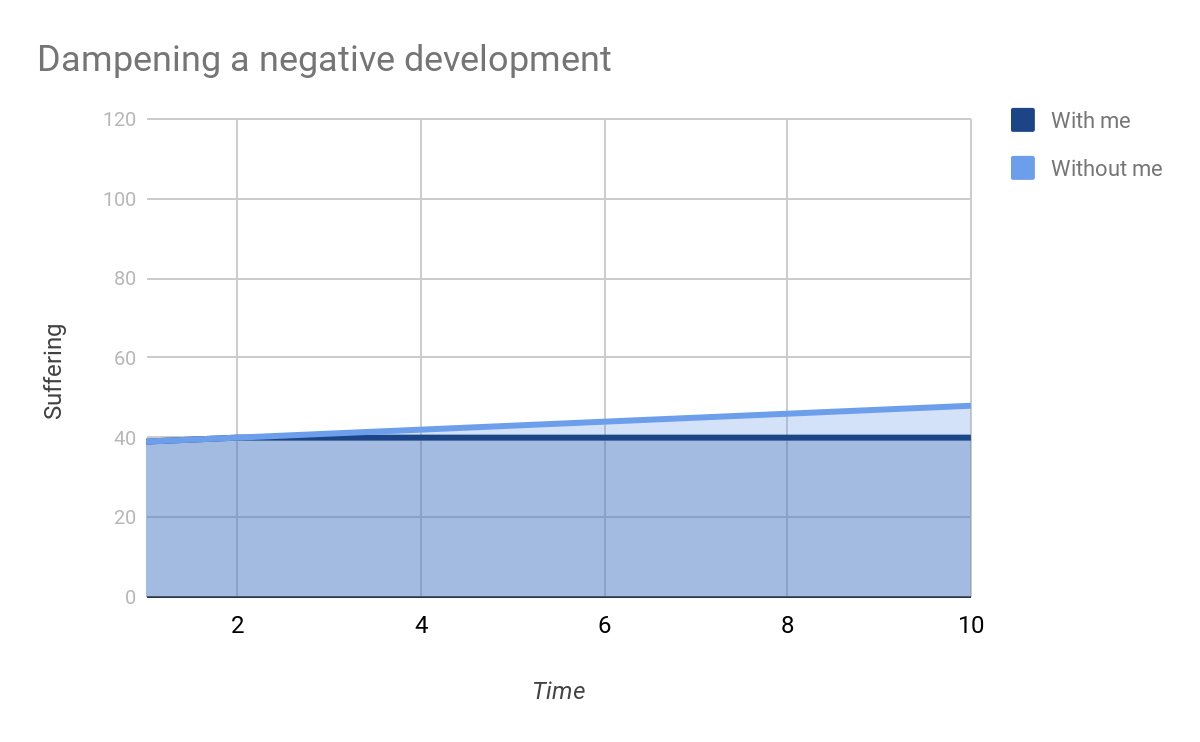

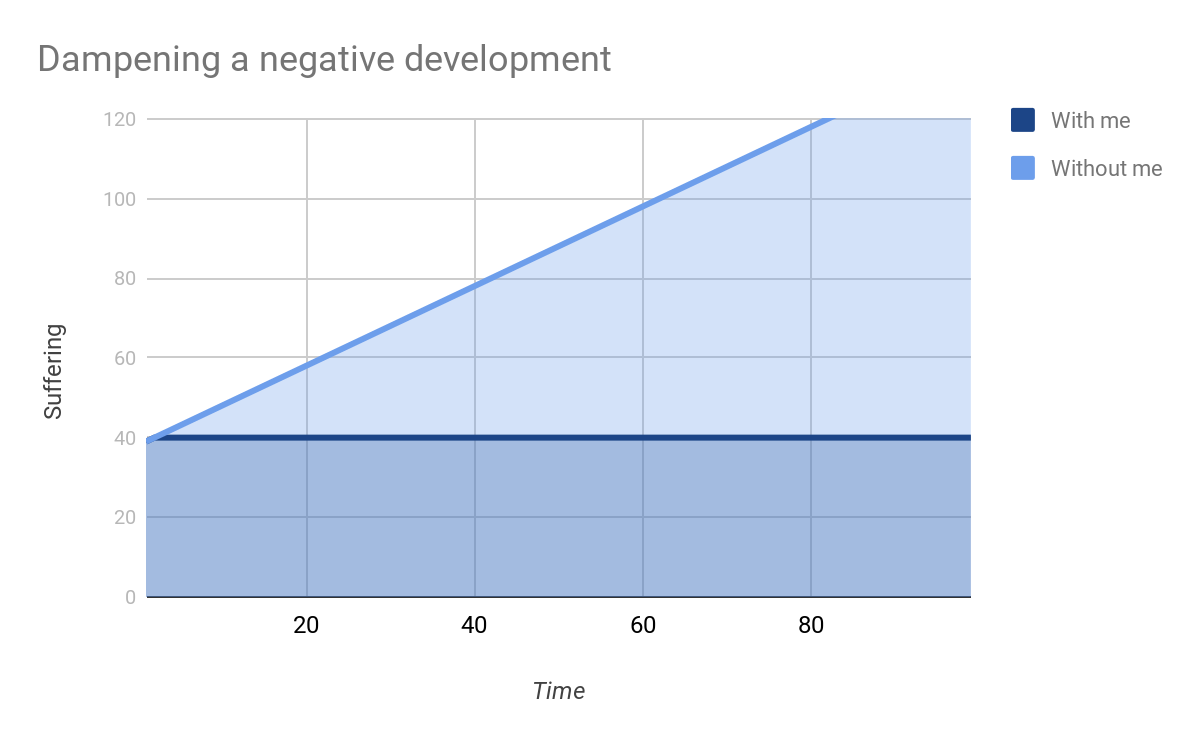

But consider the case where I pick out something that I think has an increasing trajectory:

When we zoom out,3 this starts to look really big:

In this simplified model, the impact of interventions that can, and conceivably will, solve the issue they’re addressing is capped once the trajectory intersects y = 0, even if our influence is durable enough to last beyond that point.

This is a heuristic of scale and tractability. It is straightforward that the scale of a problem that is nigh infinite over all time is greater than that of a problem that is finite.

In practice, we will probably see an asymptotic approach toward the x axis as the cost-effectiveness of more work in the area drops further and further. This is where tractability comes in. As you approach zero disutility within some reference class, there is usually some decreasing marginal cost-effectiveness as the remaining sources of disutility are increasingly tenacious ones. If our influence is still felt at that point, it’ll rapidly become less important, something that does not happen (and maybe even the opposite4) in the case of interventions that try to dampen the exacerbation of a problem. So if I have the chance to affect some trajectory by 1° in a suffering-reducing direction, then I’d rather affect an upward trajectory than a downward one, even if the upward trajectory will at most become constant afterwards.5
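The simplified model can be made concrete with a minimal sketch. It assumes, purely for illustration, linear trajectories, an intervention that reduces the slope by a hypothetical `tilt` from t = 0 onward (a stand-in for the “1°”), suffering that cannot drop below zero, and influence that lasts the whole horizon. All numbers are made up.

```python
def averted_suffering(slope, tilt, start=100.0, horizon=1_000.0, steps=100_000):
    """Area between the counterfactual and the tilted trajectory on [0, horizon].

    Counterfactual suffering: max(0, start + slope * t)
    With the intervention:    max(0, start + (slope - tilt) * t)
    Suffering cannot go below zero, so a downward trajectory stops
    contributing once both curves have hit y = 0. Midpoint rule.
    """
    dt = horizon / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt
        counterfactual = max(0.0, start + slope * t)
        tilted = max(0.0, start + (slope - tilt) * t)
        total += (counterfactual - tilted) * dt
    return total

# Hypothetical numbers: same starting level, same small tilt, opposite slopes.
downward = averted_suffering(slope=-0.5, tilt=0.05)  # capped near 909
upward = averted_suffering(slope=0.5, tilt=0.05)     # 25,000 and still growing
```

With these numbers the downward trajectory yields about 909 units of averted suffering however long the horizon, while the upward one yields 25,000 over 1,000 time units and keeps growing quadratically with the horizon.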

Some EAs have complained that they set out to make things better and now they’re just trying to prevent them from getting much worse. But perhaps that’s just the more impactful strategy.

Urgency

I’ve often seen urgency considered, but not as part of the INT framework. I think it is a heuristic of tractability. When there is an event that is important to prevent, and the event is of such a nature that preventing it is all-important but there’s nothing left to do if and once it happens, then all the leverage we have over the value of the whole future is condensed into the time leading up to the potential event.

Survival analysis is relevant to this problem, because there are two cases: one where the event happens and nothing further can be done (e.g., some suffering-intense singleton emerges and continues throughout the future – a suffering risk or s-risk) and one where the event doesn’t happen but may, as a result, still happen just a little later.

There is some survival function that describes our chances of survival beyond some point in time. It may be some form of exponential decay function. These have a mean, the expected survival duration, which may serve as a better point for comparisons of urgency than the earliest catastrophic event.
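For a nonnegative survival time T, the mean equals the area under the survival function, E[T] = ∫₀^∞ S(t) dt. A minimal sketch, assuming a constant and entirely hypothetical hazard rate λ so that S(t) = e^(−λt), confirms numerically that the mean is 1/λ; the median, ln 2/λ, is shown for comparison.

```python
import math

HAZARD_PER_YEAR = 0.01  # hypothetical constant hazard rate (lambda)

def survival(t):
    """Exponential survival function S(t) = exp(-lambda * t)."""
    return math.exp(-HAZARD_PER_YEAR * t)

def mean_survival_time(survival_fn, t_max, steps=200_000):
    """E[T] as the area under S(t), truncated at t_max (midpoint rule)."""
    dt = t_max / steps
    return sum(survival_fn((i + 0.5) * dt) for i in range(steps)) * dt

mean = mean_survival_time(survival, t_max=5_000)   # close to 1 / 0.01 = 100
median = math.log(2) / HAZARD_PER_YEAR             # about 69.3
```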

If we wait so long to start working on preventing catastrophes that, by the time we start, the expected duration of our influence exceeds the expected time we have left until the catastrophe, then it seems to me that we have waited too long. Conversely, if our influence is expected to end before our demise, it might be better to first focus on either more urgent causes or causes that don’t have such a strong event character. But first we’ll need to learn more about the likely shape of the decay function of our influence.
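The comparison can be sketched under the simplest assumption: both the catastrophe and the end of our influence arrive as independent exponential (memoryless) events with hypothetical constant rates. The expected times are then the reciprocals of the rates, and the probability that the catastrophe comes first is the standard competing-risks result λ_c / (λ_c + λ_i).

```python
def compare_clocks(catastrophe_rate, influence_decay_rate):
    """Compare two independent exponential 'clocks' (rates per year).

    Returns the expected time until the catastrophe, the expected duration
    of our influence, and the probability that the catastrophe arrives
    while our influence is still felt.
    """
    expected_survival = 1 / catastrophe_rate
    expected_influence = 1 / influence_decay_rate
    p_catastrophe_first = catastrophe_rate / (catastrophe_rate + influence_decay_rate)
    return expected_survival, expected_influence, p_catastrophe_first

# Hypothetical: catastrophe expected in 200 years, influence lasting 50 years.
survival_years, influence_years, p_first = compare_clocks(1 / 200, 1 / 50)
too_late = influence_years > survival_years  # the "waited too long" condition
```

With these made-up rates the influence is expected to end well before the catastrophe, which by the rule of thumb above would point toward more urgent causes first; the catastrophe would arrive while the influence is still felt with probability 0.2.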

Further Research

It would also be interesting to investigate whether we can convert the survival function for an s-risk-type catastrophe6 into an expected suffering distribution, which we could then compare against expected suffering distributions from suffering risks that don’t have event character. The process may be akin to multiplying the failure rate with the suffering in the case of the catastrophe, but since the failure rate is not a probability distribution, I don’t think it’s quite that easy.
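One possible way to do the conversion, sketched under strong simplifying assumptions: the catastrophe is a one-off event with an exponential survival function, and from its onset onward it produces suffering at a constant rate (both numbers hypothetical). The probability density of the event time is the failure rate times the survival function, f(t) = h(t)·S(t), and integrating it gives the probability 1 − S(t) that the catastrophe has happened by time t. Multiplying that by the suffering rate gives an expected suffering rate over time. The sketch computes it both ways as a consistency check.

```python
import math

HAZARD = 0.01          # hypothetical catastrophe rate per year
SUFFERING_RATE = 1.0   # hypothetical suffering per year once it has happened

def survival(t):
    return math.exp(-HAZARD * t)

def event_density(t):
    # Failure rate times survival: f(t) = h(t) * S(t), a proper density.
    return HAZARD * survival(t)

def expected_suffering_rate(t):
    # P(catastrophe has happened by t) times the post-catastrophe rate.
    return (1 - survival(t)) * SUFFERING_RATE

def expected_suffering_rate_by_density(t, steps=10_000):
    # Same quantity, integrating the density over all onset times <= t.
    dt = t / steps
    return sum(event_density((i + 0.5) * dt) for i in range(steps)) * dt * SUFFERING_RATE

def expected_cumulative_suffering(horizon, steps=10_000):
    # Expected suffering accumulated over [0, horizon] (midpoint rule).
    dt = horizon / steps
    return sum(expected_suffering_rate((i + 0.5) * dt) for i in range(steps)) * dt
```

The resulting curve, or its cumulative version, could then be set side by side with expected suffering curves from risks without event character.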

Interventions

Some of my considerations for comparing interventions are less well established than the scale, tractability, neglectedness framework for problems. Michael Dickens proposed a tentative framework for assessing the effectiveness of interventions, but it is somewhat dependent on the presence of feedback loops. Apart from that, however, it makes the important contribution of considering the strength of the evidence in addition to what the evidence says about the expected marginal impact.

I will propose a few heuristics other than historical impact for evaluating interventions. In each case it would be valuable not only to count the heuristic as satisfied or not but also to multiply in one’s confidence in that verdict. Michael Dickens, for example, generated likely background distributions of intervention cost-effectiveness to adjust his confidence in any particular estimate accordingly.
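The general idea behind such an adjustment can be sketched as follows; this is not Dickens’s actual model, which may differ, but a generic version assuming that both the background (prior) distribution of cost-effectiveness and the noise in a particular estimate are lognormal. In log space the update is then the standard normal-normal conjugate one: the posterior mean is the precision-weighted average of the prior mean and the logged estimate.

```python
import math

def adjusted_log_estimate(prior_mu, prior_sigma, estimate, estimate_sigma):
    """Shrink a noisy cost-effectiveness estimate toward a background prior.

    Prior over log(cost-effectiveness): Normal(prior_mu, prior_sigma).
    log(estimate) is observed with Gaussian noise of sd estimate_sigma.
    Returns the posterior mean and standard deviation of the log.
    """
    prior_precision = 1 / prior_sigma ** 2
    estimate_precision = 1 / estimate_sigma ** 2
    post_precision = prior_precision + estimate_precision
    post_mu = (prior_precision * prior_mu
               + estimate_precision * math.log(estimate)) / post_precision
    return post_mu, math.sqrt(1 / post_precision)

# Hypothetical numbers: background median of 1 unit of impact per dollar,
# an estimate of 100 units per dollar, and equal uncertainty on both.
mu, sigma = adjusted_log_estimate(prior_mu=0.0, prior_sigma=1.0,
                                  estimate=100.0, estimate_sigma=1.0)
adjusted_median = math.exp(mu)  # 10: halfway between 1 and 100 in log space
```

A less reliable estimate (larger `estimate_sigma`) gets pulled further toward the prior, which is one way of multiplying in confidence.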

Feedback Loops

Confusingly, I’m listing feedback loops as one of the heuristics. But I don’t mean it in the sense that we should necessarily draw on historical evidence when evaluating the cost-effectiveness of an intervention (we should do so whenever possible, of course) but that the presence of feedback loops that are short enough will be very valuable for the people running the intervention. So if there’s a chance that they can draw on them, it’s a big plus for the intervention, enabling iterative improvements and reducing the risk of updating aimlessly on mere noise.

Good Proxies

We can almost never directly measure the outcomes we care about most but almost always have to make do with proxies, so the reliability of these proxies is an important consideration.

Durability

There are two failure modes here: the desired change may be reversed at some point in the future, or it may have happened eventually anyway. For example, vegan and vegetarian societies have not been stable in the past, and valuable research may get done in academia only a little later than at an EA-funded institute.7 Another failure mode for many interventions is that some form of singleton may take control of all of the future, so that we can influence the future only by proxy, through influencing which singleton emerges.

I can see three possible futures at the moment: (1) the emergence of a singleton, a wildcard for now, (2) complete annihilation of sentient or just intelligent life, or (3) space colonization. I’ll mention in the following why I’m conflating some other scenarios into these three. (This is heavily informed by Lukas Gloor’s “Cause Prioritization for Downside-Focused Value Systems.”)

Singleton

I’ve heard opinions that implied that a permanent singleton is possible and others that leaned toward thinking that value preservation under self-improvement is so hard that value drift is inevitable. Perhaps this path will enable us to create a wonderful populated utopia, or attempts at it might lead to “near misses [that] end particularly badly.” Improving our strategy for preparing for this future seems very valuable to me.

For our ability to influence the future through means other than the singleton itself, a seeming singleton that turns out to be unstable after a while may be as deleterious as an actual (permanent) singleton. Artificial general intelligence (AGI) may turn any sufficiently technologically advanced state that is not a singleton into a distractor state, in which case many of my thoughts in this text are moot. So this case is crucial to consider.

Extinction

In the cases of human extinction or extinction of all life, it would be interesting to estimate the expected time it’ll take for another at least similarly intelligent species to emerge. Turchin and Denkenberger have attempted this, yielding a result on the order of 100 million years. Such a long delay may significantly reduce the maximal disvalue (and also value, for less suffering-focussed value systems) of space colonization because resources will be more thinly spread (more time-consuming to reach) throughout the greatly expanded universe, among other factors.8 However, space colonization may still happen relatively quickly if there are more species within our reachable universe who are also just some millennia away from starting to colonize space.9

But Lukas Gloor thinks “that large-scale catastrophes related to biorisk or nuclear war are quite likely (~80–90%) to merely delay space colonization in expectation,” with AGI misalignment and nanotechnology posing risks of a different category.10 So a wide range of seeming extinction risks may allow our civilization to recover eventually, at which point the future will still hold these three options.

Space Colonization

Even if this repeats many times, it is likely that eventually one of these civilizations will either go completely extinct, form a singleton, or colonize space. The third option would eliminate many classes of extinction risks, the ones that are only as severe as they are because we’re physically clustered on only one planet. Extinction risks that only depend on communication will remain for much longer.

Risks from artificial intelligence will probably only depend on communication,11 so space colonization can only happen if it turns out that artificial intelligence can be controlled permanently. This surely makes this scenario less likely than the previous two. (Or more precisely, though such precise language may imply a level of confidence I don’t have, less than one third of the probability mass of the future may fall on this scenario.) Perhaps, though, we have a greater chance of influencing the outcome here, or influencing it may require different skill sets than influencing artificial intelligence does.

But while humans may look out for one another, we have a worse track record when it comes to those who are slightly outside our culture (or involved in it only in a unidirectional way, where they can’t make demands of their own in “trades” with us). Michael Dello-Iacovo considers that insects may be spread to other planets (or human habitats in space) as a food source or even by accident. Eventually, farmed animals may follow, and they may return to being wild animals even when animal farming becomes obsolete due to such technologies as cultured meat. People may even spread wild animals for aesthetic reasons. Finally, the expected capacity of bacteria for disutility may be small, but large numbers of them play vital roles in some proposed strategies for terraforming planets.

So the graphs in “Upward Trajectories” are inaccurate in the important way that suffering is likely to expand spherically as some cubic polynomial of the radius from our solar system – not linearly.12

If we want to influence a future of space colonization positively, then it is all-important to make sure that our influence survives to the start of the space colonization era. (I will qualify this further in “Reaching the Next Solar System” below.) Then, longer survival of the influence becomes rapidly less important: Communities will likely cluster because of the lightspeed limit on communication latency,13 and if one of these communities loses our influence or would’ve reaped its benefits even without us, it’ll carry this disvalue or opportunity cost outward only into an increasingly narrow cone of uncolonized space.

Strategic Robustness

I specify strategic robustness because I’ll later introduce something I call ethical robustness; strategic robustness is simply what others mean when they say “robustness.” It unfortunately overlaps with what I called durability, but I try to disentangle the two by using robust to refer to strategies that are beneficial across many different possible futures, while I use durable to refer to strategies that have a chance to survive until we colonize space, if it should come to that. Insofar as the second is included in the first, it is a small (but important) part of the concept, so I hope not to double-count too much.

Fundamentality

In The Bulk of the Impact Iceberg, I argue that any interventions that observably produce the impact we care about rest on a foundation of endless amounts of preparatory work that is often forgotten by the time the impact gets generated by the final brick intervention. Some of this preparatory work may not get done automatically. This suggests that highly effective final brick interventions are few compared to equally or more effective preparatory interventions.

A particularly interesting question here is whether there are heuristics for determining where the most important bottlenecks are in these trees of preparatory work, because that is where we should invest most heavily. Studying advertising may be helpful for noticing and testing such heuristics. (More on that in the above-linked article.)

I particularly highlight (and use as one such heuristic for now) research as a highly effective intervention, because, if it is done right and studies something that is sufficiently plausible, it is valuable whether it succeeds or seemingly fails.

Value of Information

Feedback loops generate valuable information to improve an intervention incrementally. In this context of robustness, however, I’m referring to information that benefits not only the very intervention that generates it but others more widely. People working in the area of human rights in North Korea, for example, may not be working on the most large-scale problem there is, and tractability and neglectedness may not make up for that, but they may gain skills in influencing politics and gain insights into how to avoid nigh-singletons like North Korea in the future.

Instrumental Convergence

Anything that we have already determined to be instrumentally convergent – i.e. something that a wide range of agents are likely to do no matter their ultimate goals – is convergent for reasons of its robustness. So all else equal, an intervention toward a convergent goal is a robust intervention. Gathering knowledge, intelligence, and power are examples of such robust capacity building.

Option Value

Things that destroy option value are bad (all else equal):

- If you work on some intervention for a decade and then find that it is not the best investment of your time, it’s best if the work still helped you build transferable skills and contacts, which you’ll continue to profit from.

- If you never defect against other value systems even when it would be useful to you, you don’t lose the option to continue cooperating with them or to intensify your cooperation.

- If you remain neutral or low-profile, you retain the ability to cooperate with a wide range of agents and can avoid controversy and opposition.

- If you avoid making powerful enemies, you can minimize risks to yourself or your organization. The individual or the organization is often a point of particular fragility in an intervention so long as relatively few people are working on it or they are fairly concentrated. (The failure of an organization, be it for completely circumstantial reasons, may also discourage others from founding another similar organization.)

Relatedly, something that I like to call “control” is useful because it implies higher option value: If you can change course quickly and at a low cost, you have more control than if you could do the same but at a somewhat higher cost or more slowly.

Backfiring

Finally, robustness is nil if the risk of very bad counterfactually significant outcomes is too high.

Counterfactual of Funding

Valuable work that is funded by money or time that would otherwise have benefited almost equally valuable work is not terribly valuable counterfactually. Resource constraints are rarely so clear-cut, so we may never know whether it was great, good, eh,14 or bad for Rob Wiblin to switch to 80,000 Hours, but if you can achieve the same thing whether you’re funded by EAs or by a VC, then which of the two funds you will likely make a big difference.

Supportability

Even a problem whose solution is tractable may have an intervention addressing it that is hard to support, e.g., because you’d have to have a very particular skill set or the intervention is so far only a hypothetical one.

Cooperation

No two people have completely identical values, and often values differ significantly. Fighting it out in a battle of force or wits has a rather flat expected utility that depends on the actors’ relative strengths, while cooperation lets them find Pareto-efficient points:

- Depending on the shape of the frontier, this can be an extremely important consideration even for a risk-neutral agent with a single value system:

- To use the example from the article linked above, a special deep ecologist may care equally about 8e6 species totalling 2e18 individuals or the same number of species totalling radically more individuals. If most of these individuals suffer badly enough throughout their lives, a suffering reducer may greatly prefer the relatively smaller number. A trade would be very valuable for the suffering reducer – if they’re roughly equally powerful, then many times more than a battle in expectation.

- But it is also very decisive for me because I empathize with many value systems, so I want to do the things that are good or neutral for all of them – what I refer to as ethical robustness.

- Even if the frontier is such that the gains from trade are minor, a zero-sum game may look more like a battle than a race, so that time is irreversibly lost, time that could be used to avert suffering risks or preserve important suffering-reducing knowledge across existential catastrophes.

- More speculatively, insofar as our decisions can be correlated with those of sufficiently similar agents who yet have different moral goals, we can determine the results of the different instantiations of the decision algorithm that we share with them and thus get them to cooperate with our moral system too.
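To illustrate why a Pareto-efficient compromise can beat a battle in expectation for both sides, here is a toy model with entirely hypothetical payoffs over three options, loosely shaped like the deep-ecology example: agent B is indifferent between options Y and Z, which agent A values very differently. In a battle, each side wins with a probability set by its relative strength and implements its favourite option. For the trade, the sketch uses the Nash bargaining solution restricted to the listed options, which is one standard way to pick a point on the Pareto frontier, not the only one.

```python
# Hypothetical payoffs: option -> (utility to agent A, utility to agent B).
OPTIONS = {
    "X": (0.0, 0.0),     # A's favourite, worthless to B
    "Y": (-1.0, 1.0),    # mildly bad for A, as good as Z for B
    "Z": (-100.0, 1.0),  # very bad for A; B is indifferent between Y and Z
}

def favourite_outcome(options, agent):
    """Expected payoffs if `agent` (0 = A, 1 = B) wins and picks its favourite;
    ties among favourites are broken uniformly at random."""
    best = max(payoffs[agent] for payoffs in options.values())
    favourites = [p for p in options.values() if p[agent] == best]
    return tuple(sum(p[i] for p in favourites) / len(favourites) for i in (0, 1))

def battle_payoffs(options, p_a_wins, cost=0.0):
    """Expected payoffs of fighting, minus a fixed cost of fighting for each side."""
    a_wins = favourite_outcome(options, 0)
    b_wins = favourite_outcome(options, 1)
    return tuple(p_a_wins * a_wins[i] + (1 - p_a_wins) * b_wins[i] - cost
                 for i in (0, 1))

def nash_bargain(options, disagreement):
    """Option maximizing the product of both gains over the disagreement point,
    among options that leave both agents strictly better off."""
    gains = {name: (p[0] - disagreement[0], p[1] - disagreement[1])
             for name, p in options.items()}
    feasible = {n: g for n, g in gains.items() if g[0] > 0 and g[1] > 0}
    if not feasible:
        return None
    return max(feasible, key=lambda n: feasible[n][0] * feasible[n][1])

battle = battle_payoffs(OPTIONS, p_a_wins=0.5)
deal = nash_bargain(OPTIONS, battle)
```

With equal strengths, agent A’s expected payoff rises from −25.25 in the battle to −1 under the trade (option Y), and agent B’s from 0.5 to 1.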

Comparative Advantage

Agents with strong commitments to cooperation will still face hurdles to achieving that cooperation because they may not be able to communicate efficiently. They can apply heuristics such as splitting their investments (see the “Coordination Issues” section in the linked article) into shared projects equally or focussing on the issues that they know few others can focus on, thus capitalizing on their comparative advantage.

Altruists at government institutions or many foundations may face stronger requirements to make a strong case for each investment decision, one they can point to later to avoid being blamed personally when something goes wrong or a project fails. Private donors or the Open Philanthropy Project can thus cooperate with the former group by supporting the types of projects that the former group can’t support.15

Influencing the Future

The first space colonization mission is about to be launched, but the team of meteorologists is split over whether the weather is suitable for the rocket launch. Eventually, the highest-ranking meteorologist decides that if their colleagues can’t agree, then the weather situation is too uncertain to permit the launch.

A week later, the rocket launches, and it sets a precedent for others. Innovation accelerates, prices drop, and eventually humans expand beyond the solar system far out into the Milky Way, launching new missions in all directions by the minute. And all the while they take with them bacteria, insects, and many larger animals, with many r-strategists among them. Unbeknownst to everyone, though, the launch would’ve been successful even the first time.

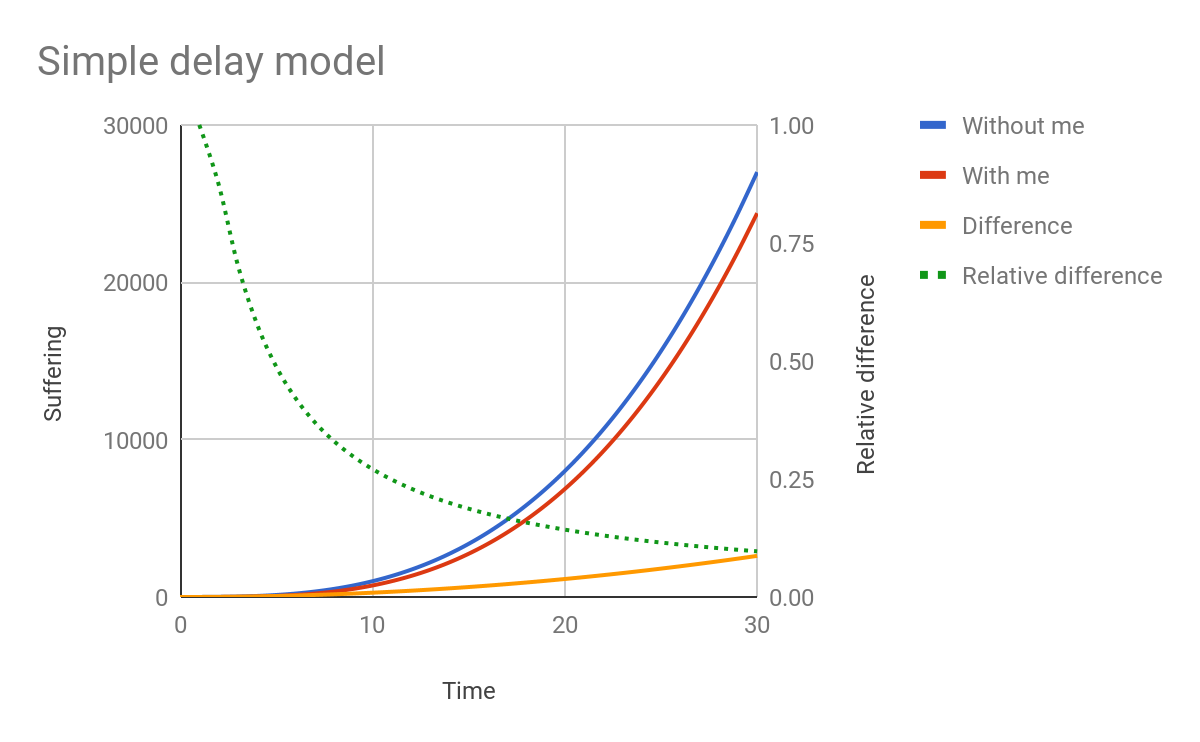

Simple Delay Model

What influence does the one-week delay have one millennium later?16 Intuitively, I would think that it has close to no impact, but a very simple model is not enough to confirm that impression.

The chart assumes that I’m the meteorologist and that I’ve turned a world whose suffering would’ve looked like the blue line, t³, into the red-line world, (t-1)³. The yellow line is the difference in suffering between the worlds: 3t² - 3t + 1. That’s a ton, and rather than diminishing over time as my intuition suggested, it increases rapidly.

What decreases rapidly, however, is the size of the difference relative to the suffering that increases even more rapidly – the green dotted line: (3t² - 3t + 1) / (t - 1)³. So is it just this morally unimportant relative difference that caused my intuition to view our influence as something like an exponential decay function when really it will increase throughout the next millennia? Dan Ariely certainly thinks that we erroneously reason about money in relative rather than absolute terms, fretting about cents and dollars when buying sponges but lightly throwing around thousands of dollars when renovating a house or speculating on cryptocurrencies.
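The three curves in the chart are easy to tabulate. A minimal sketch, taking t in the chart’s arbitrary units with the delay as one unit, and t > 1 so that the delayed world is nonzero:

```python
def counterfactual(t):
    return t ** 3                      # blue line

def delayed(t):
    return (t - 1) ** 3                # red line: same trajectory, one unit later

def difference(t):
    return counterfactual(t) - delayed(t)   # yellow line: 3t^2 - 3t + 1

def relative_difference(t):
    return difference(t) / delayed(t)       # green dotted line

for t in (2, 10, 100, 1000):
    print(t, difference(t), round(relative_difference(t), 4))
```

The absolute difference grows quadratically, while the relative difference shrinks roughly like 3/t.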

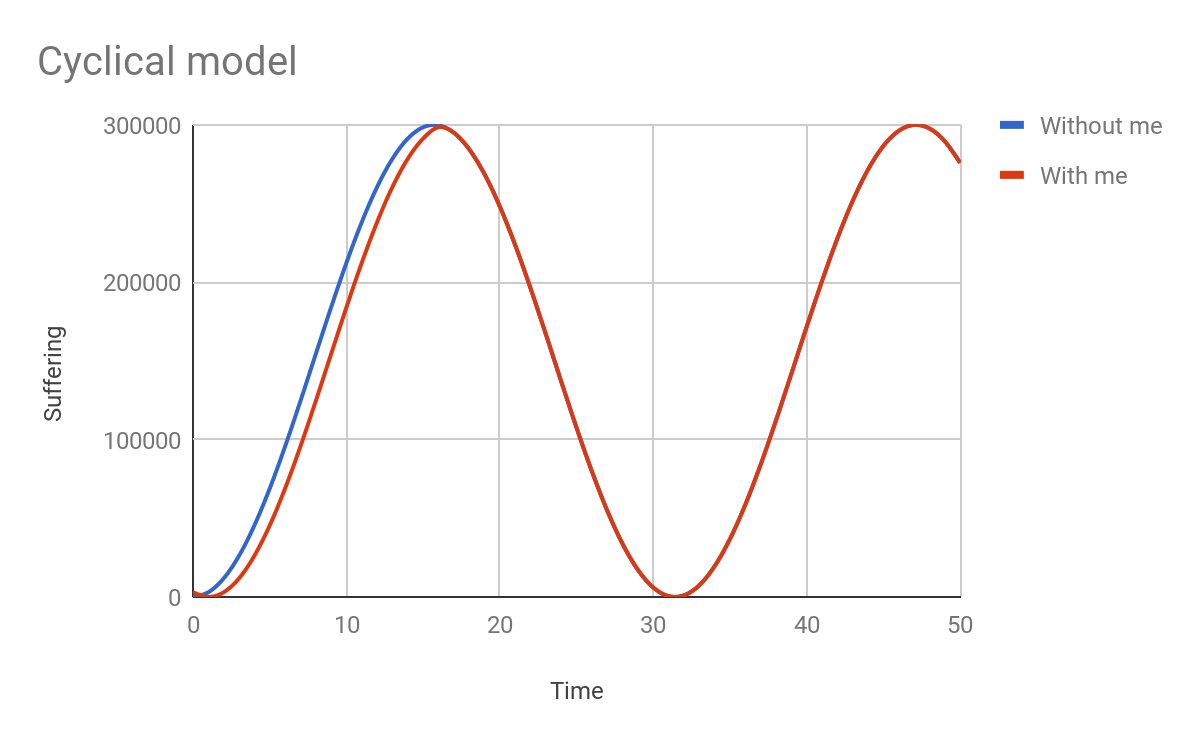

Cyclical Model

Or maybe the intuition is informed by cyclical developments.

Some overwhelming outside force may limit our potential absolutely at some point in time and force us back, and from that point onward, the suffering trajectory would only be conditional on the overwhelming force rather than our influence from the past.

Seasons, winter in particular, are an obvious example of cyclical forces like that, but in the context of space colonization, I can’t think of a reason for them just yet – especially once several solar systems have been colonized with decades of communication delay between them and probably no exchange in commodities given how much longer they would have to travel.

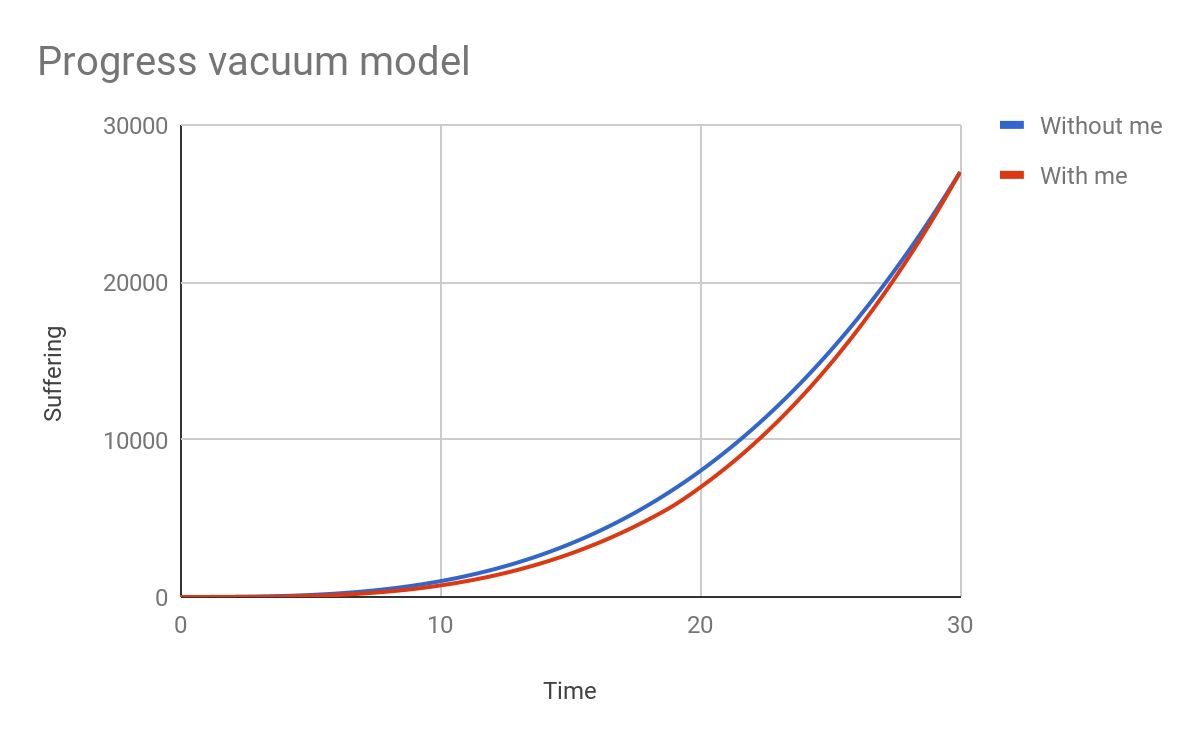

Progress Vacuum Model

But what about correlated forces rather than mysterious overwhelming outside forces?

Perhaps that week of delay saw one week of typical progress in charting space, searching for exoplanets, and advancing technology, so that the delay created one more week of “progress vacuum,” which eventually backfired by speeding up progress beyond the slope of the trajectory of the counterfactual world.

But the graph above is probably an exaggeration. When you reduce the supply by slowing some production process down, the demand reacts to that in complex ways due to various threshold effects, and the resulting cumulative elasticity factor is just that, a factor, a linear polynomial. If our influence is a quadratic polynomial, as surmised in the first diagram, then multiplying it with a small linear polynomial is not going to have a significant negative impact in the long term.

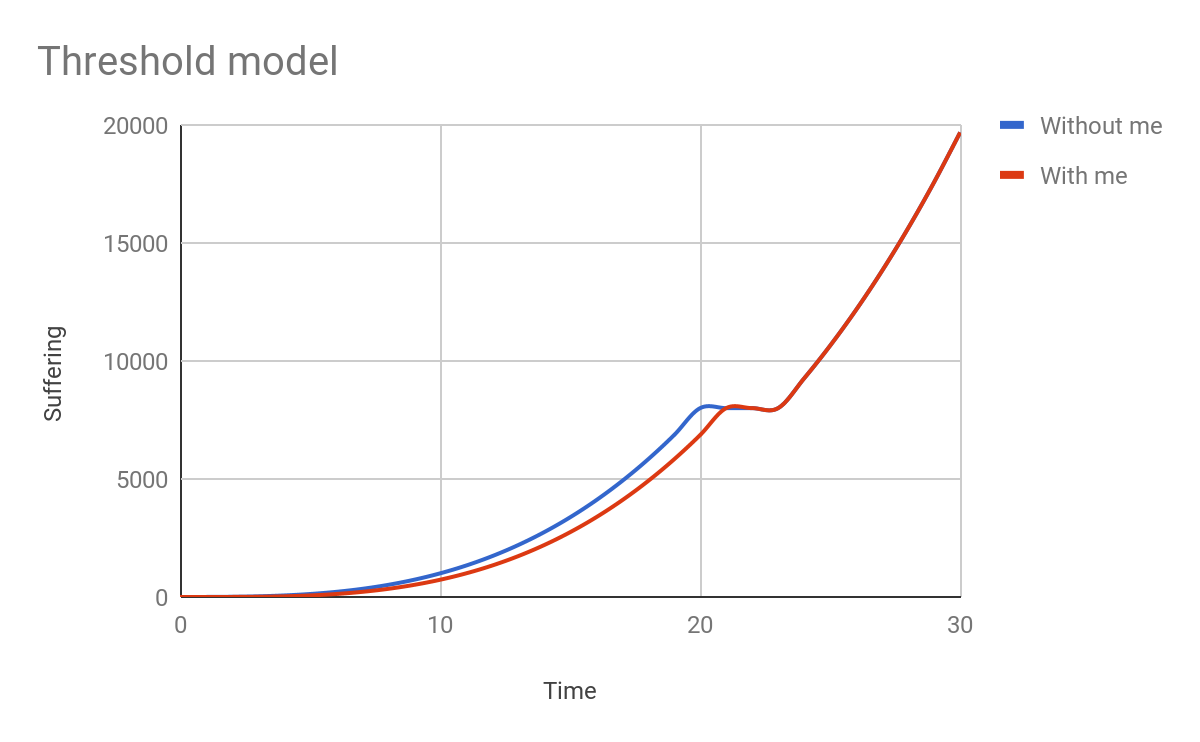

Threshold Model

But might we face more mighty thresholds?

Now that’s one mighty threshold right there! Once we’re spread throughout space, it becomes harder to think of thresholds like that, because such a threshold would need an expected impact at least commensurate with a quadratic polynomial of time or radius. But so long as the radius is constant and we’re still confined to Earth, a conference may be all that’s needed: a research group may present some seminal paper at a conference – delay them by one week, and they’ll hurry up and have one more typo in the presentation, but they will still present their results to the world at the same moment.

In conclusion, I think, based on just these rough considerations, that the cyclical model will continue to lose relevance but that the correlated speed-up (or supply/demand elasticity) model and the threshold model – the first probably just a smoothed out version of the second – will continue to be highly relevant for as long as we’re still in one solar system or even on one planet.

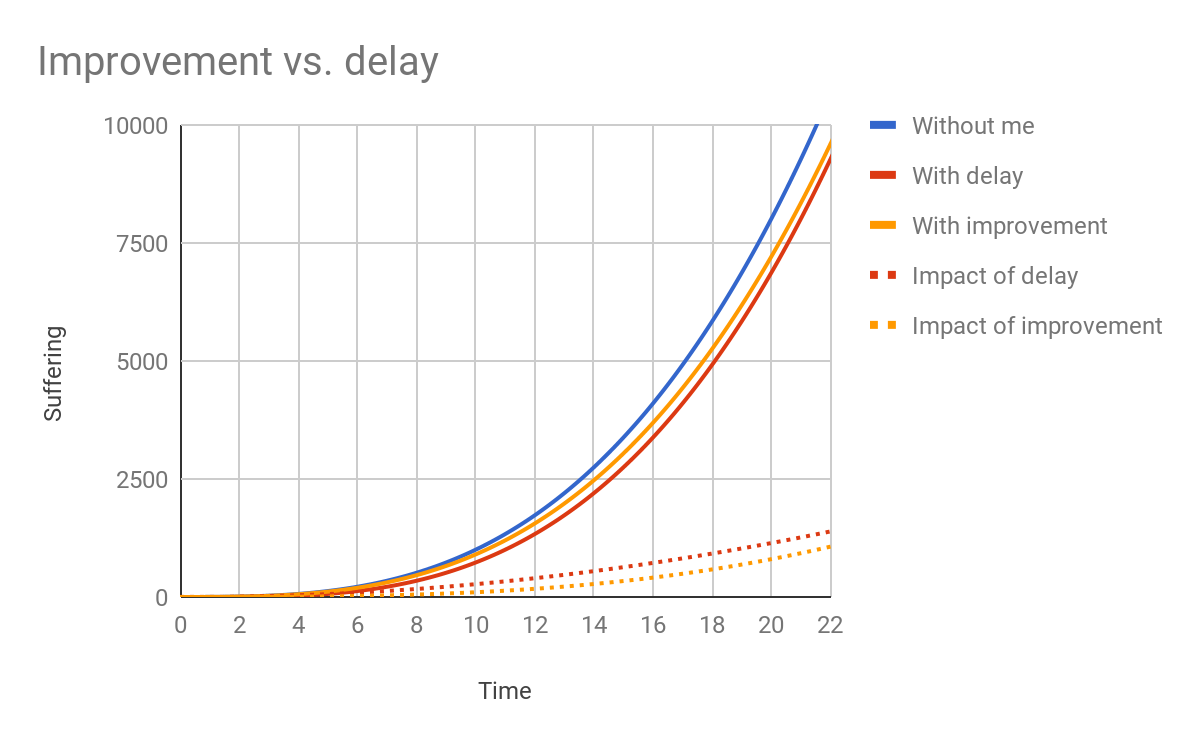

Improvements vs. Delays

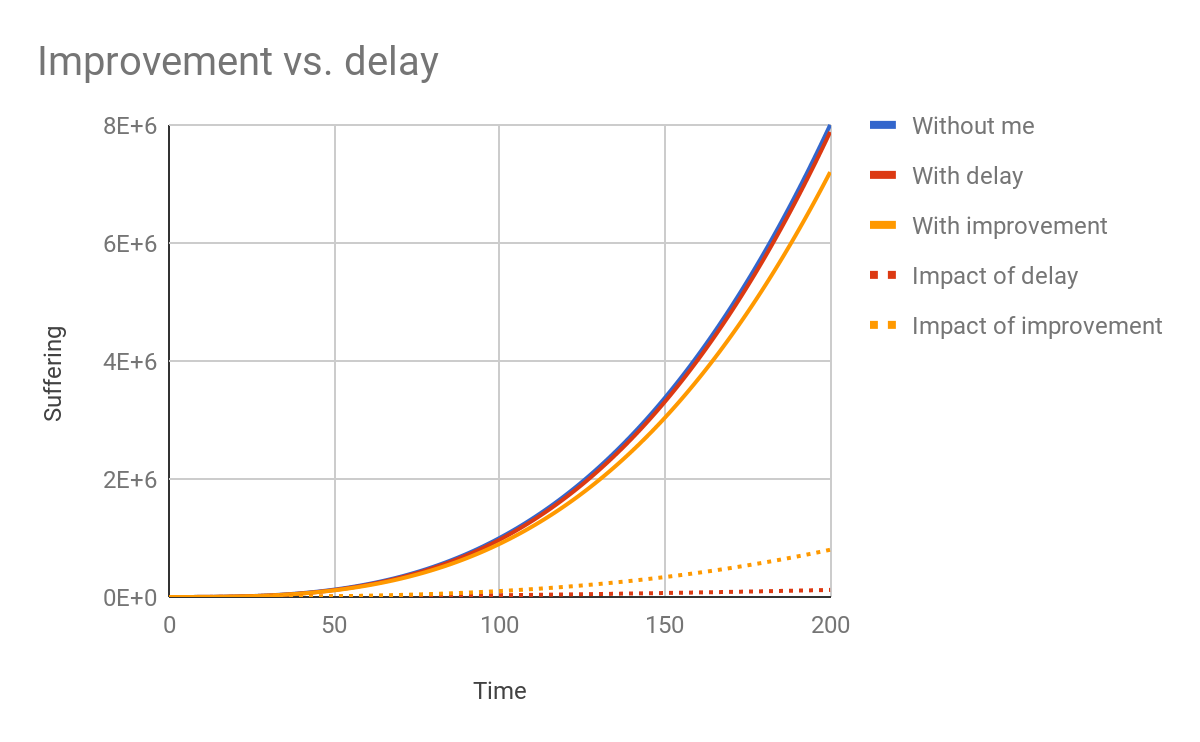

Intentionally delaying things that others care about is a bit uncooperative. The intervention that I’m most excited about at the moment, making space colonization more humane for nonhumans, would instead aim to reduce the suffering footprint of space colonization without interfering with its rate, so for example 0.9t³ instead of (t-1)³. The difference from the counterfactual, 0.1t³, is now a cubic polynomial itself!

For short time scales, the delay approach is still ahead:

But then the cubic polynomial of the improvement approach of course quickly catches up:

Now how can my intuition that impact decays still be true when we’re dealing with a polynomial that is yet another degree higher?

- Thresholds would have to look different from papers presented at conferences to limit our impact in this scenario.

- A progress vacuum is also not as straightforward. But if we put in a lot of work to advance a technology for making space habitable that minimizes suffering and so make it the go-to technology by the time the exodus starts (see “Humane Space Colonization” below), we’re establishing it through some degree of path dependence.

- If it turns out that it wasn’t the most efficient technology, the path dependence may not be enough to lock it in permanently – just as it is imaginable that Colemak might eventually replace Qwerty.

- Or fundamental assumptions of the technology we locked in that way may cease to apply – more comparable to how Neuralink might eventually replace Qwerty.

- But in either case, our impact may only degrade to delay levels, not to zero.

But if our impact still decays and if the period until the cubic polynomial overtakes the quadratic one is suitable in length, then we might still be better off delaying. Perhaps there’s potential for a moral trade here: We’ll help you with your technological progress but in return you have to commit to long-term funding of our work to make it more humane.
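How long that period is can be computed for the chart’s example: a 10% improvement (0.9t³) versus a one-unit delay ((t-1)³), in the same arbitrary units. A minimal sketch using plain bisection; the crossover lies near t ≈ 29.

```python
def delay_gain(t):
    return 3 * t**2 - 3 * t + 1               # t^3 - (t - 1)^3

def improvement_gain(t, factor=0.9):
    return (1 - factor) * t**3                # t^3 - factor * t^3

def crossover(factor=0.9, lo=1.0, hi=1_000.0, tol=1e-9):
    """Bisection for the time after which the improvement approach averts
    more suffering than the delay approach (assumes one sign change)."""
    def gap(t):
        return improvement_gain(t, factor) - delay_gain(t)
    assert gap(lo) < 0 < gap(hi), "no sign change in [lo, hi]"
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gap(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

t_star = crossover()
```

A smaller improvement pushes the crossover later; with a 1% improvement (`factor=0.99`), for instance, it moves to roughly t ≈ 300.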

Further Research

- What are the strongest risks to our impact with the improvement approach?

- Can we build some sort of technological obsolescence model on the idea that assumptions follow a tree structure? Technologies closer to the root would make fewer assumptions, so they are harder to invent but there are fewer ways to make them obsolete, whereas the opposite would hold for inventions closer to the leaves. If that relationship were exponential, it might introduce a factor that could, in some way I haven’t quite fleshed out, still explain my decay intuition.

- When r-strategists reproduce, they might “multiply” exponentially within the Malthusian bounds. If we can hope to affect little of the future, this may be highly relevant. Even if not, might we be able to exert greater force on the polynomial than a delay has by influencing the factor in the exponent of such exponential but bounded growth?
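One reading of this last question can be sketched with the textbook logistic curve, assuming a hypothetical carrying capacity, starting population, and growth rates. In this model, lowering the growth rate r without moving the bound only shifts when saturation is reached, so the cumulative difference levels off at a constant, much like a delay.

```python
import math

def logistic(t, r, capacity=1.0, initial=1e-6):
    """Logistic ('exponential but bounded') population at time t."""
    return capacity / (1 + (capacity - initial) / initial * math.exp(-r * t))

def cumulative_population(r, horizon, steps=100_000):
    """Population integrated over [0, horizon] (midpoint rule)."""
    dt = horizon / steps
    return sum(logistic((i + 0.5) * dt, r) for i in range(steps)) * dt

# Hypothetical growth rates: an intervention lowers r from 0.10 to 0.09.
fast = cumulative_population(r=0.10, horizon=1_000)
slow = cumulative_population(r=0.09, horizon=1_000)
gap = fast - slow  # stays roughly constant once both curves have saturated
```

Here the gap is about 15.4 (in units of capacity × time) whether the horizon is 1,000 or 10,000. Whether real populations in space would follow such a curve is, of course, an open question.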

Observations

Which of these models might best fit any observations that we can already make? To get at least some intuition for what we’re dealing with, I want to draw comparisons to some better-known phenomena.

| | Factual | Counterfactual |
|---|---|---|
| People17 | Plausibly more ephemeral (than concepts18), because: (1) they still die. Plausibly more durable, because: (1) their values may drift, talents and passions change, etc. at a higher rate than they can promote them to others. | Plausibly more ephemeral, because: (1) the usual concerns with replaceability. |
| Companies19 | Plausibly more ephemeral, because: (1) they fail, probably in most cases, for a host of reasons other than their core idea becoming obsolete and (2) they are highly concrete and thus fragile.20 Plausibly more durable, because: (1) they can adapt (change their nature) to survive – the idea is not their essence as it is for concepts. | Plausibly more durable, because: (1) they would probably not have been founded anyway, under the same name, if they hadn’t been founded when they were.21 |
| Languages | Plausibly more durable, because: (1) they have higher path dependence than most22 concepts. | Plausibly more durable, because: (1) they would probably not have developed the same way if they hadn’t developed when they did.23 |
| Cities | Plausibly more durable, because: (1) they have higher path dependence than most concepts and (2) they are often continuously nourished by something geographic, such as a river, which is probably unusually permanent. | Plausibly more ephemeral, because: (1) at least some places are so well-suited for cities24 that if one hadn’t been founded there when it was, it would’ve been founded there a little later. |

A human with stable values can work for highly effective short-term-focused charities, advocate for them, or earn-to-give for them for 50 years, which may serve as a lower bound for the durability of the influence we can plausibly have.

Few companies have survived more than a millennium (and those that have probably wouldn’t have if they hadn’t inhabited a small, stable geographic niche), and hoping to create one that scales and yet survives even a century is probably unreasonable, since large companies are not very old. A large company may be better positioned to get old than a small one, but there’s probably not enough room for enough large companies to produce the same outliers that there are among small companies.

Languages and cities still exert influence several millennia later, but because of the high probability that the oldest cities would’ve been founded anyway within short intervals because of their geography, languages are probably the stronger example. But they only have “extrapolatory power” for concepts that cause very strong path dependence.

Language may have emerged some 50–100 thousand years ago, but the proto-languages that can still be somewhat reconstructed were spoken a mere 10,000 or possibly 18,000 years ago.

Further Research

I haven’t found evidence for whether linguistic reconstruction errs more on the side of reconstructing noise – that a proto-language could’ve had many different forms with no systematic impact on today’s languages, so that attempts at its reconstruction yield a wide range of different results – or on the side of silence – that the reconstruction is not possible even though today’s languages would’ve been different had the proto-language been different. It may be interesting to weigh the factors that indicate either direction.

So we can perhaps hope for our influence to last between half a century and a couple thousand years.

If we want our influence to reach other solar systems (and we travel there in a waking state), we have to cover these, let’s say, 10 light years at one hundredth to one thousandth of the speed of light, arriving after roughly 1,000 to 10,000 years. That’s some 3–30 times the 356,040 km/h that Helios 2 reached, probably the fastest human-made object in space to date.[25] That doesn’t seem unattainable.
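The arithmetic behind these figures is easy to check. Here is a minimal sketch that uses the 356,040 km/h given for Helios 2 and ignores acceleration and relativistic effects:

```python
C_KM_PER_H = 299_792.458 * 3600   # speed of light in km/h
HELIOS_2_KM_PER_H = 356_040       # Helios 2 speed as given in the text

def multiple_of_helios_2(fraction_of_c):
    """How many times faster than Helios 2 a ship at this fraction of c would be."""
    return fraction_of_c * C_KM_PER_H / HELIOS_2_KM_PER_H

def travel_years(distance_ly, fraction_of_c):
    """Travel time in years, ignoring acceleration and relativistic effects."""
    return distance_ly / fraction_of_c

for fraction in (1 / 100, 1 / 1000):
    print(f"{fraction:.3f} c: {travel_years(10, fraction):,.0f} years, "
          f"{multiple_of_helios_2(fraction):.1f}x Helios 2")
```

At a hundredth of the speed of light, the trip takes about 1,000 years and the ship is roughly 30 times as fast as Helios 2. At a thousandth, the trip takes about 10,000 years and the ship is roughly 3 times as fast.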

Furthermore, in a sleeping or suspended (in the case of emulations) state the distance doesn’t matter. Such missions will probably be expensive, so they’ll need to be funded by many stakeholders that will have particular interests and goals, such as harnessing the energy of another sun. They’re more likely to fund a mission with suspended passengers who can’t change the goals of the mission when the stakeholders can no longer intervene.

Unfortunately, that doesn’t necessarily mean that the new ships will be launched soon and none of our influence will be lost during the travel. Stakeholders will aim to make a good trade-off between an earlier launch and higher speeds, because waiting for technological innovation in, say, propulsion systems may be worth it and result in an earlier arrival. So the launch may be delayed, and if earlier enterprises get their predictions wrong and innovation happens faster than they thought, then their ships may even be overtaken by later, more value-drifted ships.
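The trade-off between launching early and waiting for faster propulsion can be sketched numerically. Every number here is a hypothetical assumption chosen for illustration. Achievable speed starts at a thousandth of the speed of light and grows by 1% per year. It is capped at a tenth of the speed of light. The target is 10 light years away.

```python
def arrival_year(launch_year, distance_ly=10.0, v0=0.001, growth=0.01, v_max=0.1):
    """Arrival time (years from now) for a ship launched in `launch_year`.

    Hypothetical assumption: achievable speed, as a fraction of c (i.e. light years
    per year), grows by `growth` per year from `v0` and is capped at `v_max`.
    """
    speed = min(v0 * (1 + growth) ** launch_year, v_max)
    return launch_year + distance_ly / speed

launch_options = range(0, 2001)
best = min(launch_options, key=arrival_year)
print(f"launch now: arrive in year {arrival_year(0):,.0f}")
print(f"best launch year {best}: arrive in year {arrival_year(best):,.0f}")

# A ship launched now is overtaken by every later ship that arrives earlier.
overtaken_by = [t for t in launch_options if t > 0 and arrival_year(t) < arrival_year(0)]
```

Under these assumptions, the best launch date is centuries away. A ship launched today would be overtaken by ships launched much later, which is the scenario described above.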

Reaching the Next Solar System

The jump from colonizing our solar system (where communication is feasible) to colonizing others is huge: The diameter of our solar system is in the area of 0.001 light years (around 9 light hours), while one of the closest planetary systems, around the star Epsilon Eridani, is 10.5 light years away and another, around the star Gliese 876, a full 15.3 light years. (That is infeasibly far for light-speed communication with our solar system.) So the different levels of feasibility of communication mean that a range of existential risks will diminish much earlier than any reduction of risk from value drift.
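The distances above translate into communication delays as follows. This small sketch assumes that signals travel at the speed of light and that a year has 365.25 days:

```python
HOURS_PER_YEAR = 365.25 * 24

def light_hours(distance_ly):
    """Distance in light hours."""
    return distance_ly * HOURS_PER_YEAR

def round_trip_years(distance_ly):
    """Years until a reply can arrive if signals travel at the speed of light."""
    return 2 * distance_ly

print(f"solar system diameter: {light_hours(0.001):.1f} light hours")
for star, distance in {"Epsilon Eridani": 10.5, "Gliese 876": 15.3}.items():
    print(f"{star}: {round_trip_years(distance):.1f} years per question and answer")
```

A question sent to Epsilon Eridani would get its answer after 21 years at the earliest. Within our own solar system, the same exchange takes well under a day.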

Ideas for a Model

Maybe I’ll find the time to create a quantitative model to trade off short-term-focused and long-term-focused activities in view of the scenario where space colonization either happens before any civilizational collapse occurs or where our influence survives the collapse or collapses.

Building such a model in Guesstimate has the added benefit that its limit of 5,000 samples introduces a quantization that, incidentally, protects against Pascal’s Mugging–type calculations unless you reload very often. That will make the conclusions we can draw from it more intuitive or intuitively correct.
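The quantization effect can be made concrete. This minimal sketch assumes a single yes/no event of probability p with a fixed payoff, sampled 5,000 times. The function names are hypothetical and do not correspond to anything in Guesstimate itself.

```python
import random

N_SAMPLES = 5_000  # sample count the text attributes to Guesstimate

def chance_tail_event_appears(p, n=N_SAMPLES):
    """Probability that at least one of n samples hits an event of probability p."""
    return 1 - (1 - p) ** n

def monte_carlo_mean(p, payoff, n=N_SAMPLES, seed=0):
    """Estimate E[payoff * Bernoulli(p)] from n samples, as a sampling tool would."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if rng.random() < p)
    return hits * payoff / n

print(chance_tail_event_appears(1e-9))
print(monte_carlo_mean(1e-9, 1e15))
```

Consider a one-in-a-billion chance of a payoff of 10¹⁵. The true expected value is 10⁶, but a 5,000-sample run includes the event only about once in 200,000 runs, so the estimate is almost always zero.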

The model should take at least these factors into account:

- Our colonization of space can probably be modeled as a spherical expansion at some fraction of the speed of light.

- It might take into account that faster-than-light travel might be discovered.

- It should assume small fractions of the speed of light or otherwise take relativistic effects into account.

- It should take the expansion of space into account, which will put a limit on the maximum region of space that can be colonized – less than the Hubble volume.

- It should consider the number of solar systems and perhaps the differences in their density.

- Since a large fraction of our time will be spent in transit, it may also be important to investigate whether the transit would likely be spent in a suspended or sleep state[26] that should preserve all properties of the civilization or in an active state where the civilization continues to evolve.

- If we assume that there’s no faster-than-light communication, then civilizations will probably cluster or otherwise have little effect on each other. Solar systems may be a sensible choice for the expanse of such clusters because they also have an energy source.

- Whole brain emulation seems to me (mostly from considerations in Superintelligence) more quickly achievable than large-scale space colonization, so between already-colonized regions of space, communication and travel will become similar concepts.

- Emulation may also become possible through training models of yourself with Neuralink.


- It should include some sort of rate of decay of influence, perhaps modeled as a risk per time, resulting in a decay function such as exponential decay. The following “risks” should contribute to it, but separately, as I will argue below:

  - Decay due to destruction of the civilizational cluster.

    - Crucial so long as we’re huddled together on Earth; afterwards probably limited to individual planets more than to whole civilizational clusters.

  - Decay due to random “value” drift, though not limited to values.

    - Likely to be relevant for whole civilizational clusters, so probably close to impossible for us to overcome today.

  - Decay (of our influence) due to independent, counterfactual discovery of our contribution.

    - Ditto.
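A few of the factors above can be combined into a first toy model. This is a sketch under strong simplifying assumptions. It assumes spherical expansion at a constant fraction of the speed of light. It assumes each kind of decay is an independent constant hazard, so their rates simply add up. It ignores relativity, faster-than-light travel, the expansion of space, and differences in density. The hazard values are made up.

```python
import math

def colonized_volume(t_years, speed_fraction_c):
    """Volume in cubic light years of a sphere growing at a constant fraction of c."""
    radius_ly = speed_fraction_c * t_years
    return 4 / 3 * math.pi * radius_ly ** 3

def influence_survival(t_years, hazards):
    """Chance our influence survives t years if each independent risk has a
    constant yearly rate; independent constant hazards add up."""
    return math.exp(-sum(hazards.values()) * t_years)

def influenced_volume(t_years, speed_fraction_c, hazards):
    """Colonized volume weighted by the chance that our influence still survives."""
    return colonized_volume(t_years, speed_fraction_c) * influence_survival(t_years, hazards)

# Hypothetical yearly hazard rates for the three kinds of decay listed above.
hazards = {"destruction": 0.0005, "value_drift": 0.0004, "rediscovery": 0.0001}
peak_year = max(range(1, 20_001), key=lambda t: influenced_volume(t, 0.01, hazards))
print(f"influenced volume peaks after about {peak_year:,} years")
```

Because the volume grows as t³ and survival falls as e^(−λt), the product t³·e^(−λt) peaks at t = 3/λ. With a combined hazard of 0.001 per year, the influenced volume therefore peaks after about 3,000 years, regardless of the expansion speed.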

Priorities

I think it makes more sense for me to put effort into prioritizing between different broad “classes of interventions” than between individual charities, because if the charities are at all competent, they’re likely more competent than I am. And within each cause area, I should be able to simply ask the charities which one I should support, or else they’d fail my cooperativeness criterion. (Then again, I may not notice many defections.)

The “class of intervention,” however, is something they’re probably somewhat locked into, whether by bylaws, a comparative advantage from early specialization, or some feature of the team’s ethical system.

So below I draw some, at times artificial, distinctions and then apply my heuristics in the form of pros and cons of the cause area and the class of interventions. When an intervention has more cross-cutting benefits, this scheme breaks down quickly: moral circle expansion, for example, can be beneficial both in how it influences futures without a singleton and in how it influences which singleton emerges, but the latter benefits are counted only in the “Influencing the Singleton” section. I think this section isn’t much more useful than a brainstorm.

And note that it doesn’t make sense to count the pros and cons below because their weights are vastly different or my confidences are vastly different or they’re highly correlated or they’re just questions or some combination of these.

Influencing the Singleton

My heuristics don’t help much to evaluate this one until I have more clarity on how to convert a hazard function (failure rate) into an expected suffering distribution. I continue to see immense variance in this whole future scenario because of the wide range of different worlds we might get locked into.
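The first step of that conversion, from a hazard function to how long an influence is expected to last, is standard survival analysis. Survival is S(t) = exp(−∫h). The expected duration is the area under S(t). This sketch covers only that step; turning the result into a suffering distribution is the open part. Both hazard functions are hypothetical.

```python
import math

def survival_curve(hazard, horizon_years, dt=1.0):
    """S(t) = exp(-cumulative hazard up to t), sampled every dt years (left Riemann sum)."""
    curve, cumulative, t = [], 0.0, 0.0
    while t < horizon_years:
        curve.append(math.exp(-cumulative))
        cumulative += hazard(t) * dt
        t += dt
    return curve

def expected_duration(hazard, horizon_years, dt=1.0):
    """Expected lifetime of the influence, truncated at the horizon: the area under S(t)."""
    return sum(survival_curve(hazard, horizon_years, dt)) * dt

def constant(t):
    return 0.01                      # hypothetical: 1% risk per year, forever

def falling(t):
    return 0.01 / (1 + t / 100)      # hypothetical: risk shrinks over time

print(expected_duration(constant, 5_000))   # close to 1 / 0.01 = 100 years
print(expected_duration(falling, 5_000))    # much longer; keeps growing with the horizon
```

When the hazard falls over time, S(t) can decline so slowly that the expected duration depends heavily on the tail and on the chosen horizon. This is one way the large variance mentioned above can arise.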

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | Durability: Maximal, ipso facto. Urgency: Plausibly most urgent. In particular, strong AGI may come before space colonization. Robustness: Even if it doesn’t, the absence of a singleton (through AGI) in the face of advanced technology may generally be an unstable state rather than an attractor. In that case, space colonization is unlikely to proceed fast enough to prevent an AGI from quickly controlling all (up to that point) colonized space. (More on that in the section “Ideas for a Model.”) | Supportability: I know too little about how suffering-focused various organizations are and feel like the space needs mostly people with very specific and rare skill sets.[27] Feedback loops: Unlikely or distant at the moment, and there don’t seem to be reliable proxies. Robustness: If Robin Hanson is to be believed, then futures are possible in which AGI can be controlled (and no singleton emerges) even if the alignment problem remains unsolved. |

Wild Animal Suffering Research

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | |

Preserving Effective Altruism

Movement building so far (probably highly effective but also fairly well funded) has aimed either to grow the movement or to grow its capacity: the former aims for greater numbers, the latter for greater influence, better coordination, fewer mistakes, etc. But if effective altruism were destroyed, this would be a bad outcome according to even more value systems than in the case of existential catastrophes, so it would pay to invest heavily in reducing the probability of a permanent collapse of the movement.

Avenues to achieving this may include actual protection of the people (safety nets have been discussed and tried) and preservation of knowledge and values. Texts may be sealed into “time capsules,”[30] but they need to be the right texts. Instructions that require a high level of technology may be useless for a long time after a catastrophe, and Lukas Gloor also notes that today’s morally persuasive texts were written for a particular audience: us. Read by a different audience in a very different world, they may have effects different from what we hoped for or, more probably, none at all.[31] So such texts may need to be written specifically to be as timeless as possible.[32]

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | |

Other EA Movement Building

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | Durability: I don’t know how likely EA is to re-emerge if it gets lost, that is, how often it would be invented anyway, but otherwise it seems very fragile with regard to both existential catastrophes and mundane value drift. |

Cultured Meat

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | |

Moral Circle Expansion

I used to call this section “antispeciesist values spreading” but Jacy Reese draws the line more widely, so I’ll go with his reference class and name for it. I have trouble applying my heuristics here because of how meta the intervention is. Jacy’s article should be more enlightening.

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | |

Banning Factory Farming

Sentience Politics works to ban factory farming in Switzerland via a national vote in 2019. This is a great benchmark since the initiative is said to have a macroscopic chance of success, will have its main effect for however many centuries nation states and national laws remain meaningful, and pattern-matches seminal campaigns such as California’s Proposition 2, the Prevention of Farm Animal Cruelty Act, while avoiding some of its problems, centrally the problem of imports. An intervention that aims to affect the long term should at least perform better than this very assuredly valuable intervention, which is probably a high bar.

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | Durability: Mostly limited to however long laws last before they are repealed, become obsolete, or are swept away by changes in the form of government, so maybe a couple of centuries at most. (There are likely to be flow-through effects, of course.) Robustness: Fairly specific and final-brick-like. |

Humane Space Colonization

This is what I’m most excited about at the moment because it promises to be a very strong contender for the most effective long-term intervention, but much more research is needed.

The idea is that SpaceX and others may start shooting people into space in a few decades and may start to put them on Mars too. When that time comes, they’ll be looking for technologies that’ll allow people to survive in these environments permanently. They’ll probably have a range of options, and will choose the one that is most feasible, cheap, or expedient. There may even be a path dependence effect whereby the proven safety of this one technology and the advanced state of its development make it hard for other technologies to attract any attention or funding.

This may not be the technology that would’ve minimized animal suffering, though. So in order to increase the chances that the technology that gets used in the end (and that perhaps sees some lock-in) is the one that we think is most likely to minimize animal suffering, we need to invest in differential technological development such that, at the time that the technology is needed by SpaceX and company, the one that is most feasible, cheap, and expedient coincides with the one that minimizes animal suffering.

A social enterprise that aims to achieve this could be bootstrapped on the basis of vertical agriculture, greenhouse agriculture, and zero-waste housing technologies and then use its know-how and general capacity to research low-suffering technologies for making space habitable.

| | Pro | Con |
|---|---|---|
| Problem | | |
| Intervention | | |

[For more, see the original article.]

Acknowledgements

This piece benefitted from comments and support by Anami Nguyen, Lukas Gloor, Martin Janser, Naoki Peter, and Michal Pokorný. Thank you.

-

But I’ve tried to compensate for that by compiling heuristics of others and searching past articles of mine for heuristics I thought relevant at the time. ↩

-

This is based on my now antirealist perspective, which is some sort of strategically constrained emotivism. Gains from Trade through Compromise, Moral Tribes, and The Righteous Mind are good introductions to what I’m referring to by “strategically constrained” (the article) and antirealism (the books) respectively. My moral perspective is the result of a moral parliament (note that the article appears to be written from a realist perspective) that consists mostly of a type of two-level preference utilitarianism with some negative and classic utilitarian minorities. The result looks akin to a deontological decision procedure and something like lexical threshold prioritarianism. My intuition is also that suffering scales linearly with the number of beings suffering to the same degree. ↩

-

When talking about the long term, I either mean millennia – periods long enough that we can suffer existential catastrophes or start colonizing space – or all of the future. Humans, as the currently most powerful species, are still compressed in one spot in the universe, so that I think that what we do now will matter more (might even matter greatly more if our influences can last long enough) for the rest of the future than what any similarly sized cluster of people will do once they have colonized enough of the surrounding universe that communication between the clusters becomes difficult. ↩

-

Just as problems will typically become harder to solve to the same degree the closer they are to being solved absolutely, so they will typically become easier to solve to the same degree the farther they are away from being solved absolutely. ↩

-

Or plausibly one with a downward trajectory that is still so far away from being solved that I don’t expect any significant drops in marginal utility throughout a period that I can hope to affect. (More on that in the "Model" section below.) But that judgment will be even more error prone than that of a trajectory alone. ↩

-

Anything from incremental but extreme increases in suffering to sudden explosions followed by constant extreme suffering is considered an s-risk. ↩

-

If the EA-funded institute waits for (from our perspective) interesting research to get done elsewhere, it can invest the time in interesting research that would not otherwise get done. But if the EA-funded institute does the interesting research first, the non-EA researchers will probably research something uninteresting (again, from our perspective) in that time, so less interesting research will get done. This may at first seem uncooperative, and it’ll probably pay to stay alert to cases where it is, but it is the observed preference of the non-EA researchers to research the interesting topic in question, so they pay no price for our gain. ↩

-

Lukas Gloor: “Any effects that near-extinction catastrophes have on delaying space colonization are largely negligible in the long run when compared to affecting the quality of a future with space colonization – at least unless the delay becomes very long indeed (e.g. millions of years or longer).” ↩

-

I’m still very much looking for models of intergalactic spreading that take into account lightspeed limits and cosmic expansion; trade-offs between expansion and more efficient usage of close space and resources (e.g., through a switch to silicon-based life); and trade (including moral trade) with other space-colonizing species. ↩

-

From “Cause Prioritization for Downside-Focused Value Systems”: “This leaves us with the question of how likely a global catastrophe is to merely delay space colonization rather than preventing it. I have not thought about this in much detail, but after having talked to some people (especially at FHI) who have investigated it, I updated that rebuilding after a catastrophe seems quite likely. And while a civilizational collapse would set a precedent and reason to worry the second time around when civilization reaches technological maturity again, it would take an unlikely constellation of collapse factors to get stuck in a loop of recurrent collapse, rather than at some point escaping the setbacks and reaching a stable plateau (Bostrom, 2009), e.g. through space colonization. I would therefore say that large-scale catastrophes related to biorisk or nuclear war are quite likely (~80–90%) to merely delay space colonization in expectation.[17] (With more uncertainty being not on the likelihood of recovery, but on whether some outlier-type catastrophes might directly lead to extinction.)” See also footnote 17 in the original. ↩

-

Unless perhaps they need great amounts of resources, such as energy, or some other additional limiting factor. ↩

-

Space has three spatial dimensions and is, at the largest scale, filled with matter evenly. When our civilization expands into space, we’ll always try to reach the resources that are closest and not yet claimed by others, so that there’s no reason why we would expand only in one direction (at a sufficiently large scale). At the largest scale, we’d get a volume of our civilization, and thus of suffering, of (4/3)·π·r³, but first there are many irregularities that will lead to a much more complex (but still cubic) polynomial: irregularities such as cultural differences between mostly isolated clusters of our civilization, perhaps evolutionary differences, irregularities in the density of solar systems and galaxies, etc. ↩

-

Faster-than-light communication or even travel would change my models significantly. ↩

-

¯\_(ツ)_/¯ ↩

-

I would guess that this article is mostly read by an audience of private donors and perhaps someone at the Open Philanthropy Project or another similar foundation, so I can gear it a little bit toward that audience. ↩

-

A millennium is long enough that it seems unintuitive to me that a little delay at the beginning should have an effect at the end of it but it is short enough that the accessibility of marginal resources (solar systems and such) is not systematically lower at the end due to such effects as the expansion of space or increase in entropy. ↩

-

For example, ETGing for highly effective short-term-focused charities for 50 years of one’s life. ↩

-

I’ll refer to these as concepts or ideas (whichever is clearer in the context) or influences when I only mean the difference between their factual and counterfactual duration of existence. ↩

-

Databases of trademark applications and expirations (such as the USPTO’s) and of companies themselves may provide enough data to try to fit a decay function to them. ↩
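A minimal sketch of such a fit follows. It assumes the simplest decay function, exponential decay with a constant hazard. It also assumes records that give each entry's age and whether it is still active. The records below are made up. Real data may call for a more flexible distribution such as a Weibull.

```python
import math

def fit_constant_hazard(lifetimes_years, still_active):
    """Maximum-likelihood yearly hazard for exponential decay with right-censoring.

    Entries still active (e.g. trademarks not yet expired) count toward exposure
    time but not toward the number of observed endings.
    """
    endings = sum(1 for active in still_active if not active)
    exposure = sum(lifetimes_years)
    return endings / exposure

def half_life_years(hazard):
    """Time after which half of the entries are expected to have ended."""
    return math.log(2) / hazard

# Hypothetical records: years each trademark has existed, and whether it is still live.
lifetimes = [10, 20, 30, 40]
active = [False, False, False, True]
rate = fit_constant_hazard(lifetimes, active)
print(f"hazard {rate:.3f} per year, half-life {half_life_years(rate):.1f} years")
```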

-

In comparison to languages for example, a company either exists or doesn’t, but Proto-Indo-European, albeit incomprehensible to us, still exerts some influence on languages today. ↩

-

I hope that sentence is comprehensible even if I got the grammar wrong. ↩

-

At least in the sense of “more than average.” ↩

-

Is this true? Or do similar people put in similar regions tend to independently evolve similar languages due to all the similarities in their requirements, circumstances, etc.? Eyes have evolved independently a couple of times after all. ↩

-

I don’t know if this is a minority or the majority of cities. ↩

-

At Helios 2’s speed, the time dilation over 1,000 years is only about half an hour (and even at a hundredth of the speed of light, only about 18 days), so no help there. ↩
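The special-relativistic time dilation behind this footnote can be checked with a short sketch. It assumes constant speed and ignores acceleration phases. The shortfall 1 − √(1 − β²) is computed with `expm1`/`log1p` so that it stays accurate for tiny β.

```python
import math

C_KM_PER_S = 299_792.458
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def time_dilation_seconds(speed_km_per_s, years):
    """How much less time passes on board than at home over `years` of travel."""
    beta = speed_km_per_s / C_KM_PER_S
    shortfall = -math.expm1(0.5 * math.log1p(-beta ** 2))   # 1 - sqrt(1 - beta^2)
    return years * SECONDS_PER_YEAR * shortfall

helios_km_per_s = 356_040 / 3600
print(f"Helios 2 speed: {time_dilation_seconds(helios_km_per_s, 1000) / 60:.0f} minutes over 1,000 years")
print(f"c/100: {time_dilation_seconds(C_KM_PER_S / 100, 1000) / 86_400:.1f} days over 1,000 years")
```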

-

By this I mean to cover the possibilities of physical travel in a sleeping state and travel of ems (as Robin Hanson calls them) stored on hard drives of sorts. ↩

-

My favorite is FRI, but I’m still waiting for it to emerge as a suffering-focused AI alignment powerhouse. (My heuristic here is whether an organization seems closer to the category of those that are looking for something to research and pounce on it to make the best of it when they find it, or to the category of those that have a wealth of topics to research and spend considerable time not on searching for topics but on prioritizing between them.) It’ll probably take a while longer to build up such capacity. MIRI seems to be popular at FRI, but it is also (finally) well-funded this year. Are there any other suffering-focused organizations to consider? ↩

-

The name may’ve given it away already. ↩

-

Knowledge of how to reduce suffering at no gain to the suffering reducers themselves is probably not more durable than persuasive texts on ethics (Lukas Gloor gave Animal Liberation as an example). If we (people like me) still had to fight harder for our survival, we might not have had the resources (time, leisure, iodine, etc.) to become more altruistic in the first place. Just as our civilization gives us those resources, it also makes altruism cheaper through economies of scale, gains from trade, transport, communication, etc. If this civilization is destroyed and some people are just struggling to rebuild it, then (1) knowledge whose implementation is feasible today will be infeasible for a long time, throughout which it may get lost, and (2) those who can’t themselves fight or bargain to be included, such as nonhuman animals, will (again) be left behind for the longest time. Neither approach, scientific knowledge nor moral arguments, is likely to be enough. However, a technology that will continue to be feasible, will profit its operator as much as or more than an alternative, and benefits the animals would be the most likely technology to survive. ↩

-

See the paper Surviving global risks through the preservation of humanity's data on the Moon by Turchin and Denkenberger. ↩

-

He’s particularly worried about very bad outcomes from fanaticism, for example about EA ideas, when they are read at a time when people are less aware of biases or more prone to superstitious thinking. But suppose the probability of fanaticism is small compared to that of the texts failing to have any effect (let’s call it a null result), and the outcome of fanaticism may be bad enough to offset that. Then, without an act-omission distinction, fanaticism doesn’t seem clearly worse than simple failure by more than a factor of 1–2 or so, while a null result seems orders of magnitude more likely to me. ↩

-

A test of that might be to ask people from many different backgrounds and cultures to summarize such texts, preferably people who don’t know enough of the culture of the author to adjust for the difference. If the results are similar, they’re more likely to be suitable texts. ↩

-

The criteria of Animal Charity Evaluators should allow them to consider the long term, but that is not what I’ve observed in practice. ↩

Impressive work - I especially liked the graphs. For humane space colonization, rather than photosynthesis, it would be far more efficient to use solar or nuclear electricity for direct chemical synthesis of food or powering electric bacteria. One non-space colonization motivation would include agricultural catastrophes. Outside a catastrophe, it would likely be more fossil energy intensive than growing plants, but maybe not than producing animals. And it would be far less fossil energy intensive than artificial light growing of plants, which people are working on.

Cool, thank you! Have you written about direct chemical synthesis of food or can you recommend some resources to me?

From my book: "Synthetic food production refers to using chemical synthesis. Sugar has been synthesized from noncarbohydrates for decades (Hudlicky et al., 1996). Hudlicky, T., Entwistle, D.A., Pitzer, K.K., Thorpe, A.J., 1996. Modern methods of monosaccharide synthesis from non-carbohydrate sources. Chem. Rev. 96, 1195–1220." I didn't write much because I don't know enough about catalysis to say whether it can be ramped up quickly in a catastrophe. But for space colonization, that is not an issue.

Awesome, thank you!

I am still not finished with the article, but I just want to share a novel approach to prioritization using network theory that I found today, shared by the World Bank. I got quite excited about it.

It is an application of network theory in prioritization of Sustainable Development Goals with counter-intuitive conclusions for people used to reasoning along the lines of cost-benefit estimates, like the ones by Copenhagen Consensus. In short, goals like affordable clean energy and sanitation are central to many of the SDGs and can thus increase probabilities of their achievement. http://sdg.iisd.org/news/world-bank-paper-tests-methodology-for-prioritizing-sdgs-indicators/

"The authors note that the extent to which capacities can be used between SDGs is dependent on their proximity to one another. They define “SDG proximity” as the conditional probability of two indicators being “successful” together, a function of the commonalities shared. For example, the indicator “number of physicians per 1,000 people” is likely to have more commonalities with—and be closer in proximity to—an indicator to measure malnutrition than it would with one on marine protected areas.

If a country performs well on a Goal or indicator with many “proximities,” the country is likely to also achieve progress in others.

The sum of a Goal’s proximities provides its measure of “SDG centrality,” the paper explains. If a country performs well on a Goal or indicator with high centrality, the country is likely to also achieve progress in others. SDGs 7 (affordable and clean energy) and 6 (clean water and sanitation) are calculated to be the most “central,” while Goals 1 (no poverty) and 13 (climate action) are calculated to be the least central.

The highest-ranked indicators in terms of centrality relate to access to electricity, and populations using improved drinking water sources. The least central indicators address varied themes, including gender parity, disaster risk reduction strategies, education, time spent on unpaid domestic work, employment in agriculture, malaria incidence rates, and the number of breeds classified as being not-at-risk of extinction, among others. However, the authors caution against “writing off” an SDG or indicator as irrelevant simply because it features limited connections.

To prioritize actions, the authors cite the importance of an SDG’s “density” in a country. This is defined on the basis of the Goal’s proximities to others in which the country is successful. It will be easier to make progress on a high-density SDG than a low-density SDG. The paper suggests that governments can use the concepts of SDG proximity, centrality and density to redeploy SDG delivery mechanisms or other capacities, in order to maximize impact."
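The quoted summary defines proximity as a conditional probability of joint success and centrality as the sum of proximities, but describes density only loosely. The sketch below fills in the missing details with assumptions borrowed from the "product space" approach in economic complexity research, not from the World Bank paper itself. Proximity is taken to be the minimum of the two conditional probabilities. Density is the proximity-weighted share of related indicators in which a country already succeeds. The success matrix is made up.

```python
def proximity(success, i, j):
    """Minimum of P(success in j | success in i) and P(success in i | success in j)."""
    n_i = sum(row[i] for row in success)
    n_j = sum(row[j] for row in success)
    both = sum(1 for row in success if row[i] and row[j])
    if n_i == 0 or n_j == 0:
        return 0.0
    return min(both / n_i, both / n_j)

def proximity_matrix(success):
    k = len(success[0])
    return [[0.0 if i == j else proximity(success, i, j) for j in range(k)] for i in range(k)]

def centrality(phi):
    """Sum of an indicator's proximities to all others."""
    return [sum(row) for row in phi]

def density(phi, country, i):
    """Proximity-weighted share of i's neighbours in which the country already succeeds."""
    weights = sum(phi[i])
    if weights == 0:
        return 0.0
    return sum(p * s for p, s in zip(phi[i], country)) / weights

# Hypothetical data: rows are countries, columns are indicators (1 = "successful").
success = [
    [1, 1, 0],
    [1, 1, 0],
    [0, 1, 1],
    [1, 0, 0],
]
phi = proximity_matrix(success)
c = centrality(phi)
print("centrality:", [round(x, 2) for x in c])
print("density of indicator 1 for the last country:", round(density(phi, success[3], 1), 2))
```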

I quickly summarized "four modes of thinking" about prioritization here: Sequence Thinking vs Cluster Thinking (both "horizontal") and Analytic Thinking vs. Synthetic/Systemic Thinking (both vertical) and used the above example https://medium.com/giving-on-the-edge/four-modes-of-thinking-about-cause-prioritization-8b9f81abd6b0

Oh, cool! I'm reading that study at the moment. I'll be able to say more once I'm through. Then I'll turn to your article. Sounds interesting!