Linkpost for https://docs.google.com/document/d/1sb3naVl0an_KyP8bVuaqxOBf2GC_4iHlTV-Onx3M2To/edit

This report represents ~40 hours of work by Rose Hadshar in summer 2023 for Arb Research, in turn for Holden Karnofsky in response to this call for proposals on standards.

It’s based on a mixture of background reading, research into individual standards, and interviews with experts. Note that I didn’t ask for permission to cite the expert interviews publicly, so I’ve anonymised them.

I suggest reading the scope and summary and skimming the overview, then only looking at sections which seem particularly relevant to you.

Scope

This report covers:

- Both biosecurity and biosafety:[1]

- Biosecurity: “the protection, control and accountability for valuable biological materials (including information) in laboratories in order to prevent their unauthorized access, loss, theft, misuse, diversion or intentional release.”

- Biosafety: “the containment principles, technologies and practices that are implemented to prevent unintentional exposure to pathogens and toxins or their accidental release”

- Biosecurity and biosafety standards internationally, but with much more emphasis on the US

- Regulations and guidance as well as standards proper. I am using these terms as follows:

- Regulations: rules on how to comply with a particular law or laws. Legally binding

- Guidance: rules on how to comply with particular regulations. Not legally binding, but risky to ignore

- Standards: rules which do not relate to compliance with a particular law or laws. Not legally binding.

- Note that I also sometimes use ‘standards’ as an umbrella term for regulations, guidance and standards.

Summary of most interesting findings

For each point:

- I’ve included my confidence in the claim (operationalised as the probability that I would still believe the claim after 40 hours’ more work).

- I link to a subsection with more details (though in some cases I don’t have much more to say).

The origins of bio standards

- (80%) There were many different motivations behind bio standards (e.g. plant health, animal health, worker protection, bioterrorism, fair sharing of genetic resources…)

- (70%) Standards were significantly reactive to incidents (e.g. lab accidents, terrorist attacks, and epidemics) rather than proactive, though:

- There are exceptions (e.g. the NIH guidelines on recombinant DNA)

- Guidance is often more proactive than standards (e.g. gene drives)

- (80%) International standards weren’t always later or less influential than national ones

- (70%) Voluntary standards seem to have prevented regulation in at least one case (e.g. the NIH guidelines)

- (65%) In the US, it may be more likely that mandatory standards are passed on matters of national security (e.g. FSAP)

Compliance

- (60%) Voluntary compliance may sometimes be higher than mandated compliance (e.g. NIH guidelines)

- (70%) Motives for voluntarily following standards include responsibility, market access, and the spread of norms via international training

- (80%) Voluntary standards may be easier to internationalise than regulation

- (90%) Deliberate efforts were made to increase compliance internationally (e.g. via funding biosafety associations, offering training and other assistance)

Problems with these standards

- (90%) Bio standards are often list-based. This means that they are not comprehensive, do not reflect new threats, hinder innovation in risk management, and fail to recognise the importance of context for risk

- There’s been a partial move away from prescriptive, list-based standards towards holistic, risk-based standards (e.g. ISO 35001)

- (85%) Bio standards tend to lack reporting standards, so it’s very hard to tell how effective they are

- (60%) Standards may have impeded safety work in some areas (e.g. select agent designation as a barrier to developing mitigation measures)

- (75%) Those implementing standards aren’t always sufficiently high powered

- (75%) Researchers view standards as a barrier to research

- (90%) The evidence base for bio standards is poor

- (95%) In the US, there is no single body or legislation responsible for bio standards in general

- Some countries have moved towards a centralised approach (e.g. Canada, China)

- (95%) Many standards are voluntary rather than legally mandated (e.g. BMBL)

- In the US, legal requirements to meet certain standards are a First Amendment issue

- (75%) There is sometimes a conflict of interest where the same body is responsible for funding research and for assessing its safety

Overview of standards in biosafety and biorisk

Background

There are a lot of different biosafety and biorisk standards, but at a very high level:

- What bad things are these standards trying to prevent?

- Biosafety standards are generally trying to protect the safety of lab staff and prevent accidental release.

- Biosecurity standards are generally trying to prevent state or non-state development of bioweapons.

- Other motivations also come up (e.g. plant health, animal health, fair sharing of genetic resources…)

- What activities do these standards cover?

- Who conducts biological research in labs, on what, and how

- The storage, ownership, sale and transportation of biological agents

- Do these standards cover all actors undertaking those activities?

- Laws generally cover all actors undertaking the activities

- Standards are generally voluntary, though some funding bodies make compliance with standards a mandatory condition of funding

The main standards in biosafety and biorisk

Internationally:

- The Biological Weapons Convention (BWC, 1972) prohibits the development of bioweapons

- The Australia Group (1985) sets standards for the international sale of dual use equipment and transport of pathogens.

- The WHO Laboratory Biosafety Manual (LBM, 1983) is a voluntary biosafety standard (there is also more recent WHO guidance on biosecurity).

- The ISO 35001 (2019) is a voluntary standard for biosafety and biosecurity (though, according to a biorisk expert involved in setting this standard up, it’s not clear that there’s much adoption, and the standard is expensive to access).

In the US:

- The main standards are:

- The Biosafety in Microbiological and Biomedical Laboratories (BMBL, 1984), which is a voluntary standard for laboratory biosafety.

- The select agent regulations, which are mandatory regulations with a statutory basis, and govern who can use particularly dangerous agents and how.

- The NIH Guidelines for Research Involving Recombinant DNA Molecules (1976) are noteworthy for being among the first voluntary standards, though they aren’t very significant today.

- There is also more recent voluntary guidance on:

- DNA synthesis screening (Screening Framework Guidance for Providers of Synthetic Double-Stranded DNA, 2010)

- Dual Use Research of Concern (Policy for Oversight of Life Sciences Dual Use Research of Concern, 2012 and Policy for Institutional Oversight of Dual Use Research of Concern, 2014)

- GoF research (P3CO, 2017)

- Also note that there are two bills currently under consideration (see here for a brief introduction).

Other countries

- China and Russia were both quite slow to develop biosafety standards.

- The first biosafety regulations in Russia were in 1993.

- China’s first regulations on biosafety in particular were in the early 2000s.

- There’s been a move towards overarching biosafety and biosecurity acts which give legislative footing to standards.

- Canada (2009) and China (2020) both have this.

- Canada has one of the best systems in the world, according to a biorisk expert I spoke with.

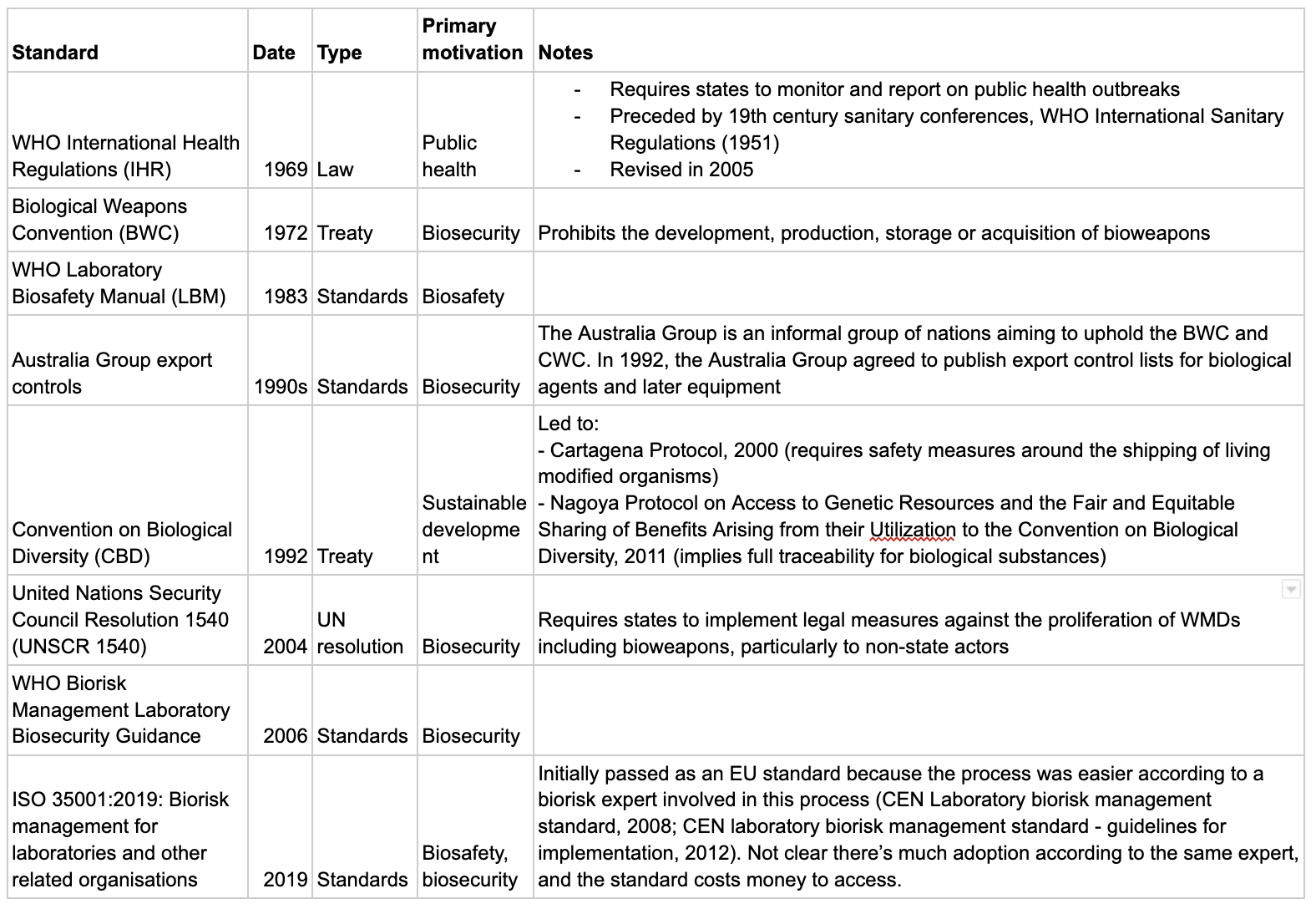

The tables below go into more detail. For a full timeline of biosafety and biosecurity standards, see here.

International standards

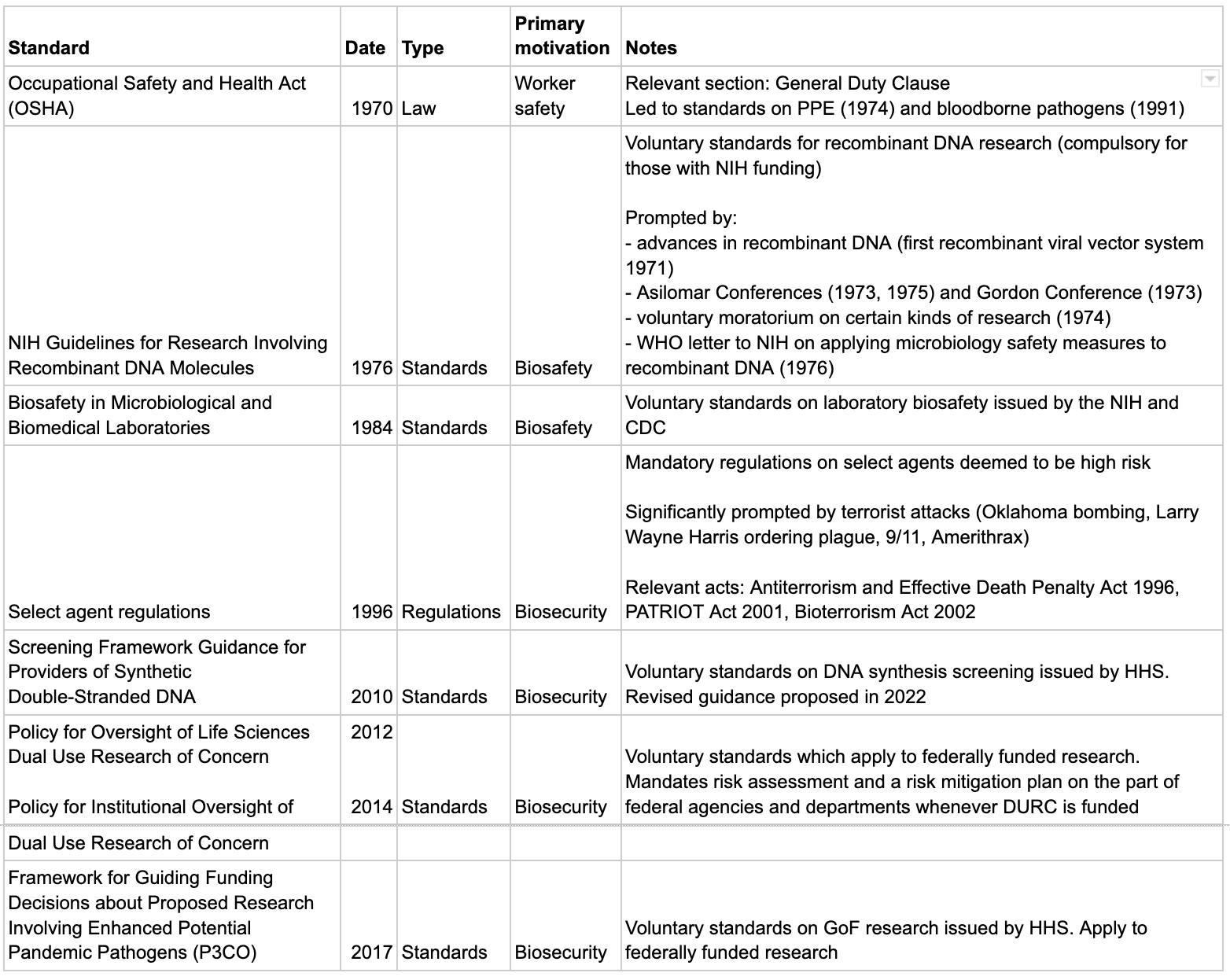

US standards

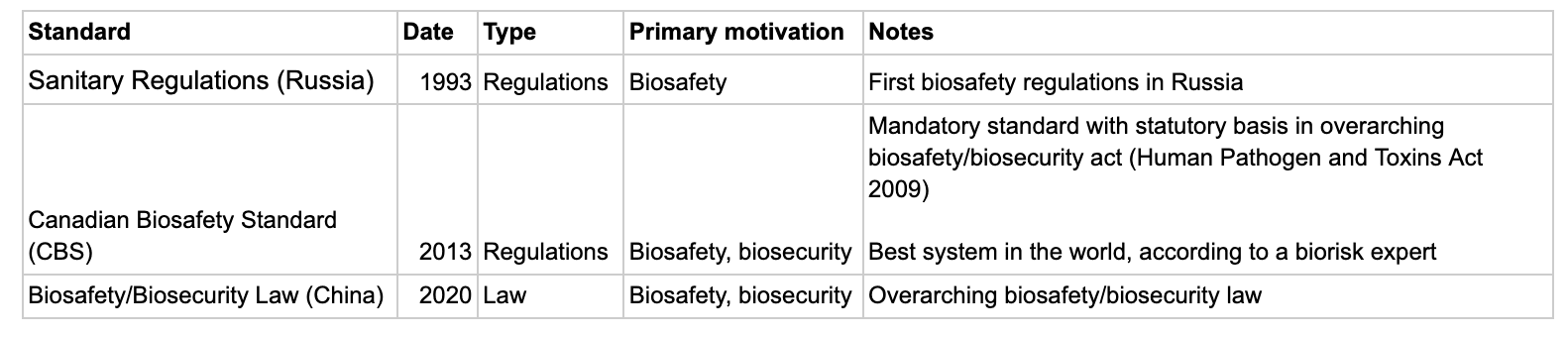

Notable standards in other countries

The origins of biosafety and biosecurity standards

Questions from Holden’s call this section relates to:

- What’s the history of the standard? How did it get started?

- How did we get from the beginnings to where we are today?

- If a standard aims to reduce risks, to what extent did the standard get out ahead of/prevent risks, as opposed to being developed after relevant problems had already happened?

- Was there any influence of early voluntary standards on later government regulation?

There were many different motivations behind bio standards

At a high level:

- Biosafety standards are generally trying to protect the safety of lab staff and prevent accidental release.

- Biosecurity standards are generally trying to prevent state or non-state development of bioweapons.

But other motivations have also led to standards with a bearing on biological research. For example:

- Worker safety: in the US, the Occupational Safety and Health Act (OSH Act) of 1970 was motivated by worker safety in general, but some of its provisions were relevant to biological research.

- Plant protection: one of the earliest relevant standard-setting organisations was the International Plant Protection Convention (IPPC), founded in 1951. Some of their standards impact biological research involving plants.[2]

- Animal health: the World Organization for Animal Health (OIE) sets standards relating to animal health and zoonoses as well as animal welfare, animal production, and food safety.[3]

- Fair sharing of genetic resources: the Nagoya Protocol on Access to Genetic Resources and the Fair and Equitable Sharing of Benefits Arising from their Utilization to the Convention on Biological Diversity of 2011 was not motivated by concerns about biosafety or biosecurity, but its provisions imply full traceability on the access and use of some biological materials (genetic resources).[4]

Standards were significantly reactive rather than proactive

Standards have been significantly reactive to:

- Lab accidents

- I haven’t found evidence of lab accidents being a direct cause of particular biosafety standards, but it is the case that both harm to workers and accidental release from labs were happening before standards were introduced, so the standards were not pre-emptive.

- Terrorist attacks, in the case of biosecurity

- In the US, the select agent regulations were first established via the Antiterrorism and Effective Death Penalty Act (1996). The act overall was in significant part a reaction to the Oklahoma City bombing of 1995.[5] The select agent provisions in particular were in part a response to another incident in 1995, where white supremacist Larry Wayne Harris successfully ordered Yersinia pestis by mail.[6]

- 9/11 and Amerithrax prompted the PATRIOT Act (2001) and the Bioterrorism Act (2002), which led to the establishment of FSAP in its current form in 2003.[7]

- Epidemics

However:

- There are exceptions, where standards were developed in anticipation of potential risks.

- The most notable example is the NIH Guidelines for Research Involving Recombinant DNA Molecules, which were developed in anticipation of low probability extreme risks.[10]

- Guidance is often more proactive than standards.

- For example, there was guidance on gene drives before they were successfully built, but there still aren’t standards, according to one biorisk expert.

International standards weren’t always later or less influential than national ones

- The WHO LBM (1983) was published a year before the US BMBL (1984).

- There are examples of international standards prompting/informing national ones:

- There is competition between the WHO LBM and the BMBL.

- According to one biorisk expert I spoke with, this increases the risk of confusion and gaps, and happened because the US rushed ahead of the rest of the world, who weren’t willing/able to follow.

- According to two biorisk experts I spoke with, it’s useful to have international standards as some countries will never adopt US standards on principle.

Voluntary standards seem to have prevented regulation in at least one case

The NIH guidelines were widely seen by critics and proponents as preventing future regulation, and this was one of the key motivations of the scientists who organised the research pause and Asilomar.[13]

In the US, it may be more likely that mandatory standards are passed on matters of national security

Most bio-related standards are voluntary in the US, with the exception of FSAP. According to an expert in standards I spoke with, one of the reasons this was practicable was that there’s federal authority over national security.

Compliance

Question from Holden’s call this section relates to: “What sorts of companies (and how many/what percentage of relevant companies) comply with what standards, and what are the major reasons they do so?”

Voluntary compliance may sometimes be higher than mandated compliance

In the case of the NIH guidelines, compliance may have been higher among commercial companies (who complied voluntarily) than among NIH-funded bodies (who were mandated to comply as a condition of their funding):[14]

- Paul Berg, one of the organisers of Asilomar, believed this was the case.

- Commercial companies likely had access to more resources and were more concerned by liability than academic counterparts.

Motives for voluntarily following standards include responsibility, market access, and the spread of norms via international training

I formed this impression from talking with a biorisk expert, and a biologist involved in setting up the Asilomar conference.

Voluntary standards may be easier to internationalise than regulation

Countries’ legal systems differ, and law is often slow and costly to enact. But voluntary standards can be adopted more quickly.

Examples of voluntary standards being adopted internationally:

- The WHO LBM is used internationally. To my knowledge, there are no equivalent internationally adopted laws on lab biosafety.

- The US BMBL, Canadian Biosafety Standard (CBS) and AU/NZ standards are also all used internationally.[15]

- Recombinant DNA:

- The 1974 voluntary pause on recombinant DNA research was observed internationally.[16]

- Scientists from all over the world were invited to Asilomar in 1975. According to one of the conference organisers, those people went back to their countries and helped set up regulatory regimes which were consistent with the Asilomar recommendations. The conference organiser believes that no countries deviated from these recommendations apart from Russia as part of their bioweapons programme.

Deliberate efforts were made to increase compliance internationally

According to a biorisk expert I spoke with, part of why biosafety compliance is high internationally is that a lot of funding was put into building regional and national biosafety associations. This expert says that the motivation for this was non-proliferation of dangerous biological agents.

Examples of deliberate efforts to increase compliance:

- According to the same expert, Trevor Smith at Global Affairs Canada and the US DoD and State Department have provided a lot of funding for regional biosafety associations.

- The Canadian Association for Biological Safety assisted Russia to train instructors for biosafety programmes in 2008. Canada has also been involved in other assistance with improving Russian standards, and in translating US and WHO biosafety guidelines into Russian.[17]

Problems with biosafety and biosecurity standards

Question from Holden’s call for proposals which this section relates to: Does the standard currently seem to achieve its intended purpose? To the extent it seeks to reduce risks, is there a case that it’s done so?

Bio standards are often list-based

Many bio standards are based on lists of agents to which different standards of safety and security apply. There are a number of possible problems with this approach:

- Lists aren’t comprehensive.

- Lists don’t automatically cover emerging threats.

- According to a biorisk expert I spoke with, the ability to make new structures (especially in future with AI) makes list-based approaches particularly inappropriate for biological research.

- List-based approaches tend towards tick-box exercises and may militate against careful thinking and innovation in risk management.[18]

- According to a biorisk expert I spoke with, list-based approaches often impose standards independently of context, when scientists know that there’s a big difference in risk depending on where and how the research is conducted.

There’s been a partial move away from prescriptive, list-based standards towards holistic, risk-based standards. The ISO 35001 is an example of this.

Bio standards tend to lack reporting standards, so it’s very hard to tell how effective they are

- Biorisk expert: “My main hot take is a lot of this field is flying ~blind due to the absence of any outcome data (e.g. how many incidents per X lab year or equivalent). The main push I advocate for is reporting standards rather than guessing what may or may not help (then having little steer post implementation whether you've moved the needle).”

- Palmer et al, 2015: criticises underdeveloped metrics[19]

- Farquhar et al: calls for centrally commissioned absolute risk assessments[20]

Standards may have impeded safety work in some areas

For example, some scientists have argued that in the US, select agent designation creates a barrier to developing mitigation measures.[21]

Those implementing standards aren’t always sufficiently high powered

- According to a biorisk expert, biosafety isn’t sufficiently integrated with senior management.

- This expert was involved in setting up the ISO standard, and says that one of the aims of the ISO standard is to address this.

- According to a biorisk expert I spoke with, biosafety compliance in the US defaults to something list-based even though the standards themselves are risk-based, because the inspection workforce isn’t sophisticated enough for a more consultative approach as in the UK[22] or Canada.

Researchers view standards as a barrier to research

- This is according to a biorisk expert I spoke with.

- They also note that currently biosafety isn’t a technical field in its own right where you can publish papers and make spin-off companies

The evidence base for bio standards is poor

According to a biorisk expert, there often isn’t good evidence that a particular standard is risk reducing. This seems like a somewhat structural problem, related to:

- The fact that standards tend to lack reporting standards

- It being hard to test low probability extreme risks experimentally.

In the US, there is no single body or legislation responsible for bio standards in general

According to a US biorisk expert I spoke with, this leads to gaps in what’s regulated and a lack of leadership.

Some countries have moved towards a centralised approach, for example Canada (Human Pathogens and Toxins Act 2009) and China (Biosafety/Biosecurity Law 2020).

Many standards are voluntary rather than legally mandated

Examples:

- Internationally: the WHO LBM, the Australia Group export controls, ISO 35001

- In the US: the NIH guidelines on recombinant DNA, the BMBL, P3CO

The upshot of this is that it’s perfectly legal, for example, for a rich person in the US to build pathogenic flu in their basement, as long as it’s for peaceful purposes.

There is sometimes a conflict of interest where the same body is responsible for funding research and for assessing its safety

Examples:

- The NIH guidelines on Recombinant DNA 1976 (issued by NIH and applied to NIH fundees)[23]

- Policy for Oversight of Life Sciences Dual Use Research of Concern 2012 (issued by HHS and applied to HHS fundees)

- Policy for Institutional Oversight of Dual Use Research of Concern 2014 (issued by HHS and applied to HHS fundees)

- Framework for Guiding Funding Decisions about Proposed Research Involving Enhanced Potential Pandemic Pathogens (P3CO) 2017 (issued by HHS and applied to HHS fundees)

Questions this report doesn’t address

Holden’s call for proposals lists a series of questions which he’s interested in answers to. This report gives partial answers to some of those questions above, and doesn’t address the following questions at all:

- How is the standard implemented today? Who writes it and revises it, and what does that process look like?

- How involved are/were activists/advocates/people who are explicitly focused on public benefit rather than profits in setting standards? How involved are companies? How involved are people with reputations for neutrality?

- Are there audits required to meet a standard?

- If so, who does the audits, and how do they avoid being gamed?

- How much access do they get to the companies they’re auditing?

- How good are the audits? How do we know?

- What other measures are taken to avoid standards being “gamed” and ensure that whatever risks they’re meant to protect against are in fact protected against?

- How costly and difficult is it to comply with the standards?

- What happens if a company stops complying?

(Very) select bibliography

I haven’t made a proper bibliography.

This biorisk standards timeline contains information on all of the standards mentioned in this report, with quotes and links. I’d recommend it as a reference.

Some useful background articles:

- Connell, ‘Biologic agents in the laboratory—the regulatory issues’, Federation of American Scientists, 2011.

- Salerno and Gaudioso, ‘Introduction: The Case for Biorisk Management’, in Salerno and Gaudioso (eds), Laboratory biorisk management: biosafety and biosecurity, 2015.

- Beeckman and Rüdelsheim, ‘Biosafety and Biosecurity in Containment: A Regulatory Overview’, Frontiers in Bioengineering and Biotechnology, 2020.

[1]
WHO definitions, quoted from Connell, ‘Biologic agents in the laboratory—the regulatory issues’, Federation of American Scientists, 2011.

[2]
“[T]he International Plant Protection Convention (IPPC) in 1951, a multilateral treaty deposited with the Food and Agriculture Organization of the United Nations (FAO). The IPPC is the standard setting organization for the “Agreement on the Application of Sanitary and Phytosanitary Measures” (the SPS Agreement) of the World Trade Organization (WTO). Specific “International Standards for Phytosanitary Measures” (ISPMs) cover topics such as lists of quarantine organisms, pest risk analysis, or the design of plant quarantine stations, all of which are relevant when applying plant pests under containment in a laboratory or plant growing facility (FAO/IPPC, 2019a).” Beeckman and Rüdelsheim, ‘Biosafety and Biosecurity in Containment: A Regulatory Overview’, Frontiers in Bioengineering and Biotechnology, 2020.

[3]
“[T]he World Organization for Animal Health (OIE - Office International des Epizooties, est. 1924) is since 1998 the WTO reference organization for standards relating to animal health and zoonoses (WTO, 2019). The “Terrestrial Animal Health Code” and “Aquatic Animal Health Code” were developed with the aim of assuring the sanitary safety of international trade in terrestrial animals and aquatic animals, respectively, as well as their products. Traditionally addressing animal health and zoonoses only, these codes have been expanded to also cover animal welfare, animal production, and food safety in recent updates (OIE, 2019).” Beeckman and Rüdelsheim, ‘Biosafety and Biosecurity in Containment: A Regulatory Overview’, Frontiers in Bioengineering and Biotechnology, 2020.

[4]
“The “Nagoya Protocol on Access to Genetic Resources and the Fair and Equitable Sharing of Benefits Arising from their Utilization to the Convention on Biological Diversity” (SCBD, 2011) states that when benefits (either monetary or non-monetary) are arising from the utilization of genetic resources (e.g., in research) as well as during subsequent commercialization, that these benefits “shall be shared in a fair and equitable way with the Party providing such resources that is the country of origin of such resources or a Party that has acquired the genetic resources in accordance with the Convention”. Although in principle not related to biosafety, the Nagoya Protocol implies that full traceability on when and where a certain genetic resource (i.e., biological material, or in some case arguably even digital sequence information) was first accessed, as well as how it was subsequently used, is maintained.” Beeckman and Rüdelsheim, ‘Biosafety and Biosecurity in Containment: A Regulatory Overview’, Frontiers in Bioengineering and Biotechnology, 2020.

[5]
See ‘Historical origins of current biosecurity regulations’ in Zelicoff, ‘Laboratory biosecurity in the United States: Evolution and regulation’, Ensuring National Biosecurity, 2016.

[6]
“A former Aryan Nations member illegally obtained a bacterium that causes plague (Yersinia pestis) by mail order. As a result, Congress passed Section 511 of the Antiterrorism and Effective Death Penalty Act of 1996 requiring HHS to publish regulations for the transfers of select agents that have the potential to pose a severe threat to public health and safety (Additional Requirements for Facilities Transferring or Receiving Select Agents, 42 CFR Part 72.6; effective April 15, 1997).” https://www.selectagents.gov/overview/history.htm

[7]
“Following the anthrax attacks of 2001 that resulted in five deaths, Congress significantly strengthened oversight of select agents by passing the USA PATRIOT Act in 2001 and the Public Health Security and Bioterrorism Preparedness and Response Act of 2002 requiring HHS & USDA to publish regulations for possession, use, and transfer of select agents (Select Agent Regulations, 7 CFR Part 331, 9 CFR Part 121, and 42 CFR Part 73; effective February 7, 2003).” https://www.selectagents.gov/overview/history.htm

[8]
“Perhaps the most important legacy of the SARS epidemic, and to a lesser extent the H5N1 outbreak, was the sense of urgency it gave to finalising the updates to the 1969 International Health Regulations (IHRs)...Arguably, as a direct result of perceived reluctance on the part of the Chinese authorities to be transparent in the early stages of the SARS outbreak, the revised IHRs state that the WHO can collect, analyse and use information “other than notifications or consultations” including from intergovernmental organisations, nongovernmental organisations and actors, and the Internet.” McLeish, ‘Evolving Biosecurity Frameworks’, in Dover, Goodman and Dylan (eds), Palgrave Handbook on Intelligence and Security Studies, 2017.

[9]
“References in the literature and presentations at regional symposia refer to the SARS outbreak and resulting laboratory-acquired infections as the prime motivator for implementing legislation, developing enhanced safety programs, and constructing modern containment laboratories that meet or exceed international guidelines. To avoid the recurrence of biological risks posed by SARS and MERS, China has also learned from the experience of other countries and organisations, with the BMBL being one main knowledge resources.” Johnson and Casagrande, ‘Comparison of International Guidance for Biosafety Regarding Work Conducted at Biosafety Level 3 (BSL-3) and Gain-of-Function (GOF) Experiments’, Applied Biosafety, 2016.

[10]
Shortly after recombinant DNA became technologically possible, scientists self-organised a pause on all research involving recombinant DNA until the potential risks were better understood. The Asilomar conference (1975) brought together the relevant scientists internationally and recommended a gradual introduction of recombinant DNA research with review for potential risks. These recommendations were taken up by the NIH who issued their Guidelines for Research Involving Recombinant DNA Molecules in 1976. Both the research pause and the spirit of the Asilomar recommendations were widely followed internationally, according to one of the conference organisers. As it transpired that recombinant DNA research was not particularly risky, the guidelines were gradually relaxed, as their instigators had intended. See Grace, ‘The Asilomar Conference: A Case Study in Risk Mitigation’, MIRI, 2015 for an introduction.

[11]
“The original BSATs were selected (i.e., Select Agents) from the Australia Group List of Human and Animal Pathogens and Toxins for Export Control (Australia Group List, 2014) with input from experts from inside and outside the government.” Morse, ‘Pathogen Security - Help or Hindrance?’, Frontiers in Bioengineering and Biotechnology, 2014.

[12]
“Biosecurity legislation in China started comparatively late and the relevant legislation was largely driven by China joining international conventions”. Qiu and Hu, ‘Legislative Moves on Biosecurity in China’, Biotechnology Law Report, 2021.

[13]
“It is often suggested (by both attendees and critics) that a large motive of the Asilomar Conference’s attendees was to avoid regulation of their new technology by outsiders.” Grace, ‘The Asilomar Conference: A Case Study in Risk Mitigation’, MIRI, 2015. One of the organisers told me in a call that the Asilomar recommendations were partly a defensive manoeuvre to prevent the issue from being handed to the public or Congress.

“The RAC's existence obviated the need for more restrictive governmental legislation”. Wivel, ‘Historical Perspectives Pertaining to the NIH Recombinant DNA Advisory Committee’, Human Gene Therapy, 2014.

[14]
“Many people were critical of the guidelines because the guidelines were only imposed on those who had funding from the federal government, and so did not impact the commercial sector. There was a lot of concern whether the industry was going to bypass the constraints, as mild as they were. Berg never worried about industry. As it turned out, industry conformed more so than almost any academic center. “Berg believes, and thinks many of his colleagues agreed, that the commercial sector would be at great risk if they obviously and openly flaunted the guidelines because their plants and research labs are in amongst communities. Furthermore, it would be to their considerable detriment if their local communities learned that any of their recombinant DNA experiments could be dangerous. Berg speculates that they would have been picketed and closed down if it became known that they had avoided the guidelines. It was much more economically feasible for them to build the most secure facilities that anybody can think of.”” Interior quote from a conversation between Grace and Berg. Grace, ‘The Asilomar Conference: A Case Study in Risk Mitigation’, MIRI, 2015.

“Many people thought the commercial sector would be a problem because the guidelines were not imposed on them, so they were at liberty to ignore them. This was not the case: the commercial sector had strong incentives to follow the guidelines, and more money to invest in safety than academia had. Consequently, they heeded the guidelines more rigorously than most academic organizations.” Grace, ‘The Asilomar Conference: A Case Study in Risk Mitigation’, MIRI, 2015.

[15]
“There is a lack of European-wide harmonized practical guidance on how to implement the European Directives on biological agents and GMMs. A few EU Member States have developed their own national guidance based on the EC Directives. In other cases, these gaps are filled by e.g. US Biosafety in Microbiological and Biomedical Laboratories (BMBL) and Canadian guidelines”. “BSL-3 laboratories and safety programs in Hong Kong are regularly if not annually certified by independent third-party certifiers using criteria established in the BMBL or AU/NZ BSL-3 standards.” “To avoid the recurrence of biological risks posed by SARS and MERS, China has also learned from the experience of other countries and organizations, with the BMBL being one main knowledge resources.” Johnson and Casagrande, ‘Comparison of International Guidance for Biosafety Regarding Work Conducted at Biosafety Level 3 (BSL-3) and Gain-of-Function (GOF) Experiments’, Applied Biosafety, 2016.

- ^

“While there was some disagreement about this action, the moratorium was universally adhered to.” Grace, ‘The Asilomar Conference: A Case Study in Risk Mitigation’, MIRI, 2015. One of the organisers also said this to me in a call.

- ^

“In 2008, Russia received assistance from the Canadian Association for Biological Safety to train instructors for biosafety programs. WHO and US biosafety documents were translated and used for training purposes”. “Through this partnership [the Global Partnership Against the Spread of Weapons and Materials of Mass Destruction], Canada provided Russia assistance in improving biosafety and biosecurity standards. Canada has translated biosafety training programs and documents into Russian for more widespread use”. National biosafety systems: Case studies to analyze current biosafety approaches and regulations for Brazil, China, India, Israel, Pakistan, Kenya, Russia, Singapore, the United Kingdom, and the United States, UPMC Center for Health Security, 2016.

- ^

“We believe that the bioscience community depends too heavily on predefined solutions sets, known as agent risk groups, biosafety levels, and biosecurity regulations. This dependence has relegated laboratory biosafety and biosecurity to the administrative basements of bioscience facilities. These generic agent risk groups, biosafety levels, and biosecurity regulations have almost eliminated the pursuit of the intellectually rigorous, risk-based assessments and solutions of the 1960s—when the field was in its infancy. Instead, we now often have complacency in laboratory biosafety and biosecurity, and the general absence of comprehensive management systems to mitigate these risks”. Salerno and Gaudioso, ‘Introduction: The Case for Biorisk Management’, in Salerno and Gaudioso (eds), Laboratory biorisk management: biosafety and biosecurity, 2015.

- ^

Dettmann, Ritterson, Lauer and Casagrande, ‘Concepts to Bolster Biorisk Management’, Health Security, 2022.

- ^

Dettmann, Ritterson, Lauer and Casagrande, ‘Concepts to Bolster Biorisk Management’, Health Security, 2022.

- ^

“in a 2010 Perspectives piece in Nature Reviews Microbiology by Casadevall and Relman, the authors question the utility of the SATL and highlight the following paradox: if an agent lacks countermeasures, it is more likely to be included on the SATL; yet the increased regulatory burden placed on research with the agent might in turn prevent the discovery and development of effective countermeasures.” Connell, ‘Biologic agents in the laboratory—the regulatory issues’, Federation of American Scientists, 2011.

- ^

“Biosafety officers receive accreditation from the Institute of Safety in Technology and Research (ISTR), which is a membership organization in the UK for safety professional”. Gronvall, Shearer and Collins, ‘National biosafety systems: Case studies to analyze current biosafety approaches and regulations for Brazil, China, India, Israel, Pakistan, Kenya, Russia, Singapore, the United Kingdom, and the United States’, UPMC Center for Health Security, 2016.

- ^

“The RAC has created another unusual conundrum concerning conflict of interest (Walters, 1991). As it exists, the RAC is an advisory committee to the NIH and was originally charged with fulfilling its role in a critical and independent manner. Yet the RAC sponsor is the chief funding agency for biomedical research in the United States. This has thrust the agency into the position of funding research on the one hand and simultaneously conducting quasi-regulatory oversight on certain aspects of that research. However, an objective observer would have to say that the two areas of responsibility have been carried out with no evidence of significant compromise.” Wivel, ‘Historical Perspectives Pertaining to the NIH Recombinant DNA Advisory Committee’, Human Gene Therapy, 2014.

This is a great report! Adding it to my to-do list! If I have more questions how would you prefer me to contact you? Feel free to reply with a DM.

Glad it's relevant for you! For questions, I'd probably just stick them in the comments here, unless you think they won't be interesting to anyone but you, in which case DM me.

Wow! This is a great report. So much information.

It's also quite scary, and IMHO fully justifies Biosecurity and Biosafety being priorities for EA. We do know what regulations, standards and guidance should look like, because in fields like engineering there are very clear ASTM or ISO rules, and as an engineer, you can be put in jail or sued if you don't follow them. I realise that these have been developed over literally centuries of experience and experiment - yet given the severity of biohazards, it's not OK to just say "well, we did our best".

I'm particularly worried by two points you make, which are very telling:

It really does seem like the people working in the field understand the seriousness of this (as they would) and might even welcome regulation which was stronger if it were based on good science and more consistently applied globally. For example, if ISO 35001 is truly based on the best information available, it would seem very valuable to work towards getting this applied globally, including finding funding to enable labs in poorer countries to fulfil the obligations.

You write that many people in the field view standards as an obstacle to research. In my experience, everyone in R&D has this impression to some extent. People working with explosive solvents complain about not being able to create explosive atmospheres. But mostly this is just words - deep down, they are happy that they are "forced" to work safely, even if sometimes the layers of safety feel excessive. And this is especially true when everyone is required to follow the same safety rules.

This is not my field of expertise at all, but I know quite a bit about industrial chemical engineering safety, so I'm kind of looking at this with a bias. I'd love to hear what true experts in this field think!

But thank you Rose for this really useful summary. I feel so much wiser than I was 30 minutes ago!

Thanks for the kind words!

Can you say more about how either of your two worries work for industrial chemical engineering?

Also curious if you know anything about the legislative basis for such regulation in the US. My impression from the bio standards in the US is that it's pretty hard to get laws passed, so if there are laws for chemical engineering it would be interesting to understand why those were plausible whereas bio ones weren't.

Hi Rose,

To your second question first: I don't know if there are specific laws related to e.g. ASTM standards. But there are laws related to criminal negligence in every country. So if, say, you build a tank and it explodes, and it turns out that you didn't follow the appropriate regulations, you will be held criminally liable - you will pay fines and potentially end up in jail. You may believe that the approach you took was equally safe and/or that it was unrelated to the accident, but you're unlikely to succeed with this defence in court - it's like arguing "I was drunk, but that's not why I crashed my car."

And not just you, but a series of people, perhaps up to and including the CEO, and also the Safety Manager, will be liable. So these people are highly incentivised to follow the guidelines. And so, largely independently of whether there are actually criminal penalties for not following the standards even when there is no accident, the system kind of polices itself. As an engineer, you just follow the standards. You do not need further justification for a specific safety step or cost than that it is required by the relevant standard.

But I'm very conscious that the situations are very different. In engineering, there are many years of experience, and there have been lots of accidents from which we've learned and on the basis of which the standards have eventually been modified. And the risk of any one accident, even the very worst kind like a building collapse or a major explosion, tends to be localised. In biohazard, we can imagine one incident, perhaps one that never happened before, which could be catastrophic for humanity. So we need to be more proactive.

Now to the specific points:

Reporting:

For engineering (factories in general), there are typically two important mechanisms.

Proactive vs. Reactive:

I think the idea is clear. I am sure that someone, probably many people, has done this for bio-hazards and bio-security. But what is maybe different is that for engineers, there is such a wealth of documentation and examples out there, and such a number of qualified, respected experts, that when doing this risk analysis, we have a lot to build on and we're not just relying on first principles, although we use that too.

For example, I can go on the internet and find some excellent guides for how to run a good HAZOP analysis, and I can easily get experienced safety experts to visit my pilot-plant and lead an external review of our HAZOP analysis, and typically these experts will be familiar with the specific risks we're dealing with. I'm sure people run HAZOPs for biolabs too (I hope!!) but I'm not sure they would have the same quality of information and expertise available to help ensure they don't miss anything.

From your analysis of biolabs, it feels much more haphazard. I'm sure every lab manager means well and does what they can, but it's so much easier for them to miss something, or maybe just not to have the right qualified person or the relevant information available to them.

What I've described above is not a check-list, but it is a procedure that works in widely different scenarios, where you incorporate experience, understanding and risk-analysis to create the safest working environment possible. And even if details change, you will find more or less the same approach anywhere around the world, and anyone, anywhere, will have access to all this information online.

And ... despite all this, we still have accidents in chemical factories and buildings that collapse. Luckily these do not threaten civilisation the way a bio-accident could.

Hope this helps - happy to share more details or answer questions.

Thanks, this is really interesting.

One follow-up question: who are safety managers? How are they trained, what's their seniority in the org structure, and what sorts of resources do they have access to?

In the bio case it seems that in at least some jurisdictions and especially historically, the people put in charge of this stuff were relatively low-level administrators, and not really empowered to enforce difficult decisions or make big calls. From your post it sounds like safety managers in engineering have a pretty different role.

Indeed,

A Safety Manager (in a small company) or a Safety Department (in a larger company) needs to be independent of the department whose safety they monitor, so that they are not conflicted between Safety and other objectives like, say, an urgent production deadline (of course, in reality they will know people and so on, it's never perfect). Typically, they will have reporting lines that meet higher up (e.g. CEO or Vice President), and this senior manager will be responsible for resolving any disagreements. If the Safety Manager says "it's not safe" and the production department says "we need to do this," we do not want it to become a battle of wills. Instead, the Safety Manager focuses exclusively on the risk, and the senior manager decides if the company will accept that risk. Typically, this would not be "OK, we accept a 10% risk of a big explosion" but rather finding a way to enable it to be done safely, even if it meant making it much more expensive and slower.

In a smaller company or a start-up, the Safety Manager will sometimes be a more experienced hire than most of the staff, and this too will give them a bit of authority.

I think what you're describing as the people "put in charge of this stuff" are probably not the analogous people to Safety Managers. In every factory and lab, there would be junior people doing important safety work. The difference is that in addition to these, there would be a Safety Manager, one person who would be empowered to influence decisions. This person would typically also oversee the safety work done by more junior people, but that isn't always the case.

Again, the difference is that people in engineering can point to historical incidents of oil-rigs exploding with multiple casualties, of buildings collapsing, ... and so they recognise that getting Safety wrong is a big deal, with catastrophic consequences. If I compare this to, say, a chemistry lab, I see what you describe. Safety is still very much emphasised and spoken about, nobody would ever say "Safety isn't important", but it would be relatively common for someone (say the professor) to overrule the safety person without necessarily addressing the concerns.

Also in a lab, to some extent it's true that each researcher's risks mostly impact themselves - if your vessel blows up or your toxic reagent spills, it's most likely going to be you personally who will be the victim. So there is sometimes a mentality that it's up to each person to decide what risks are acceptable - although the better and larger labs will have moved past this.

I imagine that most people in biolabs still feel like they're in a lab situation. Maybe each researcher feels that the primary role of Safety is to keep them and their co-workers safe (which I'm sure is something they take very seriously), but they're not really focused on the potential of global-scale catastrophes which would justify putting someone in charge.

I again emphasise that most of what I know about safety in biolabs comes from your post, so I do not want to suggest that I know, I'm only trying to make sense of it. Feel free to correct / enlighten me (anyone!).