Summary

Sentience Institute is a 501(c)(3) nonprofit think tank aiming to maximize positive impact through longtermist research on social and technological change, particularly moral circle expansion. Our main focus in 2022 has been conducting high-quality empirical research, primarily surveys and behavioral experiments, to build the field of digital minds research (e.g., How will humans react to AI that seems agentic and intentional? How will we know when an AI is sentient?). Our two most notable publications this year are a report and open-access data for our 2021 Artificial Intelligence, Morality, and Sentience (AIMS) survey and our paper in Computers in Human Behavior on “Predicting the Moral Consideration of Artificial Intelligences.” We have substantial room for more funding to continue and expand this work in 2023 and beyond.

AIMS is the first nationally representative longitudinal survey of attitudes on these topics. Even today with limited AI capabilities, we are already seeing the social relationship we have with these digital minds bearing on public discourse, funding, and other events in the trajectory of AI. The Computers in Human Behavior (CiHB) predictors paper is a deep dive into demographic and psychological predictors of AI attitudes, so we can understand why certain people view these topics in the way that they do. This follows up on our 2021 conceptual paper in Futures and literature review in Science and Engineering Ethics. We have also been working to build this new field by hosting a podcast, co-organizing an AI summit at the University of Chicago, and holding a regular intergroup call between organizations working on this topic (e.g., Center on Long-Term Risk, Future of Humanity Institute).

The urgency of building this field has been underscored in two ways in 2022. First, AIs are rapidly becoming more advanced, as illustrated by the amazing performance of image generation models (OpenAI’s DALL-E 2, Midjourney, and Stable Diffusion), DeepMind’s general-purpose transformer Gato, and the high-performing language models Chinchilla and Google’s PaLM. Second, the topic of AI sentience had one of its first spikes in the mainstream news cycle: initially in February, when OpenAI chief scientist Ilya Sutskever tweeted “it may be that today's large neural networks are slightly conscious,” and then much more prominently when Google engineer Blake Lemoine was fired after claiming that the company’s language model LaMDA is “sentient.”

In 2023, we hope to continue our empirical research as well as develop these findings into a digital minds “cause profile,” making the case for it as a highly neglected, tractable cause area in effective altruism with an extremely large scale. The perceptions, nature, and effects of digital minds are quickly becoming an important part of the trajectory of advanced AI systems and seem like they will continue to be so in medium- and long-term futures. This cause profile would be in part a more rigorous version of our blog post, “The Importance of Artificial Sentience.”

2022 has been a challenging year for effective altruism funding. There was already an economic downturn when this month’s collapse of FTX, one of the two largest EA funders, left many EA organizations like us scrambling for funding and many other funders stretched thin. We expect substantially less funding in the coming months, and we need your help to continue work in this time-sensitive area, perhaps more than in any other year since we were founded in 2017. We hope to raise $90,000 this giving season for our work on digital minds, such as surveys, experimental research, the cause profile, and other field-building projects. Thanks to generous donors most excited about moral circle expansion in that domain, we will also continue some work on factory farming, such as our Animals, Food, and Technology (AFT) survey and our experiment, published in Anthrozoös, on how welfare reforms like cage-free eggs affect attitudes towards animal farming.

As always, we are extremely grateful to our supporters who share our vision and make this work possible. If you are able to give in 2022, please consider making a donation.

Accomplishments in 2022 (To Date)

Research

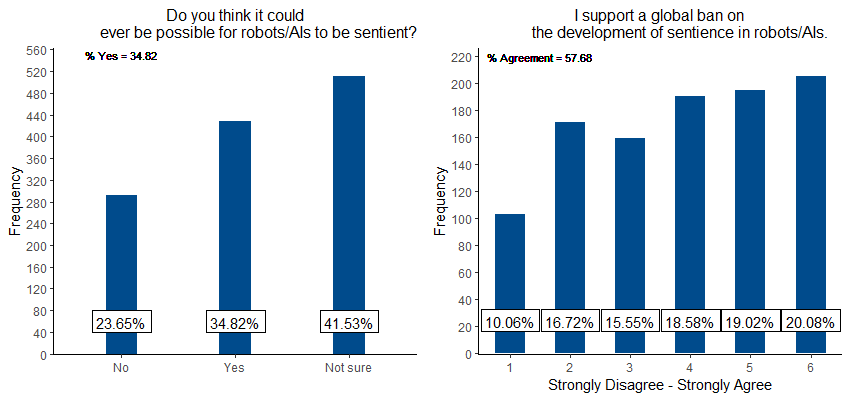

- We published a report and open-access data for our 2021 Artificial Intelligence, Morality, and Sentience (AIMS) survey. This is the first nationally representative longitudinal survey on this topic. For example, we find that 74.91% of U.S. residents agreed that sentient AIs deserve to be treated with respect and 48.25% of people agreed that sentient AIs deserve to be included in the moral circle. Also, people who showed more moral consideration of nonhuman animals and the environment tended to show more moral consideration of sentient AIs. There is much more detail that we hope will be useful for researchers, policymakers, and others in the years to come. And because this year’s survey was the first data ever for many of these questions, we had SI researchers and Metaculus users forecast the results to know how our beliefs should update. We overestimated 6 items and underestimated 18 items related to moral concern for sentient robots/AIs, suggesting we should positively update.

Here is an example of the data from two of our survey questions:

- Our paper in Computers in Human Behavior on “Predicting the Moral Consideration of Artificial Intelligences” is a more in-depth study of 300 U.S. residents recruited on Prolific, using a battery of psychological predictors (perspective, relational, expansive, and technological) as well as demographic predictors of moral attitudes towards AIs. We find, for example, that substratism, sci-fi fan identity, techno-animism, and positive emotions were the strongest predictors, suggesting that these groups of people may be the strongest supporters of AI rights, and perhaps those most vulnerable to the ELIZA effect, in the years to come.

- We published our first historical case study for our work on AI, “The History of AI Rights Research.” This is a largely descriptive project because we did not know of any other place where the history of the field had been straightforwardly documented in this depth. We identify several streams of academic thought with, until the 2010s, surprisingly little cross-citation. We suggest four strategies that could help grow the field in beneficial ways. This report is cross-posted on arXiv.

- Our blog post, “Is Artificial Consciousness Possible? A Summary of Selected Books,” provides a go-to citation for the claim that there is a broad consensus among leading philosophers that AI sentience and consciousness are possible, even among scholars known as skeptics, such as John Searle. In some cases, this is because these skeptics see AI consciousness as technically possible but unlikely with current computing architectures. Most works also cover a “wait and see” approach in that we have deep uncertainty about consciousness or at least which systems have it.

- The essay “The Future Might Not Be So Great” begins to develop a view of cautious longtermism: “Before humanity colonizes the universe, we must ensure that the future we would build is one worth living in.” This is motivated by a careful cataloging of arguments on the value of the long-term future that suggests it is not highly positive, in contrast with the often unanalyzed assumption in conventional longtermism. This is also the subject of a forthcoming book chapter in an academic volume that will be the definitive reference for the term.

- We published a peer-reviewed AI conference paper on consciousness semanticism, a new eliminativist/illusionist theory of consciousness that argues there is no “hard problem” because the vague semantics in the standard philosophical notions of consciousness, such as “what it is like” to be an entity (Nagel 1974), do not lend themselves to the precise answers implied by questions such as, “Is this entity conscious?” By moving past the hard problem, we may make more progress on understanding biological and digital minds.

- We have supported work by our new hire Brad Saad (e.g., in Philosophical Studies and Synthese), though we are not the primary funder of that work and Brad is primarily working on a report on simulations and global catastrophic risks.

- For our farmed animal research program, we published 2021 data from our Animals, Food, and Technology (AFT) survey. As in previous iterations, we found strong U.S. opposition to various aspects of animal farming, such as discomfort with the industry (74.6%) and support for a ban on slaughterhouses (49.1%). We also added a regional analysis showing that the West North Central region (the nation’s “Agricultural Heartland”) is most supportive of animal farming and least supportive of animal product alternatives.

- For our farmed animal research program, we published a paper “The Effects of Exposure to Information About Animal Welfare Reforms on Animal Farming Opposition: A Randomized Experiment” in the peer-reviewed journal Anthrozoös. This presents the results of a preregistered experiment on how welfare reforms like cage-free eggs affect attitudes towards animal farming. We found that participants (n = 1,520) provided with information about current animal farming practices had somewhat higher animal farming opposition (AFO) than participants provided with information about an unrelated topic (d = 0.17). However, participants provided with information about animal welfare reforms did not report significantly different AFO from either the current-farming (d = −0.07) or control groups (d = 0.10).

- For our farmed animal research program, in the coming weeks, we will publish a blog post on “Longtermism and Animal Farming Trajectories” that discusses the long-term implications of socially driven trajectories to the end of animal farming, which may set precedent for moral progress, and technologically driven trajectories, which may lead to faster moral progress before value lock-in.

More detail on our in-progress research is available in our Research Agenda.

Outreach

- On July 7, 2022, we hosted the first intergroup call for organizations working on digital minds research. We expect these calls to occur twice a year with participation from the Sentience Institute, Future of Humanity Institute, Legal Priorities Project, Global Catastrophic Risk Institute, Center on Long-Term Risk, Center for Reducing Suffering, and Mila. These calls may take different forms as we learn what is most effective, such as research workshops where we also invite academics and independent researchers to share their digital minds research findings.

- On October 13–15, 2022, we co-organized The Summit on AI in Society, which included leaders in AI research such as Erik Brynjolfsson, Melanie Mitchell, Dewey Murdick, and Stuart Russell. One of the panels was on AI and Nonhumans, including panelists Brian Christian and David Gunkel.

- We released two new podcast episodes with philosopher Thomas Metzinger and psychologist Kurt Gray, and we have recorded episodes with two other professors researching digital minds topics.

- We reorganized parts of our website, including two new landing pages for our two longitudinal surveys, AIMS and AFT.

- We continue to share our research via social media, emails, and meetings with people who can make use of it. In particular this year, we have been heartened by replies from academics who find our work useful in bolstering their own work on digital minds and related topics.

2022 Spending

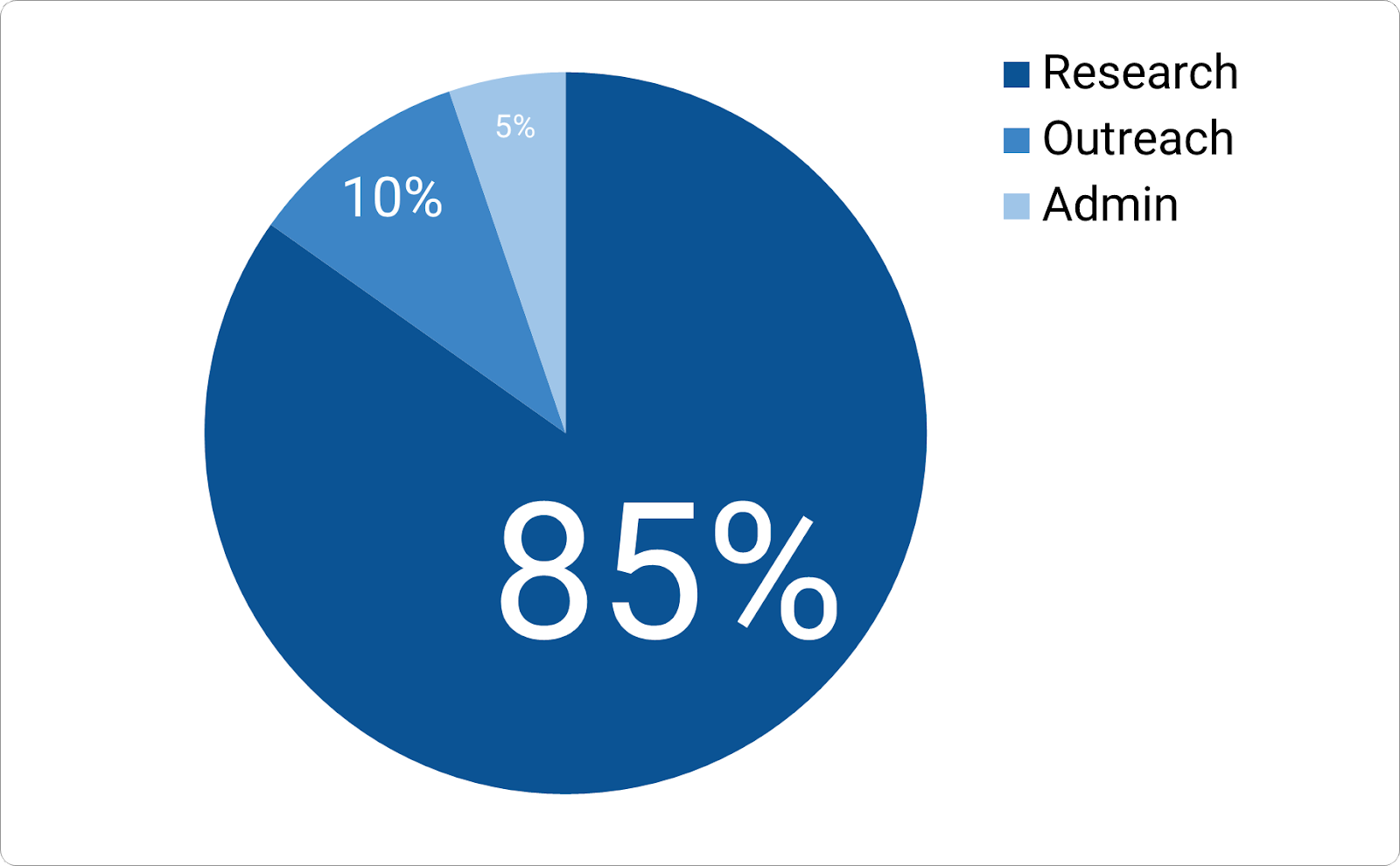

So far this year we’ve spent $154,362, broken down approximately as follows: 85% research, 10% outreach, and 5% admin. Research expenses are primarily researcher salaries; outreach this year was primarily the staff time spent on the podcast and intergroup call; and admin expenses include insurance, software, and our virtual office subscription.
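For readers who want rough dollar figures, the short Python sketch below applies the rounded percentages to the year-to-date total. The function name `approximate_breakdown` is purely illustrative, and because the shares quoted above are approximate, the resulting amounts are estimates rather than audited line items.

```python
# Illustrative arithmetic only: converts the rounded spending shares
# quoted in the text into approximate dollar amounts.
TOTAL_SPENT = 154_362  # USD spent so far in 2022 (from the text)
SHARES = {"research": 0.85, "outreach": 0.10, "admin": 0.05}  # approximate shares


def approximate_breakdown(total, shares):
    """Return an approximate whole-dollar amount for each spending category."""
    return {category: round(total * share) for category, share in shares.items()}


breakdown = approximate_breakdown(TOTAL_SPENT, SHARES)
for category, amount in breakdown.items():
    print(f"{category:>8}: ${amount:,}")
```

Under these rounded shares, research comes to roughly $131,000, outreach to roughly $15,000, and admin to roughly $8,000.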

We continue to maintain a Transparency page with annual financial information, a running list of mistakes, and other public information.

Room for More Funding

We have substantial room for more funding for highly cost-effective projects. We are currently aiming to raise $90,000 this giving season (November 2022–January 2023) to continue our work on digital minds research. We also welcome donations specifically to our continued work on factory farming and will do our best to ensure their counterfactual impact is only an increase in that research.

While we know that the economic downturn and FTX’s collapse will make fundraising difficult this year, we would be thrilled to raise more than this. For each additional $75,000 after that, we could continue hiring at least 2–3 top-tier researchers, up to a total of $300,000. Our early 2022 recruitment had 108 applicants for the generalist position (1 we hired and at least 5 more we would have been thrilled to hire) and 98 researcher applicants (2 we hired and at least 5 more we would have been thrilled to hire). If we were able to raise more than that, we would be open to putting forth a more ambitious plan for organizational scaling in 2023 and 2024.

If you have questions or feedback, or would like to collaborate, please email me at michael@sentienceinstitute.org. If you would like to donate, you can do so from our website via PayPal or by check.