Note: this is my attempt to articulate why I think it's so difficult to discuss issues concerning AI safety with non-EAs/Rationalists, based on my experience. Thanks to McKenna Fitzgerald for our recent conversation about this, among other topics.

The current 80,000 Hours list of the world's most pressing problems ranks AI safety as the number one cause in the highest priority area section. And yet, it's a topic never discussed in the news. Of course, that's not because journalists and reporters mind talking about catastrophic scenarios. Most professionals in the field are perfectly comfortable talking about climate change, wars, pandemics, wildfires, etc. So what is it about AI safety that doesn't make it a legitimate topic for a panel on TV?

The EA consensus is roughly that being blunt about AI risks with the broader public would cause social havoc. And this is understandable; the average person seems to interpret the threats from AI either as able to provoke socio-economic shifts similar to those that occurred because of novel technologies during the Industrial Revolution (mostly concerning losing jobs), or as violently disastrous as in science fiction films (where, e.g., robots take over by fighting wars and setting cities on fire).

If that's the case, taking seriously what Holden Karnofsky describes in The Most Important Century, as well as what many AI timelines suggest (i.e., that humanity might be standing at a very crucial point in its trajectory), could easily be interpreted in ways that lead to social collapse, merely as a result of talking about what the problem concerns. Modern AI Luddites would potentially form movements to "prevent the robots from stealing their jobs". Others would be anxiously preparing to physically fight, and so on.

But even if the point about AGI doesn't get misinterpreted (if, in other words, all the technical details could be distilled in a way that made the arguments accessible to the public), it would very likely still trigger the same social and psychological mechanisms and result in chaos manifested in various ways (for example, stock market collapse because of short timelines, people breaking laws and moral codes since nothing matters if the end is near, etc.).

Given that conclusion, it makes sense to not want alignment in the news. But what about all the people who would understand and help solve the problem if only they knew about it and how important it is? In other words, shouldn't the communication of science also serve the purpose of attracting thinkers to work on the problem? This looks like the project of EA so far; it's challenging and sometimes risky itself, but the community seems to overall have a good grasp of its necessity and to strategize accordingly.

Now, what about all the non-EA/Rationalist circles that produce scholarly work but refuse to put AI ethics/safety in the appropriate framework? Imagine trying to talk about alignment and your interlocutor thinks that Bostrom's paperclips argument is unconvincing and too weird to be good analytic philosophy, that it must be science fiction. There are many background reasons why they may think that. The friction this resistance and sci-fi labelling creates is unlikely to be helpful if we are to deal with short timelines and the urgency of value alignment. What's also not going to be helpful is endorsing the conceptual, metalinguistic disputes some philosophers of AI are engaging in (for instance, trying to define "wisdom" and arguing that machines will need practical wisdom to be ethical and helpful to humans). To be fair, however, I must note that the necessity of teaching machines how to be moral was emphasized by certain philosophers (such as Colin Allen) as early as the late 2000s.

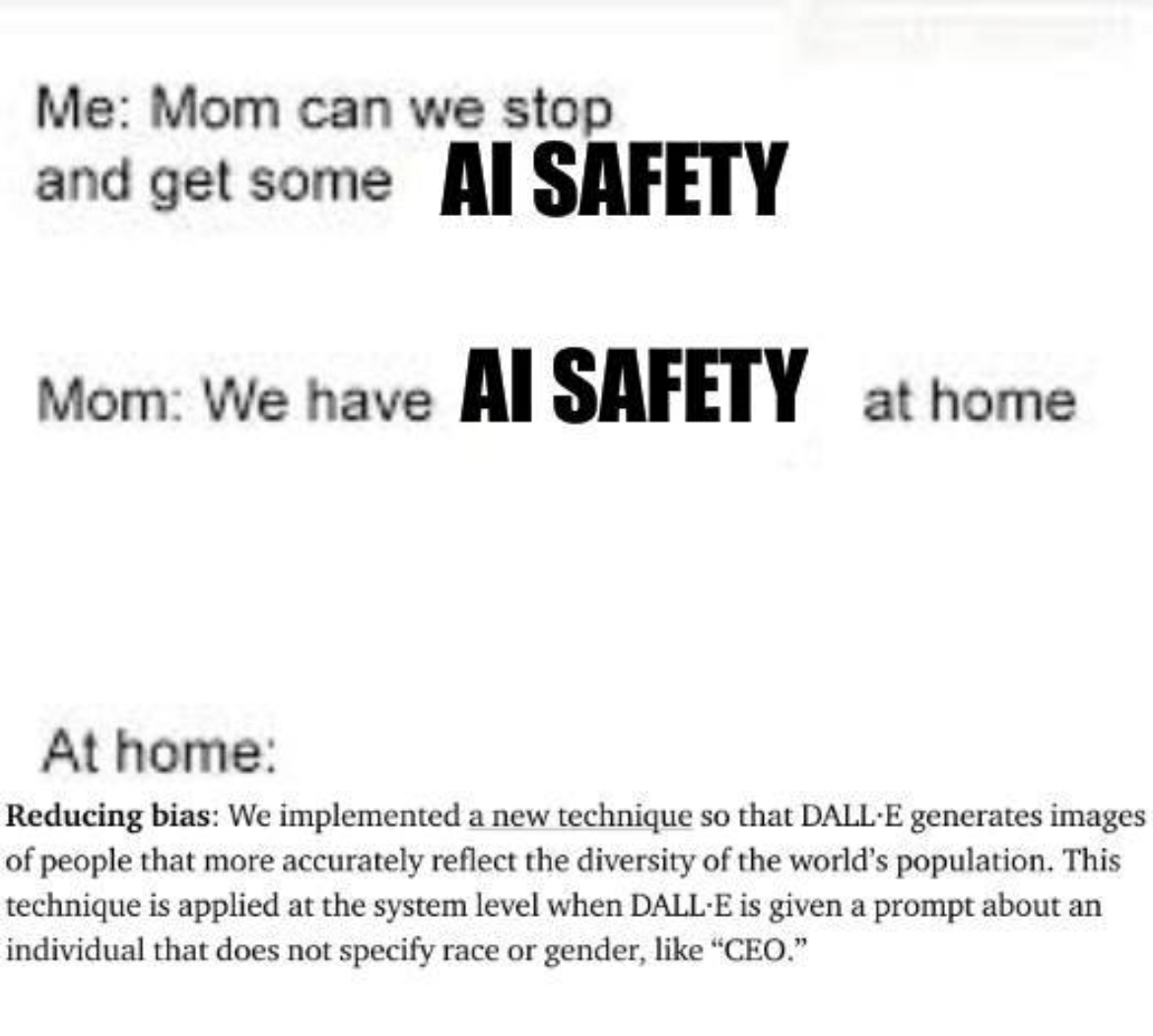

Moreover, as of now, AI ethics seems to be directed towards questions that are important, but that might give the impression that misalignment merely consists of biased algorithms that discriminate among humans based on their physical characteristics, as often happens, for example, with AI image creation systems that don't take into account racial or gender diversity in the human population. To be clear, I'm downplaying neither the seriousness of this as a technical problem nor its importance for the social domain. I just want to make sure that the AI ethics agenda prioritizes the different risks and dangers in a straightforward way, i.e., existential risks that accompany the development of this technology deserve more effort and attention than non-existential risks.

In conclusion, here I list some reasons why I think AI safety is so difficult to talk about:

- It's actually weird to think about these issues, and it can mess with one's intuitions about how science and technology progress; the amount of time each of us spends on earth is too short to grasp how fast or slow technological advancements took place in the past and to project that into the not-so-distant future.

- This weirdness entails significant uncertainties at different levels (practical, epistemic, moral). Uncertainty is by itself an uncomfortable feeling that people usually try to repel in various ways (e.g., by rationalizing).

- It's a very new area of organized research; many of the AI safety teams/organizations have been around for only a couple of years.

- There isn't a lot of work published in peer-reviewed journals (which is a turn-off for many academics who don't give credibility to blog posts, despite the fact that many of them are well-researched and technically rigorous).

- Narratives about future catastrophic scenarios exist in most cultures and eras. The belief that "our century is the most important one" isn't special to our time. If people were wrong about their importance in the past, why would our case be any different?

- There's a widespread reluctance to take seriously what our best science takes seriously (which is not limited to issues in AI).

- There's a broader crisis in science communication and journalism that creates skepticism towards research communities.

- There are persistent open questions in the philosophy and theory of science such as the demarcation problem, what makes a claim scientific, how falsifiability works, and many more.

Social havoc isn't bad by default. It's possible that a publicity campaign would result in regulations that choke the life out of AI capabilities progress, just like the FDA choked the life out of biomedical innovation.