This is a linkpost for https://twitter.com/AnthropicAI/status/1764653830468428150

This is another important datapoint suggesting that stacking an org full of EAs seems to make very little difference to the decision making of the org.

As I understand it, [part of] Anthropic's theory of change is to be a meaningful industry player so its safety agenda can become a potential standard to voluntarily emulate or adopt in policy. Being a meaningful industry player in 2024 means having desirable consumer products and advertising them as such.

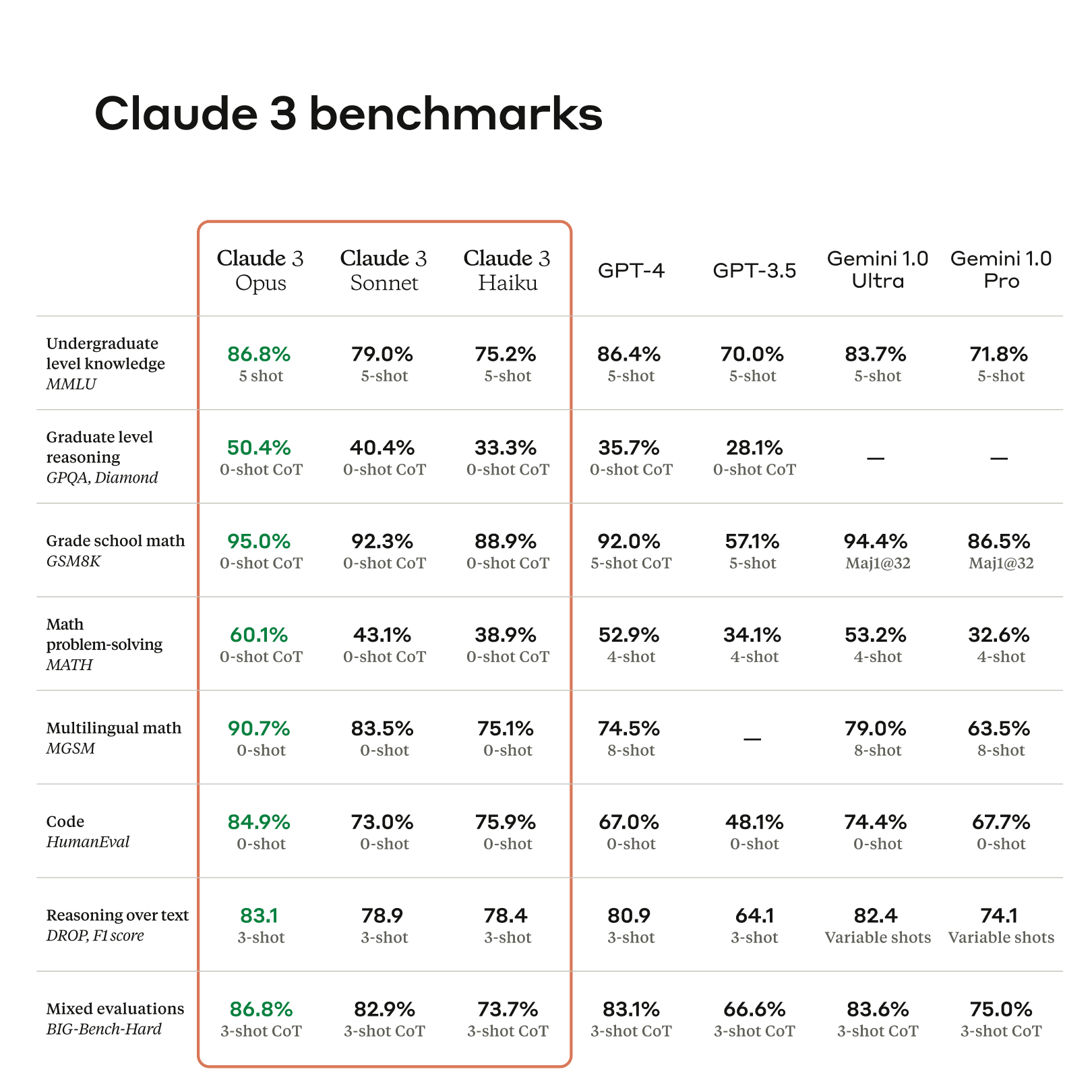

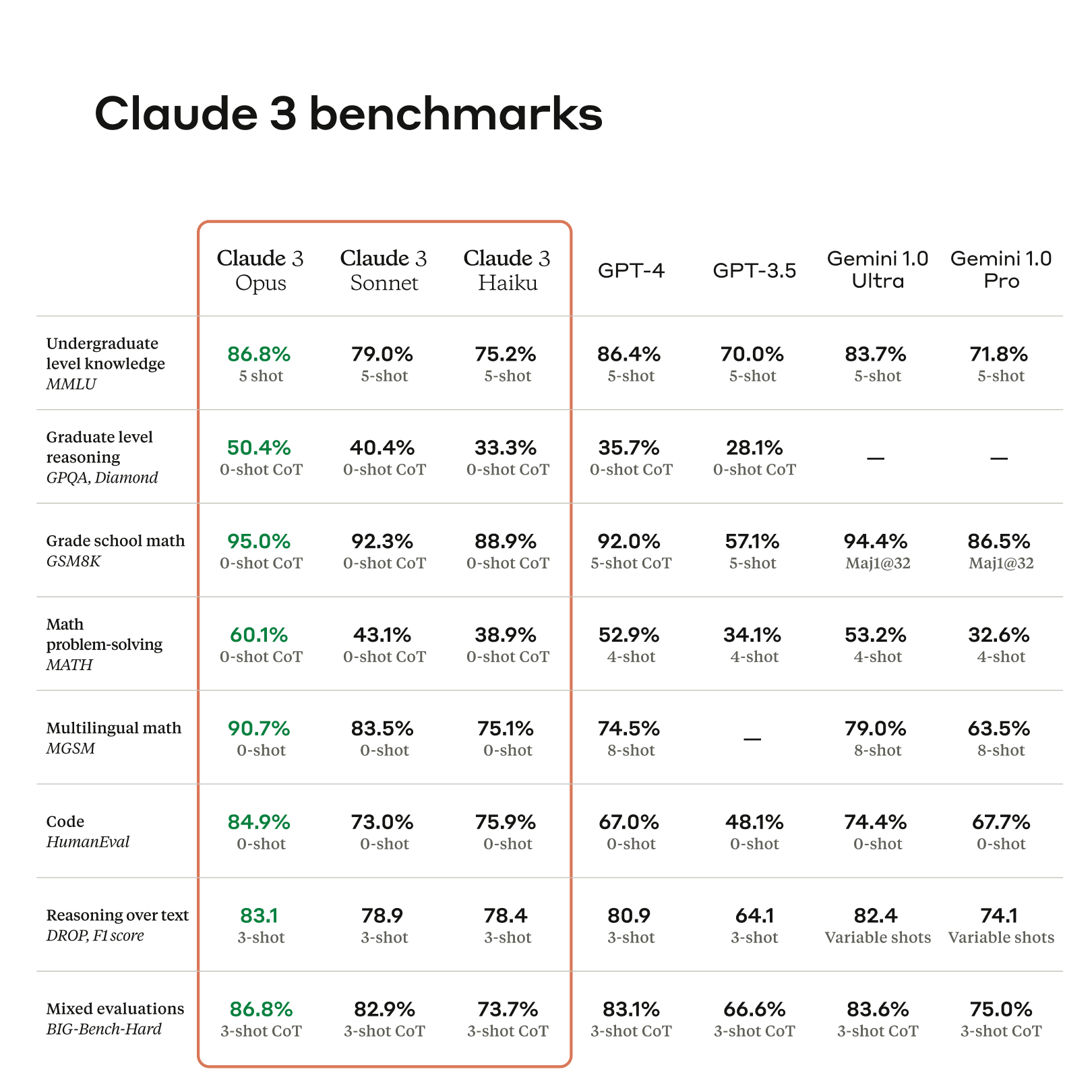

It's also worth remembering that this is advertising. Claiming to be a little bit better on some cherry-picked metrics a year after GPT-4 was released is hardly a major accelerant in the overall AI race.

Fair point. On the other hand, the perception is in many ways more important than the actual capability in terms of incentivizing competitors to race faster.

Also, based on early user reports, it seems to actually be noticeably better than GPT-4.

Yes, I think this is a reasonable response. However, it seems to rest on the assumption that just trying a bit harder at safety makes a meaningful difference. If alignment is very hard, then Anthropic's AIs are just as likely to kill everyone as other labs' are. It seems very unclear whether having "safety conscious" people at the helm will make any difference to our chance of survival, especially when they are almost always forced to make the exact same decisions as people who are not safety conscious in order to stay at the helm.

Even if they are right that it is important to stay in the race, what Anthropic should be doing is

All of which they could do while continuing to compete in the race. RSPs are nice, but not sufficient.

I think this characterizes the disagreement between pause advocates and Anthropic as it stood before the Claude 3 release, with some pause-advocacy-favorable assumptions about the politics of maintaining one's position in the industry. Full-throated, public pause advocacy doesn't seem like a good way to induce investment in your company, for example.

More broadly I think Anthropic, like many, hasn't come to final views on these topics and is working on developing views, probably with more information and talent than most alternatives by virtue of being a well-funded company.

It would be remiss not to also mention the large conflict of interest that analysts at Anthropic have when developing these views.