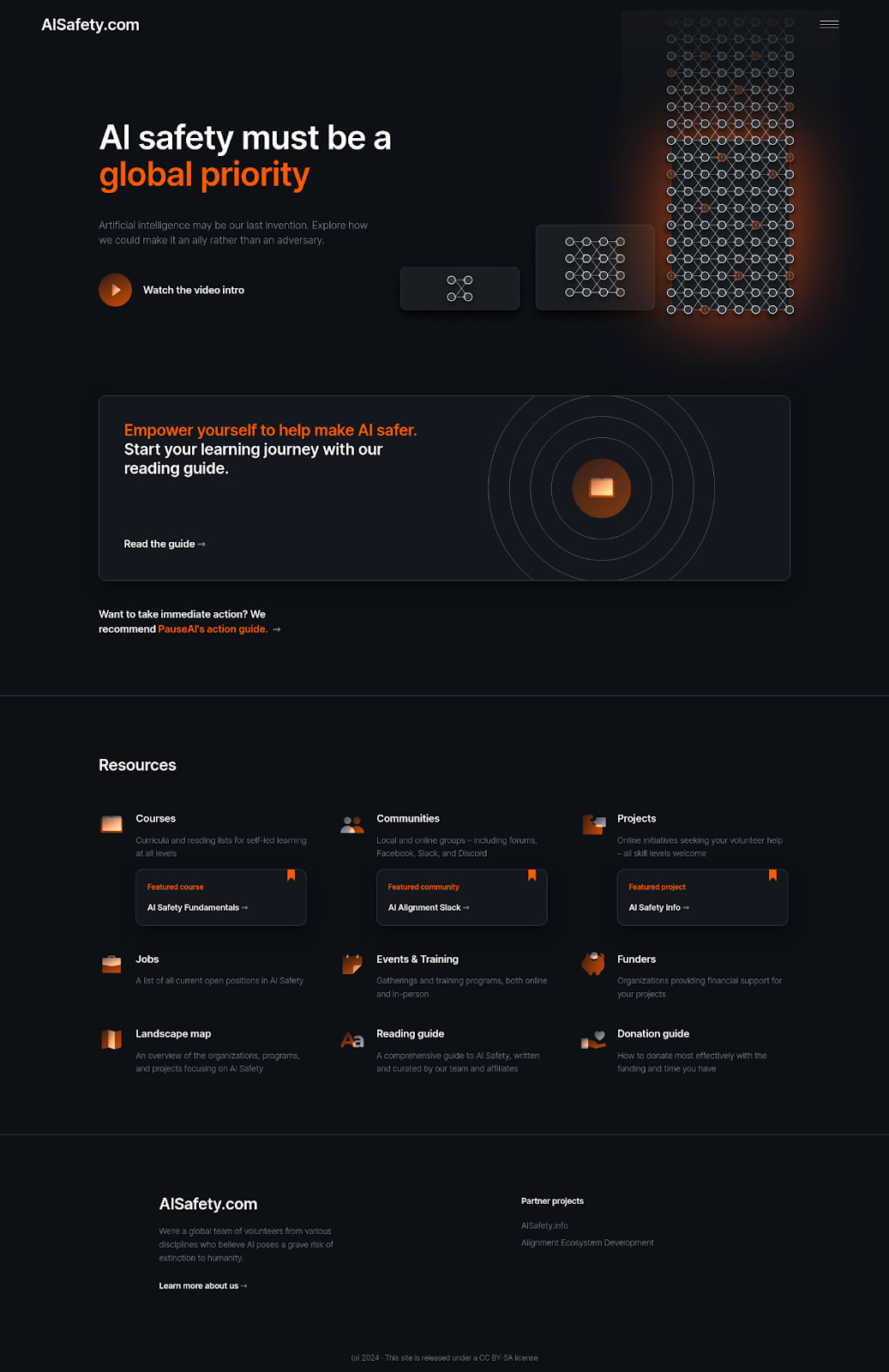

There are many resources for those who wish to contribute to AI Safety, such as courses, communities, projects, jobs, events and training programs, funders and organizations. However, people often tell us they have trouble finding the right resources. To address this, we've built AISafety.com as a central hub (a list-of-lists) where community members maintain and curate these resources to increase their visibility and accessibility.

In addition to presenting resources, the website is optimized to be an entry point for newcomers to AI Safety, guiding them towards understanding the field and contributing to it.

The website was developed on a shoestring budget, relying heavily on volunteers, with Søren covering costs out of pocket. We do not accept donations, but if you think this is valuable, you’re welcome to help out by reporting issues or making suggestions in our tracker, commenting here, or volunteering your time to improve the site.

Feedback

If you’re up for giving us some quick feedback, we’d be keen to hear your responses to these questions in a comment:

- What's the % likelihood that you will use AISafety.com within the next year? (Please be brutally honest)

- Which lists of resources will you use?

- What could be changed (features, content, design, whatever) to increase that chance?

- What's the % likelihood that you will send AISafety.com to someone within the next year?

- What could be changed (features, content, design, whatever) to increase that chance?

- Any other general feedback you'd like to share

Credits

- Project owner and funder – Søren Elverlin

- Designer and frontend dev – Melissa Samworth

- QA and resources – Bryce Robertson

- Backend dev lead – nemo

- Volunteers – plex, Siao Si Looi, Mathilde da Rui, Coby Joseph, Bart Jaworski, Rika Warton, Juliette Culver, Jakub Bares, Jordan Pieters, Chris Cooper, Sophia Moss, Haiku, agucova, Joe/Genarment, Kim Holder (Moonwards), de_g0od, entity, Eschaton

- Reading guide embedded from AISafety.info by Aprillion (Peter Hozák)

- Jobs pulled from 80,000 Hours Jobs Board and intro video adapted from 80,000 Hours’ intro with permission

- Communities list, The Map of Existential Safety, AI Ecosystem Projects, Events & Training programs adapted from their respective Alignment Ecosystem Development projects (join the Discord for discussion and other projects!). Funding list adapted from Future Funding List, maintained by AED.

This is fantastic!

Do you know if anything like this exists for other cause areas, or the EA world more broadly?

I have been compiling and exploring resources available for people interested in EA and different cause areas. There are a lot of organisations and opportunities to get career advice, undertake courses, or get involved in projects, but it is all scattered, and there is no central repository or guide for navigating the EA world that I know of.

Thanks Tristan :)

AFAIK the closest to this for EA generally is effectivealtruism.org, which links to some resources.

I can imagine something more comprehensive (like an AISafety.com for EA) perhaps being useful. One thing that might be interesting is a landscape map of the main orgs and projects in EA – we'd be happy to share the code of the AI safety map if you chose to explore that.

Thanks for this, OP!

I hadn't seen the latest updates to the site since ~launch and have sent my own feedback.

Fellow Forum users: consider taking a few minutes to look at the site and share your sense of the UX or whatever other feedback feels useful.

You (yes, you!) could help aisafety.com visitors have a smoother, more impactful experience, adding real value to the time the team has already spent on this, at a pretty minimal cost to you :)