This project by CEARCH investigates the cost-effectiveness of resilient food pilot studies for mitigating the effects of an extreme climate cooling event. It consisted of three weeks of desktop research and expert interviews.

Headline Findings

- CEARCH finds the cost-effectiveness of conducting a pilot study of a resilient food source to be 10,000 DALYs per USD 100,000, which is around 14× as cost-effective as giving to a GiveWell top charity[1] (link to full CEA).

- The result is highly uncertain. Our probabilistic model suggests a 53% chance that the intervention is less cost-effective than giving to a GiveWell top charity, and an 18% chance that it is at least 10× more cost-effective. The estimated cost-effectiveness is likely to fall if the intervention is subjected to further research, due to the optimizer’s curse[2].

- The greatest sources of uncertainty are 1) the likelihood of nuclear winter and 2) the degree to which evidence from a pilot study would influence government decision-making in a catastrophe.

- We considered two other promising interventions to mitigate the effects of an Abrupt Sunlight Reduction Scenario (ASRS): policy advocacy at the WTO to amend restrictions that affect food stockpiling, and advocating for governments to form plans and strategies for coping with the effects of ASRSs. We believe these could be very cost-effective interventions, although we expect them to be difficult to assess.

- Our detailed cost-effectiveness analysis can be found here, and the full report can be read here.

Executive Summary

In an Abrupt Sunlight Reduction Scenario (ASRS) the amount of solar energy reaching Earth’s surface would be significantly reduced. Crops would fail, and many millions would be without food. Much of the research on mitigating the effects of these catastrophes is the product of ALLFED. Their work has been transformative for the field, but may be seen by some as overly pessimistic about the likelihood of agricultural shortfalls, and overly optimistic about the effectiveness of their suggested interventions. This project aims to provide a neutral “second opinion” on the cost-effectiveness of mitigating ASRSs.

Nuclear winter is probably the most likely cause of an ASRS, although the size of the threat is highly uncertain. Nuclear weapons have only once been used in war, and opinions differ on the likelihood of a future large-scale conflict. Even if there is a major nuclear war, the climate effects are contested. Much of the scientific literature on nuclear winter is the product of a small group of scientists who may be politically motivated to exaggerate the effects. Critics point to the long chain of reasoning that connects nuclear war to nuclear winter, and argue that slightly less pessimistic assumptions at each stage can lead to radically milder climate effects. We predict that there is just a 20% chance that nuclear winter follows large-scale nuclear war. Even so, this implies that nuclear winter represents over 95% of the total ASRS threat.

There are a number of ways to prepare: making plans; fortifying our networks of communication and trade; building food stockpiles; developing more resilient food sources. After a period of exploration, we decided to focus on the cost-effectiveness of conducting a pilot study for one resilient food source. “Resilient” food sources, such as seaweed, mass-produced greenhouses, or edible sugars derived from plant matter, can produce food when conventional agriculture fails, mitigating the food shortage. We know that these sources can produce edible food, but none have had pilot studies that identify the key bottlenecks in scaling up the process rapidly in a catastrophe. We believe that such a pilot study would increase the chances of the resilient food source being deployed in an ASRS, and that the lessons learnt in the study would enable the food source to be harnessed more productively.

We assume that resilient foods would not make a significant difference in milder scenarios, although this is far from certain[3]. We do not attempt to measure the benefits of resilient food sources in other climate and agricultural catastrophes[4].

Unlike previous analyses, ours accounts for specific reasons that resilient food technologies may not be adopted in a catastrophe, including disruptions to infrastructure, political dynamics or economic collapse. We attempt to model the counterfactual effect of the pilot itself on the deployment of the food source in a catastrophe. Due to lack of reliable data, however, we rely heavily on subjective discounts.

We estimate that a USD 23 million pilot study for one resilient food source would counterfactually reduce famine mortality by 0.66% in the event of an ASRS, preventing approximately 16 million deaths from famine.

Mortality represents 80% of the expected burden of an ASRS in our model, with the remainder coming from morbidity and economic losses. There is some uncertainty about the scale of the economic damage, but we are confident that famine deaths would form the bulk of the burden. We do not consider the long-term benefits of mitigating mass global famine.

Our full CEA assesses the intervention in detail. We draw upon objective reference classes when we can, and we avoid anchoring on controversial estimates by using aggregates where possible.

Our final result suggests that there is a distinct possibility that the cost-effectiveness of developing a resilient food source is competitive with GiveWell top charities. The result is highly uncertain and is especially contingent on 1) the likelihood of nuclear winter and 2) the degree to which evidence from a pilot study would influence government decision-making in a catastrophe.

Cost-effectiveness

Overall, we estimate that developing one resilient food source would cost approximately USD 23 million and would reduce the number of famine deaths in a global agricultural shortfall by 0.66%. We project that the intervention would have a persistence of approximately 17 years.

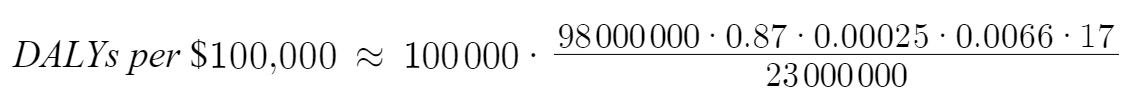

Given that we estimate the toll of a global agricultural shortfall to be approximately 98 billion DALYs, we obtain an estimated cost-effectiveness of 10,000 DALYs per USD 100,000, which is 14× as cost-effective as a GiveWell top charity[1].

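The final cost-effectiveness calculation is approximately

DALYs averted per USD ≈ (burden × affectable proportion × annual probability × reduction in burden × persistence) / cost

With the following figures: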

| Value | Description |
| --- | --- |
| 98,000,000,000 | Burden of an ASRS, DALYs |
| 0.87 | Proportion of the burden that is affectable once the delay in conducting the study is accounted for |
| 0.00025 | Annual probability of an ASRS |
| 0.0066 | Reduction in burden due to resilient food pilot study |
| 17 | Persistence of intervention (equivalent baseline years) |
| 23,000,000 | Cost of intervention, USD |

The calculation above is heavily simplified. Check the full CEA to see how the figures above were reached.
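As a rough check, the simplified figures above can be multiplied out directly. The sketch below assumes the calculation is the product of burden, affectable proportion, annual probability, burden reduction and persistence, divided by cost. The variable names are illustrative and are not taken from the CEA.

```python
# Simplified inputs as listed in the table above.
burden_dalys = 98_000_000_000      # burden of an ASRS, DALYs
affectable_share = 0.87            # share of burden still affectable after the study delay
annual_probability = 0.00025       # annual probability of an ASRS
burden_reduction = 0.0066          # reduction in burden due to the pilot study
persistence_years = 17             # persistence (equivalent baseline years)
cost_usd = 23_000_000              # cost of the intervention, USD

# Assumed structure: expected DALYs averted over the persistence period.
expected_dalys_averted = (
    burden_dalys
    * affectable_share
    * annual_probability
    * burden_reduction
    * persistence_years
)

# Cost-effectiveness expressed per USD 100,000, as in the headline figure.
dalys_per_100k = expected_dalys_averted / cost_usd * 100_000

print(f"Expected DALYs averted: {expected_dalys_averted:,.0f}")
print(f"DALYs per USD 100,000:  {dalys_per_100k:,.0f}")
```

Under that assumption the result is roughly 10,400 DALYs per USD 100,000, in line with the rounded headline figure of 10,000.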

Overall uncertainty

Although the cost-effectiveness is estimated to be 14× that of a GiveWell top charity, our uncertainty analysis suggests there is only a 47% chance that the cost-effectiveness is at least 1× that of a GiveWell top charity, and an 18% chance that it is at least 10×. Hence the central cost-effectiveness estimate is heavily influenced by a minority of “right-tail” scenarios of very high cost-effectiveness.

We derived the above estimate by creating an alternative version of our CEA that incorporates uncertainty. Most input values were modeled as Beta- or lognormally distributed random variables, and the adapted CEA was put through a 3000-sample Monte Carlo simulation using Dagger. In most cases the input distributions were determined subjectively, using the same mean value as the point estimate used in the CEA. An important exception is the cost: instead of modeling cost as a distribution and dividing “effectiveness” by “cost” to get cost-effectiveness, we model the reciprocal of cost as a distribution and multiply “effectiveness” by it. Because E[X/Y] ≠ E[X]/E[Y] in general, dividing by a cost distribution would give a central estimate that differs from our CEA; multiplying by the reciprocal avoids this.
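The effect of modeling the reciprocal of cost can be illustrated with a small simulation. This is a minimal sketch using Python's standard library rather than Dagger. The lognormal shapes, the spread parameters, the sample size and the function names are all assumptions chosen for illustration, not the distributions used in the CEA.

```python
import math
import random
from statistics import fmean


def lognormal_with_mean(rng, mean, sigma):
    """Draw from a lognormal whose arithmetic mean equals `mean`."""
    mu = math.log(mean) - 0.5 * sigma ** 2
    return rng.lognormvariate(mu, sigma)


def simulate(n=20_000, seed=0):
    rng = random.Random(seed)
    effect_point = 2.4e6   # illustrative expected DALYs averted
    cost_point = 23e6      # point-estimate cost, USD

    effects = [lognormal_with_mean(rng, effect_point, 1.0) for _ in range(n)]
    # Option A: model cost itself, with mean equal to the point estimate.
    costs = [lognormal_with_mean(rng, cost_point, 0.5) for _ in range(n)]
    # Option B: model 1/cost, with mean equal to 1 / point estimate.
    inv_costs = [lognormal_with_mean(rng, 1 / cost_point, 0.5) for _ in range(n)]

    point = effect_point / cost_point
    by_division = fmean(e / c for e, c in zip(effects, costs))
    by_reciprocal = fmean(e * r for e, r in zip(effects, inv_costs))
    return point, by_division, by_reciprocal


point, by_division, by_reciprocal = simulate()
print(f"Point estimate:           {point:.4f} DALYs per USD")
print(f"Mean when dividing:       {by_division:.4f}")
print(f"Mean when using 1/cost:   {by_reciprocal:.4f}")
```

With these assumed inputs, dividing by a cost distribution pushes the simulated mean above the point estimate, while multiplying by an independent reciprocal-cost distribution keeps the simulated mean close to it, since the expectation of a product of independent variables is the product of their expectations.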

Link to full report // Link to cost-effectiveness analysis // Link to summary of expert interview notes

1. ^

2. ^ The headline cost-effectiveness will almost certainly fall if this cause area is subjected to deeper research: (a) this is empirically the case, from past experience; and (b) theoretically, we suffer from the optimizer's curse (where causes appear better than the mean partly because they are genuinely more cost-effective, but also partly because of random error favoring them, and when deeper research fixes the latter, the estimated cost-effectiveness falls).

3. ^ In mild agricultural shortfalls such as those that may be triggered by crop blight, VEI-7 volcanic eruption or extreme weather, adaptations like redirecting animal feed, rationing and crop relocation would in theory be sufficient to feed everyone. However, it’s plausible that resilient foods could ease these crises by filling gaps in available nutrition (protein, for example) or providing new sources of animal feed which make more crops available for human consumption.

4. ^ Resilient food sources could prove useful in a variety of agricultural shocks. Industrial sources such as cellulosic sugar plants could form a reliable food source in scenarios of 10+°C warming, or in the face of an engineered crop pathogen targeting the grass family [credit: David Denkenberger].

Nice analysis, Stan!

Under your assumptions and definitions, I think your 20.2 % probability of nuclear winter if there is a large-scale nuclear war is a significant overestimate. You calculated it using the mean of a beta distribution. I am not sure how you defined it, but it is supposed to represent the 3 point estimates you are aggregating of 60 %, 8.96 % and 0.0355 %. In any case, 20.2 % is quite:

A common thread here is that aggregation methods which ignore information from extreme predictions tend to be worse (although one should be careful not to overweight them). As Jaime said with respect to the mean (and I think the same applies to the MLE of the mean of a beta distribution fitted to the samples):

For these reasons, I would aggregate the 3 probabilities using the geometric mean of odds, in which case the final probability would be 17.9 % as large.
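The difference between aggregation methods can be checked numerically. The sketch below is illustrative only: the function names are hypothetical, and the three inputs are the point estimates quoted above (60 %, 8.96 % and 0.0355 %). It compares the arithmetic mean, the geometric mean of probabilities and the geometric mean of odds.

```python
import math


def to_odds(p):
    return p / (1 - p)


def from_odds(o):
    return o / (1 + o)


def arithmetic_mean(ps):
    return sum(ps) / len(ps)


def geometric_mean(ps):
    return math.exp(sum(math.log(p) for p in ps) / len(ps))


def geometric_mean_of_odds(ps):
    # Average in log-odds space, then convert back to a probability.
    mean_log_odds = sum(math.log(to_odds(p)) for p in ps) / len(ps)
    return from_odds(math.exp(mean_log_odds))


forecasts = [0.60, 0.0896, 0.000355]

print(f"Arithmetic mean:        {arithmetic_mean(forecasts):.4f}")
print(f"Geometric mean:         {geometric_mean(forecasts):.4f}")
print(f"Geometric mean of odds: {geometric_mean_of_odds(forecasts):.4f}")
print(f"Ratio to 20.2 %:        {geometric_mean_of_odds(forecasts) / 0.202:.3f}")
```

The geometric mean of odds comes out at about 3.6 %, which is roughly 17.9 % of the 20.2 % figure.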

Based on my adjustment to the probability of nuclear winter, I would conclude the cost-effectiveness is 2.51 (= 14*0.179) times that of GiveWell's top charities (ignoring effects on animals), i.e. within the same order of magnitude. This would be in agreement with what I said in my analysis of nuclear famine about the cost-effectiveness of activities related to resilient food solutions:

I should also note there are way more cost-effective interventions to increase welfare:

In addition, life-saving interventions have to contend with the meat-eater problem:

I would also be curious to know whether CEARCH has mostly been using the mean, or other methods that underweight low predictions, to aggregate probabilities that differ a lot from one another, both in this analysis and in others. I think using the mean will tend to result in overestimating the cost-effectiveness, which might explain some of the estimates I consider intuitively quite high.

Thanks for the comment, Vasco!

We have been thinking about aggregation methods a lot here at CEARCH, and our views on it are evolving. A few months ago we switched to using the geometric mean as our default aggregation method - although we are considering switching to the geometric mean of odds for probabilities, based on Simon M's persuasive post that you referenced (although in many cases the difference is very small).

Firstly I'd like to say that our main weakness on the nuclear winter probability is a lack of information. Experts in the field are not forthcoming on probabilities, and most modeling papers use point-estimates and only consider one nuclear war scenario. One of my top priorities as we take this project to the "Deep" stage is to improve on this nuclear winter probability estimate. This will likely involve asking more experts for inside views, and exploring what happens to some of the top models when we introduce some uncertainty at each stage.

I think you are generally right that we should go with the method that works the best on relatively large forecasting datasets like Metaculus. In this case I think there is a bit more room for personal discretion, given that I am working from only three forecasts, where one is more than two orders of magnitude smaller than the others. I feel that in this situation - some experts think nuclear winter is an almost-inevitable consequence of large-scale nuclear war, others think it is very unlikely - it would just feel unjustifiably confident to conclude that the probability is only 2%. Especially since two of these three estimates are in-house estimates.

Thanks for the reply, Stan!

Cool!

Right, I wish experts were more transparent about their best guesses and uncertainty (accounting for the limitations of their studies).

Nice to know there is going to be more analysis! I think one important limitation of your current model, which I would try to eliminate in further work, is that it relies on the vague concept of nuclear winter to define the climatic effects. You calculate the expected mortality multiplying:

However, I believe it is better to rely on a more precise concept to assess the climatic effects, namely the amount of soot injected into the stratosphere, or the mean drop in global temperature over a certain period (e.g. 2 years) after the nuclear war. In my analysis, I relied on the amount of soot, estimating the expected famine deaths due to the climatic effects multiplying:

Ideally, I would get the expected famine deaths multiplying:

Luisa followed something like the above, although I think her results are super pessimistic.

Fair point, there is no data on which method is best when we are just aggregating 3 forecasts. That being said:

I think there is a natural human bias towards thinking that the probability of events whose plausibility is hard to assess (not lotteries) has to be somewhere between 10 % to 90 %. In general, my view is more that it feels overconfident to ignore predictions, and using the mean does this when samples differ a lot among them. To illustrate, if I am trying to aggregate N probabilities, 10 %, 1 %, 0.1 %, ..., and 10^-N, for N = 9:
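To make the illustration concrete, here is a minimal sketch (variable names are illustrative) that computes the arithmetic and geometric means of those nine probabilities, together with the share of the arithmetic mean contributed by the largest forecast alone.

```python
import math

# The nine forecasts from the example: 10 %, 1 %, 0.1 %, ..., 10^-9.
forecasts = [10.0 ** -k for k in range(1, 10)]

mean = sum(forecasts) / len(forecasts)
geo_mean = math.exp(sum(math.log(p) for p in forecasts) / len(forecasts))

# Fraction of the arithmetic mean that comes from the single largest forecast.
share_of_largest = max(forecasts) / sum(forecasts)

print(f"Arithmetic mean:            {mean:.6f}")
print(f"Geometric mean:             {geo_mean:.2e}")
print(f"Share from largest forecast: {share_of_largest:.1%}")
```

The arithmetic mean comes out at about 1.2 %, with roughly 90 % of it due to the single 10 % forecast, whereas the geometric mean is 10^-5.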

I think the mean is implausible because:

You say 2 % probability of nuclear winter conditional on large nuclear war seems unjustifiable, but note the geometric mean of odds implies 4 %. In any case, I suspect the reason even this would feel too high is that it may in fact be too high, depending on how one defines nuclear winter, but that you are overestimating famine deaths conditional on nuclear winter. You put a weight of:

At the end of the day, I should say our estimates for the famine deaths are pretty much in agreement. I expect 4.43 % famine deaths due to the climatic effects of a large nuclear war, whereas you expect 6.16 % (20.2 % probability of nuclear winter if there is a large-scale nuclear war times 30.5 % deaths given nuclear winter).

For the question "What is the unconditional probability of London being hit with a nuclear weapon in October?", the 7 forecasts were 0.01, 0.00056, 0.001251, 10^-8, 0.000144, 0.0012, and 0.001. The largest of these is 1 M (= 0.01/10^-8) times the smallest, whereas in your case the largest probability is 2 k (= 0.6/0.000355) times the smallest.

In the report, footnote 2 states:

"In mild agricultural shortfalls such as those that may be triggered by crop blight, VEI-7 volcanic eruption or extreme weather, adaptations like redirecting animal feed, rationing and crop relocation would in theory be sufficient to feed everyone".

How did you come to that conclusion? We're only aware of one academic study (Puma et al 2015) about food losses from a VEI 7 eruption, and it estimates 1-3 billion people without food per year (I think this is likely an overestimate, and I'm trying to do research to quantify this). I'm just trying to figure out what you're basing the above statement on. Does it take into consideration food price increases and who would be able to pay for food (even if there is technically enough)?

Also note that the recurrence intervals used for super-eruptions, taken from the Loughlin paper, are an order of magnitude off; the estimates have since been revised (see discussion here: https://forum.effectivealtruism.org/posts/jJDuEhLpF7tEThAHy/on-the-assessment-of-volcanic-eruptions-as-global ). Also note that VEI 7 eruptions can sometimes have the same, if not greater, climatic impact as super-eruptions, as the magnitude scale is based on the quantity of ash erupted whilst the climatic impact is based on the amount of sulfur emitted (which can be comparable for VEI 7 and 8 eruptions). I mention these because, whilst nuclear war probabilities have huge uncertainties, our recurrence intervals for large eruptions from ice cores are now well constrained, so it might help with the calcs.

Thank you for your comment!

From a quick scan through Puma et al 2015 it seems like the argument is that many countries are net food importers, including many poor countries, so smallish shocks to grain production would be catastrophic as prices rise and importers have to buy more and at higher prices which they can't afford. I agree that this is a major concern and that it's possible that a sub-super eruption could lead to a large famine in this way. When I say "adaptations like redirecting animal feed, rationing and crop relocation would in theory be sufficient to feed everyone" I mean that with good global coordination we would be able to free up plenty of food to feed everyone. That coordination probably includes massive food aid or at the least large loans to help the world's poorest avoid starvation. More importantly, resilient food sources don't seem like a top solution in these kinds of scenarios. It seems cheaper to cull livestock and direct their feed to humans than to scale up expensive new food sources.

Thanks for the link - now you mention it I think I read this post at the beginning of the year and found it very interesting. In my analysis I'm assuming resilient foods only help in severe ASRS, where there are several degrees of cooling for several years. Do you think this could happen with VEI 7 eruptions?

I couldn't find the part where the Loughlin paper has been changed. Could you direct me towards it?

Hi Stan, thanks for your response. I understand your main thesis now; it seems logical provided those ideal circumstances (high global co-operation and normal trade).

VEI 7 eruptions could lead to up to 2-3 degrees of global cooling for ~5-10 years (but more elevated in the northern hemisphere). See here: https://doi.org/10.1029/2020GL089416

More likely is two VEI 6 eruptions close together, which may provide longer-duration cooling of a similar amount (~2 degrees), as in the mid-6th century (the Late Antique Little Ice Age).

The Loughlin chapter didn't account for the incompleteness of the geological record, as papers published since have done with statistical methods (e.g. the Rougier paper I cite in that post) or with ice cores, which are better at preserving eruption signatures than the geological record.