And how to be mindful with beginners

I love Effective Altruism. ❤

That’s not an exaggeration — I truly love the community and the way I’ve grown as a result of joining the movement.

I first heard about EA back in 2013 when its messaging was pretty straightforward: use data to donate to more effective charities that will alleviate the suffering of the global poor.

Now, the scope of EA has broadened significantly, and the number of people who subscribe to the philosophy of EA has also ballooned.

For the record, I think this is fabulous, and we should celebrate our growth so far! We can and must continue to expand our areas of inquiry, and I’m proud of the way that EA has grown to include animal welfare, long-term flourishing, and many more topics.

But this growth comes with a byproduct: much more content.

While early web content about EA focused predominantly on global health and development, current messaging covers everything from AI safety research to ancient viruses trapped in permafrost. Given how much our interests as a community have expanded over the last decade, it’s no wonder that the amount of content on the web about Effective Altruism has skyrocketed as well.

But as with rockets, there can be such a thing as too much.

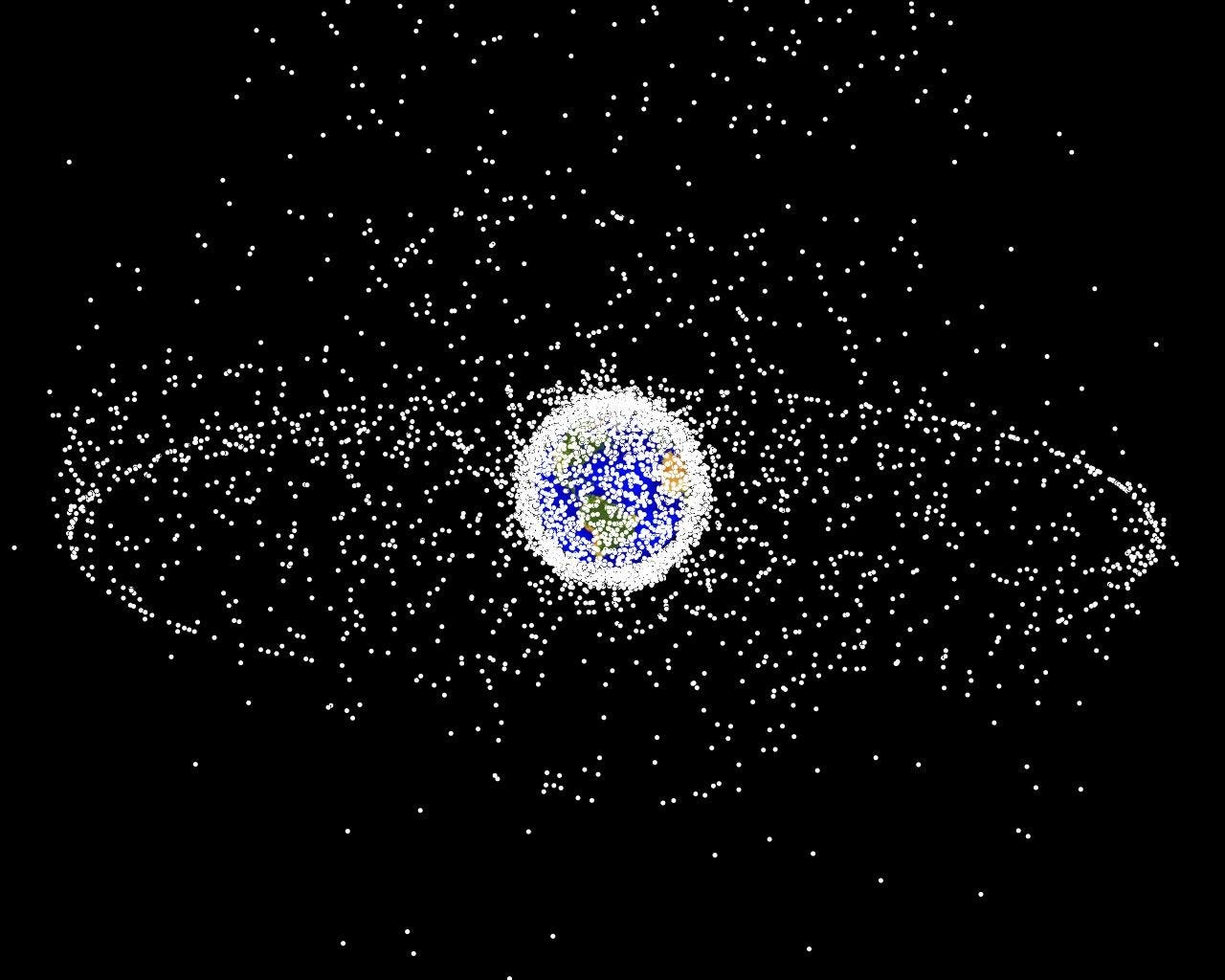

In cosmic terms, there is a phenomenon known as Kessler Syndrome: a hypothetical (but very plausible) scenario in which low Earth orbit becomes so clogged with debris from defunct satellites and other space junk that it becomes impossible to safely launch more rockets into space.

If such an event were to happen, humans would effectively be locked in (barring some new technology that would clean up outer space), and things would only get worse as time went on, since the space junk could collide with other space junk, leading to more space junk.

Locked in or locked out?

I worry that something similar may be happening with content online about EA.

Ten years ago, there were early forum posts, GiveWell, and a few books by Peter Singer.

Now, there are conferences and podcasts and tons of forum posts and lots of books and local EA chapters and newsletters and much, much more.

Don’t get me wrong, I love all of this content! As a firm believer in EA, I’m excited by the meteoric rise in activity and the increasing diversity of our interests.

However, when it comes to newbies to the movement, our online content may be at risk of its own Kessler Syndrome.

I’ll give you an example using someone very near to my heart: my grandma.

I recently became the EA NYC co-director through CEA’s Community Building Grants program. I’m SO excited for the role, and of course I told my family. My grandma is 93 (and she would absolutely MURDER me if she knew I disclosed her real age in public, so please don’t send this article to her!), and she did what any curious grandma would do when her grandson picks up a new interest: she Googled Effective Altruism.

Now, I don’t know exactly how she got there, but she wound up calling me about the coming AI apocalypse and whether we should have an emergency plan in case robots start taking over the world.

While I think it’s fabulous that my grandma has a budding interest in AI safety, it may not have been the best introduction for her. In effect, she was launched into the vacuum of space (i.e., the internet) and bombarded with blog posts, podcasts, books, and more.

I believe we risk alienating some newcomers if they feel overwhelmed or confused about the central tenets of EA, what it stands for, and how they can get involved.

Keep in mind, I don’t think answers to these questions are at all hard to come by. In fact, we have more infrastructure for newcomers than ever before, thanks to the amazing work of CEA, 80,000 Hours, and programs like the Community Building Grants.

My suggestion for avoiding EA Kessler Syndrome is actually quite simple: be mindful of the folks you’re speaking to about EA, and make sure they know about the simplest and cleanest way to learn more: the effectivealtruism.org website. It’s clear, concise, and extremely approachable. We can debate as a community what the best on-ramp to EA is, but for now the site seems to me like the clearest contender.

We ought to be as clear as possible when explaining EA to folks who are just learning about the philosophy, and we should do our best to make sure they see the movement and its members as approachable and accessible. Of course, this goes beyond the online content we share; it’s incumbent on each of us to be ambassadors to the best of our abilities, and that includes being gentle, courteous advocates who welcome new members with open arms and open minds.

And if there’s nothing else you take from this post, I hope you’ll at least remember to not launch your grandmas into outer space! 😋

Great message and fun to read. Although I'm skeptical of your grandma's personal fit, it certainly sounds like she has the passion to become an exceptional AI safety researcher. ;)

I have run into a similar problem when trying to introduce EA to others. It feels intuitive to give an example cause area, like AI safety or global poverty, but then the other person becomes much more likely to associate EA with just that cause area rather than with the larger question of how to do the most good.

At the same time, it seems hard to get someone new excited about EA without giving some examples of what the community actually does.

Great post, thanks!