In March 2020, I wondered what I’d do if, hypothetically, I continued to subscribe to longtermism but stopped believing that the top longtermist priority should be reducing existential risk. That then got me thinking more broadly about what cruxes lead me to focus on existential risk, longtermism, or anything other than self-interest in the first place, and what I’d do if I became much more doubtful of each crux.[1]

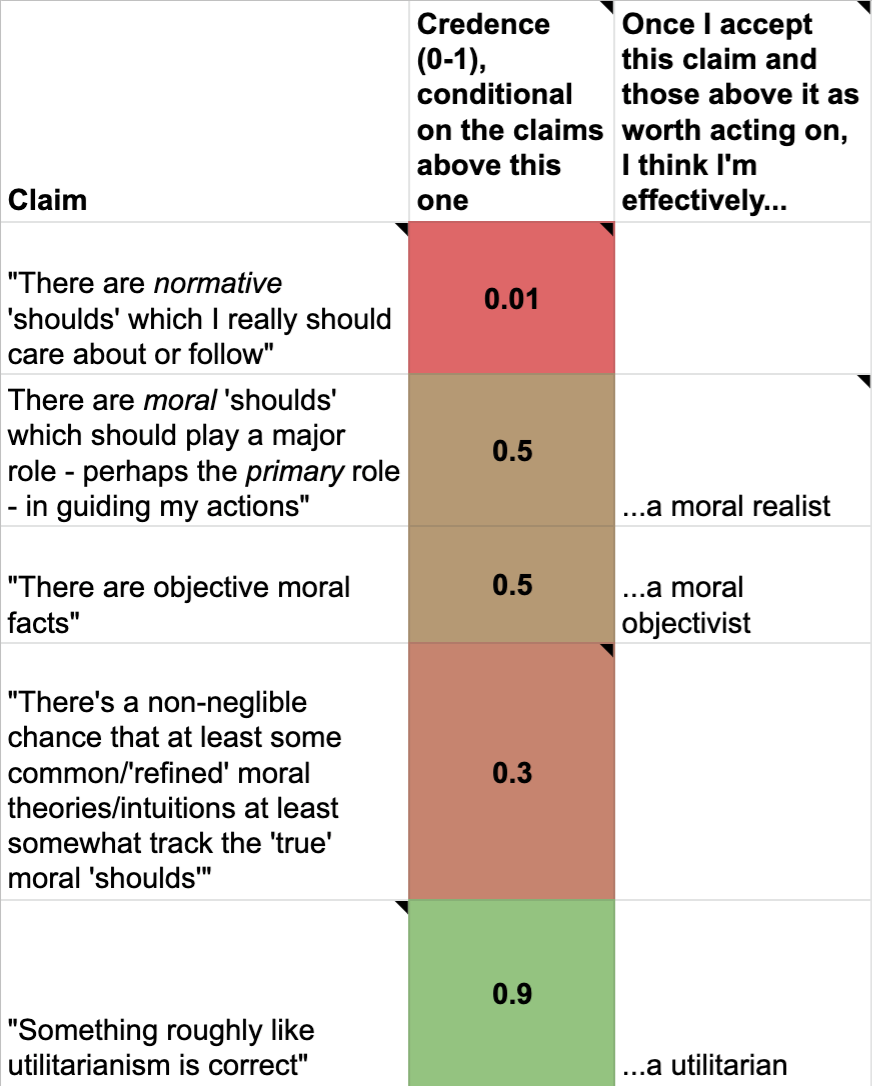

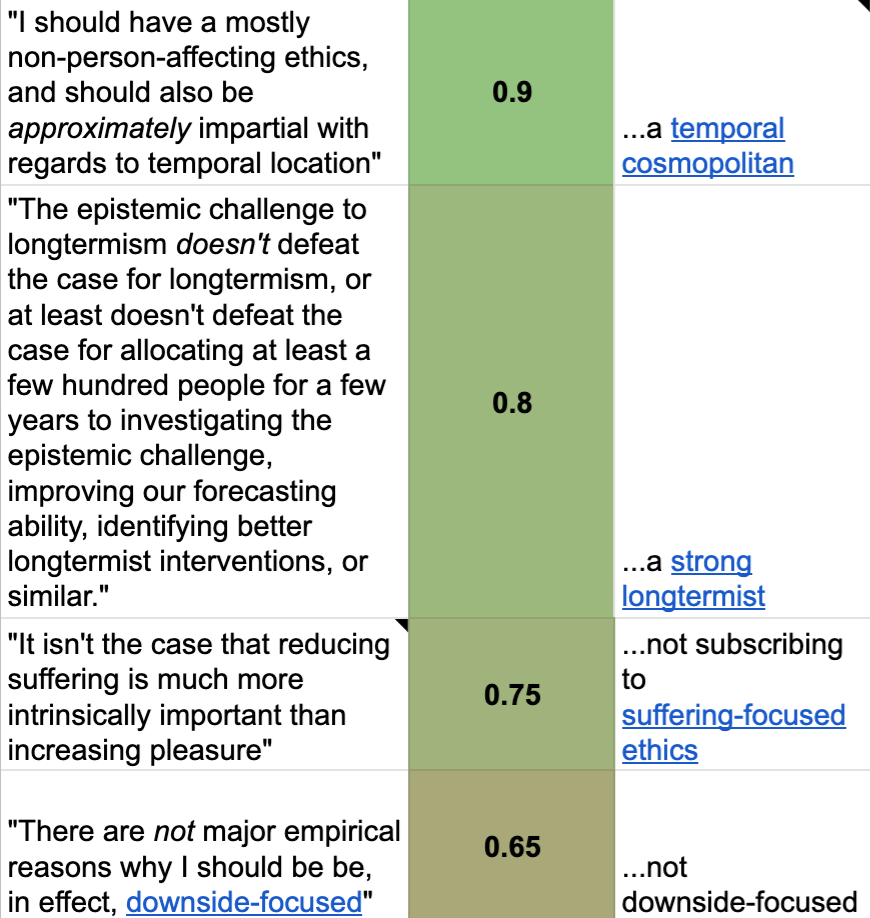

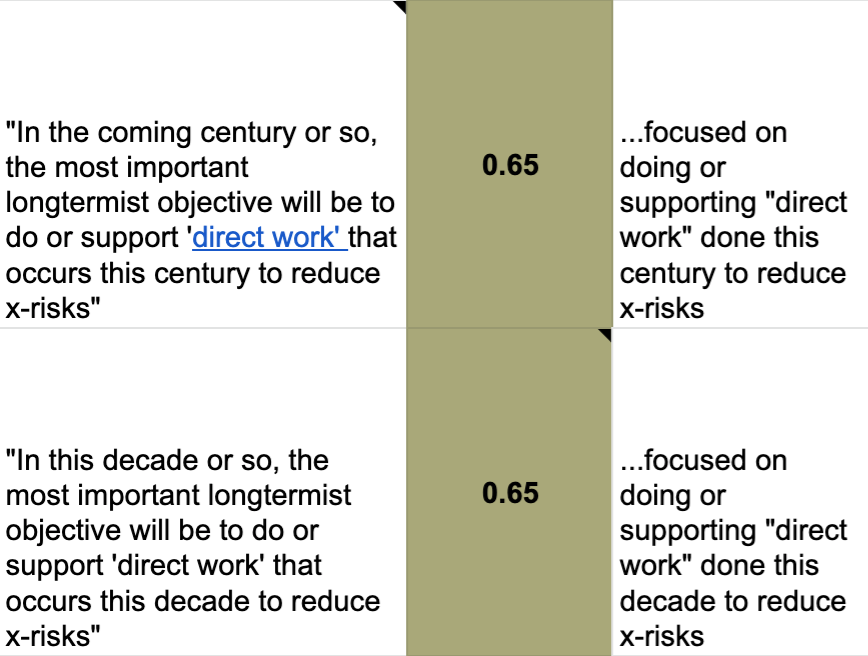

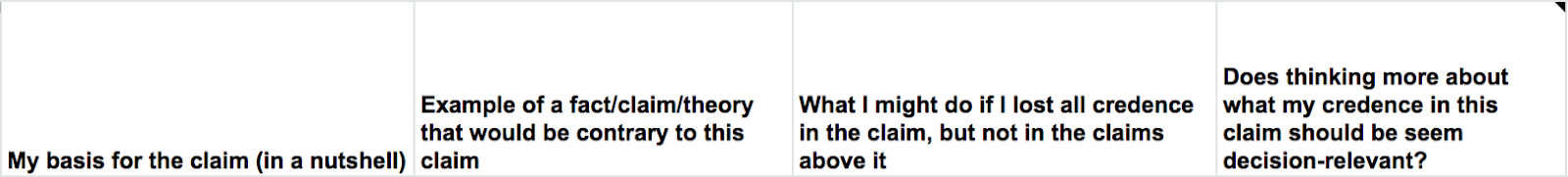

I made a spreadsheet to try to capture my thinking on those points, the key columns of which are reproduced below.

Note that:

- It might be interesting for people to assign their own credences to these cruxes, and/or to come up with their own sets of cruxes for their current plans and priorities, and perhaps comment about these things below.

- If so, you might want to avoid reading my credences for now, to avoid being anchored.

- I was just aiming to honestly convey my personal cruxes; I’m not aiming to convince people to share my beliefs or my way of framing things.

- If I were making this spreadsheet from scratch today, I might have framed things differently or provided different numbers.

- As it was, when I re-discovered this spreadsheet in 2021, I just lightly edited it, tweaked some credences, added one crux (regarding the epistemic challenge to longtermism), and wrote this accompanying post.

The key columns from the spreadsheet

See the spreadsheet itself for links, clarifications, and the following additional columns:

Disclaimers and clarifications

- All of my framings, credences, rationales, etc. should be taken as quite tentative.

- The first four of the above claims might use terms/concepts in a nonstandard or controversial way.

- I’m not an expert in metaethics, and I feel quite deeply confused about the area.

- Though I did try to work out and write up what I think about the area in my sequence on moral uncertainty and in comments on Lukas Gloor's sequence on moral anti-realism.


- This spreadsheet captures just one line of argument that could lead to the conclusion that, in general, people should in some way do/support “direct work” done this decade to reduce existential risks. It does not capture:

- Other lines of argument for that conclusion

- Perhaps most significantly, as noted in the spreadsheet, it seems plausible that my behaviours would stay pretty similar if I lost all credence in the first four claims

- Other lines of argument against that conclusion

- The comparative advantages particular people have

- Which specific x-risks one should focus on

- E.g., extinction due to biorisk vs unrecoverable dystopia due to AI

- See also

- Which specific interventions one should focus on


- This spreadsheet implicitly makes various “background assumptions”.

- E.g., that inductive reasoning and Bayesian updating are good ideas

- E.g., that we're not in a simulation or that, if we are, it doesn't make a massive difference to what we should do

- For a counterpoint to that assumption, see this post

- I’m not necessarily actually confident in all of these assumptions

- If you found it crazy to see me assign explicit probabilities to those sorts of claims, you may be interested in this post on arguments for and against using explicit probabilities.

Further reading

If you found this interesting, you might also appreciate:

- Brian Tomasik’s "Summary of My Beliefs and Values on Big Questions"

- Crucial questions for longtermists

- In a sense, this picks up where my “Personal cruxes” spreadsheet leaves off.

- Ben Pace's "A model I use when making plans to reduce AI x-risk"

I made this spreadsheet and post in a personal capacity, and it doesn't necessarily represent the views of any of my employers.

My thanks to Janique Behman for comments on an earlier version of this post.

Footnotes

[1] In March 2020, I was in an especially at-risk category for philosophically musing into a spreadsheet, given that I’d recently:

- Transitioned from high school teaching to existential risk research

- Read the posts My personal cruxes for working on AI safety and The Values-to-Actions Decision Chain

- Had multiple late-night conversations about moral realism over vegan pizza at the EA Hotel (when in Rome…)

I don't think this is quite right.

The simplest reason is that I think suffering-focused does not necessarily imply downside-focused. Just as a (weakly) non-suffering-focused person could still be downside-focused for empirical reasons (basically the scale, tractability, and neglectedness of s-risks vs other x-risks), a (weakly) suffering-focused person could still focus on non-s-risk x-risks for empirical reasons. This is partly because one can have credence in a weakly suffering-focused view, and partly because one can have a lot of uncertainty between suffering-focused views and other views.

As I note in the spreadsheet, if I lost all credence in the claim that I shouldn't subscribe to suffering-focused ethics, I'd:

That said, it is true that I'm probably close to 50% downside-focused. (It's even possible that it's over 50%; I just think that the spreadsheet alone doesn't clearly show that.)

And, relatedly, there's a substantial chance that in future I'll focus on actions somewhat tailored to reducing s-risks, most likely by researching authoritarianism & dystopias or broad risk factors that might be relevant both to s-risks and other x-risks. (Though, to be clear, there's also a substantial chunk of why I see authoritarianism/dystopias as bad that isn't about s-risks.)

This all speaks to a weakness of the spreadsheet, which is that it just shows one specific conjunctive set of claims that can lead me to my current bottom-line stance. This makes my current stance seem less justified-in-my-own-view than it really is, because I haven't captured other possible paths that could lead me to it (such as being suffering-focused but thinking s-risks are far less likely or tractable than other x-risks).

Another weakness is simply that these claims are fuzzy, and that I have fairly little faith that my credences even strongly reflect my own views (let alone that they are "reasonable"). So one should be careful about simply multiplying things together. That said, I do think that doing so can be somewhat fruitful and interesting.
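To make the two weaknesses above a bit more concrete, here's a minimal sketch of the arithmetic. The credences in it are entirely hypothetical and are not the values from my spreadsheet. It assumes each credence in a chain is conditional on the earlier claims holding, so that multiplying them gives the probability that the whole conjunctive path holds. It then shows how allowing for a second, equally hypothetical path to the same bottom line raises the overall probability, via P(A or B) = P(A) + P(not A) × P(B | not A).

```python
from math import prod

# HYPOTHETICAL credences, purely illustrative (not the spreadsheet's numbers).
# Each is a credence in one crux, conditional on the previous cruxes being true.
path_a = [0.9, 0.8, 0.7, 0.6]

def conjunction(credences):
    """Probability that every claim in a conjunctive chain holds:
    the product of the (conditional) credences."""
    return prod(credences)

def either_path(p_a, p_b_given_not_a):
    """P(A or B) = P(A) + P(not A) * P(B | not A)."""
    return p_a + (1 - p_a) * p_b_given_not_a

p_a = conjunction(path_a)

# HYPOTHETICAL second route to the same bottom line (e.g., a different set of
# cruxes), with an illustrative chance of holding given that path A fails.
p_b_given_not_a = 0.3
p_either = either_path(p_a, p_b_given_not_a)

print(f"Path A alone:     {p_a:.3f}")       # 0.302
print(f"Path A or path B: {p_either:.3f}")  # 0.512
```

With these made-up numbers, the single conjunctive path looks fairly weak on its own, while accounting for even a modest alternative path pushes the overall probability noticeably higher. And since each input is itself a fuzzy credence, the outputs inherit (and compound) that fuzziness.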