Summary

- We use the term “impact investing” to refer to any investment made for the dual purpose of having a positive impact and generating a financial return

- Impact investing has received relatively little attention from an EA perspective

- We review an investment case study produced by the Total Portfolio Project (TPP) leveraging research from Lionheart Ventures, Let’s Fund, and Rethink Priorities

- The case study uses TPP’s Total Portfolio Return (TPR) framework, a rigorous framework which can be used to assess the value of an impact investment relative to other ways of generating impact

- The case study estimates that an impact investment into Mind Ease averts one DALY for roughly every $150 invested, in expectation, comparing favorably to GiveWell top charities before financial returns are taken into account

- We show how the TPR framework can be used to determine that, for a donor who is already making the best possible use of donations and investing to give, investing in Mind Ease appears better than either additional donations or more investing to give

- Given the lack of prior research in this area and the potential for impact investing to significantly benefit many cause areas, we recommend that the EA community direct more research and attention to this area.

- We highlight several ways to contribute including: dialogue and movement building, evaluations, research, entrepreneurship, and shifting money towards impact investing.

Background and Implications

Impact investing has largely been neglected by the EA community. This is perhaps due to the perception that most impact investing is relatively low impact per dollar invested. Conversely, EA principles are largely neglected within impact investing. We believe both sides could benefit from exploring the potential of impact investing to produce effective results.

Complicating things is the fact that the term “impact investing” has many possible interpretations and connotations. It generally refers to one or more strategies within a disparate range of financial strategies that are associated (correctly or not) with “impact,” from buying (or not buying) certain stocks, to providing loans to nonprofits and disadvantaged communities, to funding early-stage startups with a specific social mission.

We only consider an investment impactful if it generates results that, in expectation, would not occur without it. Among the various interpretations of the term, we believe only some approaches offer potential for impact. In this article, we therefore use the term “impact investing” to refer to any investment that is expected both to have a positive impact on the world and to generate financial returns. Such investments could range from early-stage startup investments to ESG-related strategies in liquid stock markets (but note that ESG does not imply impact).

There are many parallels between the impact investing space at present and the donation space before EA emerged. Most EAs believe the impact of charitable donations has a heavy-tailed distribution, meaning a small number of donations/charities have a much higher impact (sometimes orders of magnitude more) than donations/charities on average. We think impact investing likely has a distribution of impact similar to or even more extreme than donations (see here for a technical discussion). Analogous to the early days of EA, there is no evaluator like GiveWell using rigorous evaluation techniques to find the most impactful investing opportunities.

In this article, we share what we think is the first work in the EA community attempting to rigorously evaluate a specific impact investment and compare it to high impact donation options: in this case, comparing an investment into the EA-aligned anti-anxiety app Mind Ease with a donation to GiveWell top charities. We find that, after careful counterfactual adjustments, an investment into Mind Ease requires an expected $150 invested per DALY averted, compared to a cost per DALY averted of $860 for GiveDirectly and $50 for AMF. However, unlike a donation, the investment is expected to generate financial returns. Because these returns differ from those of a donation, they need to be accounted for. To handle this, we review how the Total Portfolio Return (TPR) framework enables impact and financial returns to be considered together.

While these findings are promising, we would like to emphasize the potential risks with impact investing. Firstly, if done without care, impact investing could make the world counterfactually worse (e.g. if the impact is incorrectly assessed as positive when it is negative). Secondly, we suspect investing is psychologically easier than donating, as investments hold the promise of generating financial returns. This may draw some people away from donating and towards impact investing, even when the impact case for a donation is stronger. Finally, it may ultimately be determined that there are so few highly impactful investment opportunities that further investigation into impact investing is not a good use of resources.

Despite these risks, we think the potential for impact investing is sufficiently great as to warrant more attention from an EA perspective. There has been extensive EA-aligned work to assess the impact of donations one can make today, and recent work has explored the idea of investing to give later. Impact investing opens avenues for effecting change in the world that are complementary and categorically different. These can include "differential technology" strategies, where you invest to scale up and accelerate robustly good technologies that compete against other technologies with negative impacts (or risks of negative impacts). They can also include engagement strategies, such as using shareholder voting and securing board seats to influence corporate behavior. There also seems to be a lot of unexplored territory that may contain valuable ideas we have not thought of yet or have not properly explored.

Impact investing could be useful for a wide range of value systems and across cause areas ranging from AI and biosecurity to animal welfare and global development. Not only can the direct effects of an investment be impactful, as in this case study, but its indirect and long-term effects, for example through field building, can also be important. The impact could come from targeted shifts in investment behavior for a small number of people (such as the EA community) towards highly impactful ventures, or from a small shift in the $500 billion to $31 trillion already allocated to responsible investing.

Given (1) the potential size of the impact investing space, (2) the lack of attention it has received, (3) this case study that demonstrates the existence of a highly impactful investment, and (4) the unique and appealing characteristics of impact investing (such as corporate influence and return of capital), we think the field of impact investing is a promising area for further inquiry. If further research supports the existence of high-impact opportunities that can be identified in an efficient way, we think there is an exciting opportunity to influence a large amount of external capital and to deploy EA capital more effectively. At the end of this post, we highlight ways you can contribute to these efforts.

Mind Ease Case Study

Acknowledgements

Brendon Wong and Will Roderick’s investigation of impact investing would not have started without the Longtermist Entrepreneurship Fellowship, spearheaded by Jade Leung, Ben Clifford, and Rebecca Kagan. This case study would not have been possible without the efforts of the Total Portfolio Project (TPP), led by Jonathan Harris and Marek Duda (now at Lantern Ventures), which has been pioneering ways to apply an EA perspective to investing including impact investing. TPP’s work on the case study, in turn, would not have been possible without input from Lionheart Ventures (David Langer) who assessed Mind Ease from a business perspective and from Let’s Fund (Hauke Hillebrandt) and Rethink Priorities (Derek Foster) in assessing Mind Ease from an impact perspective. Mind Ease was created by the startup studio Spark Wave. The entire analysis was reliant on engagement from the Mind Ease and Spark Wave teams, including close collaboration with Spencer Greenberg and Peter Brietbart. In addition to these stakeholders, we’d like to acknowledge Aaron Gertler, Connor Dickson, and Rhys Lindmark for reviewing this article. As a disclaimer, reviewers may or may not agree with the points made in this publication. Thank you to all the partners and stakeholders involved!

Framework

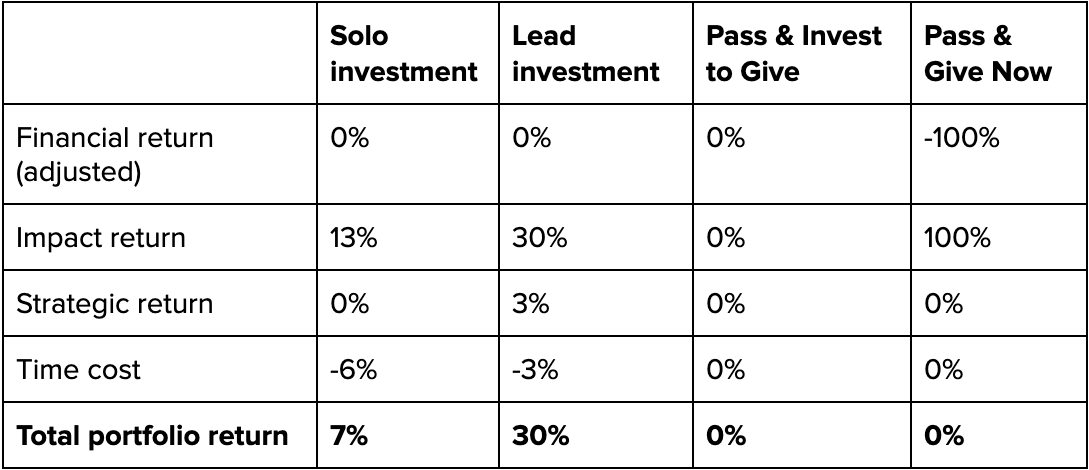

TPP has developed the Total Portfolio Return framework (the "Framework") to help altruistic investors combine the financial and impact considerations of an investment in their decision making. For the analysis of Mind Ease, they assessed the total portfolio returns of three options: to fund the entire seed round ("Solo"), to be the first investor in the round and encourage others to follow ("Lead"), or to pass on the investment ("Pass").

The Framework groups the value of an investment into several "return" categories. The main categories necessary to understand the case study are:

- Financial returns: the conventional risk-adjusted, excess, expected financial returns of the investment (excess means over and above the market interest rate). These returns have altruistic value because they can be used for future giving (and impact investing). To distinguish this definition from basic financial returns, we refer to these returns as "adjusted financial returns." Because of market efficiency, most investments will have zero adjusted financial returns.

- Impact returns: the counterfactual impacts on Mind Ease's users of accelerating the company's progress with the investment. These impacts are valued in terms of the donation to a benchmark highly effective charity required to generate an equivalent amount of impact.

- Strategic returns: the more indirect effects of investing in Mind Ease, such as forging connections in the mental health space.

An investment's Total Portfolio Return (TPR) is then the sum of its returns in each category. In general any investment with a TPR greater than zero is worth doing. However, often an investor may only be able to make one investment among a set of alternatives. In this case, they should choose the investment with the greatest TPR.

To allow for the inclusion of all potentially important considerations in the decision making process, all returns are aggregated into a decision matrix with each decision option given a column and each consideration a row. This way of transparently presenting the assessments of each consideration borrows from the field of Multiple-Criteria Decision Analysis while retaining a "returns" framing that is natural for investors.
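As a minimal sketch of the two decision rules above, assuming each option's returns are already expressed as fractions of the amount committed, the Python below stores one column of the decision matrix per option, sums it into a TPR, and applies either rule. The option names, return categories, and numbers are hypothetical, and costs such as time enter as negative returns.

```python
from typing import Dict, List

# One column of the decision matrix: return category -> value, as a fraction
# of the amount committed. Costs (e.g. time) are entered as negative values.
Returns = Dict[str, float]


def tpr(returns: Returns) -> float:
    """Total Portfolio Return: the sum of an option's returns across categories."""
    return sum(returns.values())


def worth_doing(options: Dict[str, Returns]) -> List[str]:
    """Independent opportunities: any option with a positive TPR is worth doing."""
    return [name for name, r in options.items() if tpr(r) > 0]


def choose_one(options: Dict[str, Returns]) -> str:
    """Mutually exclusive opportunities: pick the option with the greatest TPR."""
    return max(options, key=lambda name: tpr(options[name]))


if __name__ == "__main__":
    options = {  # hypothetical numbers for illustration only
        "Option A": {"financial": 0.05, "impact": 0.00, "time cost": -0.01},
        "Option B": {"financial": -0.10, "impact": 0.25, "time cost": -0.02},
        "Option C": {"financial": -0.05, "impact": 0.02, "time cost": -0.01},
    }
    print(worth_doing(options))  # ['Option A', 'Option B']
    print(choose_one(options))   # Option B
```

Note that Option B wins despite a negative financial return, which mirrors the point made later that an altruistic investor can accept below-market adjusted financial returns when the impact return is large enough.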

TPR Framework Applied to Mind Ease

The Framework is careful to distinguish Enterprise Impact and Investor Impact. Enterprise Impact is defined as the counterfactual good done by the enterprise (i.e. relative to a world where Mind Ease doesn't exist). Investor Impact is the counterfactual increase in Enterprise Impact caused by the investor making the investment in Mind Ease (rather than choosing to not invest).

To assess Investor Impact, an investor first needs to form an assessment of the Enterprise Impact if they invest and then compare this to an assessment of Enterprise Impact if they choose not to invest ("Pass"). Only after having assessed the likely Enterprise Impact in both scenarios can they assess their own impact. This is the challenge of assessing your impact with any funding opportunity. With donations it is perhaps a little easier as you could argue that what happens under "Pass" is simply that the charity doesn’t get the money and doesn’t have the impact. But with (return-generating) investments it’s even more crucial to assess the counterfactual scenario.

For illustrative purposes we consider two options under Pass: Pass & Invest to Give and Pass & Give Now.

The following steps were taken to assess the TPRs for each option.

Step 1: Business Analysis and Financial Returns

TPP first considered Lionheart's assessment of the business prospects of Mind Ease. The assessment included Mind Ease’s background and plans (including offering a free or discounted version of the app to low/middle income countries), historical downloads and active users, competitors, and financial details. Some strengths are that Mind Ease is operating with a revenue model that has already been validated by competitors, the team’s product development is above average, the unit economics are promising, and the potential user base is large. Some identified risks are that the unit economics could be threatened by factors such as competition and increasing costs of user acquisition with scale, and the team doesn’t have a lot of past experience scaling consumer applications.

Based on Lionheart Ventures’ analysis, the assessment considered a range of possible user growth estimates over the next eight years. These estimates go up to 1,500,000 users in an optimistic success scenario (with considerable expected variance). These estimates are used in the calculation of impact returns below.

Financial returns

In setting the adjusted financial return it is important to consider the efficient market hypothesis (EMH). In the words of Eugene Fama, the original author of the EMH: "I take the market efficiency hypothesis to be the simple statement that security prices fully reflect all available information… A weaker and economically more sensible version of the efficiency hypothesis says that prices reflect information to the point where the marginal benefits of acting on information (the profits to be made) do not exceed the marginal costs." This hypothesis is supported by compelling intuition—if prices did not accurately reflect all information, then traders would have an opportunity to exploit the discrepancy to make a profit, which would lead to prices becoming more accurate.

Lionheart's view was that Mind Ease is as strong as many other mental health startups that raise venture financing. Based on this we follow the original analysis and take the view that the adjusted financial returns of the investment in Mind Ease are around zero (like most investments in the market as these are adjusted returns).[1]

Note that an altruistic investor that is generating Investor Impact with their investment should be willing to make it even if the adjusted financial return is negative. In fact, we expect this to be the case for most impact investments, especially if pursued at scale. This doesn't necessarily mean such investments will have "low" returns in an absolute sense. It means they will have lower adjusted financial returns than a socially neutral investor would accept, because impact investors will be willing to take large, concentrated positions in the best opportunities (resulting in large risk adjustments). The absolute level of the non-risk adjusted returns could still be quite high and potentially attractive to altruistic investors with high risk appetites.

We assume that the investor has optimized the rest of their portfolio before considering investing in Mind Ease. For Pass & Invest to Give, assuming their main investments generate no other types of returns (e.g. strategic), the adjusted financial return will be 0%. This is because if this were not the case then the investor would want to increase the amount they invest to give, suggesting they were actually not yet optimized. For Pass & Give Now the adjusted financial return is simply -100%.

Step 2: Investor Impact and Impact Returns

User Impact Assessment

TPP first analyzed the expected impact of Mind Ease per user. Hauke's part of this analysis is available online, with the full 76-page report here.

At a high level, the assessment was informed by priors based on the standard Scale, Neglectedness, and Solvability (SNS) framework. For scale, Hauke cited a finding from the Global Burden of Disease Study indicating that over 1% of all poor health and death worldwide is caused by anxiety. For neglectedness, Hauke cited The Happier Lives Institute’s findings that most people suffering from mental health issues don’t get proper treatment, particularly in low/middle income countries (76%-85% don’t get any treatment). For solvability, Hauke determined there is “relatively good evidence that Mind Ease’s interventions work [in general, not including apps], that they can work in the context of an app, and the average effect size is 0.3.”

The main units of the analysis were disability adjusted life years (DALYs).[2] Hauke utilized estimates of the counterfactual GAD-7 anxiety score reduction of Mind Ease use and the number of years the effect is likely to persist to estimate that users served by Mind Ease will experience a counterfactual 0.25 DALY reduction. Derek Foster's estimate was much lower at 0.01 DALYs averted per user. This was principally because they modeled users as having less severe anxiety on average and they were much more pessimistic about the duration of the effect.

Based on principles of robust decision making under uncertainty, it is important to explore how the results of the analysis vary across a range of possible weights on Foster’s versus Hillebrandt’s analysis. In the analysis below, we focus on the case where we weight Foster’s analysis (which was more pessimistic) more highly than Hillebrandt’s. This is because if Mind Ease still looks attractive in this "worst case," then it would definitely look attractive with weights that are less tilted towards Foster’s estimate. So, to determine a single value to use in the rest of the model, TPP tilted towards Foster's estimate as much as they thought was reasonable, resulting in a value of 0.02 DALYs per user.
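The text does not say how TPP pooled the two per-user estimates. As an illustration only, the sketch below assumes two common pooling rules, a weighted arithmetic mean and a weighted geometric mean, sweeps the weight placed on Hillebrandt's estimate, and backs out the weight each rule implies for TPP's chosen 0.02 DALYs per user. Both pooling rules and the function names are assumptions, not TPP's method.

```python
import math

HILLEBRANDT = 0.25  # DALYs averted per user (Hillebrandt's estimate)
FOSTER = 0.01       # DALYs averted per user (Foster's estimate)


def linear_mix(w_h: float) -> float:
    """Weighted arithmetic mean; w_h is the weight on Hillebrandt's estimate."""
    return w_h * HILLEBRANDT + (1 - w_h) * FOSTER


def geometric_mix(w_h: float) -> float:
    """Weighted geometric mean, an alternative (assumed) pooling rule."""
    return math.exp(w_h * math.log(HILLEBRANDT) + (1 - w_h) * math.log(FOSTER))


def implied_weight_linear(target: float) -> float:
    """Weight on Hillebrandt that makes the arithmetic mix equal `target`."""
    return (target - FOSTER) / (HILLEBRANDT - FOSTER)


def implied_weight_geometric(target: float) -> float:
    """Weight on Hillebrandt that makes the geometric mix equal `target`."""
    return math.log(target / FOSTER) / math.log(HILLEBRANDT / FOSTER)


if __name__ == "__main__":
    for w in (0.0, 0.05, 0.25, 0.5, 1.0):
        print(f"w_h={w:.2f}  linear={linear_mix(w):.3f}  geometric={geometric_mix(w):.3f}")
    # Weight on Hillebrandt implied by the chosen 0.02 DALYs per user
    print(round(implied_weight_linear(0.02), 3))     # 0.042
    print(round(implied_weight_geometric(0.02), 3))  # 0.215
```

Under either assumed rule, 0.02 DALYs per user places most of the weight on Foster's more pessimistic estimate, which is the "worst case" tilt the paragraph describes.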

Investor Impact

Investor Impact is the total counterfactual DALYs averted by making the investment. It comes from the investment's counterfactual effect on Enterprise Impact. For simplicity we analyze this in terms of the probability of Mind Ease getting its required funding (and then in expectation generating its Enterprise Impact). We use this as a proxy for a range of ways the investment could affect the enterprise. In reality, for example, the investment could increase the chance of finding success, or decrease the time required to obtain the funding, or increase the final amount raised.

How can an investment affect the enterprise given the EMH and our assumption of a "market rate" zero adjusted financial return? First, the EMH only holds on average - it doesn't rule out exceptions. We are not claiming Mind Ease is an average investment - it could be exceptional but evaluating that is out of scope for this case study. Second, the strength of market efficiency is open to debate and early stage venture markets are definitely less efficient than the liquid stock markets that are the setting for most studies of the EMH. Third, and perhaps most importantly, the EMH really isn't the correct economic concept to be pointing to in this context. The principles behind the EMH may be relevant here, but not the EMH itself. As discussed in the above subsection on financial returns, the EMH is only a statement about pricing.

What is relevant for impact is to know the number of "good" venture ideas that arise and would offer zero adjusted financial returns (because of the EMH), but that never get funded. These ventures could exist (and fail) because of various informational and structural frictions. One general reason to expect such attempted ventures to exist is because "ideas are cheap" while economic resources are scarce. There are usually more venture ideas to fund than there is capital and talent to invest in them. Thus, it is possible for Mind Ease to have an adjusted financial return of zero (from the perspective of an investor who has already found it) and still have a probability of not being funded. Based on empirical base rates [3] and input from experts, TPP set the increase in funding probability (relative to Pass) at 15% and 20% for Lead and Solo, respectively, and the chance of success at 25%.

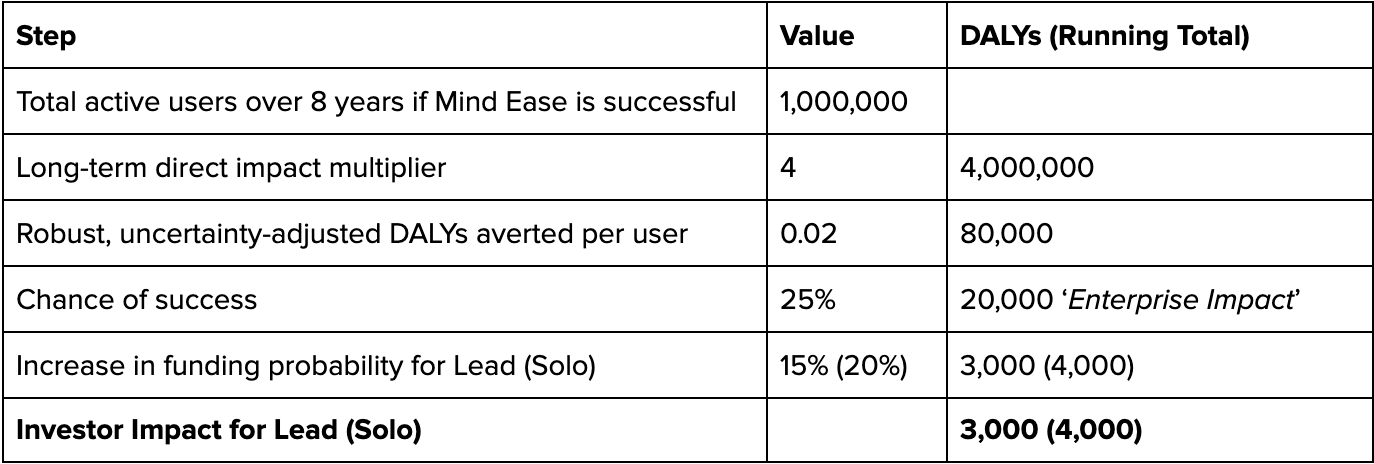

TPP merged the business analysis and the per user impact assessment into a quantitative model for assessing Investor Impact. A simplified back-of-the-envelope calculation that summarizes TPP’s full model proceeds as follows for the case of Lead:

- On average a successful Mind Ease has 1,000,000 users in the first 8 years.

- A successful Mind Ease will serve at least 4x this many users in future years.

- The average counterfactual benefit is 0.02 DALYs averted per user.

- A chance of success of 25% implies an Enterprise Impact of 22,000 DALYs.

- The funding probability increases then imply Investor Impact of 3,300 DALYs for Lead and 4,400 DALYs for Solo.

The key steps above are represented in the table below.

Impact Returns

One way to compare Mind Ease to different investments and donations is to divide the investment amount by the DALYs averted to get an "investment-effectiveness" of approximately $150 invested per DALY averted. This can be compared with a $860 cost per DALY averted for GiveDirectly and $50 cost per DALY averted for AMF, which are cited in Hauke’s report. Such a comparison indicates that an investment in Mind Ease has an "investment-effectiveness" of the same order of magnitude as the cost-effectiveness of a GiveWell top charity. However, this ignores the expected financial returns of the investment.
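The arithmetic in the bullet list and the paragraph above can be sketched in Python as follows. It takes the 22,000 DALY Enterprise Impact as given (rather than rebuilding TPP's full user model), treats Investor Impact as the increase in funding probability times that Enterprise Impact, and then divides each investment amount by the result. The function names are illustrative, not TPP's.

```python
from typing import Dict, Tuple

ENTERPRISE_IMPACT_DALYS = 22_000  # expected Enterprise Impact, taken from the text

# Option -> (increase in funding probability relative to Pass, investment in USD)
OPTIONS: Dict[str, Tuple[float, float]] = {
    "Lead": (0.15, 500_000),
    "Solo": (0.20, 1_500_000),
}


def investor_impact(enterprise_impact: float, funding_uplift: float) -> float:
    """Counterfactual DALYs caused by the investor: the investment's increase in
    the probability that Mind Ease is funded, times the expected Enterprise Impact."""
    return funding_uplift * enterprise_impact


def dollars_per_daly(investment: float, dalys: float) -> float:
    """'Investment-effectiveness': dollars invested per DALY averted."""
    return investment / dalys


if __name__ == "__main__":
    for name, (uplift, amount) in OPTIONS.items():
        dalys = investor_impact(ENTERPRISE_IMPACT_DALYS, uplift)
        print(f"{name}: {dalys:,.0f} DALYs, ${dollars_per_daly(amount, dalys):,.0f} per DALY")
    # Lead: 3,300 DALYs, $152 per DALY
    # Solo: 4,400 DALYs, $341 per DALY
```

The Lead figure of about $152 per DALY is the "approximately $150" quoted above; as the text notes, this ratio ignores the investment's expected financial returns.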

To compare impact and financial returns, it is useful to define a Best Available Charitable Option (BACO). This represents the most effective way that the same impacts could be produced with a donation (e.g. AMF and other GiveWell top charities for global health). We use the most effective opportunities identified by GiveWell as a single BACO that can avert a DALY for roughly $50.

Investors are comfortable working with and estimating financial returns. To utilize this comfort, Investor Impact can be compared to the additional financial return that would be necessary to produce enough additional funding for the future BACO to generate the same impact. This hypothetical "return" is the "Impact Return" = ("Investor Impact" * "Future BACO cost-effectiveness") / "Investment Amount".[4] This values Investor Impact in terms of the most cost-effective charitable way to produce the impact in the future.

At least in the case of global health, we believe future costs per DALY averted are generally expected to be higher (that is, less impact per $). To be conservative in calculating impact returns, we simply use current cost-effectiveness, which gives a lower bound on the impact returns if costs per DALY do rise. If a more detailed forecast were used, then, since we expect it to predict lower effectiveness on average, it would imply higher impact returns for the Mind Ease investment. This reflects that if generating impact will be more costly in the future, then it is more urgent to generate impact today. Given this potential urgency, we expect our assessments of the impact returns of investing in Mind Ease to be underestimates in this regard.

For Lead, the investment amount is $500,000 and the Investor Impact, rounded down from the 3,300 DALYs above, is roughly 3,000 DALYs. It would cost $150,000 (3,000 × $50) to avert this many DALYs with grants to the BACO. This implies an impact return of 30% ($150,000 / $500,000) for Lead. For Solo, an Investor Impact of roughly 4,000 DALYs (rounded down from 4,400) similarly implies an impact return of 13% ($200,000 / $1.5 million) on the associated $1.5 million investment.
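A minimal sketch of the Impact Return formula, using the rounded Investor Impact figures and the $50/DALY BACO from the text. The extra cost values in the loop are hypothetical and only illustrate the point made above: if the future BACO costs more per DALY, the same Investor Impact translates into a higher impact return.

```python
def impact_return(investor_impact_dalys: float,
                  future_baco_cost_per_daly: float,
                  investment_usd: float) -> float:
    """Impact Return = (Investor Impact * Future BACO cost-effectiveness) / Investment Amount.

    The BACO figure is in $/DALY, so the numerator is the donation to the BACO
    that would avert the same number of DALYs as the investment.
    """
    return investor_impact_dalys * future_baco_cost_per_daly / investment_usd


BACO_COST_PER_DALY = 50.0  # current GiveWell top-charity figure used in the text

if __name__ == "__main__":
    print(f"Lead: {impact_return(3_000, BACO_COST_PER_DALY, 500_000):.0%}")    # Lead: 30%
    print(f"Solo: {impact_return(4_000, BACO_COST_PER_DALY, 1_500_000):.0%}")  # Solo: 13%
    # Hypothetical higher future costs per DALY raise the impact return.
    for cost in (50.0, 75.0, 100.0):
        print(f"${cost:.0f}/DALY -> Lead impact return {impact_return(3_000, cost, 500_000):.0%}")
```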

Note that there are compelling reasons to compare the impact of different interventions in terms of subjective well-being instead of DALYs. This was not done in the original Mind Ease analysis and we don’t attempt it here. If we did, we suspect it would increase our assessment of Mind Ease’s impact while leaving the BACO roughly the same, increasing the impact returns.

Impact returns can be assessed for any investment including grants. The impact return of "Pass & Invest to Give" is 0% as we assume the associated investments generate zero Investor Impact (the impact of the eventual giving is accounted for as financial returns). The impact return of "Pass & Give Now" is 100% as we assume any additional donations are given to the BACO.[5]

Step 3: Strategic Returns and Time Costs

Other criteria that were considered were the time cost of ongoing engagement with the investment and the value of building strategic connections with other investors. Just as for the financial and impact returns, the strategic return and time cost are assessed based on the value placed on these considerations divided by the investment amount. The values of these returns were assessed intuitively based on how much the investor might value their time and be willing to pay for similar strategic connections. The values we present below for these returns are only illustrative and in this case they don't impact the ultimate decision.

Step 4: Decision Matrix

Having reviewed the assessment of each consideration, we can now combine them into a decision matrix.

Based on the decision matrix, Lead is the best option. The decision is dominated by the assessed impact returns. Lead would still be the best option even if the adjusted financial return were as low as -30%. Note that while these returns may seem large, they are for the entire length of the investment (i.e. potentially 8+ years, so 30% corresponds to roughly 3% per year).
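The decision described above can be reproduced with a small sketch. It assumes, as the text does, that Lead and Solo share the same adjusted financial return r, uses the impact returns and Pass-option financial returns stated earlier, and leaves out the illustrative strategic returns and time costs, which the text says do not change the decision. It checks which option wins for a few values of r, computes the break-even r for Lead, and converts the 30% holding-period return into an annual rate over an assumed 8-year holding period.

```python
from typing import Dict

# Impact returns from the text, as fractions of the amount committed.
IMPACT: Dict[str, float] = {
    "Lead": 0.30,
    "Solo": 0.13,
    "Pass & Invest to Give": 0.00,
    "Pass & Give Now": 1.00,
}
# Adjusted financial returns of the two Pass options, from the text.
PASS_FINANCIAL: Dict[str, float] = {"Pass & Invest to Give": 0.00, "Pass & Give Now": -1.00}


def decision_matrix(r: float) -> Dict[str, Dict[str, float]]:
    """Rows are considerations, columns are options. `r` is the adjusted
    financial return of the Mind Ease investment, shared by Lead and Solo."""
    financial = {"Lead": r, "Solo": r, **PASS_FINANCIAL}
    return {"adjusted financial": financial, "impact": dict(IMPACT)}


def tprs(matrix: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Total Portfolio Return of each option: the sum of its column."""
    return {opt: sum(row[opt] for row in matrix.values()) for opt in IMPACT}


def best_option(r: float) -> str:
    """Option with the greatest TPR (ties go to the first option listed)."""
    totals = tprs(decision_matrix(r))
    return max(totals, key=lambda opt: totals[opt])


def lead_breakeven() -> float:
    """Lowest shared r at which Lead still ties the best Pass option. Lead always
    beats Solo here, since both share r and Lead has the higher impact return."""
    best_pass = max(PASS_FINANCIAL[opt] + IMPACT[opt] for opt in PASS_FINANCIAL)
    return best_pass - IMPACT["Lead"]


def annualized(total_return: float, years: float) -> float:
    """Convert a return over the whole holding period into a per-year rate."""
    return (1 + total_return) ** (1 / years) - 1


if __name__ == "__main__":
    for r in (0.0, -0.29, -0.31):
        print(r, best_option(r))
    print(lead_breakeven())              # -0.3
    print(f"{annualized(0.30, 8):.1%}")  # 3.3%
```

Both Pass options have a TPR of zero (an optimized invest-to-give portfolio, or a donation whose -100% financial return is offset by a 100% impact return), which is why Lead's break-even financial return equals minus its impact return.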

Ultimately, the investor for whom this analysis was prepared chose Lead. Mind Ease was able to raise the remaining funds and is now fully funded and growing.

How to Contribute to This Area

Here, we list some of the ways to contribute to this space, but these are just a high-level starting point. If you have an idea for a contribution that you would like to see or do, please get in touch by email.

- Dialogue and movement building. This could be both within EA and in the broader impact investing community, creating a movement to give more attention and perspectives to new ideas. This could look like more EA Forum posts about how impact investments can play a role in the impact portfolio of EA. Another example is Sanjay’s work on shaping the field of ESG investing. It could also be helpful to bring EA-aligned ideas, such as counterfactual impact, into the public consciousness. This could also look like giving critique and feedback on the TPR framework discussed in this post.

- Evaluations. Impact evaluations could look like assessing counterfactual impact per dollar invested across a range of cause areas, investment types, or individual opportunities. The Mind Ease case study covered above, conducted by Total Portfolio Project, is an example of this type of work. In 2017, Will MacAskill recommended that a person or group should explore creating a GiveWell for impact investing, “where you could search for the best impact investing opportunities from an EA perspective.”

- Research. Separate from the applied work of evaluations, there are important research questions that require a more theoretical approach. These include what impact we should expect from investments with different fundamental characteristics and how impact investments should fit into the EA portfolio. Total Portfolio Project's recent technical papers (model, framework) are a start in this direction and offer a foundation on which future work can build. As far as we are aware, academic-quality research on impact investing from an EA perspective is very neglected.

- Even if debates about the value of impact investing are never resolved, research in this area can still be highly valuable because it demands answers to fundamental questions in altruistic economics. As Eugene Fama wrote about the EMH: "It is a disappointing fact that… precise inferences about the degree of market efficiency are likely to remain impossible. Nevertheless, judged on how it has improved our understanding of the behavior of security returns, research on market efficiency is among the most successful in empirical economics."

- Entrepreneurship. EA-aligned entrepreneurship through new impactful startup ventures like Mind Ease could leverage the funding in this space. These ventures could be in a range of cause areas from mental health and clean meat to biosecurity and AI.

- Shifting money towards impact investing. Money could be directed towards specific investments (for informational or impact purposes), research, or evaluations within EA or in the broader impact investing community.

Financial Returns

If Mind Ease were to actually receive VC funding, then the EMH would imply it is a zero adjusted financial return investment for these VCs. Because VCs must earn a fee for their work, this suggests our investor would be looking at a positive adjusted financial return if they were able to invest on the same terms as the fund manager without paying for the VC’s operating costs (e.g. without paying a fee, essentially free-riding on the hypothetical VC). If this happened, it could be viewed as monetizing the investor's reputation (or whatever other reason they were allowed to participate).

The adjusted financial returns would be more likely to be zero if only angels invest. However, the returns could also be positive if the founders wanted to offer aligned investors an incentive to invest. The returns could be negative if the business warranted a lower valuation than an average venture-backed startup but it were not possible to negotiate the valuation down sufficiently (because doing so too aggressively would damage the incentives of the management team).

Note that the adjusted financial return ignores the costs of finding and evaluating the opportunity prior to making the investment decision. This is appropriate for this case study as it is meant as a demonstration of a tactical impact investment analysis. We believe this tactical analysis supports the case for more strategic work on impact investing, including assessing how efficiently good opportunities can be identified. For experienced philanthropic impact evaluators, we expect the marginal cost to assess the impact of investments in their area of expertise will be relatively small. Even if search costs are high now, it may be possible to bring them down over time. Detailed discussion of such strategic issues is out of scope for this case study. ↩︎

DALYs

Hauke’s report provides background on the units used to quantify the impact of Mind Ease. DALYs represent the harm inflicted on people either by a shortened lifespan (where living 1 year less = 1 DALY) or by living a year in less than full health (where living 1 year with blindness could count as roughly 0.2 DALYs, depending on how the conversion is done). “Averting” 1 DALY is roughly equivalent to giving someone one additional year of life in full health. The efficacy of anxiety reduction apps is measured by rating scales such as the GAD-7 (Generalised Anxiety Disorder Assessment) score. ↩︎

Startup Base Rates

Our base rates for the increase in the probability of funding given angel investment (17%) and success given funding (9-27%) are guided by Kerr, Lerner and Schoar (2013) who studied data from two angel investment groups from 2001-2006. They found that:

- Funded ventures had a 76% survival rate (to 2010) and 27% "success" rate (exit or >75 employees). Unfunded ventures found other funding 26% of the time, had a 56% survival rate given funding, and had a 9% "success" rate.

- The angel groups had a positive impact on the business outcomes of their investees. Adjusted for other effects, the survival probability impact of angel investment was 22% and statistically significant, while the funding probability impact was 16% but not significant.

- Among the groups of ventures with medium levels of interest, the probability of securing any funding was between 55% and 71%.

- Among all ventures that were selected to pitch, only 30% ultimately secured any funding (from the angels or other investors).

- Even among the ventures they liked the most (top 2% of all ventures by pitch rating), the chance of a group funding a venture was only 41%.

- It is extremely rare for many angels to indicate interest in a venture. Most pitches generate zero interest. This means that there is little information value in what a given investor rejects (whereas there is information in what they like).
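One simple way to see how the two base rates interact is sketched below. It assumes, for illustration only, that success depends on funding solely through the two conditional rates, so that P(success) = P(funded) × P(success | funded) + (1 − P(funded)) × P(success | unfunded). This is not necessarily how the case study combined the figures, and `success_uplift` is a hypothetical name.

```python
def success_uplift(funding_uplift: float, p_success_funded: float, p_success_unfunded: float) -> float:
    """Increase in a venture's probability of "success" attributable to raising
    its probability of being funded by funding_uplift.

    Under the assumed mixture model, P(success) is linear in P(funded), so a
    change in P(funded) moves P(success) by
    funding_uplift * (p_success_funded - p_success_unfunded).
    """
    return funding_uplift * (p_success_funded - p_success_unfunded)


# Base rates quoted above: +17% funding probability, 27% vs 9% success rates.
uplift = success_uplift(0.17, 0.27, 0.09)
print(f"{uplift:.4f}")  # 0.0306
```

Under these assumptions, an angel investment that raises the funding probability by 17 percentage points raises the success probability by roughly 3 percentage points.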

Impact Returns

Mathematically, we are looking for the impact return such that "Investor Impact" = "Investment Amount" * "Impact Return" / "Future BACO cost-effectiveness." Rearranged as a formula for the Impact Return, this is: "Impact Return" = ("Investor Impact" * "Future BACO cost-effectiveness") / "Investment Amount." Note that "Future BACO cost-effectiveness" has units of $/impact, so the Impact Return is dimensionless, like a financial return.
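The rearranged formula can be sketched directly, as below. The function name and the inputs in the usage example (10,000 DALYs of investor impact, a future BACO cost-effectiveness of $50/DALY, a $1,000,000 investment) are hypothetical values chosen for illustration, not figures from the case study.

```python
def impact_return(investor_impact: float, future_baco_cost_per_impact: float, investment_amount: float) -> float:
    """Impact Return = (Investor Impact * Future BACO cost-effectiveness) / Investment Amount.

    investor_impact: impact attributable to the investor (e.g. DALYs averted).
    future_baco_cost_per_impact: future cost-effectiveness of the best available
        charitable option (BACO), in dollars per unit of impact (e.g. $/DALY).
    investment_amount: dollars invested.
    Returns a dimensionless rate, comparable to a financial return.
    """
    if investment_amount <= 0:
        raise ValueError("investment_amount must be positive")
    return investor_impact * future_baco_cost_per_impact / investment_amount


# Hypothetical inputs, not figures from the case study.
r = impact_return(investor_impact=10_000, future_baco_cost_per_impact=50.0, investment_amount=1_000_000)
print(r)  # 0.5
# Plugging r back into the original form recovers the investor impact.
print(1_000_000 * r / 50.0)  # 10000.0
```

Reading the result: an impact return of 0.5 means the investment's impact is worth 50% of the amount invested, when valued at the cost of buying the same impact from the BACO.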

Taxes

We have ignored taxes in this analysis. We do this for simplicity and also because our view is that they aren’t relevant for donations & investments made with tax-exempt funds (e.g. inside a foundation or DAF). For donors that are subject to taxes, we expect accounting for this would decrease the impact returns by the amount it increases the adjusted financial return, resulting in no net change to the TPR.

First: I like the framework and the fact that you help make impact investing a viable option for the EA space. It might indeed open more opportunities for entering a multitude of markets and funding. Having a streamlined, standard EA-aligned framework for this in the Global Health and Wellbeing space could make the investment process more attractive (smoother), more efficient (options clearer and more comparable), and lead to better decisions (if the background analysis is high-quality).

Some points that came to mind for this specific case:

Might it be useful to add something like “neglectedness of funding”? E.g. in the Mind Ease case, I believe it was moderately likely (depending on the quality of the presented scaling strategy) that another investor would have stepped in to take the lead. There might be value in identifying and helping (1) ventures that have a promising impact prospect but low funding chances, or (2) ventures that might not look like they have a promising impact prospect, but which you know are better than other options in the field because you have some rare specialists in that field. E.g. if Mind Ease had a really promising approach (as evaluated by the specialists) to solving the low retention of users or the lack of sustained effects of mental health interventions.

The evaluation of neglectedness (of the solution/problem) seemed partly confusing to me. It is correct that many people suffering from mental health issues do not receive treatment. This is also true in HICs, where digital solutions are widely available. The real neglected problem seems to be the distribution of these interventions. This has been hard for every company offering such services, and is only possible if you adapt your service to the specifics of different cultures and countries and then also tailor the distribution strategy. This means that currently Mind Ease just does something in HICs that other apps such as Sanvello already offer (perhaps providing no additional value for the users whose benefits are counted toward DALYs averted in this calculation) and does not have a product or market strategy for LMICs, where it would be neglected. Convincing providers like Sanvello to offer their services in LMICs, and then specializing in tailoring the product for people in a specific large country (e.g. Nigeria) as well as nailing distribution there, might be much more impactful. E.g. the UK-based charity Overcome does something along these lines.

Having said this, maybe the impact evaluation should be done by an expert in the field: in this case, someone who knows the current state of research, practice, and industry and has a background in the field (here: mental health interventions).

Would it also be useful to add the “people’s time resources” needed to the equation? E.g. it makes a difference whether 10 or 30 EAs spend their time working on this solution, because they could also be making an impact elsewhere.

Hi Inga, thanks for commenting!

Thanks! I personally think that impact investing is an incredibly promising space for EA.

That's already included under "Investor Impact"! See "funding probability." If I recall correctly, the 15% indicates that there's a ~1/6 chance this investment was counterfactually impactful, and that draws from the much lengthier documents written by TPP that this writeup is based on.

Did you read Hauke's analysis, or just our brief summary of it? Here's a direct link to the 3.5 pages covering neglectedness in Hauke's report. The extended TPP report covers several distribution strategies, which I personally believe to be very compelling and differentiated from existing apps, and some of them may resemble strategies you proposed. I do not recall if the full report is public, but Jon Harris would know the latest status.

I think that's an interesting idea! I haven't seen many examples of that in EA analyses, but let me know if you find any on the EA Forum! I can think of a few difficulties with doing that, and I'm not sure how people would overcome them. These include establishing a counterfactual personal impact benchmark (what is the baseline impact of the EA who would have been hired?) and modeling the additive impact of additional team members (does going from 10 to 30 team members triple the impact? Under what impact scenarios would that occur?). Also, not all team members would need to be "EAs" (what even counts as EA?), and I think that can also be hard to forecast and is somewhat dependent on the team and how things end up working out (whether people end up joining from within or outside EA).

Thank you, Akash, for sharing these papers. They are fascinating! Here is another paper that I sometimes cite when referring to the "retention" problem. It is a systematic analysis of in-field data, estimating that 90% of users who try unguided mental health apps drop out after 7 days (Baumel & Edan, 2019).

Thanks for sharing this reference, Inga!

These are great points! FYI, jh is Jonathan Harris who runs TPP.

I'll preface my response by saying that I'm not an expert in this area; Will and I mostly focused on the impact investing side of things, and Hauke or someone on the Mind Ease team could likely provide a better response.

I think that Headspace and Calm may not be the right reference points since they're not designed to tackle anxiety and depression, whereas Sanvello (formerly Pacifica) is. Headspace and Calm strike me more as "mindfulness apps" than "mental health apps." For instance, it doesn't look like Headspace and Calm feature any CBT exercises, whereas Sanvello does. I have paid access to Calm via my employer, and while I haven't used it much, it looks like all it has are guided meditations. Reviews of Headspace and Calm support my initial impression and mention that they just have guided meditation and music. I expect that evidence-based practices specifically designed to target certain mental health conditions are significantly more efficacious than guided mindfulness meditations.

This distinction may explain why this Psychology Today review from 2019 mentions Sanvello as "the most popular" mental health app, ignoring Headspace and Calm. Regarding competition, it mentions that Sanvello has 2.6 million registered users, which is fewer than Headspace and Calm but still seems significant. Other apps, including ones I've never heard of, also seem to have reached decent user counts. For example, Moodpath reached 1 million downloads in 2019. Regardless of whether Headspace and Calm are competitors, Mind Ease would still need to compete against Sanvello and other apps. Unlike industries with strong network effects, such as social media, I don't think the major mindfulness/mental health apps have enough of a moat to prevent other competitors from emerging.

My understanding is that Headspace and Calm offer the vast majority of their content behind a paywall. The Headspace review I linked to suggests free users get access to one-third of a "Basics" (introductory meditation) course before they need to pay.

What would count as releasing an app for free "working"? It's commonly known that free apps get many more downloads and make up the vast majority of apps on the iOS and Android app stores, so I would expect this to be a very effective user acquisition strategy (but perhaps not an effective monetization strategy, hence the social impact angle).

Sanvello (Pacifica) was likely selected because it is the most efficacious competitor in the same space. I'm not sure about how Hauke utilized the study findings in his report and his reasoning for doing so, so I'll have to defer to him on this. I'll let him know about your question!

It's worth pointing out that the report uses a very conservative counterfactual user impact estimate that is much closer to Foster's estimate than Hauke's estimate due to "principles of robust decision making under uncertainty."

It is not! TPP has not publicly released information containing private data from Mind Ease, which includes the business analysis and Foster's report. That's why the section on Mind Ease user data is not included in Hauke's report.

There isn't too much information out there on startup success rates, and I'm afraid I'm not familiar with success data specifically about mental health apps!

Just to add that in the analysis we only assumed Mind Ease has an impact on 'subscribers'. This means paying users in high-income countries (and active/committed users in low/middle-income countries). We came across this pricing analysis while preparing our report. It has very little to do with impact, but it does highlight a) Brendon's point that Headspace/Calm are seen as meditation apps, and b) that anxiety reduction appears to be among the highest willingness-to-pay (i.e. highest value to the customer) segments into which Headspace/Calm could expand (e.g. by relabeling their meditations as useful for anxiety). The pricing analysis doesn't even mention depression (which Mind Ease now addresses following the acquisition of Uplift), perhaps because they realize it is a more severe mental health condition.

Thanks for this interesting analysis! Do you have a link to Foster's analysis of MindEase's impact?

How do you think the research on MindEase's impact compares to that of GiveWell's top charities? Based on your description of Hillebrandt's analysis, for example, it seems less strong than e.g. the several randomized controlled trials supporting bed net distribution. Do you think discounting based on this could substantially affect the cost-effectiveness? (Given that Foster's estimate of impact is much lower and is weighted more heavily in the overall cost-effectiveness estimate, I would be interested to know whether it has a stronger evidence base.)

Hi Robert, Foster's analysis isn't currently publicly available, but more details from it are available in TPP's full report on Mind Ease. To my knowledge, Foster's lower efficacy estimate did not result from stronger evidence, but from skepticism about the longer-term persistence of effects from anxiety reduction methods, as well as from analyzing the Mind Ease app as it is in the present, without incorporating the potential emergence of stronger evidence of the app's efficacy or future work on the app. As one would expect, Mind Ease plans to provide even stronger efficacy data and further develop its app over time.

As mentioned in our writeup, TPP used the lower estimate "because if Mind Ease still looks attractive in this "worst case," then it would definitely look attractive with weights that are less tilted towards Foster’s estimate," rather than skewing based on the strength of a particular estimate. I think it's quite possible that Mind Ease's expected impact is considerably higher than the example conservative estimate shared in this writeup. Using different framings, such as the Happier Lives Institute's findings regarding the cost-effectiveness of mental health interventions, can also result in much higher estimates of Mind Ease's expected impact compared to GiveWell top charities.

I personally haven't spent much time looking over GiveWell's evaluations, but Hauke's full report "avoid[s] looking at individual studies and focus[es] mostly on meta-analyses and systematic reviews of randomized controlled trials." I'd expect that someone's assessment of Mind Ease's impact will generally follow their opinion of how cost-effective digital mental health interventions are compared to getting no treatment (Hauke's report mentions that most people suffering from depression and anxiety do not receive timely treatment or any treatment at all, particularly in developing countries).

Just to add, for the record, that we released most of Hauke's work because it was a meta-analysis that we hope contributes to the public good. We haven't released either Hauke's or Derek's analyses of Mind Ease's proprietary data. Though, of course, their estimates and conclusions based on their analyses are discussed at a high level in the case study.

Hi Akash! Yep, I think Mind Ease's ability to attract users is certainly an important factor! I think of it as Mind Ease's counterfactual ability to get users relative to other apps (for example, since Mind Ease is impact-driven, they could offer the app for free to people living in low-income countries, which profit-driven competitors are not incentivized to do) as well as Mind Ease's counterfactual impact on users compared to a substitute anxiety reduction app (for example, data in the cost-effectiveness analysis section of Hauke's report pointing to Mind Ease potentially having a stronger effect on GAD-7 anxiety scores compared to Pacifica, a popular alternative, as well as the general rigor of their approach).

The active user estimates were modeled in TPP's full report on Mind Ease. We provide a high-level overview of the data that was considered in the full report, which "included Mind Ease’s background and plans (including offering a free or discounted version of the app to low/middle income countries), historical downloads and active users, competitors, and financial details." The report leveraged Lionheart's business analysis, and based on my knowledge of information such as Mind Ease's historical user figures and strategic options like offering the app for free to certain populations, I think the report's user growth projections are reasonable (and those projections are only for the 25% "success" case).

As a reference point for the 25% chance of success, it's possible that many people's views on startup success are shaped by headlines like "90% of startups fail." However, the criteria for which startups are included in the numerator and denominator of such success calculations can greatly affect the final number. Funded startups have higher success rates than one might expect. As our footnote on startup base rates (which you may find interesting) notes, "Funded ventures had a 76% survival rate (to 2010) and 27% "success" rate (exit or >75 employees)." The 25% success rate aligns very closely with the 27% success rate of funded ventures reaching over 75 employees or getting acquired in the study we cited. The study findings align with other research/reports I am familiar with.

To add two additional points to Brendon's comment.

The 1,000,000 active users figure is cumulative over the 8 years. So, for example, it would be sufficient for Mind Ease to attract 125,000 users each year. Still very non-trivial, but not quite as high a bar as 1,000,000 MAU.

We were happy with the 25% chance of success primarily because of the base rates Brendon mentioned. In addition, this can include the possibility that Mind Ease isn't commercially viable for reasons unconnected to its efficacy, in which case the IP could be spun out into a non-profit. We didn't put much weight on this, but it does seem like a possibility. I'm mentioning it mostly because it's an interesting consideration with impact investing that could be even more important in some cases.

On reading just the summary, the immediate consideration I had was that the EMH would imply that in the counterfactual where I don't invest in Mind Ease, someone else will, and if I do invest in Mind Ease, someone else will not. After reading the post, it looks like you have two important points here against this—first, early-stage venture markets are not necessarily as subject to the EMH, and second, it's different in this case because EA-aligned investors would be willing to take a lower financial return than they could get with the same risk otherwise in order to do good. Do you agree that impact investing in the broader financial market into established companies has very little counterfactual impact, or is there something I'm missing there? I'm interested in further research on this concept, and I'm not sure how much EA-aligned for-profits are already working on this.

Thanks for commenting! Our point is not only that VC markets are inefficient and thus the EMH may not apply, but also that the EMH is only a statement on the pricing of assets, and does not in fact imply that all available assets will be funded. Thus, it is possible for investments that offer market-rate returns to go unfunded and for the EMH to still hold.

Interestingly, in VC, investors and founders can actually negotiate the pricing for an investment round. Thus, it may be possible for most/all startups to produce market-rate returns if the valuation is adjusted (for example, founders getting fewer dollars per share than they are asking investors for in a priced round), but since many startups don't even get an investment offer, clearly not all market-rate investments are being funded.

Even if this is not the case, altruistic investors/impact investors are willing to make concessionary investments in which expected returns are lower than the market rate. For example, impact investors give loans to nonprofits or disadvantaged communities at interest rates lower than the market rate, gaining both impact returns and financial returns, whereas for-profit investors may pass because they are only looking at financial returns.

In my opinion, it seems like the consensus is that primary market investments (like VC investing and loans directly to people and organizations) are much higher impact than secondary market investments, and I agree with this consensus. I think there may be the chance that creative secondary market strategies, like forms of shareholder advocacy, may have a counterfactual impact (the exact degree of which is unclear), but I haven't looked into it much yet.

"I'm interested in further research on this concept, and I'm not sure how much EA-aligned for-profits are already working on this."

Which concept are you referring to?

[Edited on 19 Nov 2021: I removed links to my models and report, as I was asked to do so.]

Just to clarify, our (Derek Foster's/Rethink Priorities') estimated Effect Size of ~0.01–0.02 DALYs averted per paying user assumes a counterfactual of no treatment for anxiety. It is misleading to estimate total DALYs averted without taking into account the proportion of users who would have sought other treatment, such as a different app, and the relative effectiveness of that treatment.

In our Main Model, these inputs are named "Relative impact of Alternative App" and "Proportion of users who would have used Alternative App". The former is by default set at 1, because the other leading apps seem(ed) likely to be at least as effective as Mind Ease, though we didn't look at them in depth independently of Hauke. The second defaults to 0; I suppose this was to get an upper bound of effectiveness, and because of the absence of relevant data, though I don't recall my thought process at the time. (If it's set to 1, the counterfactual impact is of course 0.)
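The Main Model itself is not reproduced here, so the following is only a sketch of one natural way the two named inputs could combine, assumed for illustration: users who would have used the alternative app contribute only the difference between the two apps' effects, and everyone else contributes the full effect versus no treatment. The function and parameter names are hypothetical; the 0.02 figure is the upper end of the effect size quoted above.

```python
def counterfactual_dalys_per_user(effect_size: float, relative_impact_alt_app: float, proportion_using_alt_app: float) -> float:
    """DALYs averted per paying user relative to what would have happened anyway.

    effect_size: DALYs averted per paying user versus no treatment.
    relative_impact_alt_app: effect of the alternative app as a fraction of this app's
        (values above 1 mean the alternative is better and can make the result negative).
    proportion_using_alt_app: share of users who would otherwise have used the alternative.
    """
    if not 0.0 <= proportion_using_alt_app <= 1.0:
        raise ValueError("proportion_using_alt_app must be between 0 and 1")
    return effect_size * (1 - proportion_using_alt_app * relative_impact_alt_app)


# Defaults described above: relative impact 1, proportion 0 -> the full no-treatment effect.
print(counterfactual_dalys_per_user(0.02, 1.0, 0.0))  # 0.02
# If every user would otherwise have used an equally effective app, the impact is zero.
print(counterfactual_dalys_per_user(0.02, 1.0, 1.0))  # 0.0
# Half of users substituting an equally effective app halves the per-user impact.
print(counterfactual_dalys_per_user(0.02, 1.0, 0.5))  # 0.01
```

Under this assumed rule, the default settings give an upper bound, and any realistic substitution rate pulls the per-user estimate down proportionally.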

Our summary, copied in a previous comment, also stresses that the estimate is per paying user. I don't remember exactly why, but our report says:

As far as I can tell (correct me if I'm wrong), your "Robust, uncertainty-adjusted DALYs averted per user" figure is essentially my theoretical upper-bound estimate with no adjustments for realistic counterfactuals. It seems likely (though I have no evidence as such) that:

So 0.02 DALYs averted per user seems to me like an extremely optimistic average effect size, based on the information we had around the middle of last year.

Hi Derek, hope you are doing well. Thank you for sharing your views on this analysis that you completed while you were at Rethink Priorities.

The difference between your estimates and Hauke's certainly made our work more interesting.

A few points that may be of general interest:

From an impartial perspective, I think it is also necessary to account for the customer's wallet, not only the investor's. After all, the only reason the investor gets their money back is that customers are paying for the product.

In other words, one could add a row "Financial loss customer" to the decision matrix. For the "Pass & Give Now" column it would be 0% (there is no customer who pays the investor back). For all other columns it would be 100%, I think. That is, once the customer's wallet is taken into account, the best world would be one where either the investor did not invest but donated to the BACO, or the customer did not buy the App but donated to the BACO instead.

So, all things considered, solo and lead impact investing are good, but only 7% and 30% as good as donating to the BACO, respectively. Or am I getting this wrong?

Thanks for this comment and question, Paul.

It's absolutely true that customers' wallets are potentially worth considering. An early reviewer of our analysis also made a similar point. In the end, we are fairly confident this turns out not to be a key consideration. The key reason is that mental health is generally found to be a service for which people's willingness to pay is far below its actual value (to them). Especially for likely paying customer markets such as high-income country iPhone users, the subscription costs were judged to be trivial compared to the changes in their mental health. This is why, if I remember correctly, this consideration didn't feature more prominently in Hauke's report (on the potential impacts on customers). Since it didn't survive there, it also didn't make it into the investment report.

I'm not quite sure I understand the point about the customer donating to the BACO instead. That could definitely be a good thing. But it would mean an average customer with anxiety choosing to donate to a highly effective charity (presumably instead of buying the App). This seems unlikely. More importantly, it doesn't seem like the investor can influence it?...

In short, since the expected customers are reasonably well off non-EAs, concerns about customer wallet or donations didn't come into play.

Thanks for the response. My issue was just that the money flow from the customer to the investor was accounted for as positive for the investor, but not negative for the customer. I see the argument that customers are reasonably well-off non-EAs whereas the investor is an EA. I am not sure it can be used to justify the asymmetry in the accounting.

Perhaps it would make sense to model an EA investor as only 10% altruistic and 90% selfish (somewhat in line with the 10% GW pledge)? The conclusion would then be that investing does good, but donating does more good.

I'm still not sure I understand your point(s). The payment of the customers was accounted for as a negligible (negative) contribution to the net impact per customer.

To put it another way: think of each highly anxious customer as getting $100 in benefits from the App plus 0.02 DALYs averted (for themselves) on top of this. The additional DALYs are discounted for the possibility that they could use another App.

Say the App fee is $100. This means that, to unlock the additional DALYs, the users as a group will pay $400 million over 8 years.

The investor puts in their $1 million to increase the chances that customers have the option to spend the $400m. In return, they expect a percentage of the $400m (after operating costs, other investors' shares, and the founders' shares). But they are also having a counterfactual effect on the chance that customers have and use this option.

This is basically a scaled-up version of a simple story in which the investor gives a girl called Alice a loan so she can get some therapy. The investor would still hope Alice repays them with interest. But they also believe that without their help to get started, she would have been less likely to get help for herself. Should they have just paid for her therapy? Well, if she is a well-off, Western iPhone user who comfortably buys lattes every day, then that's surely ineffective altruism. Unless she happens to be the investor's daughter or something, so it makes sense for other reasons.

I think the message of this post isn't that compatible with general claims like "investing is doing good, but donating is doing more good". The message of the post is that specific impact investments can pass a high effectiveness bar (i.e. $50 / DALY). If the investor thinks most of their donation opportunities are around $50/DALY, then they should see Mind Ease as a nice way to add to their impact.

If their bar is $5/DALY (i.e. they see much more effective donation opportunities) then Mind Ease will be less attractive. It might not justify the cost of evaluating it and monitoring it. But for EAs who are investment experts the costs will be lower. So this is all less an exhortation for non-investor EAs to learn about investing, and more a way for investor EAs to add to their impact.

Overall, the point of the post is a meta-level argument that we can compare donation and investment funding opportunities in this way. But the results will vary from case to case.

I agree with your statement that "The message of the post is that specific impact investments can pass a high effectiveness bar"

But when you say >>I think the message of this post isn't that compatible with general claims like "investing is doing good, but donating is doing more good".<< I think I must have been misled by the decision matrix. To me it suggested a comparison between investing and donating, while being unable to distinguish between the columns "Pass & Invest to Give", "Pass & Give now" (and a hypothetical column "Pass & Invest to keep the money for yourself", with presumably all-zero rows), which would all result in zero total portfolio return. (Differences between these three options would become visible if the consumer's wallet were included and the "Pass & Invest to Give" column created impact through giving, as the "Pass & Give now" column does.)

Anyway, I now understand that this comparison between investing and donating was never the message of the post, so all good.

[Edited on 19 Nov 2021: I was asked to remove the links.]

For those who are interested, here is the write-up of my per-user impact estimate (which was based in part on statistical analyses by David Moss): [removed]

The Main Model in Guesstimate is here: [removed]

The Effect Size model, which feeds into the Main Model, is here: [removed]

I was asked to compare it to GiveDirectly donations, so results are expressed as such. Here is the top-level summary:

Note that this was done around June 2020 so there may be better information on MindEase's effectiveness by now. Also, I think the Happier Lives Institute has since done a more thorough analysis of the wellbeing impact of GiveDirectly, which could potentially be used to update the estimate.

This is a great analysis!

I'm working on an EA-aligned public benefit corporation (www.dozy.health) with ambitions similar to Mind Ease's, but for insomnia treatment. My funding up to this point has predominantly come from EA-driven investors, implicitly based on a model like this. Having a more formalized analysis for the approach I'm taking will be helpful. :)

Thanks Sam! Great to hear this could be a valuable framework through which to view Dozy's impact from a philanthropic and investment perspective.