Effective Ventures (EV) is a federation of organisations and projects working to have a large positive impact in the world. EV was previously known as the Centre for Effective Altruism, but the board decided to change the name to avoid confusion with the organisation within EV that goes by the same name.

EV Operations (EV Ops) provides operational support and infrastructure that allows effective organisations to thrive.

Summary

EV Ops is a passionate and driven group of operations specialists who want to use our skills to do the most good in the world.

You can read more about us at https://ev.org/ops.

What does EV Ops look like?

EV Ops began as a two-person operations team at CEA. We soon began providing operational support for 80,000 Hours, EA Funds, the Forethought Foundation, and Giving What We Can. And eventually, we started supporting newer, smaller projects alongside these, too.

As the team expanded and the scope of these efforts increased, it made less sense to remain a part of CEA. So at the end of last year, we spun out as a relatively independent organisation, known variously as “Ops”, “the Operations Team”, and “the CEA Operations team”.

For the last nine months or so, we’ve been focused on expanding our capacity so that we can support even more high-impact organisations, including GovAI, Longview Philanthropy, Asterisk, and Non-trivial. We now think that we have a comparative advantage in supporting and growing high-impact projects, and are happy that this new name, “Effective Ventures Operations” or “EV Ops”, accords with this.

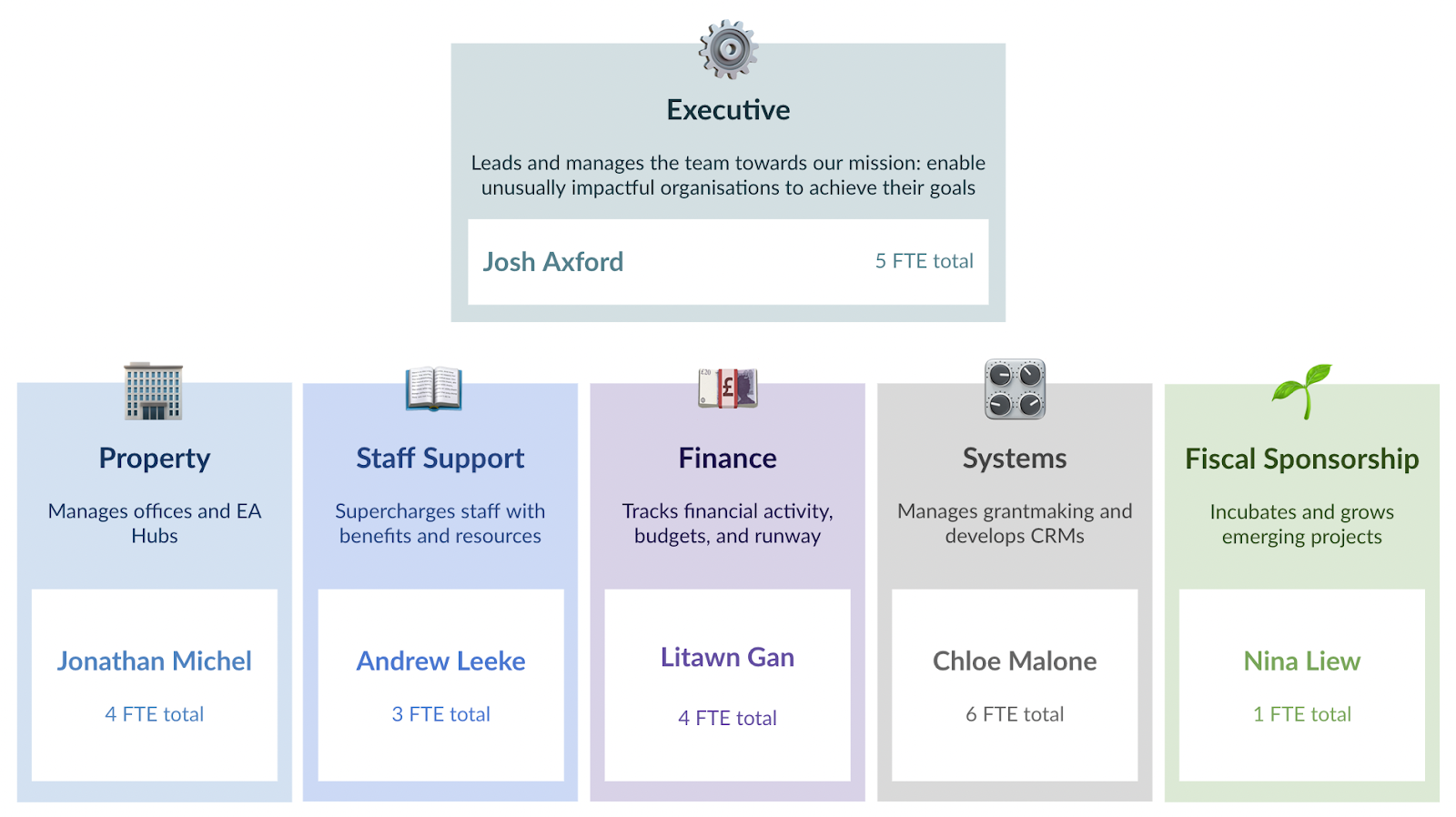

EV Ops is arranged into the following six teams:

The organisations EV Ops supports

We now support and fiscally sponsor several organisations (learn more on our website). Alongside these we support a handful of Special Projects: smaller, 1-2 person, early-stage projects which may grow into independent organisations of their own.

We’re keen to support a wide range of projects looking to do good in the world, although we’re close to current capacity. To see if we could help your project grow and develop, visit https://ev.org/ops/about or complete the expression of interest form.

Get involved

We’re currently hiring for the following positions:

- Project Manager for Oxford EA hub

- Senior Bookkeeper / Accountant

- Operations Associate

- Executive Assistant for the Property team

- Operations Associate - Salesforce Admin

- Finance Associate

If you’re interested in joining our team, visit https://ev.org/ops/careers.

If you have any questions about EV or EV Ops, just drop a comment below. Thanks for reading!

Can someone clarify whether I'm interpreting this paragraph correctly?

I think what this means is that the CEA board is drawing a distinction between the CEA legal entity / umbrella organization (which is becoming EV) and the public-facing CEA brand (which is staying CEA). AFAIK this change wasn't announced anywhere separately, only in passing at the beginning of this post which sounds like it's mostly intended to be about something else?

(As a minor point of feedback on why I was confused: the first sentence of the paragraph makes it sound like EV is a new organization; then the first half of the second sentence makes it sound like EV is a full rebrand of CEA; and only at the end of the paragraph does it make clear that there is intended to be a sharp distinction between CEA-the-legal-entity and CEA-the-project, which I wasn't previously aware of.)

Yep, your interpretation is correct. We didn't want to make a big deal about this rebrand because for most people the associations they have with "CEA" are for the organization which is still called CEA. (But over the years, and especially as the legal entity has grown and taken on more projects, we've noticed a number of times where the ambiguity between the two has been somewhat frustrating.) Sorry for the confusion!

What are your criteria for deciding which organizations to support?

I’m particularly interested in how you think about cause prioritization in this process. The list of currently supported organizations looks roughly evenly split between organizations that are explicitly longtermist (e.g. Forethought Foundation and Longview Philanthropy) and organizations that (like the EA community as a whole) support both longtermist and neartermist work (e.g. GWWC and EA Funds). I don’t see any that focus solely on neartermist work. Do you expect the future mix of supported organizations to look similar to the current one? Would an organization working on animal welfare be as likely to receive support as one working on biosecurity if other factors like strength and size of team were the same?

Also, I’ve mentioned this elsewhere, but I really hope this change leads to a major reassessment of how governance is structured for these organizations.

Minor criticism, but having the same initials as expected value might cause some confusion, since people sometimes refer to expected value as EV.

Obviously Effective Ventures aren’t alone in this - CEA could mean both Centre for Effective Altruism and Cost Effectiveness Analysis.

And I’m not sure how feasible it is for new orgs to avoid confusion due to other abbreviations and acronyms used in EA.

I once met an EA (effective altruist) who worked at EA (Electronic Arts) and I asked to meet his EA (executive assistant) and it turned out they lived in EA (East Anglia) and were studying EA (enterprise architecture) but considering adding in EA (environmental assessment) to make it a double major, the majors cost $40k ea (each) 😉

Were they wearing an Emporio Armani t-shirt, by any chance?

They're pretty different kinds of things - an abstract concept vs an organisation - so I don't think it will cause confusion.

I had the same thought only with Tyler Cowen's Emergent Ventures, which is an organisation that is even fairly closely associated with EA (e.g. I personally know two EAs who are among their fellows).

I know a few EAs amongst their fellows as well but I have never heard Emergent Ventures referred to as EV in practice, so it seems fine to me.

I strongly agree with this comment, except that I don't think this issue is minor.

IMO, this issue is related to a very troubling phenomenon that EA has seemingly been undergoing over the past few years: people in EA sometimes do not think much about their EV, and instead strive to have as much impact as possible. "Impact" is a sign-neutral term ("COVID-19 had a large impact on international travel"). It's very concerning that many people in EA now use it interchangeably with "EV", as if EA interventions in anthropogenic x-risk domains cannot possibly be harmful. One can call this phenomenon "sign neglect".

Having a major EA organization named "EV" (as an acronym for something that is not "expected value") may exacerbate this problem by further decreasing the usage of the term "EV", and making people use sign-neutral language instead.

I think when people talk about impact, it's implicit that they mean positive impact. I haven't seen anything that makes me think that someone in EA doesn't care about the sign of their impact, although I'd certainly be interested in any evidence of that.

It's not about people not caring about the sign of their impact (~everyone in EA cares); it's about a tendency to behave in a way that is aligned with maximizing impact (rather than EV).

Consider this interview with one of the largest funders in EA (the following is based on the transcript from the linked page):

.

Notably, the FTX Foundation's regranting program "gave over 100 people access to discretionary budget" (and I'm not aware of them using a reasonable mechanism to resolve the obvious unilateralist's curse problem). One of the resulting grants was a $215,000 grant for creating an impact market. They wrote:

A naive impact market is a mechanism that incentivizes people to carry out risky projects—that might turn out to be beneficial—while regarding potential harmful outcomes as if they were neutral. (The certificates of a project that ended up being harmful are worth as much as the certificates of a project that ended up being neutral, namely nothing.)
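To make the asymmetry concrete, here is a minimal sketch with made-up numbers. The project, its probabilities, and the function names are all hypothetical and only illustrate the reasoning above: if harmful outcomes are treated as worth zero, a project can look attractive to certificate holders even when its expected value is negative.

```python
def expected_value(outcomes):
    """Plain expected value: sum of probability * value over all outcomes."""
    return sum(p * v for p, v in outcomes)


def naive_market_payoff(outcomes):
    """Expected certificate payoff when harmful outcomes are floored at zero.

    This models the assumption that certificates of a harmful project are
    worth the same as certificates of a neutral one, namely nothing.
    """
    return sum(p * max(v, 0.0) for p, v in outcomes)


# Hypothetical risky project: 50% chance of +10, 50% chance of -30.
risky_project = [(0.5, 10.0), (0.5, -30.0)]

ev = expected_value(risky_project)           # -10.0: net-negative in expectation
payoff = naive_market_payoff(risky_project)  # 5.0: still looks worth funding

print(f"expected value: {ev}, naive market payoff: {payoff}")
```

Under these assumed numbers, someone optimizing for the certificate payoff would carry out the project, while someone optimizing for expected value would not.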

Thanks for the reply!

If I understand correctly, you think that people in EA do care about the sign of their impact, but that in practice their actions don't align with this and they might end up having a large impact of unknown sign?

That's certainly a reasonable view to hold, but given that you seem to agree that people are trying to have a positive impact, I don't see how using phrases like "expected value" or "positive impact" instead of just "impact" would help.

In your example, it seems that SBF is talking about quickly making grants that have positive expected value, and uses the phrase "expected value" three times.

Reasonably determining whether an anthropogenic x-risk related intervention is net-positive or net-negative is often much more difficult[1] than identifying the intervention as potentially high-impact. With less than 2 minutes to think, one can usually do the latter but not the former. People in EA can easily be unconsciously optimizing for impact (which tends to be much easier and aligned with maximizing status & power) while believing they're optimizing for EV. Using the term "impact" to mean "EV" can exacerbate this problem.

[1] Due to an abundance of crucial considerations.

Does EV have any policies around term-limits for board members? This is a fairly common practice for nonprofits and I’m curious about how EV thinks about the pros and cons, and more generally how EV thinks about board composition and responsibilities given the outsize role the board has in community governance.

Does EV have any current employees outside of EV Ops?

Technically speaking all employees of the constituent organizations are "employees of EV" (for one of the legal entities that's part of EV).

Thanks, yeah. I guess I'm asking if there are other people or functions of EV outside of EV Ops or the constituent orgs, and outside the board.

Ah, got you. There are a few people employed in small projects; things with a similar autonomous status to the orgs, but not yet at a scale where it makes sense for them to be regarded as "new orgs".