Over the last couple of months I’ve noticed myself flipping back and forth between two mindsets: “I should try to be useful soon” and “I should build skills so that I am more useful in 5+ years.” I’ve compiled arguments for each view in this post. Note that a lot of this is specific to undergrads who want to reduce AI risk.

So, which is the better heuristic? Trying to be useful soon or useful later? I don't think there’s a one-size-fits-all answer to that question. The arguments below apply to differing extents depending on the specific career path and person, so I think this list is mostly useful as a reference when comparing careers.

To clarify, when I say someone is following the “useful soon” heuristic, I mean they operate similarly to how they would if they were going to retire soon. This is not the same as behaving as though timelines are short. For example, undergraduate field building makes a lot of sense under a “useful soon” mindset but less so under short timelines, since new recruits typically take years to become productive.

To what extent is [being useful soon] in tension with [being useful later]?

Can you have your cake and eat it too? Certainly not always. Here are career paths where this tradeoff clearly exists:

- Useful soon: being a generalist for the Center for AI Safety (CAIS). CAIS is one of the projects I am most excited about, but the way I can be most helpful to them involves helping with ops, sending emails, etc.; I would be more impactful in 5+ years if I worked towards a more specific niche.

- Useful soon: university field building. If I spend most of my time organizing fellowship programs, running courses, etc., I won’t learn as quickly as I would if I focused on my own growth.

- Useful later: whole brain emulation. If I tried to position myself to launch a WBE megaproject in 10 years, I would be approximately useless in the meantime.

- Useful later: earning-to-give entrepreneurship. Startups that become unicorns take about 7 years on average to reach unicorn status.

Career paths where this tradeoff isn’t as obviously present:

- AI safety technical research: In the near term, technical researchers can produce useful work and mentor people. They also build skills that are useful in the long term.

- AI governance research: similar points apply as in technical research, though I can see a stronger case for the discount rate mattering here. From what I can tell, there are two main theories of change in AI governance: (1) Acquire influence, skills, and knowledge so you can help actors make decisions when the stakes are high. (2) Forecast AI development to inform decisions now. Optimizing for 1 could look pretty different from optimizing for 2.

Arguments for trying to be useful soon

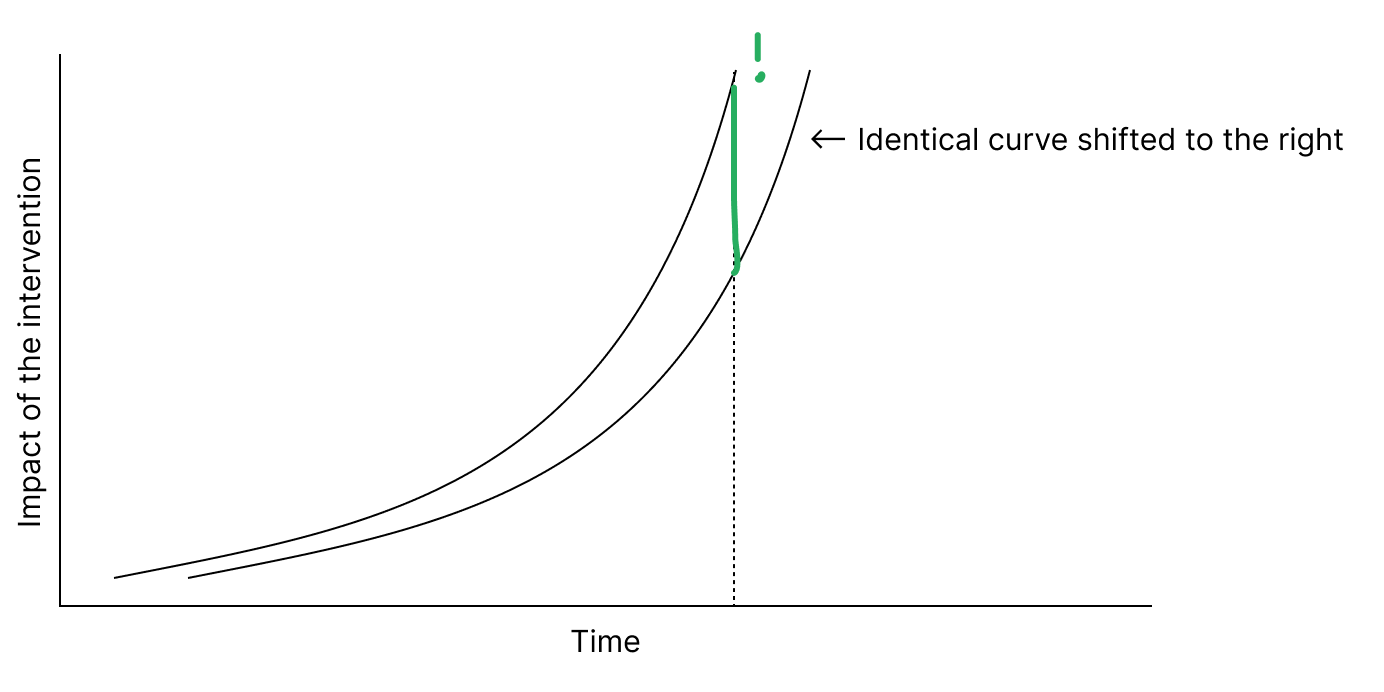

Impact often compounds. The impact of an intervention often grows substantially over time, implying high returns to earliness. Examples:

- John gets involved in AI risk through a student group. The impact of this intervention increases as John becomes more skilled.

- Amy publishes a research paper that establishes a new AI safety subproblem. The impact of this intervention grows as more papers are written about the subproblem.

Crowdedness increases over time. The number of AI safety researchers has been growing 28% per year since 2000. The more people start paying attention to AI risk and trying to reduce it, the less impactful your individual actions are likely to be. This is especially true if newcomers can rapidly catch up to or surpass your skill level. For example, philosophers with years of research experience may rapidly catch up to and surpass undergrads like me in conceptual research.
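To get a feel for what 28% annual growth implies, here is a rough Python sketch. It assumes, hypothetically, that the growth rate stays constant going forward and that an individual's share of the field scales as 1 / (field size). Both are simplifying assumptions for illustration, not claims made in the post, and the function names are made up for this example.

```python
import math


def field_size_multiplier(annual_growth: float, years: float) -> float:
    """Factor by which the field grows after `years` at a constant annual rate."""
    return (1 + annual_growth) ** years


def doubling_time(annual_growth: float) -> float:
    """Years for the field to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


def relative_share(annual_growth: float, years: float) -> float:
    """Toy crowding model (an assumption): your share of the field relative to today."""
    return 1 / field_size_multiplier(annual_growth, years)


if __name__ == "__main__":
    rate = 0.28  # growth rate cited in the post
    print(f"doubling time: {doubling_time(rate):.1f} years")  # prints "doubling time: 2.8 years"
    for years in (1, 5, 10):
        size = field_size_multiplier(rate, years)
        share = relative_share(rate, years)
        print(f"{years:>2} years: field x{size:.2f}, your share x{share:.2f}")
```

Under this toy model the field doubles roughly every 2.8 years, so five more years at the same rate would leave an individual with under a third of their current share.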

It’s hard to predict what will be useful >5 years from now. You might spend 8 years launching startups, but by the time you make it big, Eric Schmidt and Jeff Bezos might be big donors to AI safety research, making your $100 million a drop in the bucket.

Iteration is better than planning: using current usefulness as a proxy for future usefulness. Making incremental adjustments to increase your current usefulness is much easier than planning out how you will be super useful in the future. “Evolution is cleverer than you are.”

More undergrads will probably choose “useful later” paths than is optimal due to risk aversion. Social momentum pushes in the direction of getting a PhD, finishing school, etc. Also, I’m more risk-tolerant than most other undergraduates involved in AI risk, so I expect more than the optimal number of people to pursue these “useful later” paths.

Short timelines. Capability progress is moving quickly, and many well-informed and intelligent people have timelines as short as 5 years (e.g., Daniel Kokotajlo and Connor Leahy).

Arguments for trying to be useful later

Skillsets that will be more useful in the future are systematically neglected. Research engineers are in demand right now, and as a result, everyone wants to be one. There are probably high-value skill sets that are not on people’s radar because they aren’t super high-value yet. Some speculative examples:

- Public relations: AI and AI risk are getting more attention from the public. What can thought leaders do to shape the narrative in a beneficial way? Does anyone have the appropriate background to answer these questions?

- Physical engineering / neuroscience: imitating human cognition is arguably safer than other routes to PASTA (a Process for Automating Scientific and Technological Advancement). Maybe imitating human cognition could be achieved with next-token prediction on Neuralink signals, or maybe it requires scanning whole brains. Even if AGI is developed before these methods are feasible, actors might be willing to wait for a safer alternative before kicking off an intelligence explosion.

- Custom AI hardware: expertise in this area could be useful in governance (what if we could put GPS modules that were impossible to disable in every AI chip?). I can also imagine applications in technical AI safety research. For example, alternative hardware might allow sparse models to be competitive, which may in turn increase the viability of mechanistic interpretability by reducing polysemanticity.

This conflicts with the “prediction is hard” point. The question is not whether prediction is easy or hard, but whether people are generally doing too little of it when selecting career paths (I think there's plenty of alpha to be gained from prescience at the moment).

Tail career outcomes are systematically neglected and require years of building career capital. Go-big-or-go-home career paths might be systematically neglected due to risk aversion. These paths also tend to require a lot of time to pay off. Examples include:

- Starting a billion dollar company

- Becoming a member of Congress

- Launching a whole brain emulation megaproject

This point conflicts with “more undergrads will probably choose ‘useful later’ paths than is optimal.” It really depends on the career. I expect lots of AI risk-pilled students to get ML PhDs, but very few to get neuroscience PhDs in pursuit of whole brain emulation.

If most of your impact is in the future, trading near-term impact for future impact is generally a good idea.

This is a counterargument to the claim that, even if you are likely to be much more impactful in the future, you might as well supplement that with near-term impact. I think either you in fact expect your near-term impact (e.g., field building) to be significant, or it’s generally better to trade near-term impact for longer-term impact.

Let’s say you can increase your near-term impact by 5x by helping to facilitate an AI safety course, or you can increase your expected future impact by 2x by using that time to get better grades and get your name on (potentially useless) papers. If your near-term impact is N and your future impact is F, the first option yields 5N + F and the second yields N + 2F, so you should choose the second option whenever you expect your future work to be more than 4x as important as your university organizing. The correct model probably isn’t strictly multiplicative, but I think it’s intuitively clear that changing the variables that affect the large-EV parts of the equation will generally make a larger difference than changing the variables that affect the smaller-EV parts.
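The comparison in the course-versus-grades example can be checked with a few lines of Python. This is a minimal sketch that assumes a simple additive model (total impact = near-term impact + future impact) and uses the 5x and 2x boosts from the example; the function names are hypothetical. With those numbers the break-even ratio of future to near-term importance works out to 4x.

```python
def option_values(near: float, future: float,
                  near_boost: float = 5.0, future_boost: float = 2.0) -> tuple[float, float]:
    """Return (organize the course, build skills) under an additive impact model."""
    organize = near_boost * near + future        # near-term impact multiplied
    build_skills = near + future_boost * future  # future impact multiplied
    return organize, build_skills


def breakeven_ratio(near_boost: float = 5.0, future_boost: float = 2.0) -> float:
    """Future/near-term importance ratio at which both options are equally good.

    Building skills wins when (future_boost - 1) * F > (near_boost - 1) * N.
    """
    return (near_boost - 1) / (future_boost - 1)


if __name__ == "__main__":
    print(breakeven_ratio())  # prints 4.0
    for ratio in (2.5, 4.0, 6.0):
        organize, skills = option_values(near=1.0, future=ratio)
        print(f"F = {ratio}N: organize = {organize}, build skills = {skills}")
```

At a ratio of 2.5 organizing still comes out ahead (7.5 vs 6.0), the two options tie at 4, and building skills wins above that.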

Feelings of urgency may be counterproductive for some people

If people think they have to be useful soon they might:

- work unsustainable hours

- feel anxious

- become desperate and take foolish actions

I was substantially more anxious when I thought most of my impact was going to come from what I did in the next few years.

Yeah, thanks. I flip back and forth too. I'm currently leaning a little further toward the "useful later" path than you, in that I think I'm gonna make a bet on a skillset that isn't correlated with the rest of the movement.

My anecdotal sense (modulo the fact that the people you meet at conferences are selected in a particular way) is that EA is overexposed to some impatient theories of change (mostly to do with passive impact, support roles, or community building). Furthermore, I think there's pressure in this direction because it is easier than hard math/CS: alignment research takes a lot of emotional resilience, facing your limitations, failing often, etc. I worry that we're deeply underexposed to "Alice is altruistically motivated, can think for herself about population ethics and threat models, and conveniently is an expert in super niche xyz with a related out-of-movement friend group who can help her with some project that wasn't on OpenPhil's radar 5 or even 2 years ago".

Yeah, EA is probably underrating uncorrelated skillsets and breadth of in-movement expertise.