Matt_Sharp

Bio

I'm just a normal, functioning member of the human race, and there's no way anyone can prove otherwise.

Posts 4

Comments 107

Re 2 - ah yeah, I was assuming that at least one alien civilisation would aim to 'technologize the Local Supercluster' if humans didn't. If they all just decided to stick to their own solar system or not spread sentience/digital minds, then of course that would be a loss of experiences.

Thanks for clarifying 1 and 3!

Interesting read, and a tricky topic! A few thoughts:

- What were the reasons for tentatively suggesting using the median estimate of the commenters, rather than being consistent with the SoGive neartermist threshold?

- One reason against using the very high end of the range is the plausible existence of alien civilisations. If humanity goes extinct, but there are many other potential civilisations and we think they have similar moral value to humans, then preventing human extinction is less valuable.

- You could try using an adapted version of the Drake equation to estimate how many civilisations there might be. Some of the parameters would have to change to reflect the different context: rather than estimating only the civilisations in the Milky Way that could currently communicate with us, you'd be estimating how many there could be across the Local Supercluster.

- I'm still not entirely sure what the purpose of the threshold would be.

- The most obvious reason is to compare longtermist causes with neartermist ones, to understand the opportunity cost - in which case I think this threshold should be consistent with the other SoGive benchmarks/thresholds (i.e. what you did with your initial calculations).

- Indeed, the lower-end estimate (only valuing existing life) would be useful for donors who take a completely neartermist perspective but who aren't set on supporting (e.g.) health and development charities.

- If the aim is to be selective amongst longtermist causes so that you're not just funding all (or none) of them, then why not just fund causes in order of cost-effectiveness, starting with the most cost-effective, until your funding runs out?

- I suppose this is where the giving now vs giving later point comes in. But in this case I'm not sure how you could try to set a threshold a priori?

- It seems like you need some estimates of cost-effectiveness first. Then (e.g.) choose to fund the top x% of interventions in one year, and use this to inform the threshold in subsequent years. Depending on the apparent distribution of the initial cost-effectiveness estimates, you might decide 'actually, we think there are plenty of interventions out there that are better than all the ones we have seen so far, if only we search a little bit harder'.

- Trying to incentivise more robust thinking around the cost-effectiveness of individual longtermist projects seems really valuable! I'd like to see more engagement by those working on such projects. Perhaps SoGive can help enable such engagement :)
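The Drake equation is just a product of a star-formation rate, a chain of fractions and a civilisation lifetime, so the adaptation suggested above is easy to sketch. Below is a minimal Python sketch, assuming one hypothetical way to adapt it: swap the communication fraction f_c for whatever fraction you actually care about (e.g. civilisations that would spread beyond their home system), then scale the per-galaxy figure by an assumed number of galaxies in the Local Supercluster. The names `DrakeParams`, `civilisations_per_galaxy`, `civilisations_in_region` and `frac_counted` are illustrative, and every input value is a placeholder, not an estimate.

```python
from dataclasses import dataclass


@dataclass
class DrakeParams:
    star_formation_rate: float   # R*: new stars formed per year in one galaxy
    frac_with_planets: float     # f_p: fraction of those stars with planets
    habitable_per_system: float  # n_e: potentially habitable planets per such system
    frac_life: float             # f_l: fraction of those planets where life appears
    frac_intelligent: float      # f_i: fraction of those where intelligence evolves
    lifetime_years: float        # L: years a civilisation stays in the counted state


def civilisations_per_galaxy(p: DrakeParams, frac_counted: float) -> float:
    """Drake-style product for one galaxy. Passing f_c (fraction that become
    detectable communicators) as frac_counted gives the standard Drake equation;
    passing a hypothetical 'fraction that spread beyond their home system'
    gives an adapted version."""
    return (p.star_formation_rate * p.frac_with_planets * p.habitable_per_system
            * p.frac_life * p.frac_intelligent * frac_counted * p.lifetime_years)


def civilisations_in_region(p: DrakeParams, frac_counted: float,
                            n_galaxies: float) -> float:
    """Hypothetical scaling step: assume every galaxy in the region (e.g. the
    Local Supercluster) resembles the one the parameters describe."""
    return civilisations_per_galaxy(p, frac_counted) * n_galaxies


# Placeholder inputs that only demonstrate the arithmetic; they are NOT estimates.
params = DrakeParams(star_formation_rate=1.0, frac_with_planets=0.5,
                     habitable_per_system=2.0, frac_life=0.1,
                     frac_intelligent=0.01, lifetime_years=10_000.0)
per_galaxy = civilisations_per_galaxy(params, frac_counted=0.1)
in_region = civilisations_in_region(params, frac_counted=0.1, n_galaxies=1_000.0)
print(per_galaxy, in_region)
```

Treating every galaxy as identical is the crudest possible scaling; a more careful version would let the parameters vary by galaxy type.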
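The two ideas in the bullets above (fund options in descending order of cost-effectiveness until the money runs out, then use the bar that the top x% cleared to inform next year's threshold) can be sketched in a few lines of Python. This is a hypothetical illustration, not SoGive's method: it assumes funding is divisible and that each option's cost-effectiveness stays constant up to its funding gap. `Intervention`, `greedy_allocate` and `threshold_from_top_fraction` are made-up names, and the example options are invented.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Intervention:
    name: str
    funding_gap: float       # how much the intervention could absorb (e.g. $)
    value_per_dollar: float  # estimated cost-effectiveness, in whatever unit you use


def greedy_allocate(options: list[Intervention], budget: float) -> dict[str, float]:
    """Fund options in descending order of cost-effectiveness until the budget
    runs out. The last option funded may only be partly funded."""
    allocation: dict[str, float] = {}
    remaining = budget
    for opt in sorted(options, key=lambda o: o.value_per_dollar, reverse=True):
        if remaining <= 0:
            break
        grant = min(opt.funding_gap, remaining)
        allocation[opt.name] = grant
        remaining -= grant
    return allocation


def threshold_from_top_fraction(options: list[Intervention], top_fraction: float) -> float:
    """Cost-effectiveness of the weakest option in the top `top_fraction` of those
    assessed (count rounded to the nearest whole option, minimum one); a candidate
    provisional bar for the following year."""
    if not options or not 0 < top_fraction <= 1:
        raise ValueError("need at least one option and 0 < top_fraction <= 1")
    ranked = sorted((o.value_per_dollar for o in options), reverse=True)
    k = max(1, round(len(ranked) * top_fraction))
    return ranked[k - 1]


# Hypothetical interventions, purely for illustration.
options = [Intervention("A", 100.0, 5.0), Intervention("B", 50.0, 10.0),
           Intervention("C", 200.0, 1.0)]
print(greedy_allocate(options, budget=120.0))       # {'B': 50.0, 'A': 70.0}
print(threshold_from_top_fraction(options, 1 / 3))  # 10.0
```

With divisible funding and constant returns, this greedy order is the best you can do for a fixed budget; if returns diminish within an option, you would instead split each option into tranches with their own cost-effectiveness.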

Assuming it could be implemented, I definitely think your approach would help prevent the imposition of serious harms.

I still intuitively think the AI could just get stuck, though, given the range of contradictory views even within fairly mainstream moral and political philosophy. It would need a process for making decisions under moral uncertainty, which might entail putting additional weight on the views of certain philosophers. But because this is (as far as I know) a very recent area of ethics, what little work exists could be quite badly flawed.

Hey Spencer!

From the 2022 South Africa paper, it appears that the bedaquiline-based regimen actually consists of 8 different drugs (see table S1), with a total cost per treatment of $6,402 in the base case. It's not clear to me how much each drug contributes to the total cost, but you should be able to work this out from the regimen info in table S1 and the drug cost data from the medicines catalog (reference 24 of the paper). If you've done it right, you should end up with ~$6,402. Then you can just tweak the cost of bedaquiline (BDQ) (I'm assuming no other drugs have also changed price).
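The two-step check above (rebuild the per-drug costs so they sum to the paper's base case, then swap in a new bedaquiline price) is simple arithmetic, sketched here in Python. It assumes, as the comment does, that the cost per treatment is just the sum of the per-drug costs. The function names are hypothetical, and the per-drug figures in the usage lines are made-up placeholders, not numbers from table S1 or the medicines catalog.

```python
BASE_CASE_TOTAL = 6402.0  # $ per treatment, base case reported in the 2022 paper


def regimen_cost(drug_costs: dict[str, float]) -> float:
    """Cost per treatment, assuming it is simply the sum of the per-drug costs."""
    return sum(drug_costs.values())


def reconciles(drug_costs: dict[str, float], target: float,
               rel_tol: float = 0.01) -> bool:
    """True if the rebuilt total is within rel_tol (1% by default) of the target."""
    return abs(regimen_cost(drug_costs) - target) <= rel_tol * target


def with_new_price(drug_costs: dict[str, float], drug: str,
                   new_cost: float) -> dict[str, float]:
    """Copy of the cost table with one drug's cost replaced; other drugs unchanged."""
    if drug not in drug_costs:
        raise KeyError(f"{drug!r} is not in the regimen")
    updated = dict(drug_costs)
    updated[drug] = new_cost
    return updated


# Placeholder figures only: the real regimen has 8 drugs, whose costs would come
# from table S1 and the medicines catalog.
costs = {"bedaquiline": 3000.0, "other_drug_1": 2000.0, "other_drug_2": 1402.0}
assert reconciles(costs, BASE_CASE_TOTAL)
new_total = regimen_cost(with_new_price(costs, "bedaquiline", 1500.0))
print(new_total)  # 4902.0
```

If the rebuilt total doesn't reconcile, that is a sign the $6,402 includes something beyond drug costs, and the new price should be substituted with that in mind.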

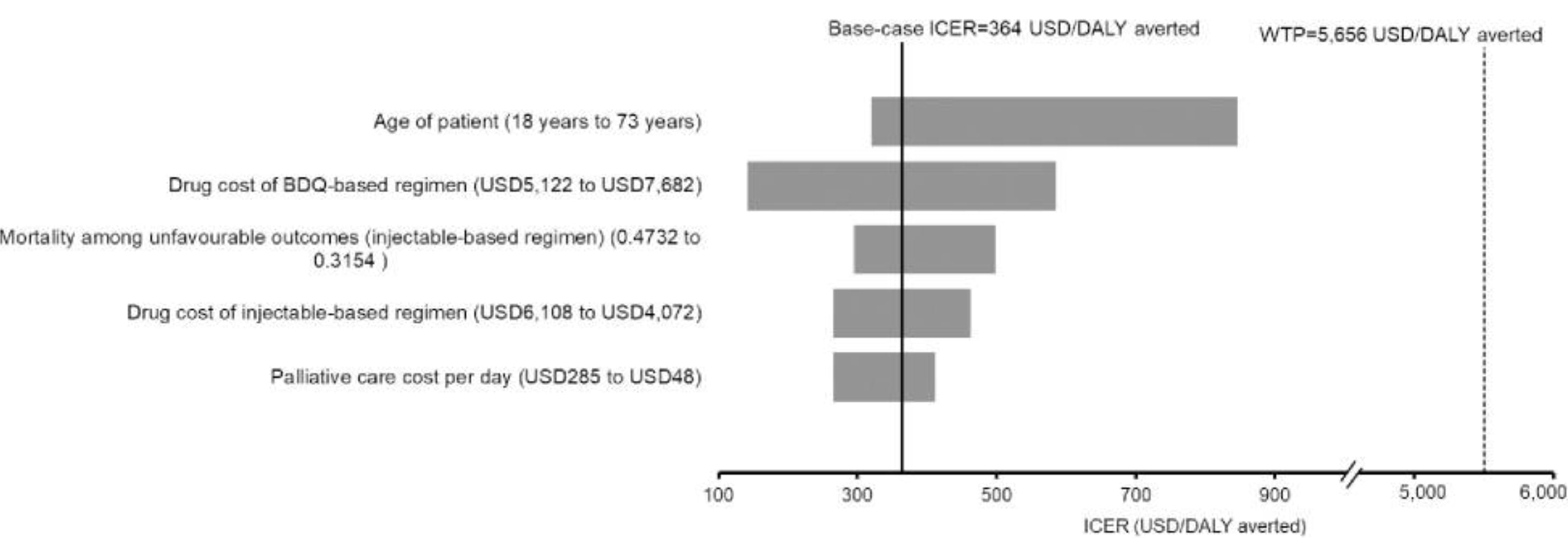

If you manage to do this, then quite plausibly your updated cost per treatment will fall within the range of the paper's one-way sensitivity analysis ($5,122 to $7,682), i.e. Figure 2. From this you can eyeball what the new ICER would be.
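"Eyeballing" Figure 2 can be made slightly more precise. If only the regimen cost changes, and (as in a typical cohort model) costs enter additively while health effects don't depend on price, then the ICER (incremental cost divided by incremental effect) moves linearly with the cost per treatment. Reading the ICER off at a new cost is then linear interpolation between the two ends of the one-way range. A minimal Python sketch under that assumption; `interpolate_icer` is a hypothetical helper, and the endpoint ICERs in the usage line are placeholders that would need to be read off Figure 2.

```python
COST_LOW, COST_HIGH = 5122.0, 7682.0  # $ range from the paper's one-way sensitivity analysis


def interpolate_icer(cost: float, cost_low: float, icer_low: float,
                     cost_high: float, icer_high: float) -> float:
    """Linearly interpolate the ICER at a given cost per treatment between the
    two ends of a one-way sensitivity analysis. Refuses to extrapolate."""
    if cost_high == cost_low:
        raise ValueError("cost_low and cost_high must differ")
    if not min(cost_low, cost_high) <= cost <= max(cost_low, cost_high):
        raise ValueError("cost is outside the sensitivity range")
    slope = (icer_high - icer_low) / (cost_high - cost_low)
    return icer_low + slope * (cost - cost_low)


# Endpoint ICERs (100.0 and 356.0) are placeholders; read the real ones off Figure 2.
print(interpolate_icer(6402.0, COST_LOW, 100.0, COST_HIGH, 356.0))
```

Refusing to extrapolate is deliberate: outside the tested range you'd want the model itself (or the lead author) rather than a straight line.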

Alternatively, you could try reaching out to the lead author - assuming they still have their model to hand, it should be very easy for them to adjust the cost of BDQ and see what the model outputs :)

Also, just to caution, the South Africa paper is "from the perspective of the South Africa national healthcare provider" and so uses the GDP per capita of South Africa as the willingness-to-pay threshold, and some of the health and cost data is also South Africa-specific. So I don't think this can easily be used to infer the change in cost-effectiveness in other settings (though it might give a rough indication of whether or not there could be a substantial change).

I believe CEA's general lack of engagement with social media (and with some traditional media) was a deliberate choice, reflecting a wish not to grow EA too quickly and concerns about the 'fidelity' of ideas. See e.g. this CEA blog post. There has been some previous discussion of this on the Forum, e.g. here and here.

I don't know if this is still their approach, or will be once they have a new Executive Director in place.

This post seems very much aligned with (and perhaps inspired by) this highly commended article and this podcast.

Supporting economic growth seems to be very much a mainstream, common-sense idea (in the UK at least it receives a fair amount of coverage in the press). Given this, I'm not convinced simply talking about the benefits of economic growth is particularly valuable. However, perhaps you'll be recommending particular career paths, neglected policies, or organisations that may have a particularly outsized impact on promoting growth and could be supported?

I agree with the overall claims.

However, with regard to Claim 1:

There are good reasons for some people to not give at some points in their lives — for instance, if it leaves someone with insufficient resources to live a comfortable life, or if it would interfere strongly with the impact someone could have in their career. However, I expect these situations will be the exception rather than the rule within the current EA community, [6] and even where they do apply there are often ways around them (e.g. exceptions to the Pledge for students and people who are unemployed).

I disagree with the phrasing 'exception rather than the rule'. To me this suggests that there are only rare reasons for failing to donate at least 10%.

But in footnote 6 you say "the 2022 EA survey found that ~50% of respondents were full-time employed".

If this survey is representative, it suggests that a small majority (or a sizable minority) of EAs could plausibly not be in a position to donate at least 10% (and of those in full-time employment, some will be on low salaries or trying to save up enough for a big enough 'personal runway').
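The reasoning above can be written as a one-line estimate. If a share p of respondents are employed full time, and we assume (loosely, as an upper-bound framing) that anyone not employed full time might not be in a position to give 10%, plus some fraction q of full-time employees who are on low salaries or building a runway, then the share who might not be in a position to give is (1 - p) + p·q. Only p ≈ 0.5 comes from the survey; q is a hypothetical input, and `share_possibly_unable` is an illustrative name.

```python
def share_possibly_unable(p_full_time: float, q_constrained: float) -> float:
    """Share of respondents who might not be in a position to give 10%, assuming
    everyone not employed full time might not be, plus a fraction q_constrained
    of full-time employees (low salary, building a personal runway)."""
    for name, x in (("p_full_time", p_full_time), ("q_constrained", q_constrained)):
        if not 0.0 <= x <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return (1.0 - p_full_time) + p_full_time * q_constrained


print(share_possibly_unable(0.5, 0.0))  # 0.5
print(share_possibly_unable(0.5, 0.2))  # q = 0.2 is purely hypothetical
```

Any q above zero pushes the share past half, which is where the "small majority" reading comes from; relaxing the assumption that everyone outside full-time work is constrained pulls it back towards a "sizable minority".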

Sounds like he'd be good to have at the debate! But it seems very unlikely he'll make the first one in a few weeks' time. There seem to be 3 requirements to qualify for the first debate:

It sounds like he needs a big boost from somewhere - maybe if, e.g., Elon Musk were to tweet about him and endorse his position on AI, that would get him there (and convince him to change his mind re 1, though I'm not sure briefly speaking about AI alignment justifies this)?!