Jeff Kaufman

Bio

Software engineer in Boston, parent, musician. Switched from earning to give to direct work in pandemic mitigation. Married to Julia Wise. Speaking for myself unless I say otherwise.

Full list of EA posts: jefftk.com/news/ea

Posts 63

Comments 683

no concrete feedback loops in longtermist work

This seems much too strong. Sure, "successfully avert human extinction" doesn't work as a feedback loop, but projects have earlier steps. And the areas in which I expect technical work on existential risk reduction to be most successful are ones where those loops are solid, and are well connected to reducing risk.

For example, I work in biosecurity at the NAO, with an overall goal of something like "identify future pandemics earlier". Some concrete questions that would give good feedback loops:

- If some fraction of people have a given virus, how much do we expect to see in various kinds of sequencing data?

- How well can we identify existing pathogens in sequencing data?

- Can we identify novel pathogens? If we don't use pathogen-specific data, can we successfully re-identify known pathogens?

- What are the best methods for preparing samples for sequencing to get a high concentration of human viruses relative to other things?

Similarly, consider the kinds of questions Max discusses in his recent far-UVC post.

Can you say more about why you [EDIT: this is wrong] decided to categorize climate change as neartermist?

Is it something like, the names are not literal (someone who is concerned that AI will kill everyone in the next 15 years seems literally more neartermist than someone who is worried about the effects of climate change over the next century) but instead represent two major clusters of EA cause prioritization with the main factor being existential impact? And few people who put down a high ranking for climate change are doing it because they are concerned that it will lead to extinction?

I can't imagine anyone would ever sue a charity for this.

I think the main issue isn't the charity being sued, but loss of 501c3 status?

Here's an example of a situation the IRS might be worried about: I give $100k to charity in 2023, the charity gives me a "no goods and services" receipt, I deduct $100k from my income when figuring my taxes, and I save, say, $30k. Then in 2024 I tell the charity "I'm sorry, I lost my job, I'm in danger of losing my house, and I need the money back". They give me the money back, and I don't tell the IRS.
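To make the arithmetic concrete, here's a rough sketch. The flat 30% marginal rate is an assumption chosen to match the "say $30k" figure, and the function name is hypothetical; real tax calculations involve brackets and deduction limits that this ignores.

```python
def tax_saved(deduction: float, marginal_rate: float) -> float:
    """Approximate tax saved by a deduction, assuming one flat marginal rate."""
    return deduction * marginal_rate


donation = 100_000
assumed_rate = 0.30  # assumption: flat marginal rate, picked to match the ~$30k example

saved = tax_saved(donation, assumed_rate)

# If the charity later returns the full donation and the refund goes unreported,
# the donor ends up with the original money back plus the tax savings.
net_gain_if_unreported_refund = saved

print(f"Tax saved in 2023: ${saved:,.0f}")
print(f"Net gain after an unreported refund: ${net_gain_if_unreported_refund:,.0f}")
```

So under these assumptions the donor comes out roughly $30k ahead at the government's expense, which is the kind of outcome the rules are presumably meant to prevent.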

This is an interesting analysis, thanks for writing it up!

One aspect of the "waves" of Ben's post that this doesn't touch on is how the community has responded to varying levels of funding availability. When there were a lot more things that clearly needed doing than money to fund them (say, pre-2016, when OP really got going) there was a lot of emphasis on earning to give, effective volunteering, frugality, etc. Then as money became less of the limiting constraint the culture shifted. I don't think this is reflected much in EA forum topic choice, because there wasn't a huge amount to say along these lines, but it felt like a very different movement to be in.

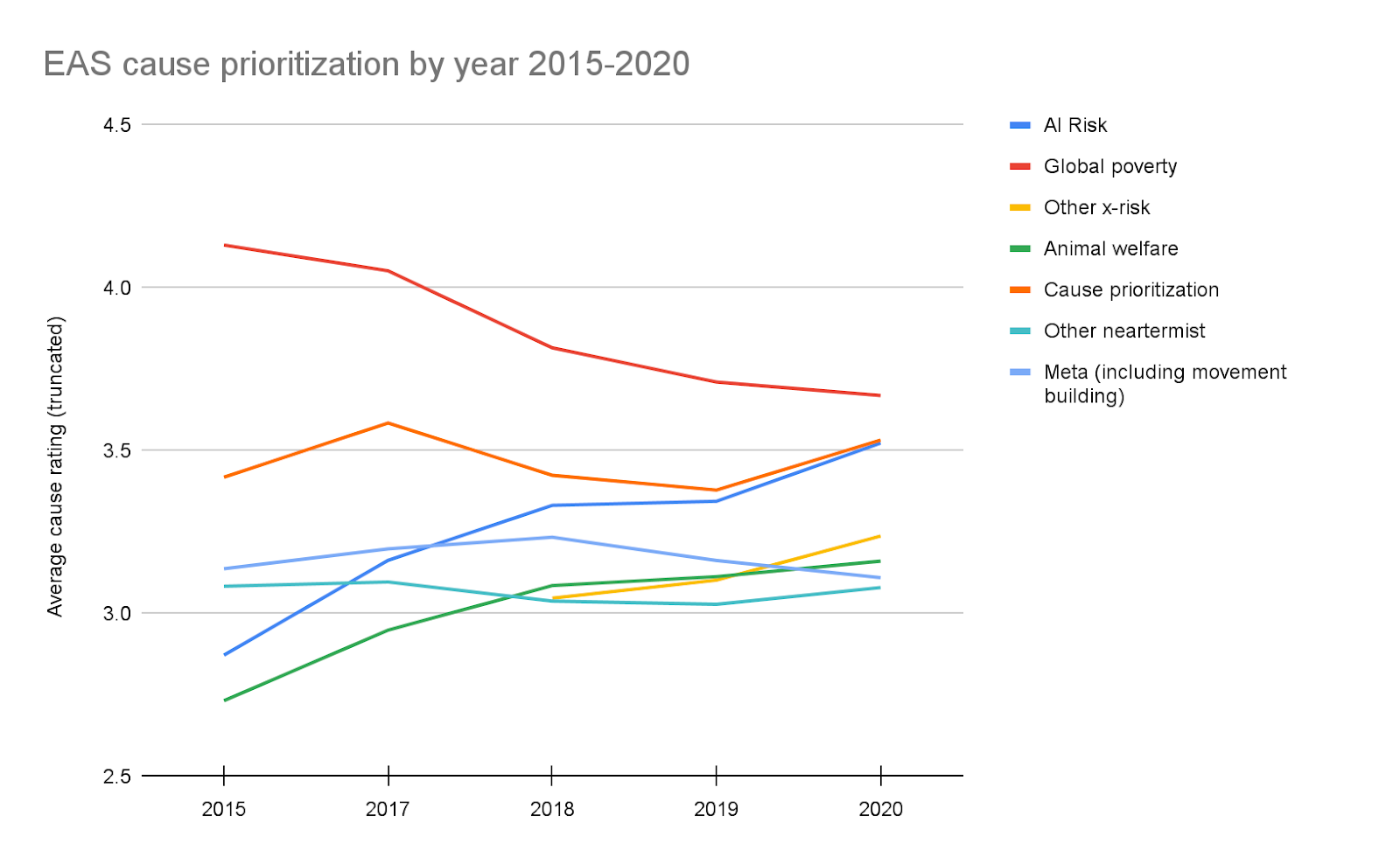

I also think that in as much as waves were about what topics EAs prioritized, the shift from helping others in bad conditions in the near future (global poverty, animals) to trying to reduce the risk of extinction (ai, bio, etc) has been stronger the farther people are from the core of the movement. Early broad audience writing mostly pushed the former, and more recently has pushed the latter. All along, though, both have been heavily represented within the core, and the Forum mostly reflects that. I'd predict you'd see a clearer topic shift if you looked at what EA Survey respondents think are the top priority and where they say they're donating to.

EDIT: https://forum.effectivealtruism.org/posts/83tEL2sHDTiWR6nwo/ea-survey-2020-cause-prioritization has a chart, and while I'm not that happy with their cause groupings it does show real change:

You don't have to get into this if it's more personal than you'd like, but I'm confused about the contrast between being allergic to:

Beans (but not peanuts, which are also legumes)

And then later saying:

Tofu is a fine supplement in many dishes

Tofu is made from soy beans; do you know if you can do soy in general, or if the fermentation (or another step of the tofu making process) is what makes it ok?

in terms of tone, it's pretty supportive

That's not my read? It starts by establishing Edwards as a trusted expert who pays attention to serious risks to humanity, and then contrasts this with students who are "focused on a purely hypothetical risk". Except the areas Edwards is concerned about ("autonomous weapons that target and kill without human intervention") are also "purely hypothetical", as is anything else wiping out humanity.

I read it as an attempt to present the facts accurately but with a tone that is maybe 40% along the continuum from "unsupportive" to "supportive"? Example word choices and phrasings that read as unsupportive to me: "enthralled", emphasizing that the outcome is "theoretical", the fixed-pie framing of "prioritize the fight against rogue AI over other threats", emphasizing Karnofsky's conflicts of interest in response to a blog post that pre-dates those conflicts, bringing up the Bostrom controversy that isn't really relevant to the article, and "dorm-room musings accepted at face value in the forums". But it does end on a positive note, with Luby (the alternative expert) coming around, Edwards in between, and an official class on it at Stanford.

Overall, instead of thinking of the article as trying to be supportive or not, I think it's mostly trying to promote controversy?

Founders Pledge evaluates charities in a range of fields including global catastrophic risk reduction. For example, they recommend NTI's biosecurity work, and this is why GWWC marks NTI's bio program as a top charity. Founders Pledge is not as mature as GiveWell: they don't have the same research depth, and they're covering a very broad range of fields with a limited staff. But this is some work in that direction.