This post is intended to consolidate all the Programme Development Methodologies I found into one post, with an overview of what they cover. None of them on their own were sufficient for our purposes at Shrimp Welfare Project (which is why we developed the Impact Roadmap). But perhaps that's unique to our situation and you may be able to find just what you need at one of the links below :)

As with the rest of the Roadmap, I'll likely update it in the future, but at the moment I just wanted to provide them all together in the same place, along with a concise summary (usually cobbled together from the resource itself). I tried to align them with the appropriate stage of the Roadmap, but it became unnecessarily complicated, so I'm just presenting each one as is. Additionally, if I'm missing any please let me know! I'd love to update it to ensure it's as comprehensive as it can be.

Impact Measurement Guide

- Create a Theory of Change - A theory of change is a narrative about how and why a program will lead to social impact. Every development program rests on a theory of change – it’s a crucial first step that helps you remain focused on impact and plan your program better.

- Assess Need - A needs assessment describes the context in which your program will operate (or is already operating). It can help you understand the scope and urgency of the problems you identified in the theory of change. It can also help you identify the specific communities that can benefit from your program and how you can reach them.

- Review Evidence - An evidence review summarizes findings from research related to your program. It can help you make informed decisions about what’s likely to work in your context, and can provide ideas for program features.

- Assess Implementation - A process evaluation can tell you whether your program is being implemented as expected, and if assumptions in your theory of change hold. It is an in-depth, one-time exercise that can help identify gaps in your program.

- Monitor Performance - A monitoring system provides continuous real-time information about how your program is being implemented and how you’re progressing toward your goals. Once you set up a monitoring system, you would receive regular information on program implementation to track how your program is performing on specific indicators.

- Measure Impact - Impact evaluations allow you to conclude with reasonable confidence that your program caused the outcomes you are seeing. They tell you how big of an effect your program is having.
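To make the "track how your program is performing on specific indicators" idea a little more concrete, here is a minimal Python sketch. It assumes each indicator has a baseline, a target and a latest reading, and expresses progress as the share of the distance from baseline to target covered so far. The `Indicator` class and the example indicators are hypothetical, not part of the Impact Measurement Guide.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    # Hypothetical indicator record: baseline, target and latest reading.
    name: str
    baseline: float
    target: float
    latest: float

    def progress(self) -> float:
        """Share of the distance from baseline to target covered so far.

        0.0 means no change from baseline, 1.0 means the target is met.
        Works for indicators that should go down as well as up.
        """
        if self.target == self.baseline:
            raise ValueError(f"{self.name}: target must differ from baseline")
        return (self.latest - self.baseline) / (self.target - self.baseline)


# Hypothetical indicators, for illustration only.
indicators = [
    Indicator("Farms enrolled", baseline=0, target=40, latest=30),
    Indicator("Average days to resolve a reported issue", baseline=10, target=4, latest=7),
]

for ind in indicators:
    print(f"{ind.name}: {ind.progress():.0%} of the way to target")
```

Values above 1.0 mean the target has been exceeded, and negative values mean the indicator has moved away from the target since the baseline.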

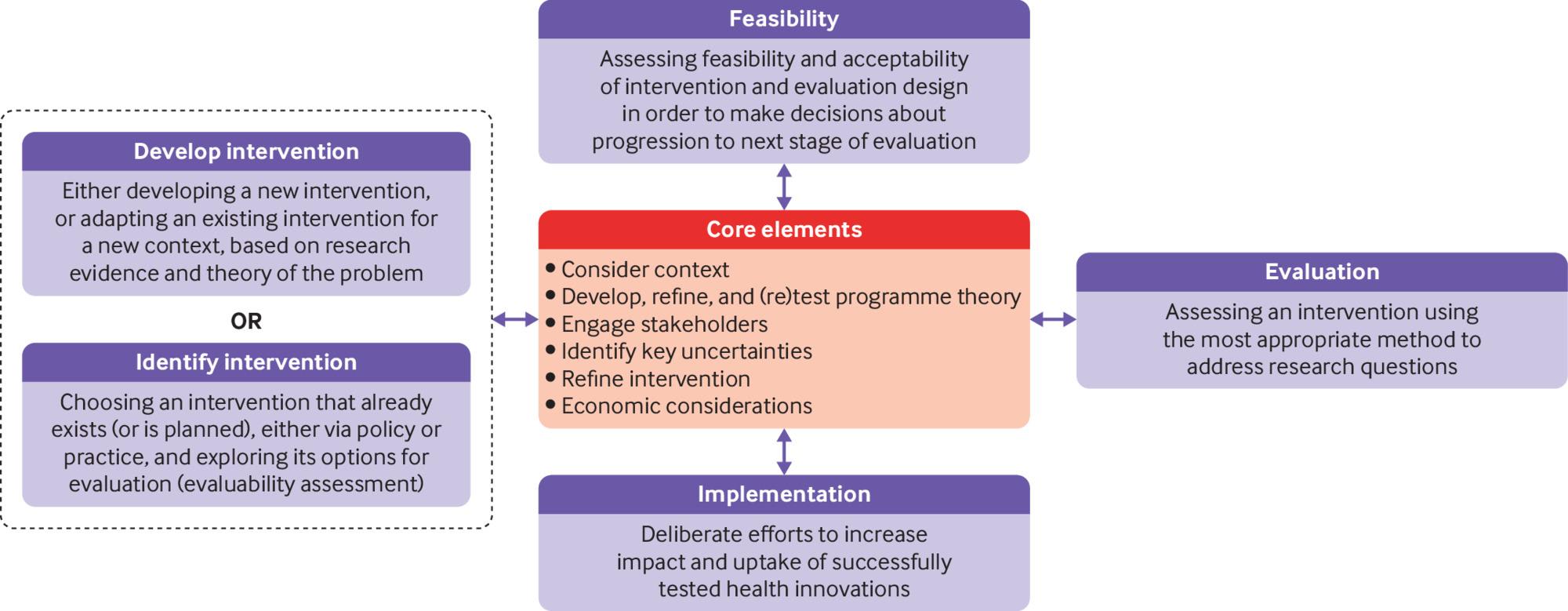

Framework for the development and evaluation of complex interventions

(The link above is to a summary paper which I think is useful; however, they also published a comprehensive report for this framework, which might be worth your time if this framework seems useful. I've found this to be one of the most useful and comprehensive frameworks, though also one of the most difficult to parse when it comes to understanding "what to do next".)

The framework divides complex intervention research into four phases: development or identification of the intervention, feasibility, evaluation, and implementation. A research programme might begin at any phase, depending on the key uncertainties about the intervention in question. Repeating phases is preferable to automatic progression if uncertainties remain unresolved. Each phase has a common set of core elements. These elements should be considered early and continually revisited throughout the research process, and especially before moving between phases (for example, between feasibility testing and evaluation).

- Core Elements - considered throughout all of the phases of complex intervention research

- Consider context - any feature of the circumstances in which an intervention is conceived, developed, evaluated, and implemented

- Develop, refine, and (re)test programme theory - describes how an intervention is expected to lead to its effects and under what conditions—the programme theory should be tested and refined at all stages and used to guide the identification of uncertainties and research questions

- Engage stakeholders - those who are targeted by the intervention or policy, involved in its development or delivery, or more broadly those whose personal or professional interests are affected (that is, who have a stake in the topic)—this includes patients and members of the public as well as those linked in a professional capacity

- Identify key uncertainties - given what is already known and what the programme theory, research team, and stakeholders identify as being most important to discover—these judgments inform the framing of research questions, which in turn govern the choice of research perspective

- Refine the intervention - the process of fine-tuning or making changes to the intervention once a preliminary version (prototype) has been developed

- Economic considerations - determining the comparative resource and outcome consequences of the interventions for those people and organisations affected

- Developing or identifying a complex intervention - The term ‘development’ is used here for the whole process of designing and planning an intervention from initial conception through to feasibility, pilot or evaluation study.

- Plan the development process

- Involve stakeholders, including those who will deliver, use and benefit from the intervention

- Bring together a team and establish decision-making processes

- Review published research evidence

- Draw on existing theories

- Articulate programme theory

- Undertake primary data collection

- Understand context

- Pay attention to future implementation of the intervention in the real world

- Design and refine the intervention

- End the development phase

- Feasibility - Pieces of research done before a main study in order to answer the question ‘Can this study be done?’

- Evaluation - Enables judgments to be made about the value of an intervention. It includes whether an intervention ‘works’ in the sense of achieving its intended outcome, identifying what other impacts it has, theorising how it works, taking account of how it interacts with the context in which it is implemented, how it contributes to system change, and how the evidence can be used to support real-world decision-making.

- Efficacy: can this work in ideal circumstances?

- Effectiveness: what works in the real world?

- Theory based: what works in which circumstances and how?

- Systems: how do the system and intervention adapt to one another?

- Implementation - Early consideration of implementation increases the potential of developing an intervention that can be widely adopted and maintained in real-world settings. Implementation questions should be anticipated in the intervention programme theory, and considered throughout the phases of intervention development, feasibility testing, process, and outcome evaluation. Alongside implementation-specific outcomes (such as reach or uptake of services), attention to the components of the implementation strategy, and contextual factors that support or hinder the achievement of impacts, are key.

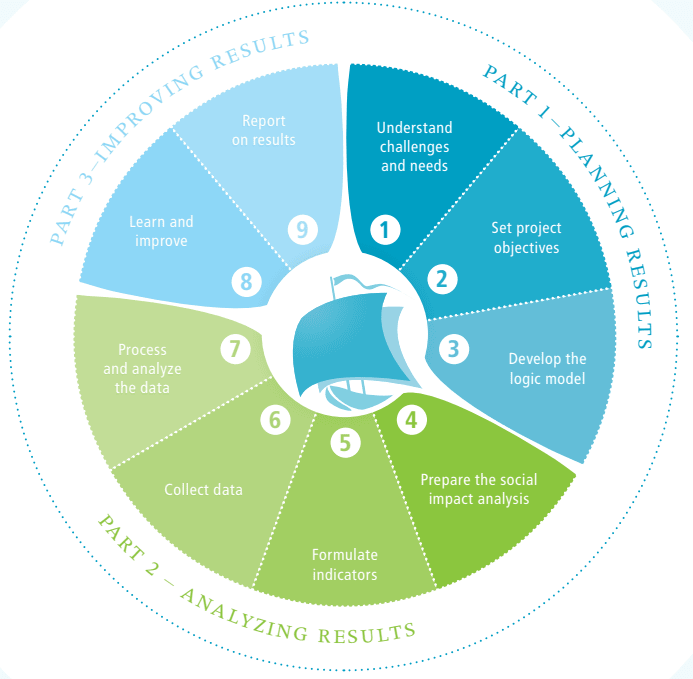

Social Impact Navigator

PHINEO also has an accompanying book - The Practical Guide for Organisations targeting better results

- Planning Impact

- Determining Needs - use a needs assessment and context analysis to obtain important information about your target group’s needs and the context of your (planned) project. This will help you plan your project in an impact-oriented way.

- Defining Project Objectives - develop project objectives and an overall project approach, using your needs assessment and context analysis as a foundation.

- Developing a Logic Model - use a logic model to develop a systematic path toward achievement of your project objectives.

- Analysing Impact

- Preparing Impact Analysis - lay out the logistics of a social impact analysis and how to formulate the questions used in this analysis.

- Developing Indicators - develop indicators for the collection of data

- Collecting Data - explore various data collection methods and learn how to determine the right methods for your social impact analysis.

- Analysing Data - evaluate and analyse the data collected to make sure you obtain information you can use to guide your conclusions and recommended actions to be taken.

- Improving Impact

- Learning & Improving - learn from the findings of the social impact analysis, enabling you to adapt and improve structures, processes and strategies in your project work.

- Reporting Impact - use the findings of the social impact analysis for your reporting and communications work.

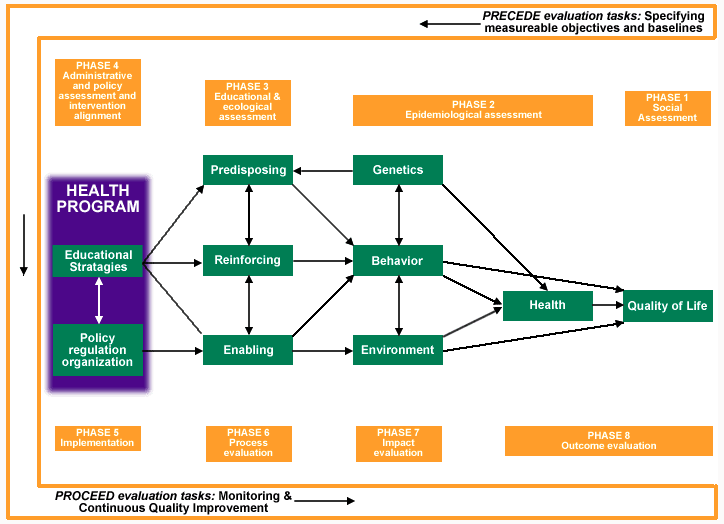

PRECEDE–PROCEED

PRECEDE (Predisposing, Reinforcing, and Enabling Constructs in Educational/Environmental Diagnosis and Evaluation) represents the process that precedes an intervention. PRECEDE has four phases:

- Social Assessment - Defining the ultimate outcome. The focus here is on what the community wants and needs, which may seem unrelated to the issue you plan to focus on. What outcome does the community find most important? Eliminating or reducing a particular problem (homelessness)? Addressing an issue (race)? Improving or maintaining certain aspects of the quality of life (environmental protection)? Improving the quality of life in general (increasing or creating recreational and cultural opportunities)?

- Epidemiological Assessment - Identifying the issue. In Phase 2 of PRECEDE, you look for the issues and factors that might cause or influence the outcome you’ve identified in Phase 1 (including supports for and barriers to achieving it), and select those that are most important, and that can be influenced by an intervention. (One of the causes of community poverty, for instance, may be the global economy, a factor you probably can’t have much effect on. As important as the global economy might be, you’d have to change conditions locally to have any real impact.)

- Educational & Ecological Assessment - Examining the factors that influence behaviour, lifestyle, and responses to environment. Here, you identify the factors that will create the behaviour and environmental changes you’ve decided on in Phase 2.

- Administrative and Policy Assessment and Intervention Alignment - Identifying “best practices” and other sources of guidance for intervention design, as well as administrative, regulation, and policy issues that can influence the implementation of the program or intervention. Phase 4 helps you look at organizational issues that might have an impact on your actual intervention. It factors in the effects on the intervention of your internal administrative structure and policies, as well as external policies and regulations (from funders, public agencies, and others).

PROCEED (Policy, Regulatory, and Organizational Constructs in Educational and Environmental Development) describes how to proceed with the intervention itself. PROCEED has four phases:

- Implementation - At this point, you’ve devised an intervention (largely in Phases 3 and 4), based on your analysis. Now, you have to carry it out. This phase involves doing just that – setting up and implementing the intervention you’ve planned.

- Process evaluation - This phase isn’t about results, but about procedure. The evaluation here is of whether you’re actually doing what you planned. If, for instance, you proposed to offer mental health services three days a week in a rural area, are you in fact offering those services?

- Impact Evaluation - Here, you begin evaluating the initial success of your efforts. Is the intervention having the desired effect on the behavioural or environmental factors that it aimed at changing – i.e., is it actually doing what you expected?

- Outcome Evaluation - Is your intervention really working to bring about the outcome the community identified in Phase 1? It may be completely successful in every other way – the process is exactly what you planned, and the expected changes made – but its results may have no effect on the larger issue. In that case, you may have to start the process again, to see why the factors you focused on aren’t the right ones, and to identify others that might work.

Confusingly, some descriptions list 8 phases and some list 9, and some name the phases differently.

6SQuID (6 Steps in Quality Intervention Development)

- Define and understand the problem and its causes - Clarifying the problem with stakeholders, using the existing research evidence, is the first step in intervention development. Some health problems are relatively easily defined and measured, such as the prevalence of a readily diagnosed disease, but others have several dimensions and may be perceived differently by different groups. For instance, ‘unhealthy housing’ could be attributed to poor construction, antisocial behaviour, overcrowding or lack of amenities. Definitions therefore need to be sufficiently clear and detailed to avoid ambiguity or confusion. Is ‘the problem’ a risk factor for a disease/condition (eg, smoking) or the disease/condition itself (eg, lung cancer)? If the former, it is important to be aware of the factor's importance relative to other risk factors. If this is modest, even a successful intervention to change it might be insufficient to change the ultimate outcome.

- Clarify which causal or contextual factors are malleable and have greatest scope for change - The next step is to identify which of the immediate or underlying factors that shape a problem have the greatest scope to be changed. These might be at any point along the causal chain. For example, is it more promising to act on the factors that encourage children to start smoking (primary prevention), or to target existing smokers through smoking cessation interventions (secondary prevention)? In general, ‘upstream’ structural factors take longer and are more challenging to modify than ‘downstream’ proximal factors, but, if achieved, structural changes have the greatest population impact.

- Identify how to bring about change: the change mechanism - Having identified the most promising modifiable causal factors to address, the next step is to think through how to achieve that change. All interventions have an implicit or explicit programme theory about how they are intended to bring about the desired outcomes. Central to this is the ‘change mechanism’ or ‘active ingredient’, the critical process that triggers change for individuals, groups, or communities.

- Identify how to deliver the change mechanism - Once the change mechanisms have been identified, the next step is working out how best to deliver them. As with other steps, it is helpful to involve stakeholders with the relevant practical expertise to develop the implementation plan. Sometimes change mechanisms can only be brought about through a very limited range of activities, for instance legal change is achieved through legislation. However, other change mechanisms might have several delivery options; for instance modelling new behaviours could be performed by teachers, peers or actors in TV/radio soap operas. The choice is likely to be target group-specific and context-specific.

- Test and refine on a small scale - Once the initial intervention design has been resolved, in most cases its feasibility needs to be tested and adaptations made. Testing the intervention can clarify fundamental issues such as: acceptability to the target group, practitioners and delivery organisations; optimum content (eg, how participatory), structure and duration; who should deliver it and where; what training is required; and how to maximise population reach.

- Collect sufficient evidence of effectiveness to justify rigorous evaluation/implementation - Before committing resources to a large-scale rigorous evaluation (typically a ‘phase III’ RCT), the final step is to establish sufficient evidence of effectiveness to warrant such investment. What is being sought at this stage is some evidence that the intervention is working as intended, that it is achieving at least some short-term outcomes, and that it is not having any serious unintended effects, for instance exacerbating social inequalities.

Intervention Mapping

- Logic Model of the Problem - Conduct a needs assessment or problem analysis, identifying what, if anything, needs to be changed and for whom;

- Establish and work with a planning group

- Conduct a needs assessment to create a logic model of the problem

- Describe the context for the intervention, including the population, setting, and community

- State program goals

- Program Outcomes and Objectives - Create matrices of change objectives by combining (sub-)behaviours (performance objectives) with behavioural determinants, identifying which beliefs should be targeted by the intervention;

- State expected outcomes for behaviour and environment

- Specify performance objectives for behavioural and environmental outcomes

- Select determinants for behavioural and environmental outcomes

- Construct matrices of change objectives

- Create a logic model of change

- Program Design - Select theory-based intervention methods that match the determinants into which the identified beliefs aggregate, and translate these into practical applications that satisfy the parameters for effectiveness of the selected methods;

- Generate program themes, components, scope, and sequence

- Choose theory- and evidence-based change methods

- Select or design practical applications to deliver change methods

- Program Production - Integrate methods and the practical applications into an organized program;

- Refine program structure and organization

- Prepare plans for program materials

- Draft messages, materials, and protocols

- Pretest, refine, and produce materials

- Program Implementation Plan - Plan for adoption, implementation and sustainability of the program in real-life contexts;

- Identify potential program users (adopters, implementers, and maintainers)

- State outcomes and performance objectives for program use

- Construct matrices of change objectives for program use

- Design implementation interventions

- Evaluation - Generate an evaluation plan to conduct effect and process evaluations.

- Write effect and process evaluation questions

- Develop indicators and measures for assessment

- Specify the evaluation design

- Complete the evaluation plan
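The "matrices of change objectives" step can be hard to picture from the description alone. Below is a minimal Python sketch, assuming a matrix is simply a grid with performance objectives as rows and selected determinants as columns, where each cell is filled in by the planning group with a change objective. The helper names, objectives, determinants and cell text are hypothetical placeholders, not taken from the Intervention Mapping materials.

```python
from typing import Dict, List, Tuple

# A matrix of change objectives: rows are performance objectives (sub-behaviours),
# columns are behavioural determinants, and each cell holds a change objective.
Matrix = Dict[Tuple[str, str], str]


def build_matrix(performance_objectives: List[str], determinants: List[str]) -> Matrix:
    """Create an empty matrix with one blank cell per (objective, determinant) pair."""
    return {(po, det): "" for po in performance_objectives for det in determinants}


def unfilled_cells(matrix: Matrix) -> List[Tuple[str, str]]:
    """List the cells that still need a change objective (or an explicit 'n/a')."""
    return [cell for cell, text in matrix.items() if not text.strip()]


# Hypothetical performance objectives and determinants, for illustration only.
performance_objectives = [
    "PO1: Farmer checks water quality daily",
    "PO2: Farmer records readings in a logbook",
]
determinants = ["Knowledge", "Self-efficacy", "Outcome expectations"]

matrix = build_matrix(performance_objectives, determinants)
matrix[(performance_objectives[0], "Knowledge")] = (
    "Farmer describes which water-quality readings indicate a problem"
)
matrix[(performance_objectives[0], "Self-efficacy")] = (
    "Farmer expresses confidence in using the testing kit"
)

# Each remaining blank cell is a prompt for the planning group.
for po, det in unfilled_cells(matrix):
    print(f"Missing change objective: {po} x {det}")
```

Listing the blank cells is just one way of checking that every combination has been considered; some cells may legitimately be marked as not applicable.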

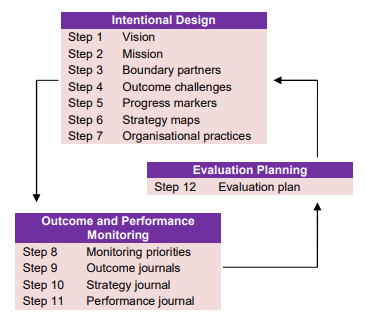

Outcome Mapping

Outcome Mapping focuses on changes in the behaviour of the people, groups and organisations influenced by a programme. Outcome Mapping is designed to deal with complexity, and is not based around linear models of change.

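- Intentional Design - helps a programme establish consensus on the changes it aims to help bring about, and plan the strategies it will use. It helps answer four questions: why (the vision), who (the boundary partners), what (the outcome challenges and progress markers), and how (the mission, strategy maps and organisational practices). Its steps are: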

- Vision - A statement that reflects the large-scale development-related changes that the programme hopes to encourage. It describes economic, political, social, or environmental changes that the programme hopes to help bring about. The programme’s activities should contribute to the vision, but not be solely responsible for achieving it.

- Mission - A statement that describes how the programme intends to support the vision. The mission statement states the areas in which the programme will work towards the vision, but does not list all the activities which the programme will carry out.

- Boundary Partners - Identifying the individuals, groups, or organisations with which the programme interacts directly and where there will be opportunities for influence. Boundary partners may be individual organisations but might also include multiple individuals, groups, or organisations if a similar change is being sought across many different groups (for example, research centres or women's NGOs).

- Outcome Challenges - One for each boundary partner, describing how the behaviour, relationships, activities or actions of an individual, group, or institution will change if the programme is extremely successful. Outcome challenges are phrased in a way that emphasises behavioural change.

- Progress Markers - A set for each boundary partner. These are visible, behavioural changes ranging from the minimum the programme would expect to see the boundary partners doing as an early response to the programme, to what it would like to see, and finally to what it would love to see them doing if the programme were having a profound influence.

- Strategy Maps - Identify the strategies used by the programme to contribute to the achievement of each outcome challenge. For most outcome challenges, a mixed set of strategies is used because it is believed this has a greater potential for success.

- Organisational Practices - Identify the organisational practices that the programme will use to be effective. These organisational practices describe a well-performing organisation that has the potential to support the boundary partners and sustain change interventions over time.

- Outcome and Performance Monitoring - provides a framework for the ongoing monitoring of the programme's actions and the boundary partners' progress toward the achievement of ‘outcomes’. Monitoring is based largely on self-assessment.

- Monitoring Priorities - Divided into organisational practices, progress toward the outcomes being achieved by boundary partners, and the strategies that the programme is employing to encourage change in its boundary partners.

- Outcome Journals - One for each boundary partner. Each journal includes the progress markers set out in step 5 of Intentional Design; a description of the level of change as low, medium, or high; and a place to record who among the boundary partners exhibited the change.

- Strategy Journal - This journal records data on the strategies being employed, and is filled out during the programme's regular monitoring meetings.

- Performance Journal - Records data on how the programme is operating as an organisation to fulfil its mission. Like the strategy journal, it is filled out during regular monitoring meetings.

- Evaluation Planning - helps the programme identify evaluation priorities and develop an evaluation plan.

- Evaluation Plan - Develop a descriptive plan of a proposed evaluation. This outlines the evaluation issue, the way findings will be used, the questions, sources and methods to be used, the nature of the evaluation team, the proposed dates and the approximate cost. This information is intended to guide the evaluation design.

If Outcome Mapping sounds like a useful tool for your intervention, I would recommend the full book.

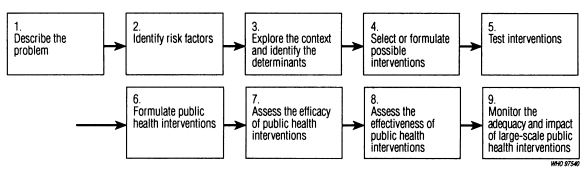

Research steps in the development and evaluation of public health interventions

- Describe the problem - A given problem has to be confirmed to be a public health issue by carrying out basic epidemiological research to describe its nature and magnitude.

- Identify risk factors - Carry out research to identify factors that are associated with the outcome of interest.

- Explore the context and identify the determinants - Describe the behavioural or social processes that lead to the risk factors.

- Select or formulate possible interventions - Development of a solution to the identified problem.

- Test interventions - Studies are conducted to examine the safety, feasibility, acceptability, and efficacy of the intervention at the level of the recipient.

- Formulate public health interventions - Formulate approaches to make this intervention available to those who need it within the context of a public health programme.

- Assess the efficacy of public health interventions - Measure the impact of an intervention that is feasible in public health settings, but which is delivered under ideal conditions for the purposes of the study.

- Assess the effectiveness of public health interventions - Measure the impact of an intervention delivered under normal programme conditions; such studies take into account the vagaries of public health practice, and provide a more realistic statement of potential impact in the "real world".

- Monitor the adequacy and impact of large-scale public health interventions - Monitoring and evaluation of the new or enhanced programme is required to assess its adequacy with respect to progress in implementing programme activities and in achieving predetermined targets in outputs and coverage.

Stage-Specific Methodologies

The methodologies above intend to cover all (or most) of the steps involved in Programme Development. However, there are some methodologies that deep-dive into specific stages within the overall Programme Development (such as the Theory of Change, Monitoring, or Evaluation), which I've covered below.

Theory of Change in Ten Steps

- Situation analysis - The first step is to develop a good understanding of the issue you want to tackle, what you bring to the situation and what might be the best course of action. You might feel that this is revisiting old ground, but we think it’s always useful to take stock. You will definitely have useful conversations. There are three parts to this process:

- ‘Problem’ definition. What, succinctly, is the issue your project is aiming to tackle?

- Take time to think about the ‘problem’ you want to tackle.

- Think about what you bring/offer

- Target groups - Who are the people you can help or influence the most? Based on your situation analysis in Step 1 you can now describe the types of people or institutions you want to work with directly. We refer to these as your target groups.

- Impact - Think about the sustained or long term change you want to see. This is where you describe what the project or campaign hopes to achieve in the long run. Think about what you want the ‘sustained effect on individuals, families, communities, and/or the environment’ to be.

- Outcomes - What shorter-term changes for your target group might contribute to impact? Outcomes happen before impact. In a services context, it’s often good to see them as changes in the strengths, capabilities or assets that you aim to equip your target group with, to achieve the impact you set out in Step 3. Time can be a helpful lens here. If impact is what you want to achieve over years, outcomes are more about weeks and months. You will want and expect to see outcomes changing more quickly.

- Activities - What are you going to do? You can now move on to thinking about your activities. This step is about stating what you are doing or plan to do to encourage the outcomes from Step 4 to happen. You should find this the easiest part of the theory of change.

- Change mechanisms - How will your activities cause the outcomes you want to see? The mechanisms stage is where you describe how you want people to engage with your activities, or experience them, to make outcomes more likely. This can be as simple as stating that people need to listen to your advice to make a change, but it can be more subtle – that they need to feel your advice is relevant to them and believe it is something they can take action on.

- Sequencing - It’s sometimes helpful to think about the order outcomes and impact might occur in, especially when you expect change to take time or happen in stages. Thinking about sequencing requires and demonstrates a deeper level of thinking about how change will occur and what your contribution might be. You might identify gaps in your reasoning or appreciate how some activities are more applicable to different stages.

- Theory of change diagram - It can often help to make a diagram of your theory of change. The two main benefits are:

- The discipline of creating a diagram on one page prompts further reflection. It may help you be more succinct, see new connections, identify gaps in your thinking, and be clearer about the sequence of outcomes.

- A diagrammatic theory of change is a useful communications tool.

- Stakeholders and 'enabling factors' - You have already thought a lot about the external environment in Step 1. Steps 2-8 have been about your own work, which helps you to focus. Now it’s time to think again about how the external environment will affect your aims and plans. Specifically, what you need others to do to support your theory of change and what factors might help or hinder your success.

- Assumptions - ‘Assumptions’ are often talked about as an important part of the theory of change process. Broadly they refer to ‘the thinking that underlies your plans’. We find the concept a bit nebulous though and that it conflates important things. Hence, we have already covered lots of what would normally be included under ‘assumptions’ in Steps 1, 6 and 9. What’s remaining for Step 10 is to identify where your theory of change is weak, untested or uncertain. This is worthwhile because it helps you clarify what the biggest concerns are. It is also the best way to identify your main research questions.

Ten Conditions for Change

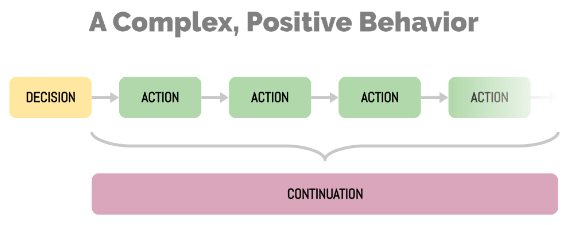

The framework is organized into three main phases: DECISION, ACTION, and CONTINUATION. The ten conditions together make up these three phases.

- Making a Decision to adopt the new behaviour

- Considers changing the behaviour

- Desires changing the behaviour

- Intends to change the behaviour

- Performing a number of Actions that comprise the new behaviour

- Remembers to perform each action

- Believes performing each action will help achieve the goal

- Chooses to perform each action

- Knows how to perform each action

- Has needed resources to perform each action

- Embodies skills and traits needed to perform each action

- Ensuring the Continuation of the relevant conditions for success as time passes

- Maintains attributes required to perform future needed actions

The website lists a huge number of specific strategies for each step in the process, which I'd recommend looking into if you're interested in this framework. They also provide some useful advice for how to apply the framework:

- Choose a Target Population - Select a population or person (it could be yourself!) who you are aiming to benefit via positive behaviour change.

- Consider the Desired Outcomes & Brainstorm Behaviours - Write down the primary positive outcome or benefit that you’re aiming to create for the target population. Then brainstorm a list of many possible new behaviours (or behaviours that are currently only done infrequently) that could lead to that positive outcome or benefit. After you’ve come up with this list of possible target behaviours, for each one consider:

- how much benefit it would create for the target population if they were to engage in this behaviour reliably, and

- how difficult it would be to create that behaviour.

- Select the Behaviour - Of the list of concrete, positive behaviours you brainstormed, pick the one that you believe has the best ratio of positive outcomes for your target population relative to its difficulty of implementation.

- Consider the Decision Conditions - Now we are going to apply the Decision phase of the Ten Conditions for Change framework to the behaviour that you selected. For each of the three conditions for change in the Decision phase, ask yourself:

- To what extent is this condition met by this person?

- What percentage of people in this population meet this condition?

- Break the Behaviour into Action Sequences - Then, break the behaviour down into a series of actions that a person can perform to fully succeed at the behaviour. Note that most complex behaviours will require a number of different actions to be performed. Furthermore, there might be multiple sequences of actions that could lead a person to succeed at this behaviour, so you’ll want to choose the sequence of actions that is easiest to implement and that is still sufficient for engaging in the desired behaviour. Ask yourself:

- Of all of the sequences of actions that could produce this behaviour, which one makes the most sense in this situation?

- Which one is easiest to carry out?

- Consider the Action Conditions - Once you have settled on a sequence of actions that constitute the positive behaviour, then you’ll want to think about those actions individually. For each distinct type of action, consider each of the six conditions for change in the Action phase, and ask yourself:

- To what extent is this condition met by this person?

- What percentage of people in this population meet this condition?

- Consider the Continuation Condition - Then, review the condition for change in the Continuation phase, and ask yourself:

- Is it likely that the conditions in the Decision and Action phases will shift for the worse as time passes or as some of the actions are completed?

- If so, when, where, and how will the process break down in the future?

- Choose Strategies - As you review all of the conditions, keep in mind that some will often already be met automatically and so require no work, whereas others will only be met if appropriate interventions are used. The conditions for change that you think are not already well met provide hypotheses about how to increase the chance that the behaviour will change. For each condition that is not well met, consider the provided example strategies for improving that condition, or brainstorm your own. Then judge which strategies seem most promising for the intended behaviour change in the intended population, given each strategy’s likelihood of success and difficulty of implementation. Now, ask yourself:

- Given all of the conditions for change that are not well met and all of the relevant strategies for meeting that condition, which would be the most effective for me to work on?

- Which do I predict will provide the biggest expected improvements in behaviour change relative to their cost, effort, or difficulty of implementation?
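The last two questions amount to a rough expected-value-per-cost ranking. Below is a minimal Python sketch, assuming you have already made subjective estimates of the share of the population meeting each condition, and, for each candidate strategy, how much it would raise that share, its chance of success, and its relative cost. The condition names, strategy names, the 0.7 "well met" cut-off, and every number are hypothetical placeholders, not part of the Ten Conditions for Change framework itself:

```python
from dataclasses import dataclass

# Hypothetical estimates of the share of the population meeting each condition.
condition_coverage = {
    "condition_1": 0.9,
    "condition_2": 0.4,
    "condition_3": 0.55,
}

WELL_MET_THRESHOLD = 0.7  # assumption: at or above this, a condition counts as "well met"


@dataclass
class Strategy:
    name: str
    condition: str      # which condition for change the strategy targets
    improvement: float  # estimated rise in the share meeting the condition, if it works
    p_success: float    # estimated likelihood that the strategy works
    cost: float         # relative cost/effort/difficulty (must be > 0)

    def expected_value_per_cost(self) -> float:
        # Expected improvement (improvement x chance of success) per unit of cost.
        return self.improvement * self.p_success / self.cost


def rank_strategies(strategies, coverage, threshold=WELL_MET_THRESHOLD):
    """Rank only the strategies that target conditions not yet well met."""
    unmet = {c for c, share in coverage.items() if share < threshold}
    candidates = [s for s in strategies if s.condition in unmet]
    return sorted(candidates, key=Strategy.expected_value_per_cost, reverse=True)


strategies = [
    Strategy("Strategy W", "condition_1", improvement=0.05, p_success=0.9, cost=1),
    Strategy("Strategy X", "condition_2", improvement=0.30, p_success=0.5, cost=3),
    Strategy("Strategy Y", "condition_2", improvement=0.20, p_success=0.8, cost=2),
    Strategy("Strategy Z", "condition_3", improvement=0.25, p_success=0.6, cost=5),
]

for s in rank_strategies(strategies, condition_coverage):
    print(f"{s.name}: {s.expected_value_per_cost():.3f}")
# Strategy Y: 0.080
# Strategy X: 0.050
# Strategy Z: 0.030
```

Strategy W is dropped because condition_1 is already well met, even though it is cheap. The same benefit-over-difficulty ranking (without the probability term) could equally be applied at the Select the Behaviour step. The output is only as good as the judgements fed into it, so it works best as a prompt for discussion.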

The M&E Universe

The M&E Universe is a free, online resource developed by INTRAC to support development practitioners involved in monitoring and evaluation (M&E). The M&E Universe is divided into nine discrete areas of interest:

- Planning M&E: Planning is at the heart of the universe and provides an entry point for those who are relatively new to the world of M&E.

- Data Collection: This section covers basic data collection methods such as interviews, focus group discussions, observation, as well as related issues such as sampling and the use of ICT in data collection.

- Complex Methods of Data Collection and Analysis: More complex methods used to collect and analyse data, such as contribution analysis, most significant change, experimental methods, organisational assessment tools, qualitative comparative analysis and others.

- Data Analysis: Methods and approaches for analysing M&E data, including both quantitative and qualitative analysis.

- Data Use: This section deals with the different uses of M&E information, including learning, accountability, management and communications, and advice on how these uses affect the design of M&E systems.

- M&E Functions: This section covers monitoring, evaluation, reviews, impact assessments and research, and explains how these interrelate; the evaluation section contains sub-sections on the different kinds of evaluations commonly in use.

- M&E Systems: Advice and information on designing and developing M&E systems, including the practicalities of basic M&E systems as well as developing M&E systems for more complex situations.

- M&E Debates: Brief introductions to some of the main debates surrounding M&E, both past and present.

- M&E of Development Approaches: Advice on planning, monitoring and evaluating different programming approaches such as advocacy, capacity building and mobilisation.

The CART Principles

The CART Principles are discussed in detail in the book “The Goldilocks Challenge”. I found the book extremely useful in the context of Monitoring, Learning, and Evaluation. This section has sub-sections and functions as a sort of book summary.

The CART Principles refer to data that is:

- Credible: Collect high-quality data and analyse the data correctly

- Is the data valid? (does it capture the actual essence of what we’re seeking to measure, and not a poor proxy for it?)

- Is the data reliable? (is it possible to gather this data in a way that reduces randomness?)

- Is the data unbiased? (Are we able to reduce bias in the data, particularly in the case of data that can only be gathered through surveys?)

- Can we appropriately analyse this data? (Can we measure this data against a counterfactual, i.e. do we have a control group?)

- Actionable: Commit to act on the data you collect

- Is there a specific action that we will take based on the findings?

- Do we have the resources necessary to implement that action?

- Do we have the commitment required to take that action?

- Responsible: Ensure the benefits of data collection outweigh the costs

- Is there a cheaper or more efficient method of data collection that does not compromise quality? (e.g. can we take certain data points less frequently without compromising information value? Can one data point stand as a reliable proxy for others?)

- Is the total amount of spending on data collection justified, given the information it will provide, when compared to the amount spent on other areas of the organisation? (e.g. administrative and programmatic costs)

- Does the information to be gained justify taking a beneficiary’s time to answer?

- Is the added knowledge worth the cost? (i.e. how much do we already know about the impact of the program from prior studies, and thus how much more do we expect to learn from a new impact evaluation?)

- Transportable: Collect data that generate knowledge for other programs

- Will future decisions, either by this organisation, by other organisations, or by donors, be influenced by the results of this study?

- Will this data be relevant to the design of new interventions, if required?

- Will this data support the scale-up of interventions that work?

- Does this data help us answer questions about our Programme Theory? (allowing other organisations to judge whether the program might work in their context)

- Would collecting this data also be useful in other contexts? (i.e. could we replicate this somewhere else? If successful, that would bolster the relevance of this intervention across multiple contexts.)

Data Collection

A first consideration is whether to use Primary data (i.e. data we collect ourselves), Secondary data (i.e. data that already exists and that we reuse), or a mix. There are some obvious trade-offs:

- Primary data - gives you more organisational control over the data being collected and enables it to be highly targeted; however, it has a high cost (it requires time to develop and test collection methods, train staff, and ensure data quality).

- Secondary data - often much cheaper, but far less targeted, often unavailable in the context you’re working in, and the data will still need to be validated for accuracy.

Next, you’ll need to consider concepts of data Validity:

- Construct Validity - the extent to which an indicator captures the essence of the concept you are seeking to measure - the output or the outcome

- Concept - refers to defining exactly what the organisation wants to measure (i.e. Activities, Outputs, and Outcomes), clearly and precisely.

- Indicator - refers to the metric that will measure the Concept

- Criterion Validity - the extent to which an indicator is a good predictor of an outcome, whether contemporaneous or in the future

Then, you'll want to consider data Reliability: whether the data is generated consistently. In other words, the data collection procedure should not introduce randomness or bias into the process:

- Bias (in the context of data collection) - The systematic difference between how the average respondent answers a question and the true answer to that question. Some common forms of measurement bias are:

- Mere measurement effect - people changing their behaviour because they were asked a question

- Anchoring - a person’s answer is influenced by another number or concept

- Social desirability bias - a person’s response to a question is influenced by wanting the answer to be the socially desirable answer

- Experimenter demand effect - people answer in ways intended to please the experimenter

- Error (in the context of data collection) - Data issues caused by noisy, random, or imprecise measurement of data. Some common forms of measurement error are:

- Wording of questions - lack of precision and clarity in questions leading to different responses and unreliable data

- Recall problems - the recall period is too long for the context - also known as the “look-back period”. You can often consult the literature for appropriate look-back periods in different contexts.

- Translation issues - poor or inconsistent translation that fails to account for cultural contexts
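The difference between bias and error can be made concrete with a small simulation: bias shifts the average response away from the true answer, while error adds noise that makes individual answers unreliable but largely averages out across many respondents. Below is a minimal Python sketch with made-up numbers (a hypothetical true value of 10, a +2 over-reporting shift standing in for something like social desirability bias, and Gaussian noise standing in for imprecise wording or recall problems):

```python
import random
from statistics import mean, stdev

random.seed(42)  # fixed seed so the simulation is reproducible

TRUE_VALUE = 10.0  # hypothetical true answer
N = 10_000         # hypothetical number of respondents

# Biased measurement: everyone over-reports by 2, with only a little noise.
biased = [TRUE_VALUE + 2.0 + random.gauss(0, 0.5) for _ in range(N)]

# Noisy measurement: no systematic shift, but imprecise individual answers.
noisy = [TRUE_VALUE + random.gauss(0, 3.0) for _ in range(N)]

for label, responses in [("biased", biased), ("noisy", noisy)]:
    bias = mean(responses) - TRUE_VALUE  # systematic difference from the truth
    spread = stdev(responses)            # random variation between responses
    print(f"{label}: bias = {bias:+.2f}, spread = {spread:.2f}")
```

Collecting more responses shrinks the effect of error on the average, but does nothing to remove bias, which is why bias usually has to be tackled through how questions are asked rather than through sample size.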

Next we can move on to defining our Indicators. Indicators should be (which should be easy enough to remember with the snappy acronym SSTFF…):

- Specific - enough to measure the Concept you’re targeting (and only that Concept).

- Sensitive - to the changes it is designed to measure, so that the range of responses is calibrated to the likely range of answers.

- Time-Bound - relating to a specific time-period with a defined frequency

- Feasible - within the capacity of the organisation to measure (and the respondents to answer)

- Frequency-calibrated - measured at a frequency appropriate to the data being captured.

Finally, it’s worth considering some Logistics, such as:

- Data collection instruments - It often makes sense to pretest instruments both in an office and in the field.

- Survey setup - Ensuring there is a detailed data collection plan, that documents are translated appropriately, that data collection staff are trained, and that there is good oversight in place (e.g. accompanying data collectors, spot-checks, and audits).

- Personnel - Ensuring staff incentives don’t unintentionally skew the data, and that program staff understand the importance of handling the data and how to do so.

- Data entry - The approach depends on whether data is collected on paper or digitally, but it should embed concepts like logical checks (not allowing a response that doesn’t make sense) and double entry (having two people independently enter paper data electronically, with any discrepancies then flagged electronically).
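Double entry and logical checks are straightforward to automate once both transcriptions are digital. Below is a minimal Python sketch, assuming each paper form has a unique ID and has been typed in independently by two people. The form IDs, field names, and the 18-90 age range used in the logical check are all hypothetical:

```python
# Two independent transcriptions of the same paper forms, keyed by form ID.
entry_a = {
    "F001": {"age": 34, "household_size": 5},
    "F002": {"age": 29, "household_size": 3},
    "F003": {"age": 41, "household_size": 4},
}
entry_b = {
    "F001": {"age": 34, "household_size": 5},
    "F002": {"age": 92, "household_size": 3},  # transposed digits in one transcription
    "F003": {"age": 41, "household_size": 4},
}


def flag_discrepancies(a, b):
    """Return (form_id, field, value_a, value_b) wherever the two entries disagree."""
    flags = []
    for form_id in sorted(a.keys() | b.keys()):
        rec_a, rec_b = a.get(form_id, {}), b.get(form_id, {})
        for field in sorted(rec_a.keys() | rec_b.keys()):
            if rec_a.get(field) != rec_b.get(field):
                flags.append((form_id, field, rec_a.get(field), rec_b.get(field)))
    return flags


def logical_check(record, min_age=18, max_age=90):
    """Flag values that don't make sense (the age range here is hypothetical)."""
    problems = []
    if not (min_age <= record["age"] <= max_age):
        problems.append(f"age {record['age']} outside {min_age}-{max_age}")
    if record["household_size"] < 1:
        problems.append("household_size must be at least 1")
    return problems


print(flag_discrepancies(entry_a, entry_b))  # [('F002', 'age', 29, 92)]
print(logical_check(entry_b["F002"]))        # ['age 92 outside 18-90']
```

A flagged discrepancy would then be resolved by going back to the original paper form. With digital collection, the same kind of logical check is usually built into the form itself, so an impossible answer can't be entered in the first place.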

Actionable Data Systems

Monitoring system(s) should a) demonstrate accountability and transparency (that the program is on track and delivering outputs), and b) provide actionable information for learning and improvement (for decision-makers).

In practice, this likely means collecting five types of data:

- Financial - Costs (staff, equipment, transport etc.) and revenues (from donors, or a contract, or income if a social enterprise). Often this data is collected anyway as a result of external accountability requirements; however, it can also be organised to support learning, such as tracking the cost-per-output of programs.

- Activity Tracking - Key activities (and outputs) identified by the Theory of Change. As with financial data, this is typically collected anyway for external accountability, but it can often be made more actionable:

- By disaggregating activities across programs/sites

- By connecting activity data to financial data

- By regularly reviewing the data to make decisions. High-performing areas can then be learned from, and the learnings transferred to others (internal programs, or other organisations)

- Targeting - Tracking whether target Populations are being reached, so that corrective action can be taken in a timely manner if not. This data likely falls within the Outcomes or Impact stages of your Theory of Change.

- Engagement - The Extensive Margin (the size of the program as identified by participation) and the Intensive Margin (how intensely participants engage). Essentially, once activities are in place and running smoothly, is the program working from a participant perspective?

- Feedback - From participants, which can often shed light on other data, such as Engagement. Feedback data can also come from program staff, and can additionally be gathered through A/B Testing of an ongoing program.
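Connecting activity data to financial data, disaggregating by site, and measuring both engagement margins can be as simple as a few divisions over the same records. Below is a minimal Python sketch with hypothetical sites and figures; "sessions delivered" stands in for whatever output your Theory of Change identifies:

```python
# Hypothetical monthly data for two sites.
costs = {"Site A": 1200.0, "Site B": 900.0}  # financial data: staff, equipment, transport
outputs = {"Site A": 6, "Site B": 3}         # activity data: e.g. sessions delivered
eligible = {"Site A": 20, "Site B": 10}      # size of the target population at each site
attendance = {                               # sessions attended by each participant
    "Site A": [3, 5, 2, 4, 1, 5, 4],
    "Site B": [1, 1, 2],
}


def site_summary(site: str) -> dict:
    """Combine financial, activity, and engagement data for one site."""
    participants = len(attendance[site])
    return {
        "cost_per_output": costs[site] / outputs[site],
        # Extensive margin: how many people take part at all.
        "participants": participants,
        "participation_rate": participants / eligible[site],
        # Intensive margin: how much each participant engages, on average.
        "avg_sessions": sum(attendance[site]) / participants,
    }


for site in costs:
    s = site_summary(site)
    print(f"{site}: cost/output = {s['cost_per_output']:.2f}, "
          f"participants = {s['participants']} ({s['participation_rate']:.0%} of eligible), "
          f"avg sessions = {s['avg_sessions']:.1f}")
```

In this made-up example, Site A delivers sessions more cheaply, reaches a larger share of its eligible population, and sees higher average attendance. That is the kind of pattern the regular review step is meant to surface, prompting a look at what Site A is doing differently.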

All this data, then, needs to be collated into a system that enables sharing of the data and insights, both for accountability purposes (such as with donors, or for the Impact page of your website) and with internal decision-makers. Additionally, the system should facilitate the timely use of the data; the data shouldn’t “disappear into a black hole”. Your actionable data system, then, should do three things:

- Collect the right data - Following the CART Principles, your data should be:

- Credible - Collect high-quality data and analyse the data correctly

- Actionable - Commit to act on the data you collect

- Responsible - Benefits of data collection outweigh the costs

- Transportable - Generates knowledge for other programs

- Produce useful data in a timely fashion - This means meeting the differing needs for insights across different parts of the organisation.

- The senior team may need aggregated data related to strategic priorities.

- The Program staff may need disaggregated data to assess program performance.

- There will likely also be external requirements for the data (such as reporting to donors).

- The data should therefore be accessible and analysable in multiple ways, and your system should be able to facilitate this.

- Create organisational capacity and commitment - Finally, data should be shared internally, staff should be responsible for reviewing and reporting on it, and there should be a culture of learning.

- Shareable data could be as fancy as a nice digital dashboard, but it also could be as simple as a chalkboard.

- Reviewing and reporting on data can be built into regular meeting agendas.

- A culture of learning should be built, with staff being able to understand, explain and respond to data - with an emphasis on learning and improvement, not mistakes.

Applied Information Economics

The concept of Applied Information Economics (AIE) can be summarised as a philosophy in the following six points (pg 267 of the "How to Measure Anything" book):

- If it’s really that important, it’s something you can define. If it’s something you think exists at all, then it’s something that you’ve already observed somehow.

- If it’s something important and something uncertain, then you have a cost of being wrong and a chance of being wrong.

- You can quantify your current uncertainty with calibrated estimates.

- You can compute the value of additional information by knowing the “threshold” of the measurement where it begins to make a difference compared to your existing uncertainty.

- Once you know what it’s worth to measure something, you can put the measurement effort in context and decide on the effort it should take.

- Knowing just a few methods for random sampling, controlled experiments, or even just improving on the judgement of experts can lead to a significant reduction in uncertainty.
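The third and fourth points can be illustrated with a small calculation. One approach described in the book is to turn a calibrated 90% confidence interval into a normal distribution (the interval spans roughly 3.29 standard deviations), and the value of information then comes from how often, and by how much, your current best decision would turn out to be wrong. Below is a minimal Python sketch of the expected value of perfect information (EVPI) under a deliberately simple model (normal belief, linear loss past a threshold). All inputs are hypothetical: a programme is worth scaling only if its benefit per dollar beats a threshold of 1.0 (standing in for the next-best use of funds), the calibrated 90% interval for that benefit is 0.6 to 1.8, and $100,000 is at stake:

```python
from statistics import NormalDist, fmean


def normal_from_90ci(lower: float, upper: float) -> NormalDist:
    """Turn a calibrated 90% confidence interval into a normal distribution.

    A 90% interval spans about +/-1.645 standard deviations, i.e. 3.29 sd in total.
    """
    return NormalDist(mu=(lower + upper) / 2, sigma=(upper - lower) / 3.29)


def evpi(belief: NormalDist, threshold: float, stake: float,
         n: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the expected value of perfect information.

    Decision rule: go ahead if the expected benefit exceeds the threshold.
    Opportunity loss = stake * (distance past the threshold) in the draws
    where that decision turns out to be wrong; EVPI is the average loss.
    """
    go = belief.mean > threshold
    draws = belief.samples(n, seed=seed)
    if go:
        losses = [stake * max(0.0, threshold - b) for b in draws]
    else:
        losses = [stake * max(0.0, b - threshold) for b in draws]
    return fmean(losses)


# Hypothetical inputs: calibrated 90% CI for benefit per dollar, the threshold
# (benefit per dollar of the next-best use of funds), and $100k at stake.
belief = normal_from_90ci(0.6, 1.8)
value = evpi(belief, threshold=1.0, stake=100_000)
print(f"Expected value of perfect information: ${value:,.0f}")
```

With these inputs the EVPI comes out at roughly $6,700. That is an upper bound on what any measurement of this benefit could be worth, so following the fifth point, a study costing far more than that would be hard to justify.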

In practice, “measuring anything” can be broken down into 5 steps:

- Start by decomposing the problem - “Decompose the measurement so that it can be estimated from other measurements. Some of these elements may be easier to measure and sometimes the decomposition itself will have reduced uncertainty.”

- Ask: is it business-critical to measure this? - Does the importance of the knowledge outweigh the difficulty of the measurement?

- Estimate what you think you’ll measure - Make estimates about what you think will happen, and calibrate those estimates to understand just how uncertain you are about outcomes.

- Measure just enough, not a lot - The Rule of Five: "There is a 93.75% chance that the median of a population is between the smallest and largest values in any random sample of five from that population." Knowing the median value of a population can go a long way in reducing uncertainty.

- Do something with what you’ve learned - After you perform measurements or do some data analysis and reduce your uncertainty, then it’s time to do something with what you’ve learned.
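The 93.75% figure in the Rule of Five follows from a short probability argument: the median lies outside a sample's range only if all five values land on the same side of it. Each value has a 1/2 chance of being above the median, so all five are above with probability (1/2)^5 = 1/32, and likewise all below, giving 1 - 2/32 = 0.9375. Below is a minimal Python simulation that checks this, using a hypothetical skewed population (e.g. minutes spent on a task) to show that the result doesn't depend on the distribution's shape:

```python
import random
from statistics import median

analytic = 1 - 2 * 0.5 ** 5  # = 0.9375


def rule_of_five_hit_rate(population, trials=20_000, seed=0):
    """Fraction of random samples of five whose min-max range contains the population median."""
    rng = random.Random(seed)
    true_median = median(population)
    hits = 0
    for _ in range(trials):
        sample = rng.sample(population, 5)
        if min(sample) <= true_median <= max(sample):
            hits += 1
    return hits / trials


# Hypothetical, heavily skewed population: exponential with a mean of 30.
pop_rng = random.Random(123)
population = [pop_rng.expovariate(1 / 30) for _ in range(100_001)]

print(f"analytic: {analytic:.4f}, simulated: {rule_of_five_hit_rate(population):.4f}")
```

The rule assumes the five values are a genuinely random sample. It also only tells you how often the range contains the median, not how narrow that range will be.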

This section is essentially a summary of the book “How to Measure Anything”. Most of the above is taken from this summary here, and there is a more detailed summary available here.

Network on Development Evaluation (EvalNet)

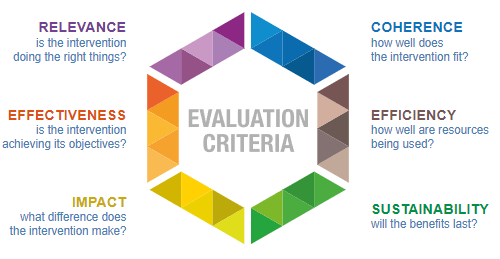

The EvalNet methodology uses six criteria, with two principles for their use.

The two principles are:

- The criteria should be applied thoughtfully to support high-quality, useful evaluation - They should be contextualised, that is, understood in the context of the individual evaluation, the intervention being evaluated, and the stakeholders involved. The evaluation questions (what you are trying to find out) and what you intend to do with the answers should inform how the criteria are specifically interpreted and analysed.

- Context: What is the context of the intervention itself and how can the criteria be understood in the context of the individual evaluation, the intervention and the stakeholders?

- Purpose: What is the evaluation trying to achieve and what questions are most useful in pursuing and fulfilling this purpose?

- Roles and power dynamics: Who are the stakeholders, what are their respective needs and interests? What are the power dynamics between them? Who needs to be involved in deciding which criteria to apply and how to understand them in the local context? This could include questions about ownership and who decides what is evaluated and prioritised.

- Intervention: What type of intervention is being evaluated (a project, policy, strategy, sector)? What is its scope and nature? How direct or indirect are its expected results? To what extent is complex “systems thinking” at play?

- Evaluability: Are there any constraints in terms of access, resources and data (including disaggregated data) impacting the evaluation, and how does this affect the criteria?

- Timing: At which stage of the intervention’s lifecycle will the evaluation be conducted? Has the context in which the intervention is operating changed over time and if so, how? Should these changes be considered during the evaluation? The timing will influence the use of the criteria as well as the source of evidence.

- The use of the criteria depends on the purpose of the evaluation. The criteria should not be applied mechanistically - Instead, they should be covered according to the needs of the relevant stakeholders and the context of the evaluation. More or less time and resources may be devoted to the evaluative analysis for each criterion depending on the evaluation purpose. Data availability, resource constraints, timing, and methodological considerations may also influence how (and whether) a particular criterion is covered.

- What is the demand for an evaluation, who is the target audience and how will they use the findings?

- What is feasible given the characteristics and context of the intervention?

- What degree of certainty is needed when answering the key questions?

- When is the information needed?

- What is already known about the intervention and its results? Who has this knowledge and how are they using it?

The six evaluation criteria are:

- Relevance - Is the intervention doing the right things? (The extent to which the intervention objectives and design respond to beneficiaries’, global, country, and partner/institution needs, policies, and priorities, and continue to do so if circumstances change)

- Coherence - How well does the intervention fit? (The compatibility of the intervention with other interventions in a country, sector or institution)

- Effectiveness - Is the intervention achieving its objectives? (The extent to which the intervention achieved, or is expected to achieve, its objectives, and its results, including any differential results across groups)

- Efficiency - How well are resources being used? (The extent to which the intervention delivers, or is likely to deliver, results in an economic and timely way)

- Impact - What difference does the intervention make? (The extent to which the intervention has generated or is expected to generate significant positive or negative, intended or unintended, higher-level effects)

- Sustainability - Will the benefits last? (The extent to which the net benefits of the intervention continue, or are likely to continue)

Choosing which of the Six Criteria to use:

- If we could only ask one question about this intervention, what would it be?

- Which questions are best addressed through an evaluation and which might be addressed through other means (such as a research project, evidence synthesis, monitoring exercise or facilitated learning process)?

- Are the available data sufficient to provide a satisfying answer to this question? If not, will better or more data be available later?

- Who has provided input to the list of questions? Are there any important perspectives missing?

- Do we have sufficient time and resources to adequately address all of the criteria of interest, or will focusing the analysis on just some of the criteria provide more valuable information?

[If the EvalNet criteria are useful to you, I'd recommend the OECD book, available online]