Introduction

This submission will argue that moral weights–GiveWell’s current approach to comparing different good outcomes–are almost certainly flawed in measurement, and potentially flawed in concept entirely. But (at least) the former is well-known, and acknowledged often by GiveWell researchers. More concerningly, the body of publicly released research from GiveWell displays a lack of imagination surrounding how the moral weights approach can be improved to better track the effect of different outcomes on the balance of happiness in the world.

The recommendation we are most sure of is that GiveWell should hire (perhaps on a part-time or project basis) one or more philosophers specializing in the kinds of questions the moral weights approach attempts to answer. We argue for this recommendation by outlining the doubts we have about the validity of the current approach, especially those that hit on deeper philosophical questions which we believe generalists are ill-placed to adequately answer.

Our submission is notably light on actual philosophical argument; we may come across as being satisfied with merely gesturing at big problems without expressing confidence in any particular solution. This is the point–as undergraduates in philosophy (and economics), it would be irresponsible to claim definitive answers to the gnarly questions. Our hope is that this submission encourages GiveWell to invest resources into providing satisfactory answers to such questions.

1. Why is this an area of priority to improve?

In short, differences in moral weights might significantly alter GiveWell’s cost-effectiveness ranking of giving opportunities. Charities avert deaths and increase logged consumption (to take two outcomes to which moral weights are applied) in different proportions to one another. Although a specific quantitative estimate of the value of additional information on these parameters is outside the scope of this submission (but see a recent EA forum post by Sam Nolan, Hannah Rokebrand, and Tanae Rao), it is plausibly valuable enough to warrant implementing some or all of our recommendations, given that the moral weight parameters appear in every GiveWell CEA. Beyond their use in existing CEAs, updates to GiveWell’s moral weights might also change the organization’s priorities for future research, incubation grants, etc.
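To make the ranking claim concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the two charities, their outcomes per $100,000, and the moral weight values are invented for illustration and are not GiveWell's figures or GiveWell's CEA structure. It only shows the mechanism, namely that when two charities produce outcomes in different proportions, a modest change in one moral weight can reverse their order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Charity:
    """A hypothetical charity; the numbers are illustrative, not real data."""
    name: str
    deaths_averted: float         # deaths averted per $100,000 (assumed)
    consumption_doublings: float  # person-years of doubled consumption per $100,000 (assumed)


def value_per_100k(charity: Charity, weight_death: float, weight_doubling: float = 1.0) -> float:
    """Total moral value per $100,000: each outcome times its moral weight."""
    return (charity.deaths_averted * weight_death
            + charity.consumption_doublings * weight_doubling)


def rank(charities: list[Charity], weight_death: float) -> list[str]:
    """Charity names ordered from most to least cost-effective."""
    ordered = sorted(charities, key=lambda c: value_per_100k(c, weight_death), reverse=True)
    return [c.name for c in ordered]


# Two invented charities producing the same outcomes in different proportions.
CHARITIES = [
    Charity("Charity A (mostly averts deaths)", deaths_averted=20.0, consumption_doublings=100.0),
    Charity("Charity B (mostly raises consumption)", deaths_averted=2.0, consumption_doublings=2000.0),
]

if __name__ == "__main__":
    # Moral weight of averting a death, in units of "one doubling of consumption".
    for weight_death in (100.0, 120.0):
        print(weight_death, rank(CHARITIES, weight_death))
```

With these invented inputs, Charity B ranks first when averting a death is weighted at 100 doublings of consumption, and Charity A ranks first at 120. A 20% change in a single parameter is enough to flip the order, which is the kind of sensitivity this section is concerned with.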

GiveWell seems to pre-empt this view in a 2017 post on their website, in which they state (emphasis ours):

Our initial analysis suggests that using relatively "standard" moral weight assumptions (i.e., the assumptions in the previous two bullet points) instead of our staff's moral weights would not change our overall view of the relative cost-effectiveness of our current top charities. It may affect how we view some interventions in the future, particularly those that disproportionately focus on averting deaths for young children or adults.

The problem with this line of argumentation (if used to refute us) is that GiveWell is not in the business of using standard practice as a yardstick against which its own performance is to be graded. The relevant counterfactual to using current moral weights is not using “standard” assumptions, but rather investing in radically improving the moral weights approach.

For example, if the doubts expressed in section 2(c) of this submission lead GiveWell to scrap or give much lower consideration to preference surveys in calculating its moral weights, it is plausible that whatever replaces the surveys currently used will yield parameter values that differ from the current ones by more than 10%. If GiveWell hires a practical ethicist to do the kind of work we specify in section 3, the magnitude of the changes (if any at all) to the moral weights approach is difficult to predict; they could be minimal, or be large enough that it no longer makes sense to even call the parameters ‘moral weights’.

Furthermore, our uneducated guess is that this area of research is neglected as compared with GiveWell’s examination of other parameters in its CEA. For example, we did not come across any public announcements about GiveWell hiring or collaborating with trained moral philosophers to review its methodology in determining moral weights. If we are right about this, this might be on par with its failure to prioritize hiring an economist in terms of the mistake's effect on the accuracy of GiveWell’s recommendations to its donors.

2. What is wrong with GiveWell’s current approach?

We assume our readers are familiar with GiveWell’s current approach to moral weights, as the primary intended audience of this submission is GiveWell researchers. For a primer on the moral weights approach, we found Olivia Larsen’s lightning talk (text version here) useful.

(a) The IDinsight 2019 survey measures the preferences of beneficiaries inaccurately.

This section will be brief. We do not have anything novel to add to the list of existing flaws acknowledged by GiveWell and other researchers, but felt that the submission would be incomplete without a restatement of key issues in the most well-known study on this topic.

Some of the problems include:

- Participants displayed a poor understanding of low probabilities, which may have systematically skewed their responses in such a way as to not reflect their genuine preferences.

- The presence of social desirability and related biases, skewing participants in favor of prioritizing averting death over increasing logged consumption.

- Large differences in elicited VSL depending on how questions were phrased, followed by a simple averaging of elicited VSLs which differed by >100%.
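The third problem above can be illustrated with a short arithmetic sketch. The two VSL figures below are hypothetical placeholders, not values from the IDinsight study; they are chosen only so that the two framings differ by more than 100%, as described above. The point is that a simple average lands far from both framings, so the pooled figure represents neither set of responses well.

```python
from statistics import mean

# Hypothetical elicited VSLs (in USD) under two question framings.
# These are illustrative placeholders, not figures from the survey.
VSL_BY_FRAMING = {"framing_1": 40_000.0, "framing_2": 100_000.0}


def relative_gap(low: float, high: float) -> float:
    """How much larger `high` is than `low`, as a fraction of `low`."""
    return (high - low) / low


def summarize(vsl_by_framing: dict[str, float]) -> dict[str, float]:
    """Simple average of the framings, plus how far it sits from each extreme."""
    values = list(vsl_by_framing.values())
    low, high = min(values), max(values)
    average = mean(values)
    return {
        "simple_average": average,
        "gap_between_framings": relative_gap(low, high),  # 1.5 means 150% larger
        "average_above_low": average / low - 1.0,         # fraction above the lower framing
        "average_below_high": 1.0 - average / high,       # fraction below the higher framing
    }


if __name__ == "__main__":
    for key, value in summarize(VSL_BY_FRAMING).items():
        print(f"{key}: {value:,.2f}")
```

With these placeholder inputs, the framings differ by 150%, and the simple average sits 75% above the lower framing and 30% below the higher one. Any moral weight derived from the average inherits that spread as unacknowledged uncertainty.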

(b) GiveWell donor surveys have an unclear methodology, and are weighted very heavily.

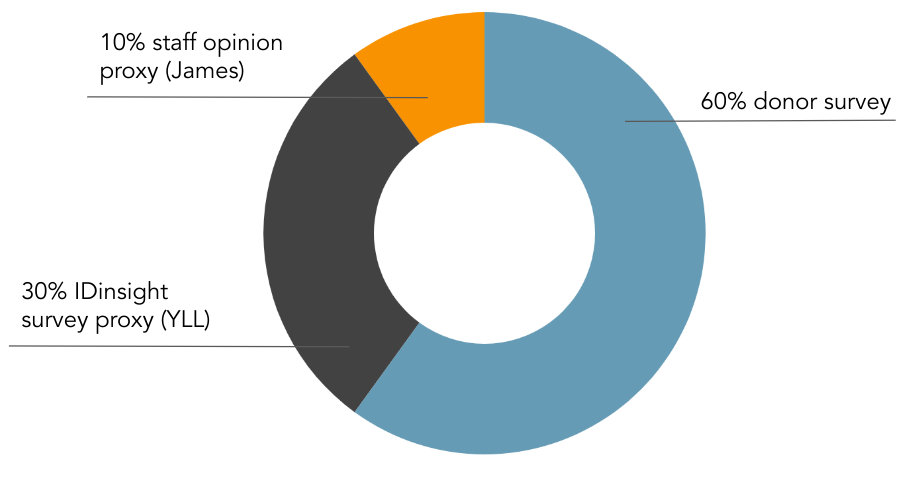

From a presentation by GiveWell researcher Olivia Larsen at the EA Global San Francisco 2022 conference (text version here), the components of GiveWell’s current moral weights are:

As far as we can discern, the methodology of GiveWell’s donor survey–which constitutes a majority of its moral weights–is not easily accessible to the general public.[1] GiveWell should publish this, or link it more prominently on its website if already released.

Although GiveWell donors may indeed have thought about these questions extensively, it is unclear that they are better positioned to answer them than the beneficiaries themselves. From the outside, it can look as if the views of a group selected for having similar views to GiveWell researchers (i.e., donors, who might have donated elsewhere if they had substantial disagreements with GiveWell staff) were prioritized in part because GiveWell researchers thought that their views were more plausible than those of beneficiaries.

We are fairly confident that the preceding paragraph oversimplifies the actual situation, but GiveWell should communicate more details about the donor survey to challenge this perception, and open themselves up to more specific criticism.

As it stands, we (weakly) hold that some of the same problems present in the IDinsight 2019 survey (and more) appear in the donor survey. Specifically, most of us are bad at considering small probabilities, the lack of lived experience in the same situation as the average beneficiary of a GiveWell top charity makes responses significantly less reliable, and questions about abstract scenarios are not well-placed to capture genuine moral intuitions.

(c) GiveWell’s published material lacks a (sorely needed) defense of preferences as a reliable measurement of moral weights.

In this section, we argue that using people’s preferences to measure moral weights is far from obviously a reliable method. It follows that GiveWell should release a more detailed defense of this method than is currently available, and/or acknowledge massive uncertainties in relying on preferences.

Even if beneficiary preferences could be more accurately elicited (e.g., by fixing some of the issues in the IDinsight 2019 survey), it is unclear precisely what role such data ought to play in determining GiveWell’s moral weight parameters. Roughly, there are two reasons why beneficiary preferences might be important:

(1) Maximizing beneficiary preferences is our end goal.

(2) Beneficiary preferences give us useful information to estimate some other value, such as the amount of happiness preserved / generated by averting a death, increasing consumption, etc.

(1) is a departure from a hedonistic theory of the good, towards a position resembling preference consequentialism. Without presuming too much about the average GiveWell donor, it is at least plausible that maximizing beneficiary preferences is not their intended goal. When asked why they donate to GiveWell, we would not be surprised if most donors answered that they want to improve happiness, wellbeing, or something similar in the most cost-effective way. To be clear, we are not dismissing (1) entirely, but merely pointing out that it is far from an obvious stance.[2]

We expect that (2) is much more intuitive to most people. When trying to best improve the happiness or wellbeing of a group of people, it is natural to ask members of that group how this could best be achieved, in order to develop more accurate empirical beliefs. However, on closer examination, beneficiary preferences may not be fit for this purpose. For example, qualitative findings from the 2019 IDinsight survey suggest that many participant responses were influenced by religious or spiritual beliefs that the value of life is absolute. The view that averting death is discontinuously valuable as compared with increasing household consumption may be a genuine preference. But the fact that religion or spirituality (which are perhaps not proper epistemic grounds) played a significant role in the formation of said beliefs should give us pause in integrating them into our moral weights.

More generally, we are concerned that considering beneficiary preferences will lead to (unintentional) ‘creeping’ of ethical theories not held by the average GiveWell donor into donor decision making. For example, a utilitarian donor might find themselves supporting clearly non-utility-maximizing causes, because they mistook the preferences of deontologist survey respondents that A > B (where A and B are different outcomes they can obtain with the same dollar donated towards different charities) as evidence for u(A) > u(B), rather than as evidence that B violates some rule while A does not. Allowing ethical positions we disagree with to creep into our own internal ethical deliberations would be imprudent, and we risk this happening by using beneficiary preferences as part of the moral weights parameters.[3]

This objection is not limited to beneficiary preferences. Different ethical views of GiveWell staff, or other donors, can also creep into donor decisions. Although we expect self-selection effects to mean that most GiveWell staff and donors share similar positions on the relevant issues, staff and donors are not a homogeneous group of people who agree fully on the normative questions. This might undermine the position that surveyed preferences are a reliable way of estimating moral weights.

An analogous objection can probably be avoided by some competing methods, such as DALYs, as they do not entail asking people questions whose answers depend on personal ethical views. With such competing methods, we know what we're getting (e.g., a list of charities optimized by the total years of life increased, adjusted for disabilities) in a way we cannot when depending on the confused normative mixture produced by surveys.

Our recommendation is that GiveWell should publicly explain why it uses preferences–of staff, donors, and beneficiaries alike–to calculate moral weights. This might involve defending using preferences against some of the obvious concerns outlined above, highlighting uncertainties regarding some of these philosophical questions more extensively, or another approach not listed here.

3. GiveWell should hire practical ethicists to review its current approach to moral weights.

Our most direct and implementable recommendation is that GiveWell should hire specialists in this field (especially practical ethicists) to review its current approach. We use the term ‘hire’ loosely; we are not arguing that these would need to be full-time employees of GiveWell. For example, GiveWell could partner with ethicists in academia on a short-term project basis. There is likely existing interest in conducting this kind of research among academic philosophers, especially in collaboration with a grantmaker of GiveWell’s size.

We refer to practical ethicists in a loose way here, and would include most moral philosophers whose work is not particularly attached to one normative ethical theory.

We believe that practical ethicists will be able to offer insight in the following areas:

(1) On whether or not moral weight is actually a good idea:

[We expect that GiveWell, quite reasonably, will not prioritise this in comparison with our recommendations (2) and (3).]

There is in-depth discussion in philosophy about comparing cardinally similar items without a single unit of measurement. It is not immediately obvious to us that moral weight can actually be reasonably calculated, under any methodology. We would point to Derek Parfit’s work on Imprecise Comparability, and Ruth Chang’s work on Parity. Parfit’s work rejects the claim that differences between cardinally ranked items can always be measured by a scale unit of the relevant value. In addition to rejecting the same proposition as Parfit, Chang’s work rejects the claim that all rankings proceed in terms of the trichotomy of ‘better than’, ‘worse than’ and ‘equally good’. Moral weight seems to require the kind of implicit assumption these authors reject (that different moral concepts can be weighed against each other). We think, then, that the work of some ethicists is relevant to GiveWell’s moral weight question.

(2) On balancing utility and preference:

There seem to be two broad, competing schools of thought when it comes to moral weights. On one side, GiveWell uses individual preferences, mostly elicited from surveys of donors and beneficiaries. On the other side, mainstream health economists, as well as the Happier Lives Institute, advocate for measures which purport to measure the overall happiness produced by a specific outcome (e.g., doubling consumption by one person-year). These include DALYs, WELLBYs, and similarly rhyming terms.

It is plausible to us that a practical ethicist could provide implementable advice to GiveWell about how it should balance the two positions (if at all). Specifically, a specialist could:

- Offer answers to how GiveWell should decide between using utility or preference as the scale of measurement.

  We expect existing literature in decision theory to be very useful here, such as Utility by John Broome, Internal Consistency of Choice by Amartya Sen, and Jurgen Eichberger & David Kelsey’s work on Non-expected utility. We believe that ethicists will offer additional insight to the debate, beyond our common intuitions.

- Offer justification for a specific percentage split between utility and preference (if any).

  Ethicists well-trained in intertheoretic value comparison could contribute a lot here. We think that GiveWell’s current methods of measuring preference will probably be criticized by most ethicists. We suspect that accounting for utility directly (e.g., through WELLBYs) would at the very least pose a significant conceptual change to how GiveWell currently calculates moral weight.

- Improve preference measurements (if they are still used).

  A well-trained economist can point out the difference between revealed and stated preferences and list the advantages and disadvantages of using each one in a given experiment. The same applies to ethicists, who can identify the relationship between our moral intuitions (which are relevant to the IDinsight survey, as people relied heavily on their intuitions when responding) and stated preferences across different scenarios. Our moral intuitions are not equally valuable across all cases; sometimes they misrepresent our actual preferences. Ethicists will be able to identify these (often non-obvious) cases more easily.

(3) On setting an agenda for future improvements:

This perhaps is the hardest item on this list for GiveWell to implement without external specialists. A 2020 public update to GiveWell’s moral weights lists three future improvements, all of which are slight adjustments to survey methodology (e.g., asking slightly different questions, increasing sample size). Although we acknowledge that this list was probably not intended to be exhaustive, it is still noteworthy that the big picture question of whether or not the information gathered through surveys can act as a sufficient basis for justifying beliefs about moral weights is omitted from this list. GiveWell acknowledges the limitations of donor surveys themselves, but treats these limitations as a necessary part of any system similar to moral weight.

Moreover, there does not seem to have been much improvement between the 2020 public update and the description provided at EAG SF 2022. This suggests to us that external assistance may be especially helpful; although not all problems are best solved by hiring, we believe we have made a compelling case that this particular problem requires specialist intervention.

The combination of these three things will probably lead to major changes to the moral weight parameters.

We don’t think that generalists can conduct this research sufficiently well to address our concerns (although we can certainly make some progress). These are complex moral issues. When non-philosophers do philosophy, they tend to make mistakes more frequently. Moral weight is fundamentally a question of ethics, which is best understood by ethicists.

Disclaimer: We (Tanae Rao and Ricky Huang) are unaffiliated with GiveWell, IDinsight, and any other organization referred to in this submission, unless stated otherwise. All mistakes are our own.

[1] It is possible that we are wrong about this, but we searched for ~15 minutes on the GiveWell website to find any documentation about the particulars of the donor survey, and could not find anything relevant.

[2] It also raises the question of why GiveWell is fulfilling the preferences of disproportionately low-income households (i.e., it does not necessarily follow from preference maximization views that we should direct our donations towards the least well-off, in the way that, for instance, utilitarians can appeal to diminishing marginal utility from income).

[3] We should distinguish this ‘creeping’ concern from a case where (for example) utilitarianism itself implies different courses of action depending on the preferences of other people. For example, perhaps a utilitarian in a society of strict non-utilitarians should not perform most actions deemed impermissible by the conventional morality, because the disutility of the combined outrage of the rest of society would outweigh any positive utility generated by the action’s other consequences.

Thanks for your entry!

For what it's worth, I'm a philosopher and I've not only offered to help GiveWell improve its moral weights, but repeatedly pressed them to do so over the years. I'm not sure why, but they've never shown any interest. I've since given up. Perhaps others will have more luck.

Thanks for the comment! I really enjoyed reading the work on WELLBYs by HLI.

I personally think GiveWell fell into the epistemic problem of prioritizing current functionality (even when it is based upon an unjustified belief) over the potential counterfactual impact of establishing something new. I think they know it's bad, but are unaware of how bad moral weight currently is.