There is a strategy I’ve heard expressed over the years but haven’t seen clearly articulated. Phrases used to articulate it go roughly like “outrunning one’s problems” and “walking is repeated catching ourselves as we fall”.

It is the strategy of entering unstable states, which would lead to disaster if not exited shortly, either to gain an advantage (like efficient locomotion in humans) or as a step on the way to better states (like travelling across a desert to reach an oasis). Done properly, it opens up additional opportunities, greatly extends the flexibility of your plans, and lets you succeed in otherwise harsh conditions; done in error, it may leave you worse off. You must move through such states like walking over hot coals: if you stay too long without moving, you get burnt (a related image, though with a different sense of instability).

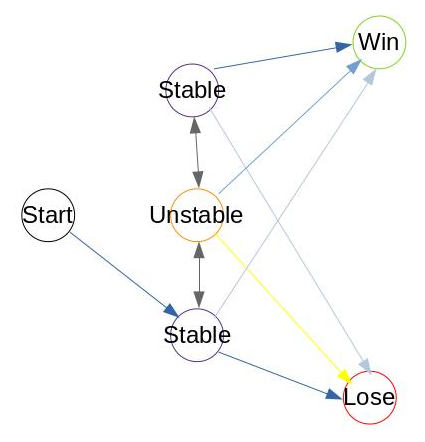

Let’s move a bit towards a formal definition. Let the states of the world fall into these categories:

- Loss unstable

- Loss stable

- Win

- Lose

A loss unstable state is one where, if you stay in it too long, the probability per unit time of entering a lose state goes up, for reasons such as (but not limited to) accumulated damage (say, rising CO2 levels) or resource loss (say, depletion of the phosphorus used in fertilizer). A loss stable state is one where your probability of entering a lose state doesn't increase with the time you spend in it (for instance, no matter how long you stand 20 meters from a cliff, your probability of falling off it doesn't increase).
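One way to make the distinction concrete is to treat "probability per time of entering a lose state" as a hazard function of the time spent in the state. The sketch below is only an illustration under that assumption: the discrete time steps, the function names, and the specific numbers (a flat 1% hazard versus one that grows by 1% per step) are all made up for the example, not part of the definition.

```python
from typing import Callable

# A hazard function maps "steps already spent in the state" to the
# probability of entering a lose state during the next step.
Hazard = Callable[[int], float]


def stable_hazard(t: int) -> float:
    """Loss stable (illustrative): risk per step does not depend on time spent."""
    return 0.01


def unstable_hazard(t: int) -> float:
    """Loss unstable (illustrative): risk per step grows the longer you stay."""
    return min(1.0, 0.01 * (t + 1))


def survival_probability(hazard: Hazard, steps: int) -> float:
    """Probability of not having entered a lose state after `steps` steps."""
    p = 1.0
    for t in range(steps):
        p *= 1.0 - hazard(t)
    return p


def is_loss_unstable(hazard: Hazard, horizon: int) -> bool:
    """True if the per-step hazard ever increases within the horizon."""
    return any(hazard(t + 1) > hazard(t) for t in range(horizon))


if __name__ == "__main__":
    for steps in (1, 10, 50):
        print(
            steps,
            round(survival_probability(stable_hazard, steps), 3),
            round(survival_probability(unstable_hazard, steps), 3),
        )
```

With these made-up numbers the two states are equally safe for a single step, and the difference only shows up as the stay lengthens, which is the property the definition is trying to capture.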

Why would one choose to enter a loss unstable state then? Well, firstly, you may have no choice and must just do the best you can in the situation. If you do have a choice though, there are several reasons why you may still choose to enter a loss unstable state:

- They may have higher transition probabilities to the win states

- They may be on the path to better states

- They may otherwise be the best state one can reach as long as you don’t stay there for long (say for accumulating resources)
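To illustrate the trade-off behind these reasons, here is a toy model in which every step spent in a state can end in a lose transition, a win transition, or neither. Everything in it is an assumption chosen for illustration: the name `outcome_probabilities`, the per-step win rates (10% in the loss unstable state, 2% in the loss stable one), and the hazard shapes. It is a sketch of the idea, not a claim about any real system.

```python
from typing import Callable, Tuple

Hazard = Callable[[int], float]


def flat_hazard(t: int) -> float:
    """Loss stable (illustrative): constant 1% chance of losing per step."""
    return 0.01


def rising_hazard(t: int) -> float:
    """Loss unstable (illustrative): chance of losing grows by 1% per step."""
    return min(1.0, 0.01 * (t + 1))


def outcome_probabilities(
    win_rate: float, hazard: Hazard, max_steps: int
) -> Tuple[float, float]:
    """Return (p_win, p_lose) for staying in a state for at most `max_steps`.

    Each step: first check for a lose transition with probability hazard(t);
    if that does not happen, a win transition occurs with probability
    `win_rate`. After `max_steps` the state is exited with neither outcome.
    """
    alive = 1.0  # probability of still being in the state
    p_win = 0.0
    p_lose = 0.0
    for t in range(max_steps):
        h = hazard(t)
        p_lose += alive * h
        p_win += alive * (1.0 - h) * win_rate
        alive *= (1.0 - h) * (1.0 - win_rate)
    return p_win, p_lose


if __name__ == "__main__":
    print("stable, 5 steps:   ", outcome_probabilities(0.02, flat_hazard, 5))
    print("unstable, 5 steps: ", outcome_probabilities(0.10, rising_hazard, 5))
    print("unstable, 15 steps:", outcome_probabilities(0.10, rising_hazard, 15))
```

Under these assumptions, a short visit to the loss unstable state gives a much higher chance of winning than the same time spent in the loss stable state, while lingering there lets the chance of losing grow several times over.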

In general, this idea of loss unstable states contrasting with loss stable states is a new lens for highlighting important features of the world. The ‘sprinting between oases’ strategies enabled by crossing through loss unstable states may very well be better than those going solely through stable states, if used without error.

I'm not clear on what relevance this holds for EA or any of its cause areas, which is why I've tagged this as "Personal Blog".

The material seems like it might be a better fit for LessWrong, unless you plan to add more detail on ways that this "strategy" might be applied to open questions or difficult trade-offs within a cause area (or something community-related, of course).

I would have been much more interested in this post if it had included explicit links to EA. That could mean including EA-relevant examples. It could also mean explicitly referencing existing EA 'literature' or positioning this strategy as a solution to a known problem in EA.

I don't think the level of abstraction was necessarily the problem; the problem was that it didn't seem especially relevant to EA.

It's true that this is pretty abstract (as abstract as fundamental epistemology posts), but because of that I'd expect it to be a relevant perspective for most strategies one might build, whether for AI safety, global governance, poverty reduction, or climate change. It does lack the examples and explicit connections that would make this salient, though. In a future post that I've got queued on AI safety strategy I already have a link to this one, and in general abstract articles like this provide a nice base to build from toward specifics. I'll definitely think about, and possibly experiment with, putting the more abstract and conceptual posts on LessWrong.

If you plan on future posts which will apply elements of this writing, that's a handy thing to note in the initial post!

You could also see what I'm advocating here as "write posts that bring the base and specifics together"; I think that will make material like this easier to understand for people who run across it when it first gets posted.

If you're working on posts that rely on a collection of concepts/definitions, you could also consider using Shortform posts to lay out the "pieces" before you assemble them in a post. None of this is mandatory, of course; I just want to lay out what possibilities exist given the Forum's current features.

I think I like the idea of more abstract posts being on the EA Forum, especially if the main intended eventual use is straightforward EA causes. Arguably, a whole lot of the interesting work to be done is kind of abstract.

This specific post seems to be somewhat related to global stability, from what I can tell?

I'm not sure what the ideal split is between this and LessWrong. I imagine that as time goes on we could do a better cluster analysis.

This idea seems somewhat related to: