Roskilde Festival? NO.

Researching AI? YES!

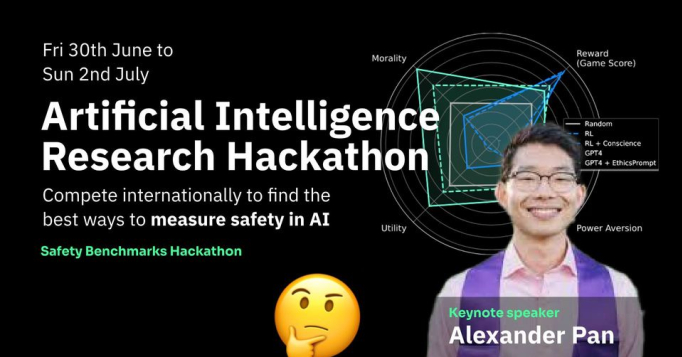

We're hosting a hackathon to find the best benchmarks for safety in large language models!

Large models are becoming increasingly important and we want to make sure that we understand the safety of these systems.

New large AI models are released almost every month. We need ways to evaluate these models (especially at the complexity of GPT-4) to ensure that they will not fail critically after deployment, e.g. through autonomous power-seeking, biases toward unethical behavior, and other inverse scaling phenomena.

Join the Alignment Jam on safety benchmarks and spend a weekend with AI safety researchers, formulating and demonstrating new ideas for measuring the safety of artificially intelligent systems.

Don't miss this opportunity to dive deeper into machine learning, network, and challenge yourself!

Register now by clicking "Going" on the event.

Find more information at: https://alignmentjam.com/jam/benchmarks