Lizka

Bio

I run the non-engineering side of the EA Forum (this platform), run the EA Newsletter, and work on some other content-related tasks at CEA. Please feel free to reach out! You can email me. [More about my job.]

Some of my own posts that I like best:

- Disentangling "Improving Institutional Decision-Making"

- [Book rec] The War with the Newts as “EA fiction”

- Native languages in the EA community (and issues with assessing promisingness)

- EA should taboo "EA should"

- Invisible impact loss (and why we can be too error-averse)

I finished my undergraduate studies with a double major in mathematics and comparative literature in 2021. I was a research fellow at Rethink Priorities in the summer of 2021 and was then hired by the Events Team at CEA. I've since switched to the Online Team. In the past, I've also done some (math) research and worked at Canada/USA Mathcamp.

Some links I think people should see more frequently:

Posts 126

Comments 448

Topic contributions 238

I really like that poem. For what it's worth, I think a number of older texts from China, India, and elsewhere have things that range from depictions of care towards animals to more directly philosophical writing on how to treat animals (sometimes as part of teaching yourself to be a better person).

Some links:

- This short paper that I've skimmed, "Kindness to Animals in Ancient Tamil Nadu" (I haven't checked any of the quotes, and I think this is around 5th or 6th century CE)

- "The King saw a peacock shivering in the rain. Being compassionate, he immediately removed his gold laced silk robe and wrapped it around the peacock"

- And, from the beginning: "One day, Chibi - a Chola king - sat in the garden of his palace. Suddenly, a wounded dove fell on his lap. He handed over the dove to his servants and ordered them to give it proper treatment. A few minutes later, a hunter appeared on the scene searching for the dove which he had shot. He realized that the King was in possession of the dove. He requested the King to hand over the dove. But the king did not want to give up the dove. The hunter then told the King that the meat of the dove was his only food for that day. However, the King being compassionate wanted to save the life of the dove. He was also desirous of dissuading the hunter from his policy of hunting animals..." [content warning if you read on: kindness but also a disturbing action towards oneself on behalf of a human]

- Mencius/Mengzi has a passage where a king takes pity on an ox (and this is seen as a good thing). From SEP:

- "In a much–discussed example (1A7), Mencius draws a ruler’s attention to the fact that he had shown compassion for an ox being led to slaughter by sparing it. [...] an individual’s sprout of compassion is manifested in cognition, emotion, and behavior. (In 1A7, C1 is the ox being led to slaughter. The king perceives that the ox is suffering, feels compassion for its suffering, and acts to spare it.)"

- Humans helping animals and being rewarded for it is a whole motif in folklore from a bunch of different cultures/societies, I think (apparently this index, for example, lists it as "grateful animals"). Here's a link listing some examples.

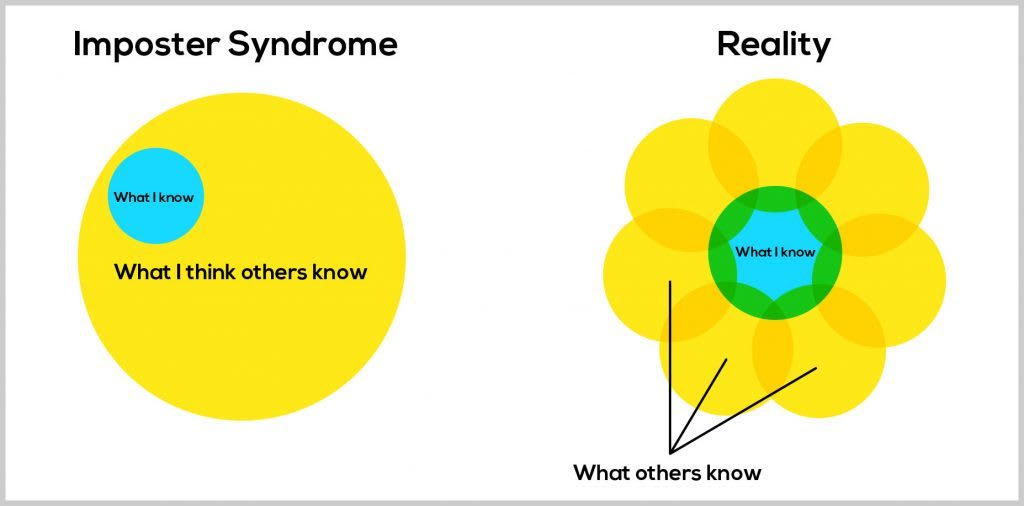

I agree that avoiding asking "dumb questions" is harmful (to yourself), but I also think it's a tendency that's really hard to overcome, especially on your own. And I think it's often related to impostor syndrome; a constant (usually unjustified!) fear of being exposed as unqualified inhibits risk-taking, including stuff like asking questions when you think there's a chance that everyone else thinks the answer is obvious. (I wrote a bit about this in this appendix.)

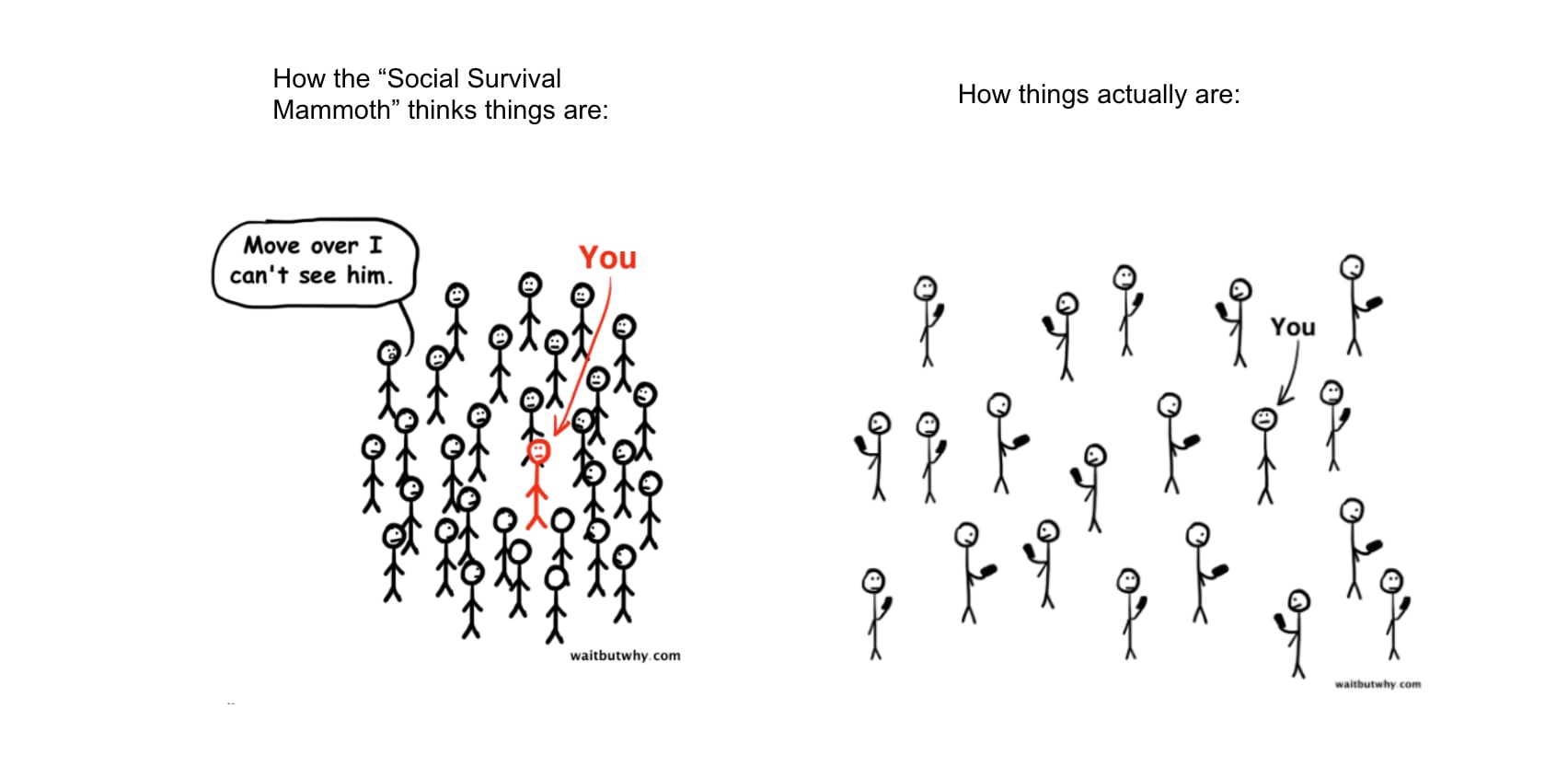

Relatedly, I've found this diagram useful and "sticky" as a mental reference for how we end up with false beliefs about what everyone else knows (some people think the diagram should be made up of squiggly blobs of slightly different sizes, but that it's basically accurate besides that — sorry for never updating it! :) ):

A few things have helped me with this kind of thinking:

- Finding trusted people / safe spaces where I could ask questions without feeling judged, asking "dumb" questions there, and building up confidence and habits for asking questions in other contexts, too.

- I think I asked Linch a lot of "dumb questions" when he was my research supervisor, and it was great. I'd recommend it!

- Bulldozing through the fear/anxiety for the first few times in new contexts breaks the ice for me and makes it easier for me to feel comfortable asking questions later on. (Sometimes I have this as an explicit goal the first few times I'm asking a "dumb" question somewhere.)

- Viewing looking silly as charity unto others

- Learning about impostor syndrome

- Caring a bit less about what the average person I interact with thinks of me (this is a classic post[1] on the topic, but I'm not sure how this actually improved for me)

[1] From the post, another diagram I like (lightly adapted):

Re: 1b (or 1aii because of my love for indenting): That makes sense. I think I agree with you, and I'm generally unsure how much of a factor what I described even is.

Re: 2: Yeah, this seems right. I do think, though, that some of the selection effects might mean we should expect forecasting to be less promising than one might think given the excitement about it in EA?

Re: 3: Thanks for clarifying! I was indeed not narrowing things down to Tetlock-style judgmental forecasting. I agree that it's interesting that judgmental forecasting doesn't seem to get used as much even in fields that do use forecasting (although I don't know what the most common approaches to forecasting in different fields actually are, so I don't know how far off they are from Tetlock-style things).

Also, this is mostly an aside (it's a return to the overall topic, rather than being specific to your reply), but have you seen this report/post?

I both think that some groups in EA are slightly overenthusiastic about forecasting[1] (while other subgroups in EA don't forecast enough), and that forecasting is underused/undervalued in a lot of the world for a number of different reasons. And I'd also suggest looking at this question less from the lens of "why is EA more into forecasting than other groups?" and more from the lens of "some groups are into forecasting while others aren't, why is that?"

More specific (but still quick!) notes:

- I'd guess the reasons other groups are less enthusiastic about forecasting vary significantly.

- E.g.:

- People in governments and the media (and maybe e.g. academia) might view forecasting as not legible enough, or credentialist enough, and the relevant institutions might be slower to change. I don't know how much different factors contribute in different cases. There might also be legal issues for some of those groups.

- I think some people have argued that in corporate settings, people in power are not interested in setting up forecasting-like mechanisms because it might go against their interests. Alternatively, I've heard arguments that middle-management systems are messed up: the people who are able to set up forecasting systems in large organizations get promoted, and then the projects die. I don't know how true these things are.


- I think people who end up in EA are often the kinds of people who'd also be more likely to find forecasting interesting — both for reasons that are somewhat baked into common ~principles in EA, and for other reasons that look more like biases/selection effects.

- I'm not sure whether you're implying that EA is one of the most extremely-into-forecasting groups, but if so, I'm not sure that's true. Relatedly, if you're looking to see whether/where forecasting is especially useful, looking at the different use cases as a whole (rather than zooming in on EA-vs-not-EA as a key division) might be more useful.

- E.g. sports (betting), trading, and maybe even the weather might be areas/fields/subcultures that rely a lot on forecasting. Wikipedia lists lots of "applications" in its entry on forecasting, and they include business, politics, energy, etc.

- [Edit: this is not as informative as I thought! See Austin's comment below.] Manifold has groups, and while there's an EA group, it's only 2.2k people, while groups like sports (14.4k people) and politics (16.4k people) are bigger. This is more surprising to me since Manifold is particularly EA-aligned.[2]

- The forecasting group on Reddit looks dead, but it looks like people in various subreddits are discussing forecasts on machine learning, data science, business, supply chains, politics, etc. (I also think that forecasting might be popular in crypto-enthusiast circles, but I'm not sure.)

- This is potentially minor, but given that a lot of prediction-market-like setups with real money are illegal, as far as I understand, the only people who forecast on public platforms are probably those who are motivated by things like reputation, public good benefits, or maybe charity in the case of e.g. Manifold. So you might ask why Wikipedia editors don't forecast as much as EAs.

[1] And specific aspects of forecasting

[2] You could claim that this is a sign that EA is more into forecasting, but I'm not sure; EA is also more into creating public-good technology, so even if lots of e.g. trading firms were really excited about forecasting, I'd be unsurprised if there weren't many ~public forecasting platforms supported by those groups. (And there are in fact a number of forecasting platforms/aggregators whose relationship to EA is at least looser, unless I'm mistaken, like PredictIt, Polymarket, Betfair, Kalshi, etc.)

Some notes (written quickly!):

- You write "it can either be an advisory system or it can be a deterministic system," but note that an advisory system misses out on a key benefit of what I understand as ~classic futarchy: the fact that it solves the problem of conditionality not being the same as causality. See here (from my earlier post):

- Causality might diverge from conditionality in the case of advisory/indirect markets.[10] Traders are sometimes rewarded for guessing at hidden info about the world—information that is revealed by the fact that a policy decision was made—instead of causal relationships between the policy and outcomes.[11]

- For instance, suppose a company is running a market to decide whether to keep an unpopular CEO, and they ask if, say, stocks conditional on the CEO remaining would be higher than stocks conditional on the CEO not staying. Then traders might think that, if it is the case that the CEO stayed, it is likely that the Board found out something really great about the CEO, which would increase expectations that the CEO would perform very well (and stocks would rise). So the market would seem to imply that the CEO is good for the company even if they were actually terrible.

- There's also a post on this (haven't re-read in a while, only vaguely remember): Prediction market does not imply causation


- I think getting a metric that's good enough would be really difficult, and I think your post undersells this.

- E.g., it should probably take the cost into account. I'm also a bit suspicious of metrics that depend on a vote 5 years from now. Forecasting over fairly long periods still has issues, and if I imagine betting on a metric like this, I'd go look at the people doing the voting and try to forecast how the beliefs and biases of that group would change (which could be useful, but isn't exactly what grants are for). If the point is the ability to scale this up, the metric should be applicable to a wide variety of grants. And grant quality could depend on information that isn't accessible to a wide audience (like how much a grant supported a specific person's upskilling), etc.

- But maybe my argument is ~cheemsy and there are versions of this that work at least as well as current grantmaking systems, or would have sufficient VoI — I'm not sure, but I want to stress that at least for me to believe that this is not a bad idea, the burden of proof would be on showing that the metric is fine.

- I'm also honestly not sure why you seem so confident that manipulation wouldn't happen, but maybe there are clever solutions to this. I haven't thought about this seriously (at least, not for around 2 years).
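To make the CEO example above concrete, here's a minimal toy model in Python. All of the numbers (a 50% chance of good news, the board keeping the CEO 90% of the time after good news and 10% otherwise, a +20 price boost from good news, and a -5 causal effect of keeping the CEO) are hypothetical and chosen only for illustration; this is a sketch of the confounding problem, not a model from the linked posts. It compares the price conditional on the board's decision with the price if the decision were forced regardless of the board's private information.

```python
from fractions import Fraction

# Hypothetical toy model; every number here is invented for illustration.
# The board privately learns whether there is good news about the company,
# tends to keep the CEO when the news is good, and keeping the CEO
# causally lowers the stock price.
P_GOOD_NEWS = Fraction(1, 2)
P_KEEP = {True: Fraction(9, 10), False: Fraction(1, 10)}  # P(keep CEO | good_news)
BASE_PRICE = 100
GOOD_NEWS_BOOST = 20
KEEP_CEO_EFFECT = -5  # the true causal effect of keeping the CEO


def price(good_news: bool, keep_ceo: bool) -> int:
    """Stock price in a given world state after a given decision."""
    return BASE_PRICE + GOOD_NEWS_BOOST * good_news + KEEP_CEO_EFFECT * keep_ceo


def p_state(good_news: bool) -> Fraction:
    """Prior probability of the hidden good_news state."""
    return P_GOOD_NEWS if good_news else 1 - P_GOOD_NEWS


def conditional_price(keep_ceo: bool) -> Fraction:
    """E[price | decision]: roughly what an ideal conditional market would price.

    The board makes the decision, so observing it shifts beliefs about the
    hidden good_news state (Bayes' rule over the two states).
    """
    weights = {}
    for good in (True, False):
        p_decision = P_KEEP[good] if keep_ceo else 1 - P_KEEP[good]
        weights[good] = p_state(good) * p_decision
    total = sum(weights.values())
    return sum(w * price(good, keep_ceo) for good, w in weights.items()) / total


def interventional_price(keep_ceo: bool) -> Fraction:
    """E[price | do(decision)]: the decision is forced, so beliefs stay at the prior."""
    return sum(p_state(good) * price(good, keep_ceo) for good in (True, False))


conditional_gap = conditional_price(True) - conditional_price(False)
causal_gap = interventional_price(True) - interventional_price(False)
print(f"conditional gap: {conditional_gap}, causal effect: {causal_gap}")
```

With these made-up inputs, the conditional prices are 113 (CEO stays) vs. 102 (CEO leaves), a gap of +11, while the actual causal effect of keeping the CEO is -5. The only difference between the two calculations is whether the hidden state is updated on the board's decision; `Fraction` is used just to keep the arithmetic exact.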

Relatedly, 2 years ago I wrote two posts about futarchy — I haven't read them in forever, they probably have mistakes, and my opinions have probably shifted since then, but linking them here anyway just in case:

This is a good question. I was mostly not thinking about pre-FTX levels when I wrote that part of my response, and I'm not sure my claim holds on longer time scales! Things in the community (outside of the Forum) have been calmer recently; I don't know what would have happened had we not added the section (i.e., whether things on the Forum would have calmed down by themselves), nor what would happen if we removed the section now (unfortunately, we can't e.g. A/B test this kind of thing, but maybe we should test it in some other way at some point).

Aside (not related to what I was talking about in the "Forum has gotten healthier" section above): I agree that Q4 2022 / Q1 2023 aren't representative in many ways, but a key underlying motivation for the Community section is giving posts with a more niche audience a better chance by separating out posts that tend to get a lot of karma, so they're not "fighting for the same spots" (which are determined by ~karma). That phenomenon (and goal) is unaffected by recent events; i.e., what we described in "Karma overrates some topics; resulting issues and potential solutions" should hold at all times. (On a skim, the top-by-karma posts of 2023, 2022, and to some extent 2021 are Community posts. Curiously, this seems less true of 2020 but again more true of 2019.)

Just a quick clarification: I don't think this was a "change to lower community engagement." Adding the community section was a change, and it did (probably[1]) lower engagement with community posts, but that wasn't the actual point (which is a distinction I think is worth making, although maybe some would say it's the same). In my view, the point was to avoid suffocating other discussions and to make the Forum feel less on-edge/anxiety-inducing (which we were hearing was at least some people's experience). In case it helps, this outlines our (or at least my) thinking about it.

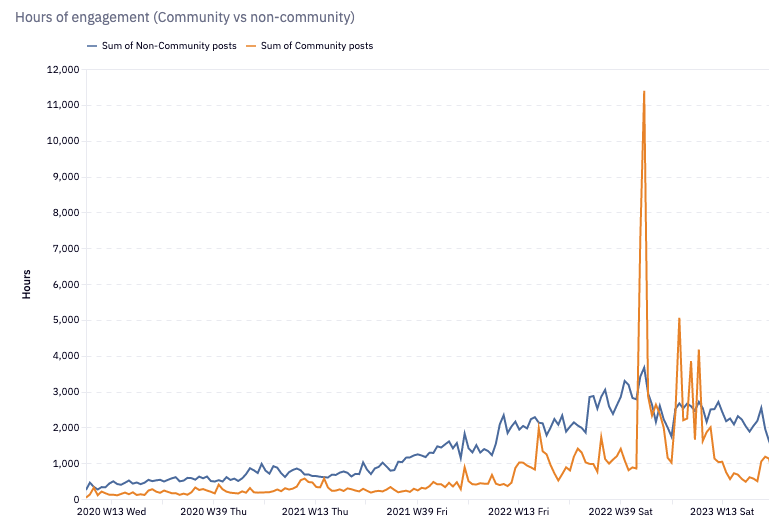

And before I get into my personal takes, here's a graph of engagement hours on Community posts vs. other content:

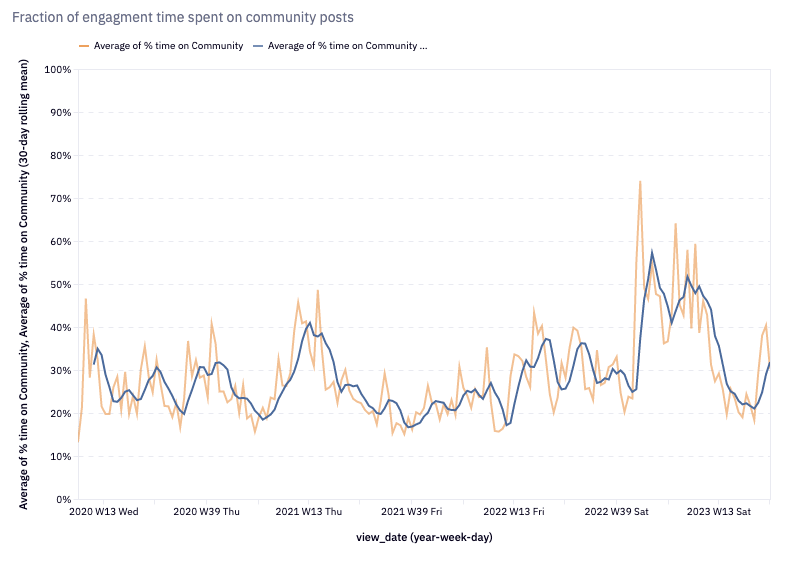

and here's a graph of the percent of overall engagement that's spent on Community posts (the blue line is a running 30-day average):

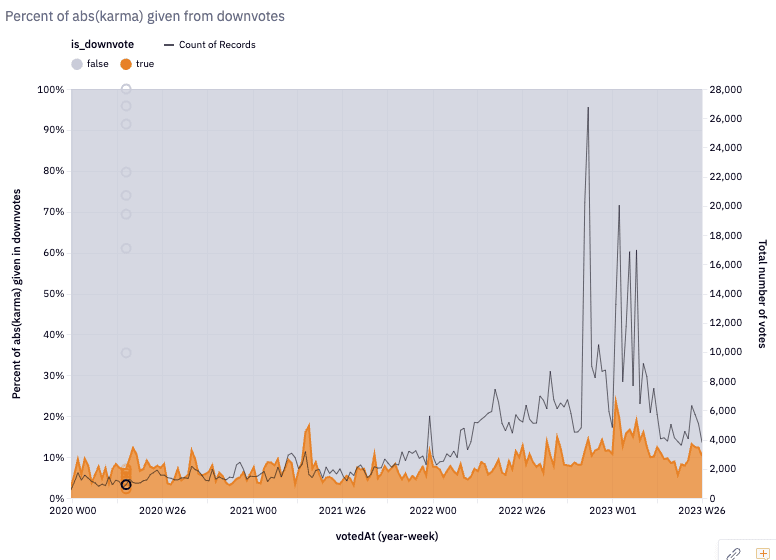

And another graph that might be interesting is this one — percent of karma (orange) given that comes from downvotes (the black line is the total number of votes in a period):

On to how I feel about the change:

I'm pretty happy with it, with some caveats.

I was quite worried about it initially,[2] but eventually got convinced/decided that it was a good thing to try. I'm much more positive about it now, although I still think that we might be able to find a better and more elegant solution at some point.

Some reasons I feel positive about it:

(1) We get a lot of positive feedback on the Community Section, from a broad range of people.

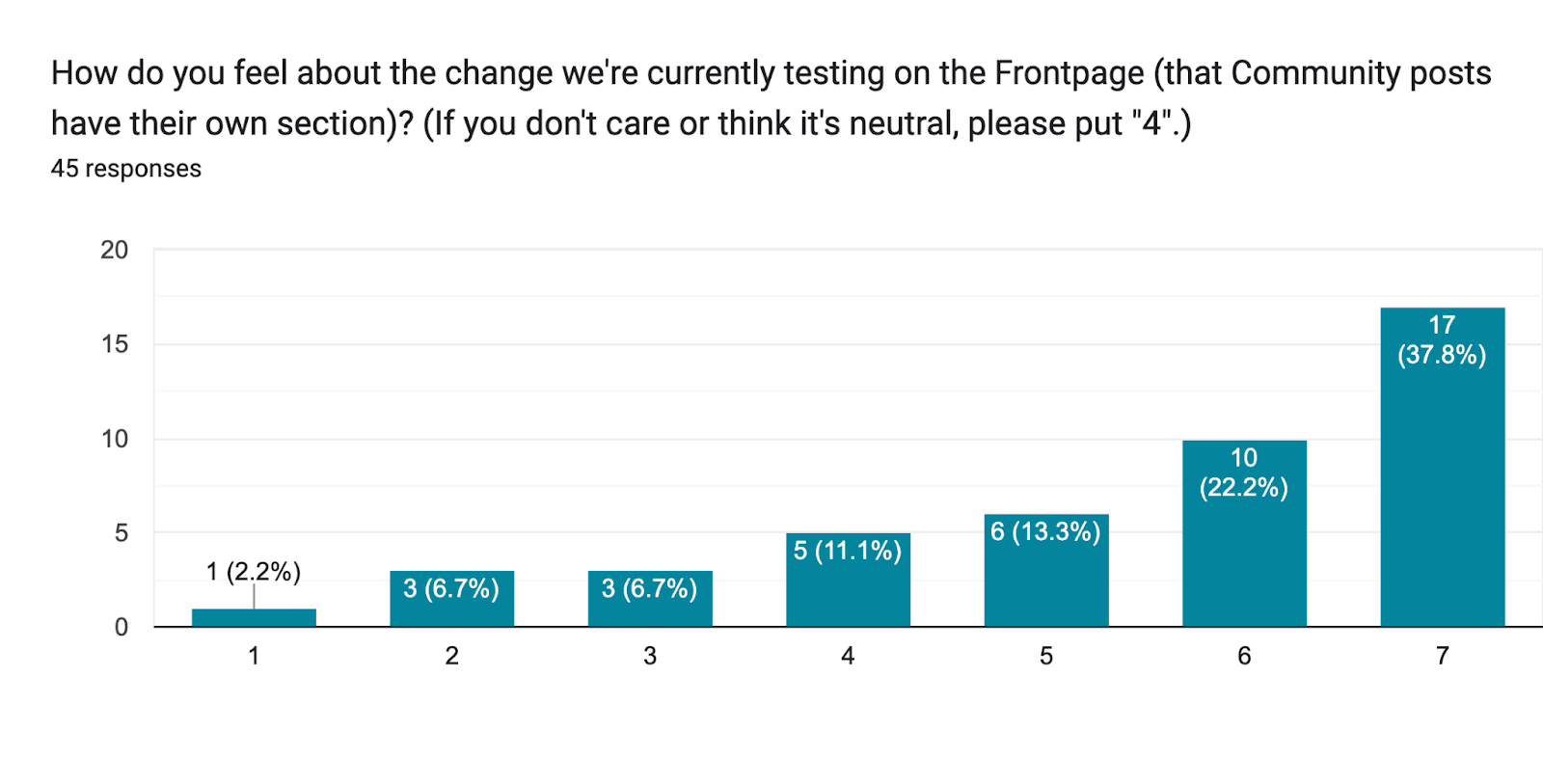

For the initial test, we surveyed people on the Forum (more here), and got the following results:

(45 responses. On a scale of 1-7, where "1" was negative and "7" was positive, 38% of responses put 6, 22% put 7, 13% put 5, 11% put 4, 7% each put 3 and 2, and 2% put 1.)
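As a quick check on the arithmetic, here's a minimal Python sketch that summarizes the distribution above using only the rounded percentages. Grouping 5-7 as "positive" and 1-3 as "negative" is an assumption made here for illustration, not how the survey was scored, and because the inputs are rounded, the derived figures are approximate.

```python
# Survey distribution from above: rating (1-7) -> percent of the 45 responses.
# The percentages are rounded, so anything derived from them is approximate.
distribution = {7: 22, 6: 38, 5: 13, 4: 11, 3: 7, 2: 7, 1: 2}


def summarize(dist: dict[int, int]) -> dict[str, float]:
    """Total, mean rating, and the share of ratings in assumed positive/negative bands."""
    total_pct = sum(dist.values())
    mean = sum(rating * pct for rating, pct in dist.items()) / total_pct
    positive_pct = sum(pct for rating, pct in dist.items() if rating >= 5)  # assumed band: 5-7
    negative_pct = sum(pct for rating, pct in dist.items() if rating <= 3)  # assumed band: 1-3
    return {
        "total_pct": total_pct,
        "mean": mean,
        "positive_pct": positive_pct,
        "negative_pct": negative_pct,
    }


summary = summarize(distribution)
print(summary)
```

With these inputs, the percentages sum to 100, about 73% of responses fall in the 5-7 range and 16% in the 1-3 range, and the mean rating comes out to roughly 5.28.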

We'd also done some user interviews and gotten quick takes from people (again, a bit more here).

Since then, we've continued hearing from people who really appreciate it. At EAG and in other ~EA spaces, I've been told, unprompted, things like "I'm going on the Forum a lot more now because I see interesting and useful content" and "The Forum seems much better now."

A number of people have also asked for ways to hide the section for themselves entirely.

(2) I feel that the Forum has gotten healthier.

I could try to point you to discussions that, before the change, I'd have expected to blow up in some unpleasant ways; I could pull out the number for how often moderators have to ban or warn someone per engagement hour in different periods; and I can point you to the "percent of karma that comes from downvotes" graph that I shared above. But I think this is mostly a subjective judgement, and it factors in things that others might not agree with me on, like "how much does karma track what I think is more valuable?"

I also find it easier to read what I want to read on the Forum right now. I hadn't expected a huge change in my own experience — I read a lot of Community posts! — but I actually quite like having the section.

(3) I was worried about various downsides, and they don't seem to have gotten very bad, although this is my main uncertainty.

- Growth in total engagement hours has stalled. I don't know if that will continue, and I don't fully know why that is. Maybe people are tired, disenchanted with EA (or more precisely, maybe new folks aren't finding EA as much), using Twitter/LW more, or too busy working on slowing down AI or the like. (I’ve seen data about other ~EA engagement metrics that suggest there’s at least some lull.) Or maybe we've discouraged authors of important and engaging Community content from posting on the Forum, and this has knock-on effects on non-Community engagement.

- But we didn't see a mass exodus, which I was somewhat worried about, and it's not on a clear down trajectory. And evaluating all of this is pretty hard given the quick growth in 2022 due to FTX and WWOTF, and the crazy engagement levels in November.

- I was really worried about the community/individual people missing out on important discussions. This is hard to notice; I shouldn't expect to know what discussions I'm missing out on. But I was tracking some topics that I'd discussed with folks off the Forum that hadn't made it onto the Forum, and I think most of them showed up during Strategy Fortnight, so I'm excited about more pushes/events like that (and other things listed here and elsewhere).

- Here's another bad and subjective datapoint: the average karma of some Community posts compared to some non-Community posts that are of a similar minimum quality/value (according to me) is quite similar (with Community posts winning a bit).[3]

- There are other downsides, like:

- Authors are sometimes sad that their posts are in the Community section

- We've introduced some messiness/complexity to how the Forum is structured

- Maybe we've implicitly communicated something about what is and isn't "valuable" that I don't endorse

My personal approach here is to keep an eye on this, see if people are engaging to the extent that they endorse, and continue looking for better solutions.

[1] I think this is almost certainly true (engagement with community posts went down), but there was also less big news to discuss, and that might have been the actual cause of the decrease.

[2] Initially, I was particularly worried that we would miss out on important conversations, and worried about our reasoning (I was worried that we were getting feedback from a heavily biased sample of people). I think I was also worried that there was something "special" about the Forum as it was at the time that we would mess with by doing something big like this. (I've now articulated this as "we're at relatively ~stable equilibrium points on some important parameters, and structural/external changes can mess with that stability.")

[3] Here's a bad baseline sanity check that I did at some point: (1) I took the posts shared in the Digest, which gives me a baseline of ~quality/value-to-readers (according to my own judgement). Note that I don't filter strongly for karma in the Digest; if something gets a lot of karma and I'm not too sure about it, I might err on the side of including it, but I don't really use karma as a big factor. (I might also be more likely to miss low-karma good posts, and there are other reasons to share or not share something in the Digest, but let's put that aside for now.) (2) I compared how much karma different types of posts were getting. The posts that I was putting as ~top 3 in the list order for the Digest had noticeably more karma, on average. Setting those aside, though, Community posts in the Digest got ~100 karma on average, and non-Community posts got ~80 karma on average. (Note that there's a lot of variation, though.)

Just to add a few: when I think about Forum features/structure/culture, I often think of other sites that have similar-ish functions that I've appreciated and/or used, including:

- Stack Exchange (my main experience is with Math Stack Exchange)

- Quanta Magazine

- Assorted blogs

- For the wiki: Wikipedia (and other wikis, including stuff like TV Tropes), Stanford Encyclopedia of Philosophy

- We also sometimes think about e.g. Twitter

- [Edited to add — happened to remember] Also Hacker News

I mostly agree with Lorenzo, with caveats.

We've got an official policy on this. From that:

If we end up with a bunch of job listings and/or we start hearing that there are issues due to the job listings, we could change our approach — e.g. by limiting or discouraging listings, limiting where they're shown (more likely), creating separate spaces for them (something I think we should explore), or something else.

The other thing I'd note is that I think job ~advertisements go better (get more engagement, bring value to people not looking for the job) when they're combined with other content/information, like some explanation of why you're hiring, what your strategy is, an update on what you do, etc. This means that people who aren't interested in the jobs could also get something out of the post, and you might also get more applicants because the post gets more karma (so more people will see it) and because some people who come for the content are also interested in the jobs (this is probably truer for some jobs than for others).

(I should caveat that, besides the policy excerpt, I wrote the above quickly and without input from others.)