rime

Bio

Flowers are selective about the pollinators they attract. Diurnal flowers must compete with each other for visual attention, so they use colours to crowd out their neighbours. But flowers with nocturnal anthesis are generally white, as they aim only to outshine the night.

Posts 5

Comments 47

I mused about this yesterday and scribbled some thoughts on it on Twitter here.

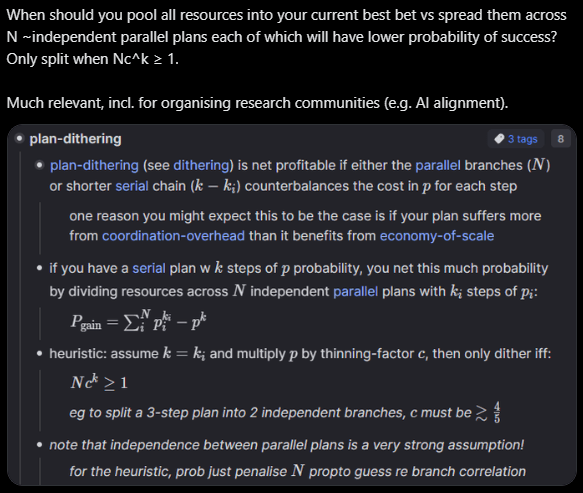

"When should you pool all resources into your current best bet vs spread them across N ~independent parallel plans each of which will have lower probability of success?"

Investing marginal resources (workers, in this case) into your single most promising approach might have diminishing returns due to A) limited low-hanging fruit for that approach, B) making it harder to coordinate, and C) making it harder to think original thoughts due to rapid internal communication & Zollman effects. But marginal investment may also have increasing returns due to D) various scale-economicsy effects.

There are many more factors here, including stuff you mention. The math below doesn't try to capture any of this, however. It's supposed to work as a conceptual thinking-aid, not something you'd use to calculate anything important with.

A toy-model heuristic is to split into separate approaches iff the extra independent chances counterbalance the reduced probability of success for your top approach.

One observation is that the more dependent/serial steps your plan has, the more it matters to maximise general efficiencies internally, since those gains get exponentially amplified by the number of steps.[1]
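
To make the toy-model heuristic concrete, here is a minimal Python sketch under loudly stated assumptions: each plan is a chain of `n_steps` dependent steps that each succeed with a fixed probability, and splitting resources across `k_plans` independent plans lowers that per-step probability. The function names and numbers are hypothetical illustrations, not something the comment specifies.

```python
def chain_success(p_step: float, n_steps: int) -> float:
    """Probability that a plan of n_steps dependent steps succeeds (every step must succeed)."""
    return p_step ** n_steps


def any_success(p_plan: float, k_plans: int) -> float:
    """Probability that at least one of k_plans independent plans succeeds."""
    return 1.0 - (1.0 - p_plan) ** k_plans


def should_split(p_step_pooled: float, p_step_split: float, n_steps: int, k_plans: int) -> bool:
    """Toy heuristic: split iff the extra independent chances outweigh the lower per-step efficiency."""
    pooled = chain_success(p_step_pooled, n_steps)
    split = any_success(chain_success(p_step_split, n_steps), k_plans)
    return split > pooled


# Hypothetical numbers: pooling everything gives 0.90 per step; splitting in two gives 0.85.
for n in (10, 20):
    print(n, "steps -> split?", should_split(0.90, 0.85, n, k_plans=2))
```

With these made-up numbers, splitting wins at 10 steps but loses at 20: the same small drop in per-step efficiency compounds with every extra serial step.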

- ^

You can view this as a special case of Amdahl's argument. If you want. Because nobody can stop you, and all you need to worry about is whether it works profitably in your own head.

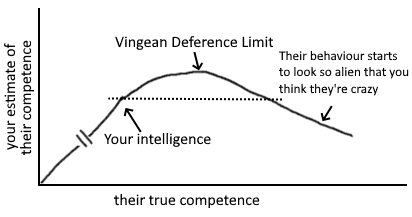

The problem is that if you select people cautiously, you miss out on hiring people significantly more competent than you. People of much higher competence will behave in ways you don't recognise as more competent. If you were able to tell what the right things to do are, you would just do those things and be at their level. Innovation on the frontier is anti-inductive.

If good research is heavy-tailed & in a positive selection-regime, then cautiousness actively selects against features with the highest expected value.[1]

That said, "30k/year" was just an arbitrary example, not something I've calculated or thought deeply about. I think that sum works for a lot of people, but I wouldn't set it as a hard limit.

- ^

Based on data sampled from looking at stuff. :P Only supposed to demonstrate the conceptual point.

Your "deference limit" is the level of competence above your own at which you stop being able to tell the difference between competences above that point. For games with legible performance metrics like chess, you get a very high deference limit merely by looking at Elo ratings. In altruistic research, however...
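
Part of why Elo gives chess such a high deference limit is that a rating gap maps directly onto a predicted result, so you can rank players far above your own level without understanding their moves. A minimal Python sketch of the standard Elo expected-score formula (the function name is my own):

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win = 1, draw = 0.5) of player A against player B under the Elo logistic model."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


# A 400-point gap means the stronger player is expected to score about 0.91 per game.
print(round(elo_expected_score(2400, 2000), 3))
```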

The problem with strawmanning and steelmanning isn't a matter of degree, and I don't think a Goldilocks zone can be found along that dimension at all. If you find yourself asking "how charitable should I be in my interpretation?", I think you've already made a mistake.

Instead, I'd like to propose a fourth category. Let's call it.. uhh.. the "blindman"! ^^

The blindman interpretation is to forget you're talking to a person, stop caring about whether they're correct, and just try your best to extract anything useful from what they're saying.[1] If your inner monologue goes "I agree/disagree with that for reasons XYZ," that mindset is great for debating or teaching, but it's a distraction if you're purely aiming to learn. If I say "1+1=3" right now, it has no effect wrt what you learn from the rest of this comment, so do your best to forget I said it.

For example, when I skimmed the post "agentic mess", I learned something I thought was exceptionally important, even though I didn't actually read enough to understand what they believe. It was the framing of the question that got me thinking in ways I hadn't before, so I gave them a strong upvote because that's my policy for posts that cause me to learn something I deem important--however that learning comes about.

Likewise, when I scrolled through a different post, I found a single sentence[2] that made me realise something I thought was profound. I actually disagree with the main thesis of the post, but my policy is insensitive to such trivial matters, so I gave it a strong upvote. I don't really care what they think or what I agree with, what I care about is learning something.

- ^

"What they believe is tangential to how the patterns behave in your own models, and all that matters is finding patterns that work."

From a comment on reading to understand vs reading to defer/argue/teach.

- ^

"The Waluigi Effect: After you train an LLM to satisfy a desirable property P, then it's easier to elicit the chatbot into satisfying the exact opposite of property P."

Here's my just-so story for how humans evolved impartial altruism by going through several particular steps:

- First there was kin selection evolving for particular reasons related to how DNA is passed on. This selects for the precursors to altruism.

- With the ability to recognise individual characteristics and a long-term memory to keep track of them, species can evolve stable pairwise reputations.

- This allows reciprocity to evolve on top of kin selection, because reputations allow you to keep track of who's likely to reciprocate vs defect.

- More advanced communication allows larger groups to rapidly synchronise reputations. Precursors of this include "eavesdropping", "triadic awareness",[1] all the way up to what we know as "gossip".

- This leads to indirect reciprocity: when you cheat one person, it affects everybody's willingness to trade with you.

- There's some kind of inertia to the proxies human brains generalise on. This seems to be a combination of memetic evolution plus specific facts about how brains generalise very fast.

- If altruistic reputation is a stable proxy for long enough, the meme stays in social equilibrium even past the point where it benefits individual genetic fitness.

- In sum, I think impartial altruism (e.g. EA) is the result of "overgeneralising" the notion of indirect reciprocity, such that you end up wanting to help everybody everywhere.[2] And I'm skeptical a randomly drawn AI will meet the same requirements for that to happen to them.

- ^

"White-faced capuchin monkeys show triadic awareness in their choice of allies":

"...contestants preferentially solicited prospective coalition partners that (1) were dominant to their opponents, and (2) had better social relationships (higher ratios of affiliative/cooperative interactions to agonistic interactions) with themselves than with their opponents."

You can get allies by being nice, but not unless you're also dominant.

- ^

For me, it's not primarily about human values. It's about altruistic values. Whatever anything cares about, I care about that in proportion to how much they care about it.

Thanks!

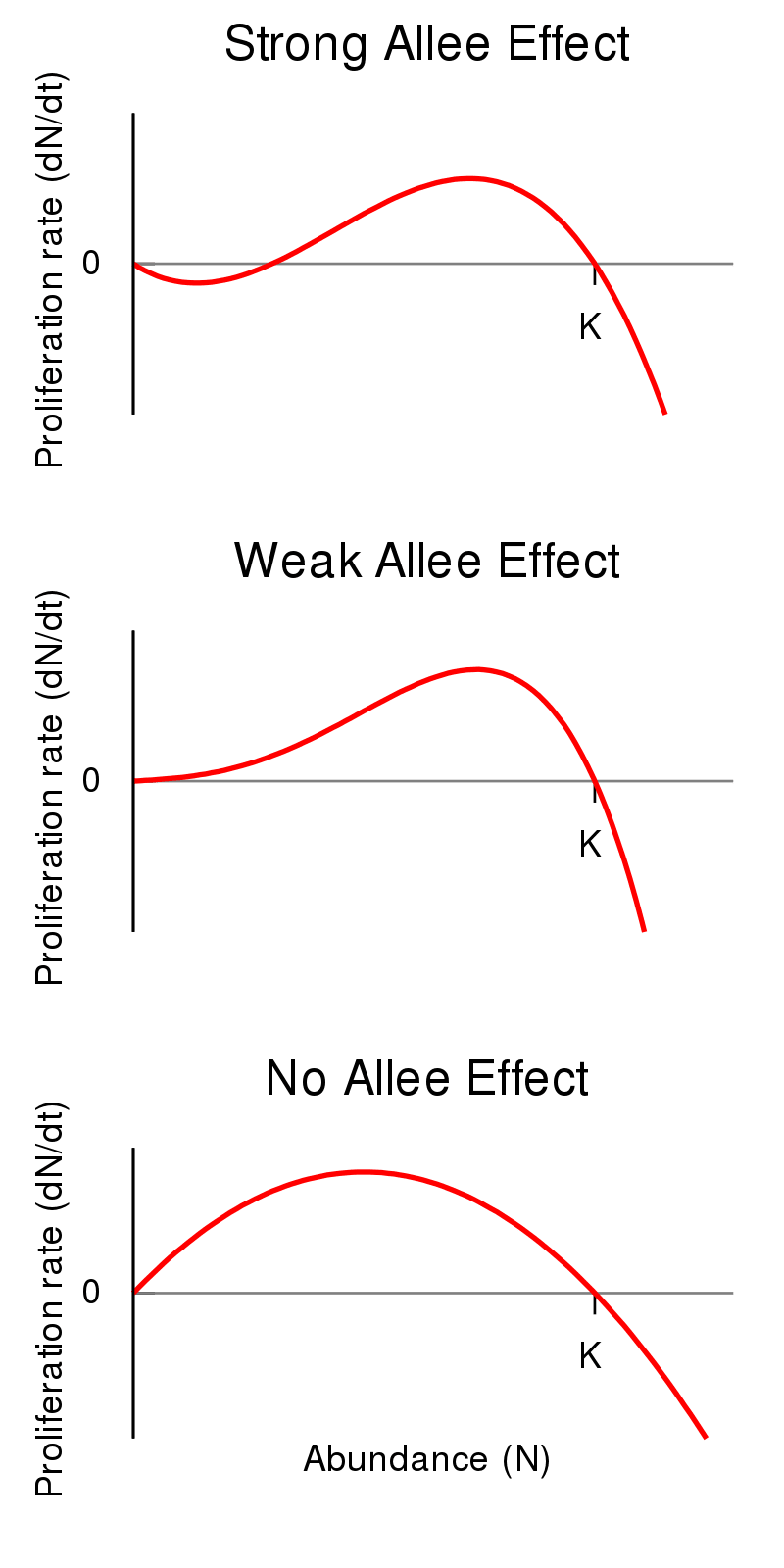

Although, I think many distinct spaces for small groups lead to better research outcomes for network-epistemology reasons, as long as links between peripheral groups & central hubs are clear. It's the memetic equivalent of peripatric vs parapatric speciation. If there's nearly panmictic "meme flow" between all groups, then individual groups will have a hard time specialising towards the niche they're ostensibly trying to research.

In bio, there's modelling (& some observation) suggesting that the range of a species can be limited by the rate at which peripheral populations mix with the centre.[1] Assuming that the territory changes the further out you go, the fitness of pioneering subpopulations will depend on how fast they can adapt to those changes. But if they're constantly mixing with the centroid, adaptive mutations are diluted and expansion slows down.
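
The dilution argument can be sketched with a textbook migration-selection model. This is my own simplification, not necessarily the model used in the cited work: a haploid peripheral population where a locally adaptive allele has selective advantage `s`, and each generation a fraction `m` of individuals is replaced by migrants from the centre, where the allele is absent. Under these assumptions the allele persists only when `m < s / (1 + s)`; above that, mixing swamps the adaptation.

```python
def peripheral_allele_frequency(s: float, m: float, generations: int = 2000, q0: float = 0.5) -> float:
    """Frequency of a locally adaptive allele in a peripheral population after many generations.

    Illustrative assumptions: haploid selection with advantage s, then one-way
    migration at rate m from a central population where the allele is absent.
    """
    q = q0
    for _ in range(generations):
        q = q * (1 + s) / (1 + s * q)  # selection favours the locally adaptive allele
        q = (1 - m) * q                # migrants from the centre dilute it
    return q


# Same selective advantage, low vs high mixing with the centre.
print(peripheral_allele_frequency(s=0.1, m=0.01))  # settles near 1 - m(1 + s)/s = 0.89
print(peripheral_allele_frequency(s=0.1, m=0.2))   # adaptation is swamped
```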

As you can imagine, this homogenisation gets stronger if the fitness of individual selection units depends on network effects. Genes have this problem to a lesser degree, but memes are special because they nearly always show something like a strong Allee effect[2]--proliferation rate is proportional to prevalence, but is often negative below a threshold for prevalence.
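
A strong Allee effect is often modelled with a cubic growth law, dx/dt = r·x·(x − a)·(1 − x), where x is prevalence and a is the threshold below which per-capita growth turns negative. Treating meme prevalence this way is an illustrative assumption, as are the parameter values; this Python sketch integrates the model with simple forward-Euler steps.

```python
def meme_prevalence(x0: float, threshold: float = 0.2, r: float = 1.0,
                    dt: float = 0.1, steps: int = 2000) -> float:
    """Final prevalence of a meme under a strong Allee effect (illustrative cubic model).

    Per-capita growth r*(x - threshold)*(1 - x) is negative below the threshold,
    so ideas that start too rare die out even if they would thrive once common.
    """
    x = x0
    for _ in range(steps):
        x += dt * r * x * (x - threshold) * (1 - x)  # forward-Euler step
    return x


print(meme_prevalence(0.15))  # starts below the threshold: fades towards 0
print(meme_prevalence(0.25))  # starts above the threshold: spreads towards 1
```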

Most people are reluctant to share or adopt new ideas (memes) unless they feel safe knowing their peers approve of them. Innovators who "oversell themselves" by being too novel too quickly, before they have the requisite "social status license", are labelled outcasts, and associating with them is reputationally risky. And the conversation topics that end up spreading are usually very marginal contributions that people know how to cheaply evaluate.

By segmenting the market for ideas into a small-world network of tight-knit groups loosely connected by central hubs, you enable research groups to specialise in their niche while feeling less pressure to keep up with the global conversation. We don't need everybody to be correct; we want the community to explore broadly so that at least one group finds the next universally-verifiable great solution. If everybody else gets stuck in a variety of delusional echo-chambers, their impact is usually limited to themselves, so the potential upside seems greater. Imo. Maybe.

Any other points worth highlighting from the 10-page rules? I find them confusing. Is this normal for legalspeak? The requirements include, and I quote:

- All information provided in the Entry must be true, accurate, and correct in all respects. [oops, excludes nearly all possible utterances I could say]

- The Contest is open to any natural person who meets all of the following eligibility requirements:

  - [Resides in a place where the Contest is not prohibited by law]

  - The entrant is at least eighteen (18) years old at the time of entry.

  - The entrant has access to the internet. [What?]

I actually like a lot of these. I wish the forum had collapsible sections or bullet points so that curious readers could expand the sections they wish to learn more about.

- Extremify

- I do some version of this a lot and call it "limiting-case analysis". The idea is that you want to find the most generalisable aspects of the pattern you're analysing, and that often means setting some variables to 0 or ∞, or even removing them altogether.

- “The art of doing mathematics consists in finding that special case which contains all the germs of generality.” -- David Hilbert

- Notice which aspects are parameters. If they're set to some specific value, generalise them by turning them into variables instead.

- Play the generalised game of whatever you're trying to do. Chess boards with an infinite or variable number of squares are more general than 8x8.

- Social proof

- This is so crucial for motivation. If we're worried about how our ideas will be received, our brains will refuse to innovate.

- However, I would caution against the need for social validation. If you rely on others to check your ideas for you, you'll have less incentive to try to check them yourself. At the start, it may just be true that others are better at judging your ideas than you are, but I'd still recommend trusting your own judgment because it won't get practice otherwise.

- "I was used to just having everybody else being wrong and obviously wrong, and I think that's important. I think you need the faith in science to be willing to work on stuff just because it's obviously right even though everybody else says it's nonsense." -- Geoffrey Hinton

- Plus, you need to be able to use your ideas to reach more distant nodes on the search tree without slowing down for validation at every step. It's better to have the option to internalise the entire process. This gives you a much shorter feedback loop that gets better over time.

- YourBias.Is

- You advise me to "familiarise myself with common cognitive biases" so that I can learn to avoid them. I agree, of course, but I think there's important nuance. If you defer to empirical experiments and solid statistics to form your beliefs about how you're biased, you may learn to be statistically correct without understanding why.

- Imo, the reason you should consult literature and statistics about biases is mostly just so that you can learn to recognise how they work internally via introspection. I think that's the only realistic way to learn to mitigate them.

Quirks: I say “we” because writing “I” in formal writing makes me feel weird.

Good to say so! But I encourage people to use "I" precisely in order to push back against formalspeak norms. Ostentation is bad for the mind, and it's hard to do good research while feeling pressure to be formal.

Regardless though, I trust your abstract. Thanks. : )

Second-best theories & Nash equilibria

A general frame I often find handy when analysing systems is to look for equilibria, figure out the key variables sustaining them (e.g., strategic complements, balancing selection, latency or asymmetric information in commons-tragedies), and well, that's it. Those are the system's leverage points. If you understand them, you're in a much better position to evaluate whether a suggested change might work, is guaranteed to fail, or suffers from a lack of imagination.

Suggestions that fail to consider the relevant system variables are often what I call "second-best theories". Though they might be locally correct, they're blind to the broader implications or fail to appreciate the full space of possibilities.
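
As a minimal illustration of "look for the equilibria first", here is a Python sketch that enumerates pure-strategy Nash equilibria of a two-player game by checking best responses. The stag hunt is my choice of example of strategic complements (each player does best by matching the other), and the payoff numbers are assumptions.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """Return action pairs where neither player gains by deviating alone.

    payoffs[(row_action, col_action)] = (payoff to row player, payoff to column player)
    """
    rows = sorted({a for a, _ in payoffs})
    cols = sorted({b for _, b in payoffs})
    equilibria = []
    for a, b in product(rows, cols):
        row_payoff, col_payoff = payoffs[(a, b)]
        row_best = all(payoffs[(alt, b)][0] <= row_payoff for alt in rows)
        col_best = all(payoffs[(a, alt)][1] <= col_payoff for alt in cols)
        if row_best and col_best:
            equilibria.append((a, b))
    return equilibria


# Illustrative stag hunt: hunting stag only pays if the other player does too.
stag_hunt = {
    ("hare", "hare"): (3, 3),
    ("hare", "stag"): (3, 0),
    ("stag", "hare"): (0, 3),
    ("stag", "stag"): (4, 4),
}
print(pure_nash_equilibria(stag_hunt))
```

Both (hare, hare) and (stag, stag) come out as equilibria. In this toy game, a suggestion that one player should simply switch to stag ignores the variable holding (hare, hare) in place: a lone deviator does worse, so the leverage lies in coordination or in the payoffs themselves.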

Examples