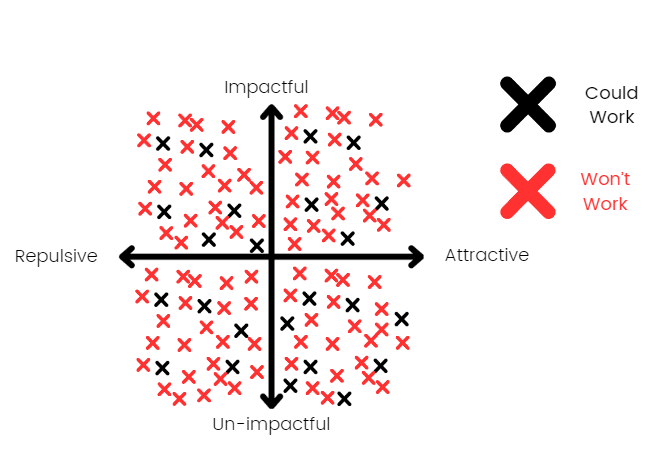

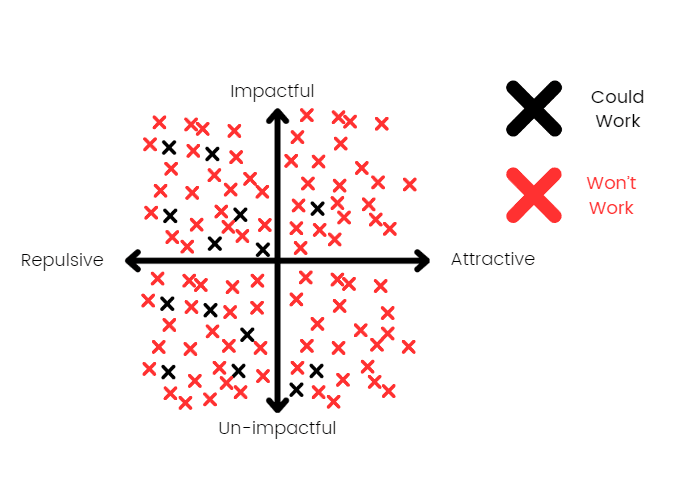

Behold the space of ideas, back before EA even existed. The universe gives zero fucks about ensuring impactful ideas are also nice to work on, so there's no correlation between them. Isn't it symmetrical and beautiful? Oh no, people are coming...

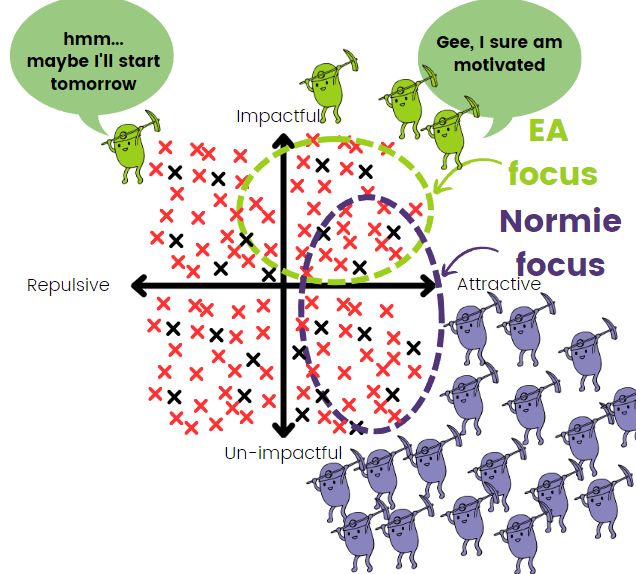

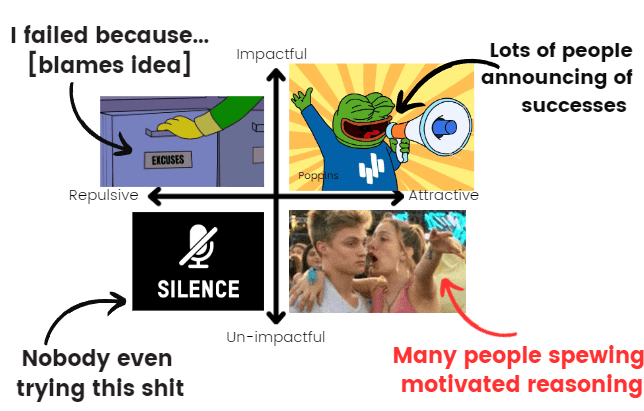

By virtue of conferring more social status and enjoyment, attractive ideas get more attention and retain it better. When these ideas work, people make their celebratory EA forum post and everyone cheers. When they fail, the founders keep it quiet because announcing it is painful, embarrassing and poorly incentivized.

Recall the earlier situation, then predict how this will affect the distribution of the remaining ideas.

Leads to...

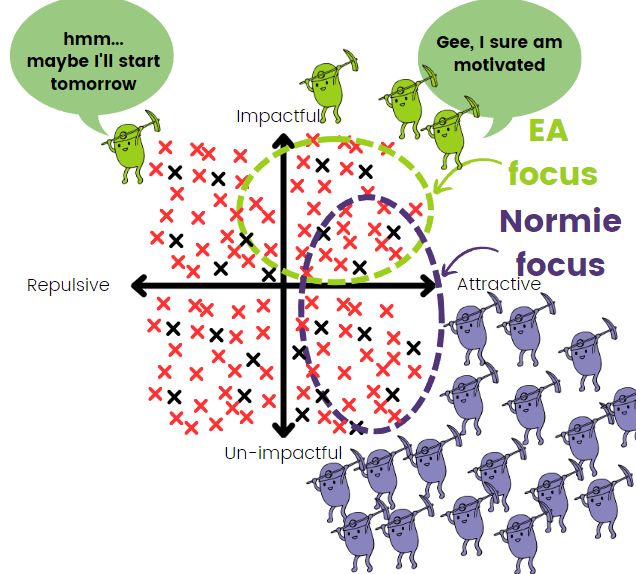

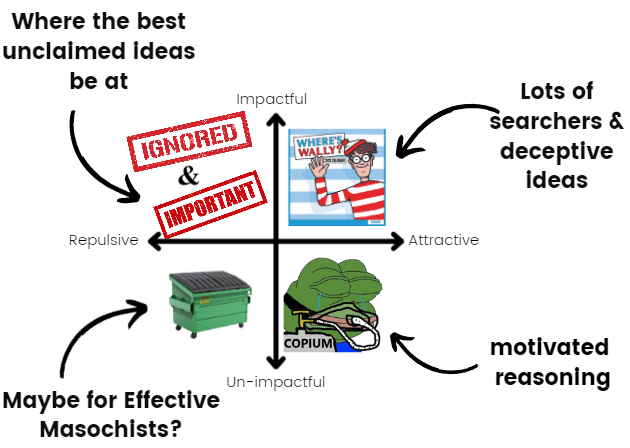

The impactful, attractive ideas that could work have largely been taken (leaving the stuff that looks good but doesn't work). The repulsive but impactful quadrant is rich in impactful ideas.
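The selection effect described above can be sketched as a toy simulation. Everything numeric here is an assumption made up for illustration, not something from the post: ideas are drawn uniformly with independent impact and attractiveness scores in [-1, 1], and in each round an idea is "taken" with a probability that grows linearly with its attractiveness (the hypothetical `max_take_rate` caps it). The sketch ignores whether taken ideas succeed and whether failures get announced; it only shows how picking ideas by attractiveness skews what is left.

```python
import random


def classify(impact, attractiveness):
    """Name the quadrant an idea falls in; both scores range over [-1, 1]."""
    side = "attractive" if attractiveness >= 0 else "repulsive"
    level = "impactful" if impact >= 0 else "unimpactful"
    return f"{side}_{level}"


def quadrant_counts(ideas):
    """Count how many (impact, attractiveness) pairs sit in each quadrant."""
    counts = {
        "attractive_impactful": 0,
        "attractive_unimpactful": 0,
        "repulsive_impactful": 0,
        "repulsive_unimpactful": 0,
    }
    for impact, attractiveness in ideas:
        counts[classify(impact, attractiveness)] += 1
    return counts


def simulate(n_ideas=10_000, rounds=5, max_take_rate=0.5, seed=0):
    """Return quadrant counts before and after people pick ideas to work on."""
    rng = random.Random(seed)
    # Assumption: impact and attractiveness are independent and uniform.
    ideas = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n_ideas)]
    remaining = list(ideas)
    for _ in range(rounds):
        survivors = []
        for impact, attractiveness in remaining:
            # Assumption: the chance of being taken rises linearly from 0
            # (most repulsive) to max_take_rate (most attractive).
            p_taken = max_take_rate * (attractiveness + 1) / 2
            if rng.random() >= p_taken:
                survivors.append((impact, attractiveness))
        remaining = survivors
    return quadrant_counts(ideas), quadrant_counts(remaining)


if __name__ == "__main__":
    before, after = simulate()
    print("before:", before)
    print("after: ", after)
```

Under these assumptions the four quadrants start out roughly equal, and after a few rounds the untaken impactful ideas sit mostly on the repulsive side. Changing `rounds` or `max_take_rate` changes how strong the skew is, not its direction.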

So to summarise, here's the EA idea space at present

So what do I recommend?

- Funders should give less credence to projects that EAs typically like to work on and more credence to those they don't. The reasoning presented to them is less likely to be motivated reasoning.

- EA should prioritise ideas that sound like no fun

- They're more neglected

- You probably overestimate how much working on them would affect your happiness

- It's less likely that the idea has already been tried and failed

- You're probably biased against them due to having heard less about them, for no reason other than people are less excited to work on them

- Announcing failed projects should be expected of EAs

- EAs should start setting an example

- Tune in next week for my list of failed projects

- There should be a small prize for the most embarrassing submissions

- Funders should look positively on EAs who announce their failed projects and negatively on EAs who don't.

Keyest of points: Pay close attention to ideas that repel other people for non-impact-related reasons, but not you. If you can get obsessed about something important that most people find horribly boring, you're uniquely well placed to make a big impact.

Thanks for this post! I've been thinking about similar issues.

One thing that may be worth emphasizing is that there are large and systematic interindividual differences in idea attractiveness—different people or groups probably find different ideas attractive.

For example, for non-EA altruists, the idea of "work in soup kitchen" is probably much more attractive than for EAs because it gives you warm fuzzies (due to direct personal contact with the people you are helping), is not that effortful, and so on. Sure, it's not very cost-effective but this is not something that non-EAs take much into account. In contrast, the idea of "earning-to-give" may be extremely unattractive to non-EAs because it might involve working at a job that you don't feel passionate about, you might be disliked by all your left-leaning friends, and so on. For EAs, the reverse is true (though earning-to-give may still be somewhat unattractive but not that unattractive).

In fact, in an important sense, one primary reason for starting the EA movement was the realization of schlep blindness in the world at large—certain ideas (earning to give, donate to the global poor or animal charities) were unattractive / uninspiring / weird but seemed to do (much) more good than the attractive ideas (helping locally, becoming a doctor, volunteering at a dog shelter, etc.).

Of course, it's wise to ask ourselves whether we EAs share certain characteristics that would lead us to find a certain type of idea more attractive than others. As you write in a comment, it's fair to say that most of us are quite nerdy (interested in science, math, philosophy, intellectual activities) and we might thus be overly attracted to pursue careers that primarily involve such work (e.g., quantitative research, broadly speaking). On the other hand, most people don't like quantitative research, so you could also argue that quantitative research is neglected! (And that certainly seems to be true sometimes, e.g., GiveWell does great work relating to global poverty.)

I see where you're coming from but I also think it would be unwise to ignore your passions and personal fit. If you, say, really love math and are good at it, it's plausible that you should try to use your talents somehow! And if you're really bad at something and find it incredibly boring and repulsive, that's a pretty strong reason to not work on this yourself. Of course, we need to be careful to not make general judgments based on these personal considerations (and this plausibly happens sometimes subconsciously), we need to be mindful of imitating the behavior of high-status folks of our tribe, etc.

We could zoom out even more and ask ourselves if we as EAs might be attracted to certain worldviews/philosophies that are more attractive.

What type of worldviews might EAs be attracted to? I can't speak for others but personally, I think that I've been too attracted to worldviews according to which I can have (way) more impact than the average do-gooder. This is probably because I derive much of my meaning and self-worth in life from how much good I believe I do. If I can change the world only a tiny amount even if I try really, really hard and am willing to bite all the bullets, that makes me feel pretty powerless, insignificant, and depressed: there is so much suffering in the world and I can do almost nothing in the big scheme of things? Very sad.

In contrast, "silver-bullet worldviews" according to which I can have super large amounts of impact because I galaxy-brained my way into finding very potent, clever, neglected levers that will change the world-trajectory: that feels pretty good. It makes me feel like I'm doing something useful, like my life has meaning. More cynically, you could say it's all just vanity and makes me feel special and important. "I'm not like all those other schmucks who just feel content with voting every four years and donating every now and then. Those sheeple. I'm helping way more. But no big deal, of course! I'm just that altruistic."

To be clear, I think probably something in the middle is true. Most likely, you can have more (expected) impact than the average do-gooder if you really try and reflect hard and really optimize for this. But in the (distant) past, following the reasoning of people like Anna Salamon (2010) [to be clear: I respect and like Anna a lot], I thought this might buy you like a factor of a million, whereas now I think it might only buy you a factor of 100 or something. As usual, Brian has argued for this a long time ago. However, a factor of 100 is still a lot and most importantly, the absolute good you can do is what ultimately matters, not the relative amount of good, and even if you only save one life in your whole life, that really, really matters.

Also, to be clear, I do believe that many interventions of most longtermist causes like AI alignment plausibly do (a great deal) more good than most "standard" approaches to doing good. I just think that the difference is considerably smaller than I previously believed, mostly for reasons related to cluelessness.

For me personally, the main take-away is something like this: Because of my desperate desire to have ever-more impact, I've stayed on the train to crazy town for too long and was too hesitant to walk a few stops back. The stop I'm now in is still pretty far away from where I started (and many non-EAs would think it uncomfortably far away from normal town) but my best guess is that it's a good place to be in.

(Lastly, there are also biases against "silver-bullet worldviews". I've been thinking about writing a whole post about this topic at some point.)

This is a fantastic comment

Can you think of any Schlep ideas in EA?

I think a good question to ask is, what work do you wish someone else would do?

Horrible career moves e.g. investigating the corrupt practices of powerful EAs / Orgs

Boring to most people e.g. compiling lists and data

Low status outside EA e.g. welfare of animals nobody cares about (e.g. shrimp)

Low status within EA e.g. global mental health

Living in relatively low quality of living areas e.g. fieldwork in many African countries

John -- this is a refreshingly concise, visually funny, & insightful post.

I guess a key tradeoff here is that the 'repulsive-but-impactful' work might be great for finding neglected ideas, causes, and interventions, but might be quite toxic to EA 'community safety' and 'movement-building' -- insofar as repulsive ideas tend to make people within EA feel 'unsafe' or offended, and make people outside EA think that EA is weird and gross. Also, most 'repulsive' ideas are strongly partisan-coded at the political level, insofar as the Left and Right tend to think very different things are 'repulsive'.

So, my hunch is that a lot of the low-hanging 'repulsive-but-impactful' work is avoided through self-censorship and self-deception and attempted political neutrality.

Do you have examples of ideas that would fall into each category? I think that would help me better understand your idea.

OP commented on this here.

Ajeya Cotra posted an essay on schlep in the context of AI 2 weeks ago:

https://www.planned-obsolescence.org/scale-schlep-and-systems/

I find that many of the topics she suggests as 'schlep' are actually very exciting and lots of fun to work on. This is plausibly why we see so much open source effort in the space of LLM-hacking.

What would you think of as examples of schlep in other EA areas?

I think schlep blindness is everywhere in EA. I think the work activities of the average EA suspiciously align with activities nerds enjoy and very few roles strike me as antithetical. This makes me suspicious that a lot of EA activity is justified by motivated reasoning, as EAs are massive nerds.

It'd be very kind of an otherwise callous universe to make the most impactful activities things that we'd naturally enjoy doing.

Could you operationalize what "attractive" means a little more? The closest I could discern is ideas that offer "more social status and enjoyment." It seems that concepts like "I think I could more easily get funding for this / get a good salary doing this / have some work-life balance doing this" would also count on this axis.

Everything that you mentioned would fall under "attractive". I'm referring to anything that would make an idea appealing to work on (besides impactfulness).

Thanks for the clarification. One important qualification on the EA focus / normie focus chart, then, is that an idea's "attractiveness" score may be significantly different for the average non-EA vs. the average EA.

Good point.

I shamelessly stole the ideas from Paul Graham and just applied them to EA. All credit for the good should go to him, all blame for the bad should be placed on me.

http://www.paulgraham.com/schlep.html

Re: 2, I don't. I actually think they are more prone to it. I think funders, for this reason, should prioritise projects that sound unfun to work on because they're less likely to have been proposed for non-impact reasons.

e.g. if choosing between funding two similar projects, one that sounds horribly boring to execute and another that sounds like a nerd's wet dream, you should fund the former rather than the latter.

(apologies if I've misread your comment)

Got it. In that case either there's a missing negative in your point 1 in the post, or there's a word I misunderstood 😅

You were right! I typo'd - have fixed now, ty.

One critique of this piece would be that perhaps Impactful | Attractive should be at 20° rather than 45°, since it seems like attractive ideas are more likely to be unimpactful. This is because (1) they fit preconceived biases about the world and (2) they're less neglected.

So to some extent, I wonder if schlep ideas exist at all in EA.

Tja! Good post. I have contemplated this myself for the last few weeks so I am really happy to see you post about it right now. Do you or anyone else have takes on whether it is advisable for people to pursue schlep? The concept of Ikigai, for example, emphasizes passion in what you pursue. Schlep is like the anti-passion. My personality is somewhat adept at tolerating quite large amounts of schlep so I could just ignore what I think is fun and grit through some serious schlep, using caffeine, nicotine and motivating music as my crutches.

I am asking because some people have advised me against this and think my impact will be higher, and my life better, if I instead pursue, a bit more selfishly, what I think is fun and instantly rewarding.

I am really unsure about how to resolve this. I guess the choice would be easy if there are 2 different projects I could work on with similar impact but where the only difference is that I think one is much more fun.

The reality is more like there are some things that are fun, but I am really unsure of the impact, especially the counterfactual impact, as other people seem to think that it is fun too. The schlep option, on the other hand, is stuff where I am really unsure if anyone else would pick it up if I did not pursue it.

To schlep or not to schlep - that is the question. I am partly inspired by Joey at CE - I think he is pretty serious about schlepping if I understood an interview with him correctly (search "sacrifice all your own happiness"). ASB also posted on some serious schlep being needed in biosecurity.

2 points I'd raise:

We're really bad at predicting how a change would affect our happiness. We overwhelmingly overestimate the impact. If you know this, schlep won't seem as big a sacrifice.

I think we, as a community, need to incentivise schlepping by granting it more social status and discriminating in favour of people who do it.

Number 2 there sounds like a new EA podcast: "The unsung warriors of EA" or something. All the podcasts are now about the "thinkers" and researchers - fascinating ideas and intellectually stimulating. The new podcast could instead be made interesting by focusing on the pain, challenges and the coping mechanisms of those schlepping hard. Maybe someone could start asking some serious schleppers how they would like to be recognized. I just thought podcasts would be good, as they might recognize people and at the same time inspire others to pursue schlep.